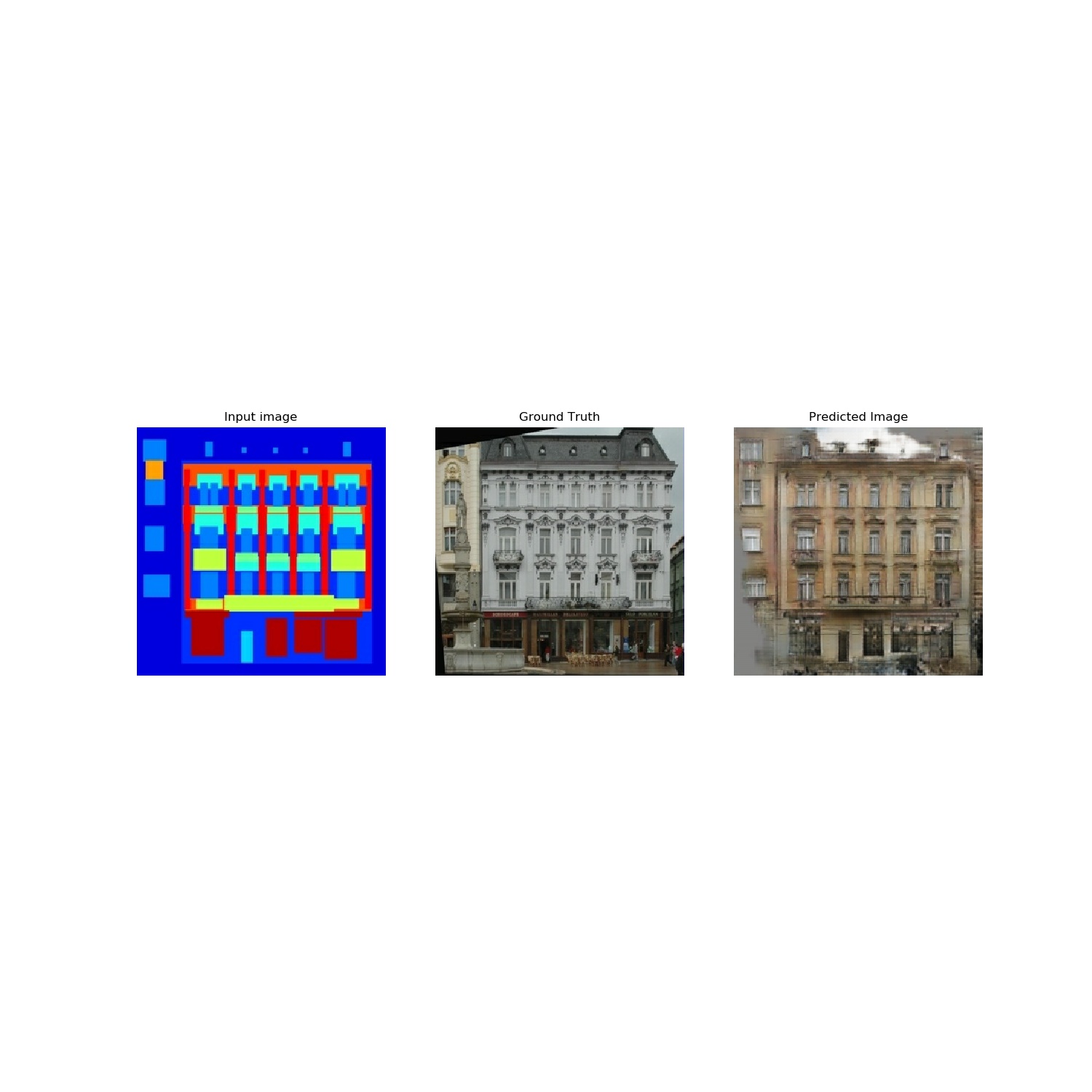

- Implementation of "Image-to-image translation with conditional adversarial networks"

- https://arxiv.org/abs/1611.07004

- https://github.com/tensorflow/docs/blob/master/site/en/r2/tutorials/generative/pix2pix.ipynb

- The code is written with tensorflow eager execution (2.0)

- Download "facades.tar.gz" dataset and unzip it. https://people.eecs.berkeley.edu/~tinghuiz/projects/pix2pix/datasets/

- Modify the

BASE_PATHvariable inpix2pix.pyto the data. - run

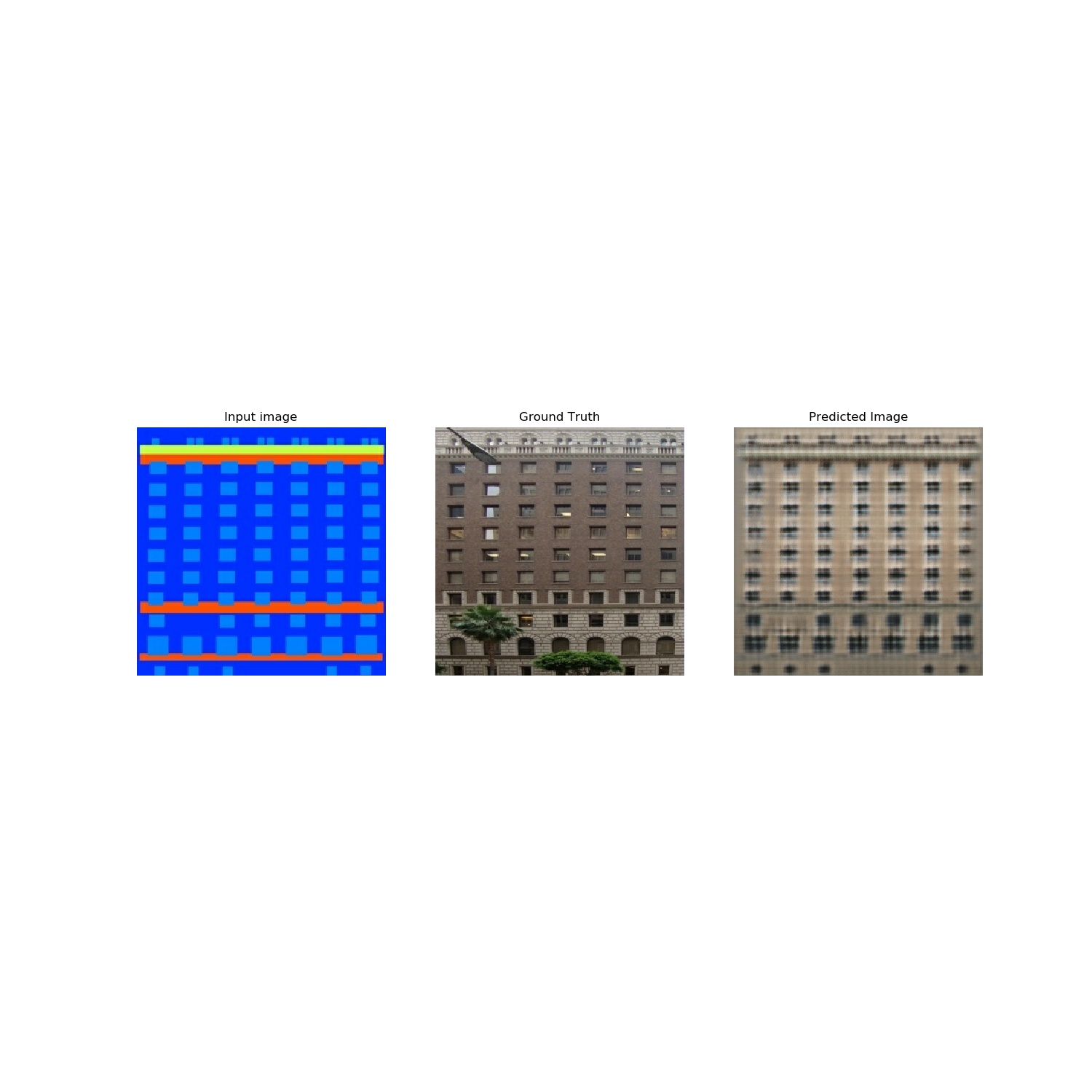

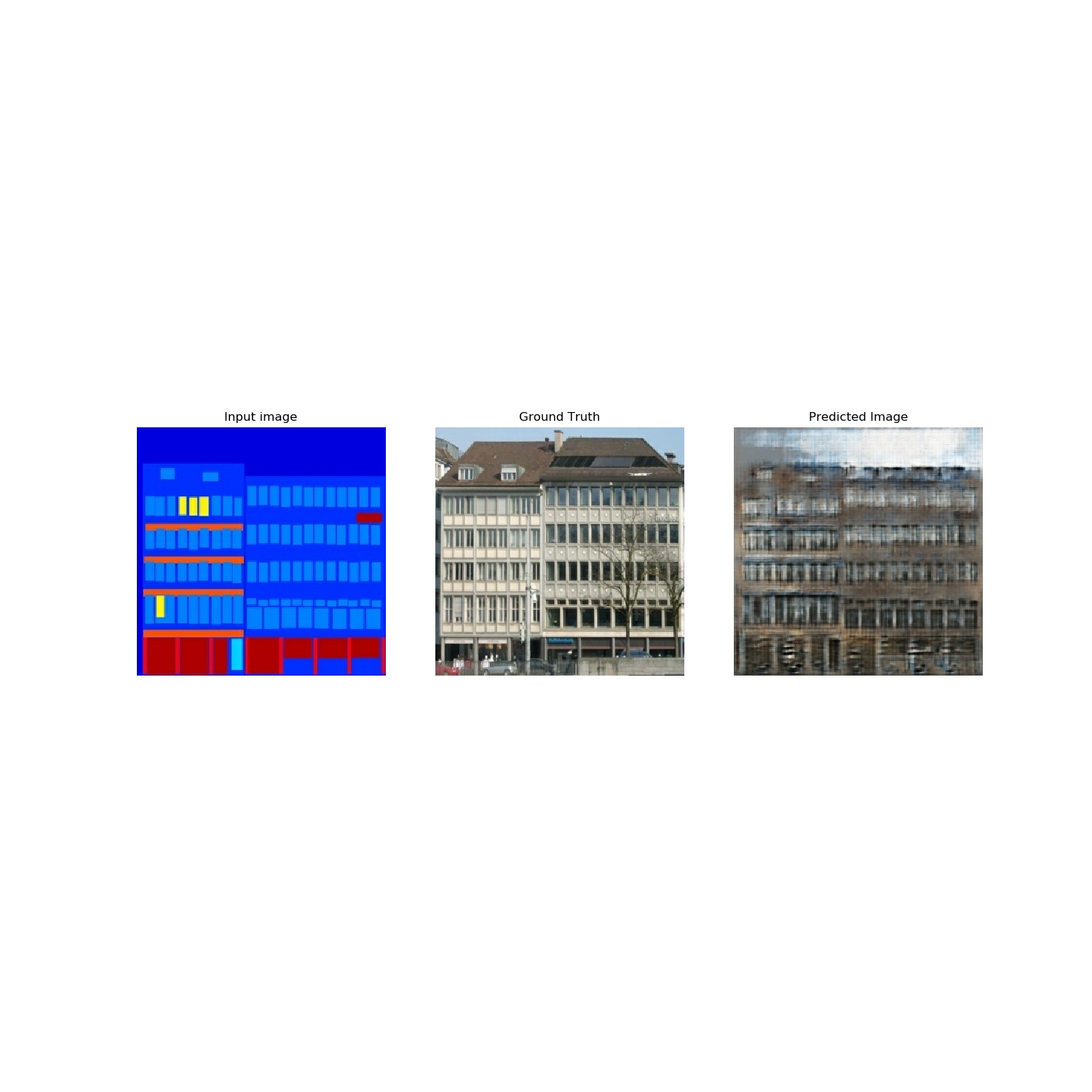

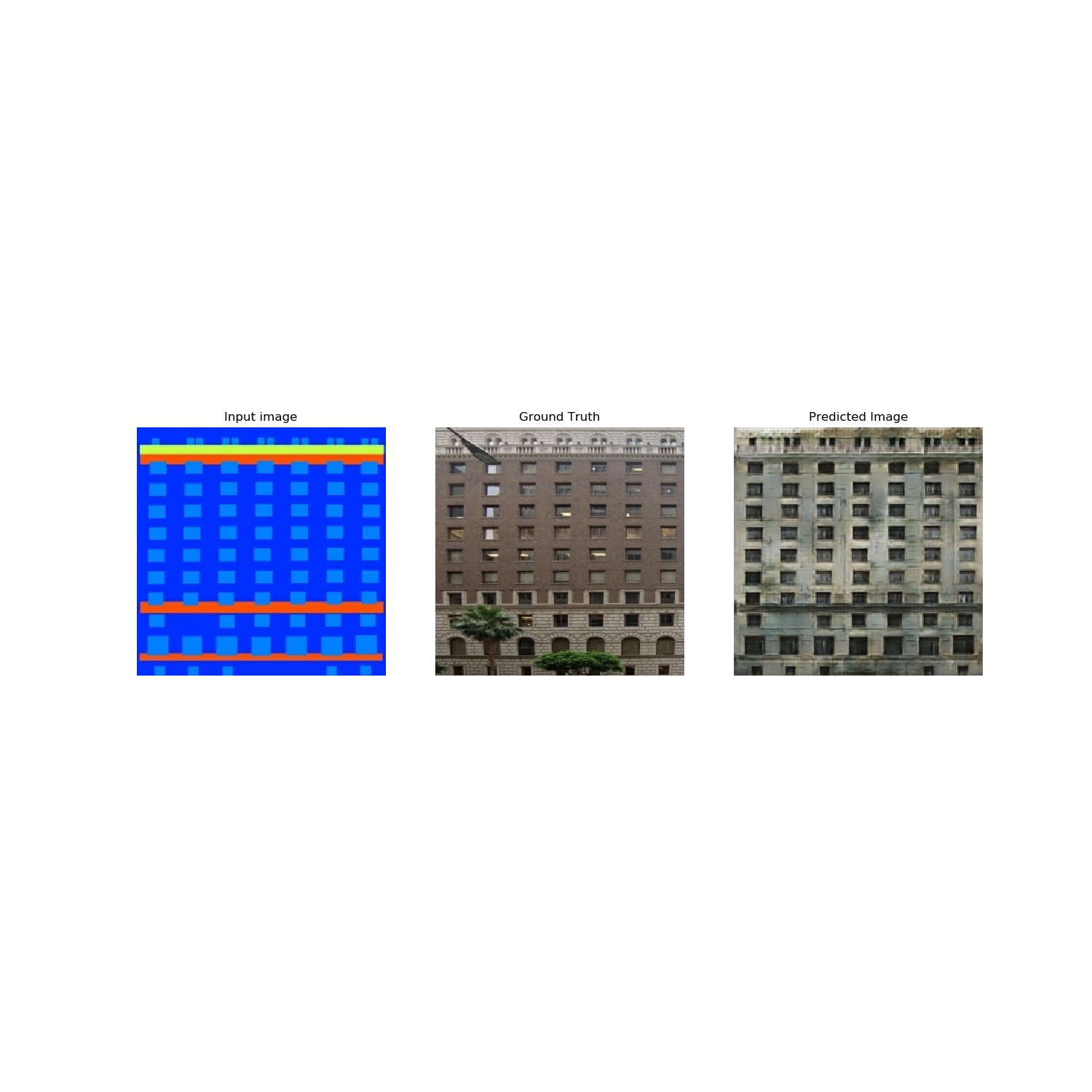

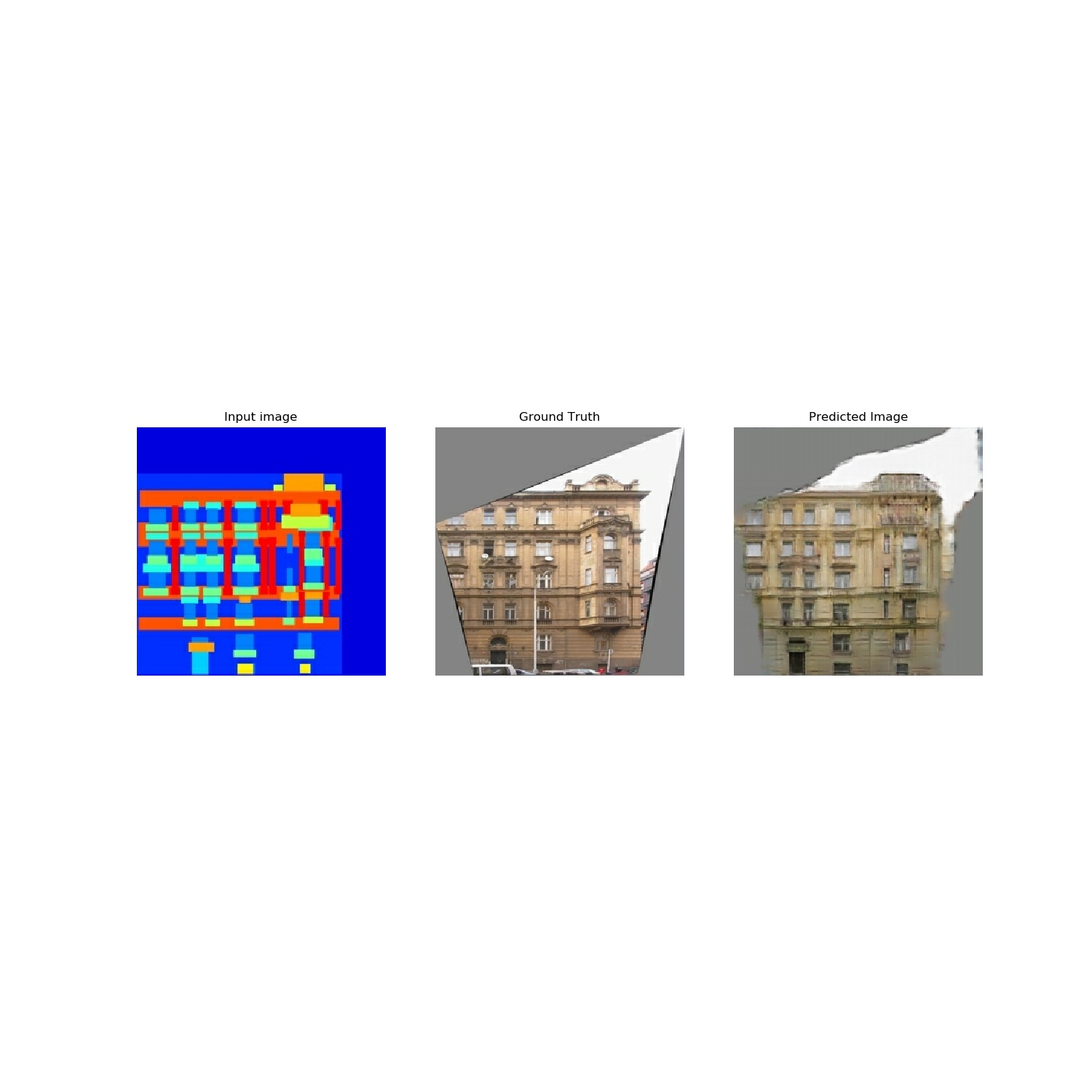

python pix2pix.pyto train the model, the weights will stored inweights/, and generated image will saved ingenerated_img/

run python test.py to load weights from weights/ and generate 5 images.

- The Generator is a U-NET and defined in

PixGenerator.py. The output istanhactivated. - The Discriminator is a PatchGAN network with size 30*30.

- The dataset use

random_jitterandNomalizedto [-1,1] - The process in written in

data_preprocess.py

- The

DropOutis used in training and testing, according to the paper. There is no random vectorzas input like original GAN. The random of input is represented by dropout. - In training,

BATCH_SIZE = 1obtains better results. However, if the generator is a naive 'encoder-decocer' network, you should usebase_size > 1. TheU-NETused here can prevent the activations of bottle-neck layer to become 0. - In the original paper, the author called 'instance batch normalization'.