LatPlan : A domain-independent, image-based classical planner

- NEWS Initial release: Published after AAAI18.

- NEWS Updates on version 2: Mainly refactoring. The AAAI18 experiments still work.

- NEWS Updates on version 2.1: Version 2 was not really working, and I finally had time to fix it. Added ZSAE experiments.

- NEWS Updates on version 2.2: Backported more minor improvements from the private repository

- NEWS Updates on version 3.0: Updates for the DSAMA system. The learner code was refactored further. Added ama3-planner, which takes a PDDL domain file.

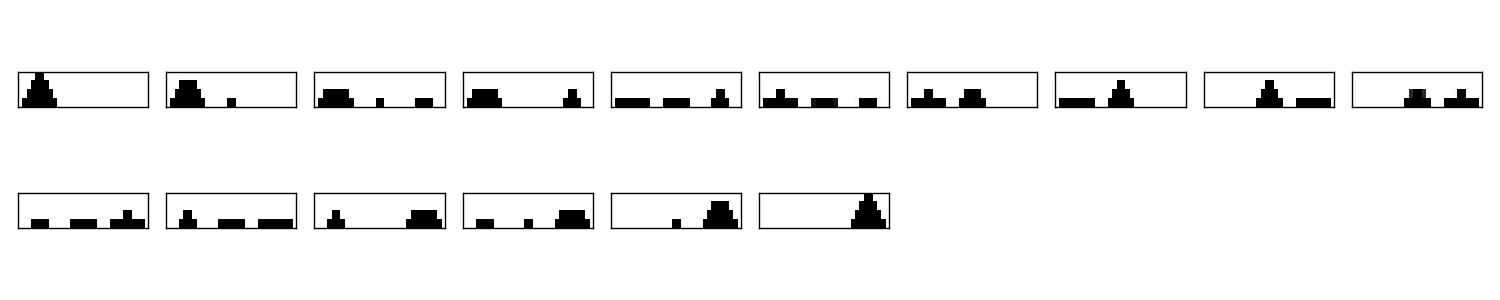

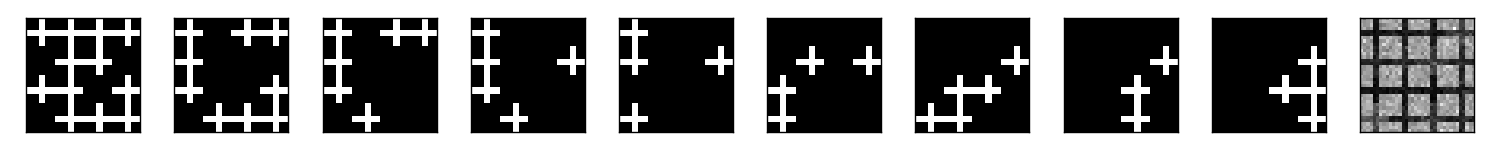

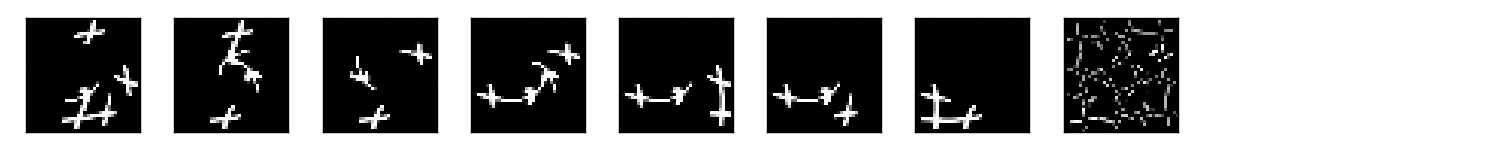

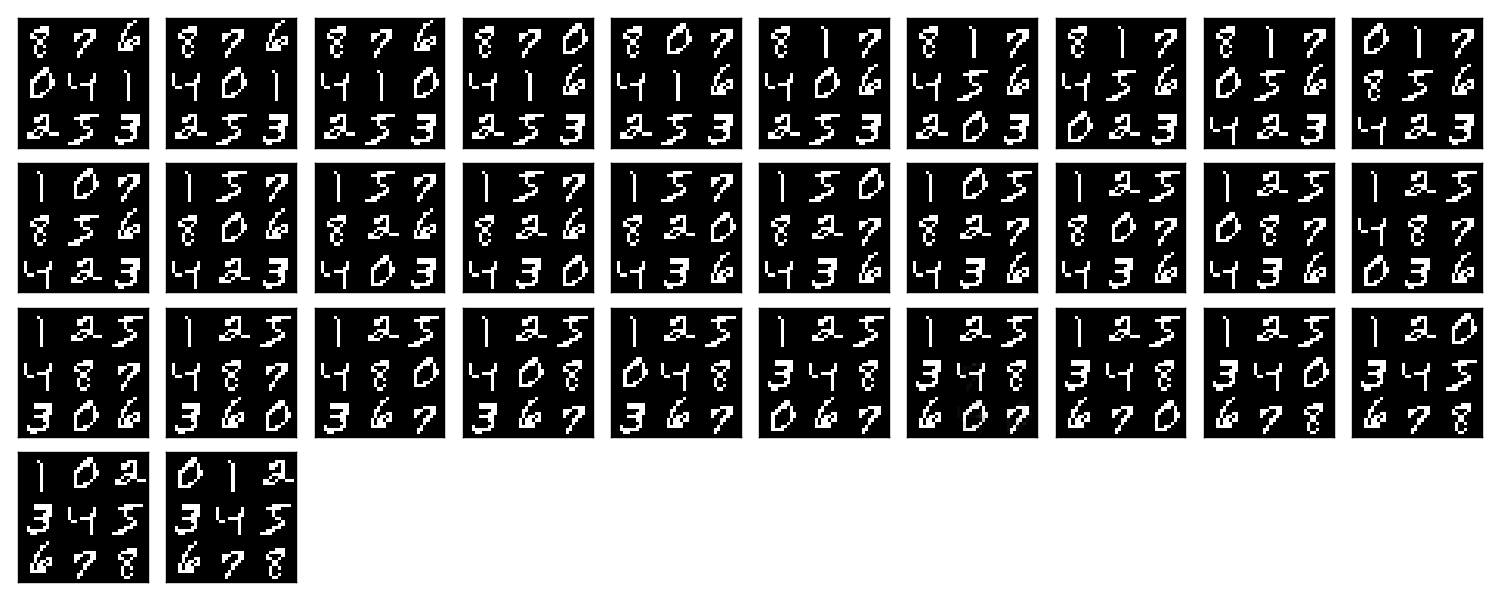

- NEWS Updates on version 4.0: Updates for the Cube-Space AE in the IJCAI2020 paper. This version can exploit the full potential of search heuristics (lower bounds in Branch-and-Bound), such as LM-cut or Bisimulation Merge-and-Shrink in Fast Downward. We now include a script for 15-puzzle instances randomly sampled from the states 14 or 21 steps away from the goal. We also include a script for Korf’s 100 instances of the 15-puzzle, but the accuracy was not sufficient for those problems, whose shortest path lengths are typically around 50. Problems that require deeper searches also require higher model accuracy because errors accumulate with each state transition.
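The point about accumulating errors can be illustrated with a back-of-the-envelope estimate. The sketch below assumes that every state transition is predicted correctly with the same probability p, independently of the others, so that a plan of n steps is entirely correct with probability p^n. Both the independence assumption and the value p = 0.99 are made up for illustration; they are not measurements from the experiments.

```shell
#!/bin/bash
# Hypothetical estimate, not part of the repository.
# plan_accuracy P N: probability that all N transitions are correct,
# assuming each one is correct with probability P independently.
plan_accuracy() {
    awk -v p="$1" -v n="$2" 'BEGIN { printf "%.3f\n", p ^ n }'
}

# Plan lengths mentioned above: 14 and 21 steps, and roughly 50 for Korf's instances.
for steps in 14 21 50; do
    echo "$steps steps: $(plan_accuracy 0.99 "$steps")"
done
```

Under these made-up numbers the estimate drops from roughly 0.87 at 14 steps to roughly 0.61 at 50 steps, which is the qualitative effect described above.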

This repository contains the source code of LatPlan.

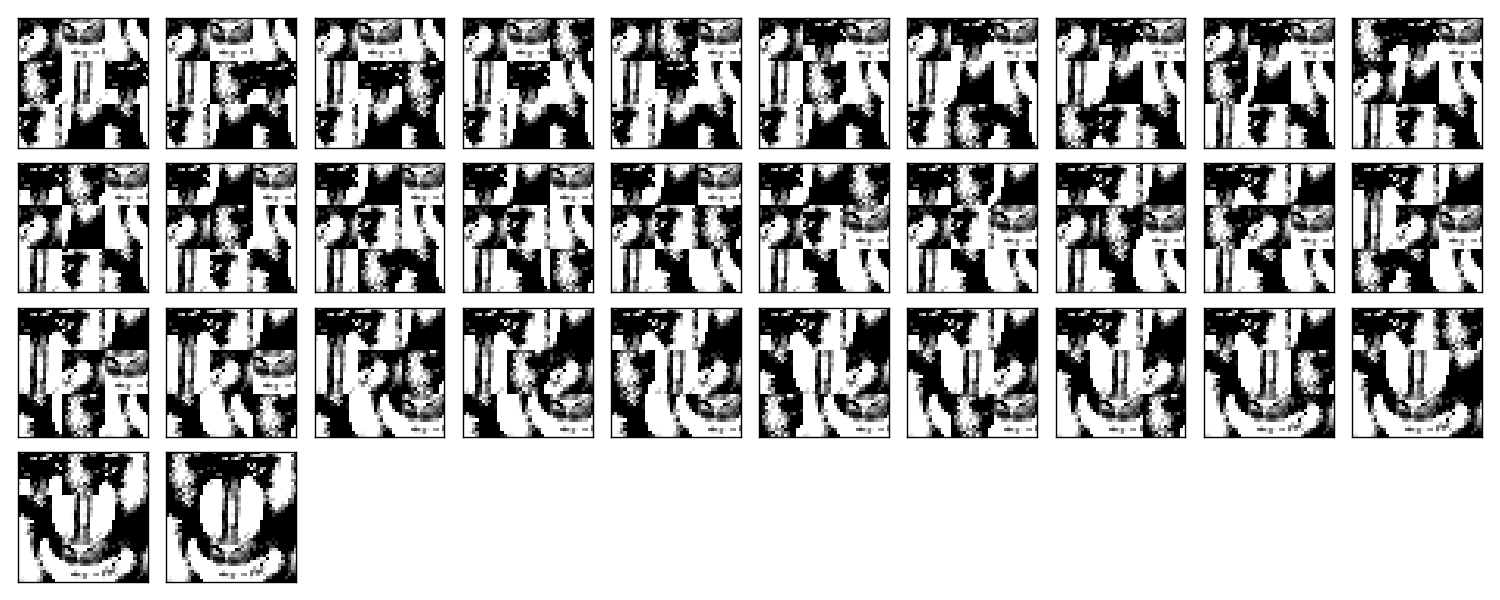

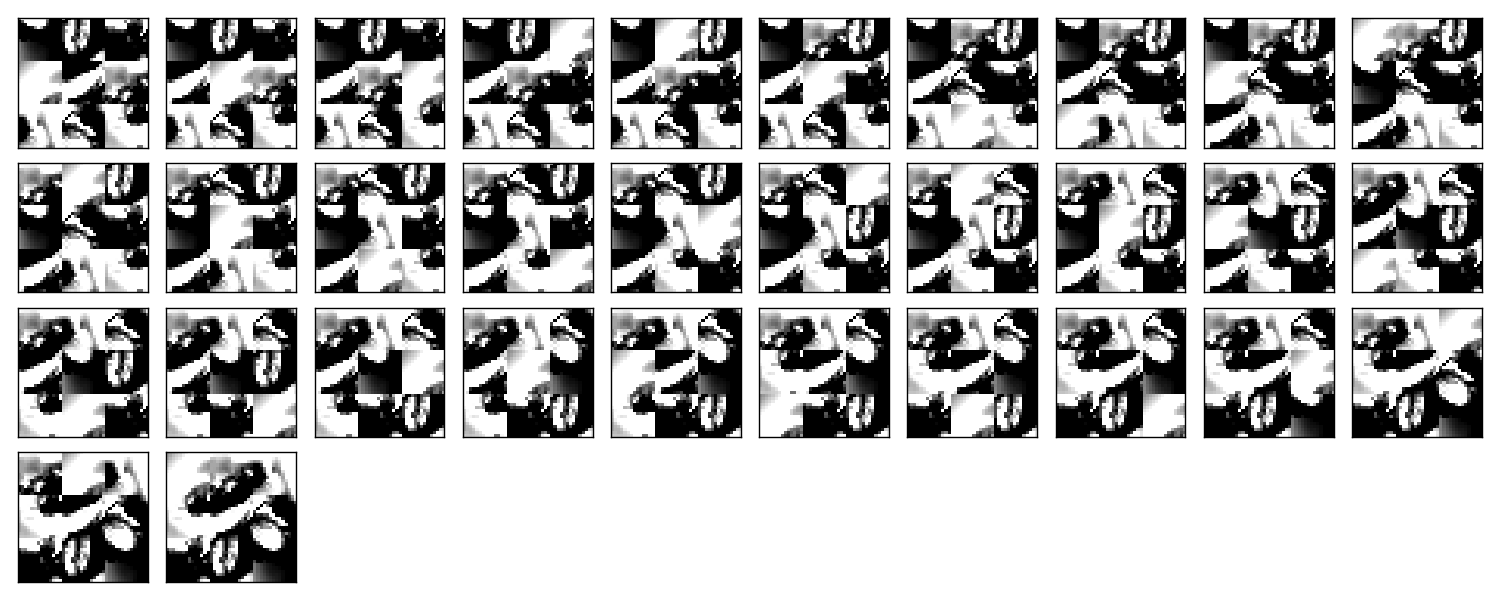

- Asai, M; Muise, C.: 2020. Learning Neural-Symbolic Descriptive Planning Models via Cube-Space Priors: The Voyage Home (to STRIPS).

- Accepted in IJCAI-2020 (Accept ratio 12.6%). https://arxiv.org/abs/2004.12850

- Asai, M.: 2019. Neural-Symbolic Descriptive Action Model from Images: The Search for STRIPS.

- Asai, M.: 2019. Unsupervised Grounding of Plannable First-Order Logic Representation from Images (code not yet available)

- Accepted in ICAPS-2019, Learning and Planning Track. https://arxiv.org/abs/1902.08093

- Asai, M.; Kajino, H.: 2019. Towards Stable Symbol Grounding with Zero-Suppressed State AutoEncoder

- Accepted in ICAPS-2019, Learning and Planning Track. https://arxiv.org/abs/1903.11277

- Asai, M.; Fukunaga, A: 2018. Classical Planning in Deep Latent Space: Breaking the Subsymbolic-Symbolic Boundary.

- Accepted in AAAI-2018. https://arxiv.org/abs/1705.00154

- Asai, M.; Fukunaga, A: 2017. Classical Planning in Deep Latent Space: From Unlabeled Images to PDDL (and back).

- In Knowledge Engineering for Planning and Scheduling (KEPS) Workshop (ICAPS2017).

- In Cognitum Workshop at IJCAI-2017.

- In Neural-Symbolic Workshop 2017.

install.sh should install the required libraries on a standard Ubuntu rig.

It invokes sudo several times. However, Python packages are installed in the user directory.

(We plan to migrate to conda and pip-based dependency resolution soon.)

Next, customize the following files for your job scheduler before running.

The job submission command is stored in the variable $common, which by default has a value like jbsub -mem 32g -cores 1+1 -queue x86_24h.

You also need to uncomment the commands to run.

By default, everything is commented out and nothing runs.
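As a rough illustration of this convention, the sketch below shows how a submission prefix stored in $common could be applied to a task. It is a minimal sketch, not the repository's actual code: the submit function, the DRY_RUN switch, and ./my-task.sh are hypothetical names introduced here, and treating an empty prefix as "run locally" is an assumption.

```shell
#!/bin/bash
# Hypothetical sketch, not the repository's actual script.
# Submission prefix; this is the example value quoted above.
common="jbsub -mem 32g -cores 1+1 -queue x86_24h"
# common=""   # assumption: an empty prefix runs each task directly on this machine

# submit TASK [ARGS...]: hand a task to the scheduler.
# With DRY_RUN=1, only print the command that would be submitted.
submit() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$common $*"
    else
        # $common is intentionally unquoted so it splits into command and options.
        $common "$@"
    fi
}

# Preview a submission without contacting the scheduler.
# ./my-task.sh is a placeholder, not a file in the repository.
DRY_RUN=1 submit ./my-task.sh
```

Previewing the command first is a cheap way to confirm that the prefix matches your scheduler's syntax before uncommenting anything in the real scripts.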

# You first need to set up a dataset.

./setup-dataset.sh

# This script launches the training for Cube-Space AEs, as well as SAEs used for AMA2.

./train_all.sh

# This script extracts PDDL files from the Cube-Space AE training results.

./train_others.sh

# This script launches the training for AAE, AD and SD for AMA2.

# The number of actions in AAE is tuned by the hyperparameter tuner.

./train_aae.sh

# This script trains AAEs with a fixed number of actions without tuning.

# It was used in the SAE + Cube-AAE experiments.

./train_aae-fixedactions.sh

# When the training has finished, generate the problem instances.

# This script samples the initial states from the frontier of a Dijkstra search.

(cd problem-instances; ./example-dijkstra.sh)

# This script generates 15-puzzle instances.

(cd problem-instances-16; ./example-dijkstra.sh)

# This script generates Korf's 100 instances for 15-puzzle.

(cd problem-instances-16-korf; ./example-korf.sh)

# Modify these scripts to adjust the job submission commands for your job scheduler.

./run_ama2_all.sh

./run_ama3_all.sh

./run_ama3_all-16.sh

./run_ama3_all-16-korf.sh

./run_ama3_all-cube-aae.sh

# After the experiments, run this script to generate the tables and figures.

# For details, read the source code.

./generate-all-csv.sh

Required software

Python 3.5 or later is required.

On Ubuntu, prerequisites can be installed by running ./install.sh.

The script compiles several C++ and Lisp binaries. However, it does not alter the running system (e.g. by installing custom software under /usr/), apart from several apt-get install calls against the official Ubuntu repository.

The applications installed by the script consist of:

- mercurial g++ cmake make python flex bison g++-multilib: required for compiling Fast Downward.

- git build-essential automake libcurl4-openssl-dev: required for compiling [Roswell](http://roswell.github.io/). OSX users should use brew install roswell.

- gnuplot: for plotting.

- parallel: for running some scripts. If you haven’t used it yourself, give it a try! It is a very nice tool.

- python3-pip python3-pil: Python packages.

- tensorflow keras h5py matplotlib progressbar2 timeout_decorator ansicolors scipy scikit-image imageio: more Python packages.

- bash-completion byobu htop mosh: these are not necessary :) but I also use this script for setting up the environment on a new machine.
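If you are not on Ubuntu, or want to confirm what is already present before running the installer, a quick check of the command-line tools can help. The following is a minimal sketch and not part of the repository: check_tools is a hypothetical helper, and the mapping from the package names above to command names (for example mercurial to hg) is an assumption.

```shell
#!/bin/bash
# Hypothetical helper, not part of the repository.
# check_tools NAME...: print each command that is not on PATH;
# return non-zero if at least one is missing.
check_tools() {
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Command names assumed to correspond to the packages listed above.
check_tools hg g++ cmake make flex bison git gnuplot parallel python3 \
    || echo "Some tools are missing; on Ubuntu, ./install.sh should install them."
```

This only checks command-line tools; the Python packages in the list would need a separate check.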

File structure

- config.py, config_cpu.py

- keras/tensorflow configuration.

- model.py

- network definitions.

- strips.py

- (Bad name!) The program that trains an SAE and writes the propositional encoding of states/transitions to a CSV file.

- state_discriminator3.py

- The program for training an SD.

- action_autoencoder.py

- The program for training an AAE.

- action_discriminator.py

- The program for training an AD.

- ama1-planner.py

- Latplan using AMA1.

- ama2-planner.py

- Latplan using AMA2.

- ama3-planner.py

- Latplan using the visual inputs (initial and goal states) and a PDDL domain file.

- run_ama{1,2,3}_all.sh

- Run all experiments.

- various sh files

- supporting scripts.

- util/

- contains general-purpose utility functions for python code.

- tests/

- test files, mostly the unit tests for domain generator/validator

- samples/

- where the learned results should go. The training results of each SAE are stored in a separate subdirectory.

- puzzles/

- code for domain generators/validators.

- puzzles/*.py

- each file represents a domain.

- puzzles/model/*.py

- the core model (successor rules etc.) of the domain. This is disentangled from the images.

- problem-instances/

- where the input problem instances / experimental results should go.

- helper/

- helper scripts for AMA1.

- (git submodule) planner-scripts/

- My personal scripts for invoking domain-independent planners. Not just Fast Downward.
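Because each file under puzzles/ represents a domain, the available domains can be listed from the file names. The sketch below assumes it is run from the repository root; list_domains is a hypothetical helper, and skipping files whose names start with an underscore (such as a package __init__.py) is an assumption about the directory contents.

```shell
#!/bin/bash
# Hypothetical helper, not part of the repository.
# list_domains DIR: print one domain name per line, derived from DIR/*.py.
# Assumption: files starting with an underscore are not domains.
list_domains() {
    for file in "$1"/*.py; do
        [ -e "$file" ] || continue      # the glob matched nothing
        name=$(basename "$file" .py)
        case "$name" in
            _*) ;;                      # skip package/private files
            *) echo "$name" ;;
        esac
    done
}

list_domains puzzles
```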