Author: Joseph Wilson

Artificial Intelligence is quickly becoming part of every product or service we, as consumers, interact with. A.I. is also making entirely new services and industries possible. Have you ever ordered takeout during Covid-19? Even though you've given your vehicle information, you still have to call the restaurant or blink your lights to let them know you're ready to pick up.

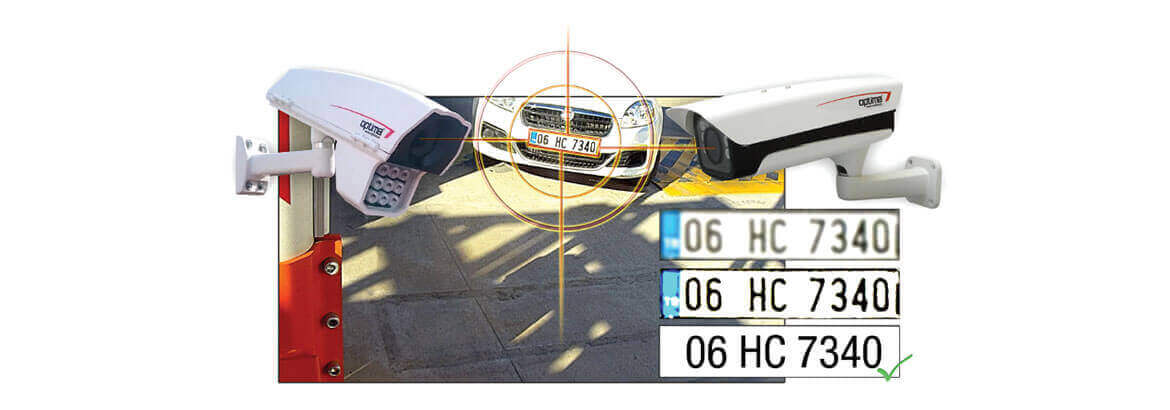

Now imagine a second scenario - you pull up in the parking lot, your license plate is recognized, the staff are notified you're ready to pick up your order, and either the staff or app can offer you your favorite sides, sauces, drinks, or even discount coupons based on your previous orders.

Small businesses and franchise chains could add features that acknowledge returning customers and gain insight into customer loyalty. Such insights can be used for individually tailored marketing, much like how your web browser provides a litany of information about your purchasing habits to every site you visit.

Currently, license plate reading solutions carry a huge cost that puts them out of reach of small businesses. This is why most users of the technology are law enforcement, parking garages, and toll roads.

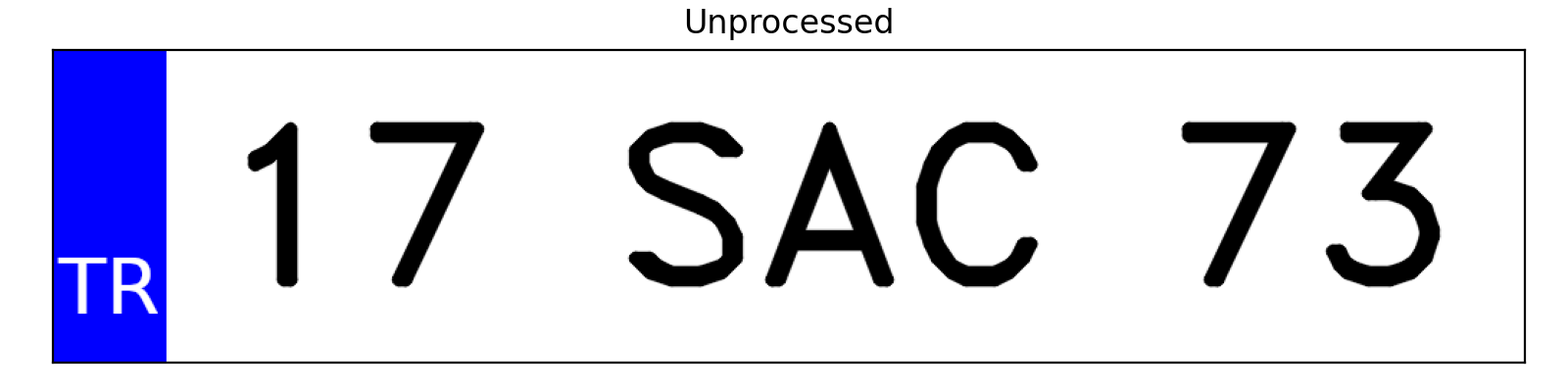

The dataset consists of 100,000 generated images of Turkish license plates, each with pixel dimensions of (1025 x 218). It was generated to address a lack of license plate data from Turkey. The license plates use 33 characters: 10 digits and 23 letters (no Q, W, or X). The images were originally in color, represented by 3 color channels: Red, Green, Blue (RGB). As a data array, this translates into a shape of (100000, 218, 1025, 3).

source - Tolga Üstünkök, Atılım University, Turkey
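To make the label space concrete, here is a small sketch of the 33-character alphabet and a one-hot encoding for a single character. The ordering of `CLASSES` and the helper `one_hot` are assumptions made for illustration; the repository may order or encode its classes differently.

```python
import string

import numpy as np

# 10 digits plus the 26 Latin capitals minus Q, W, and X -> 33 classes.
# The ordering used here is an assumption made for this example.
EXCLUDED = set("QWX")
CLASSES = string.digits + "".join(
    c for c in string.ascii_uppercase if c not in EXCLUDED
)
CHAR_TO_INDEX = {char: idx for idx, char in enumerate(CLASSES)}


def one_hot(char: str) -> np.ndarray:
    """Return a length-33 vector with a 1 at the character's class index."""
    vec = np.zeros(len(CLASSES), dtype=np.float32)
    vec[CHAR_TO_INDEX[char]] = 1.0
    return vec


print(len(CLASSES))            # 33
print(one_hot("A").argmax())   # 10: "A" follows the ten digits in this ordering
```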

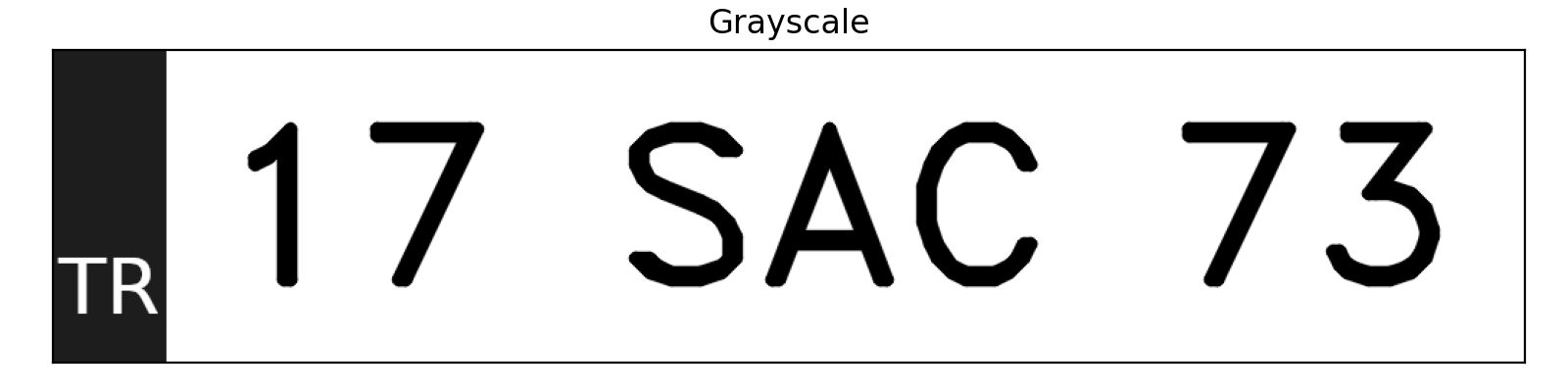

I began by converting the images to grayscale, both to simplify the next transformations and to reduce the amount of data. For those following along, our data array shape is now (100000, 218, 1025, 1).

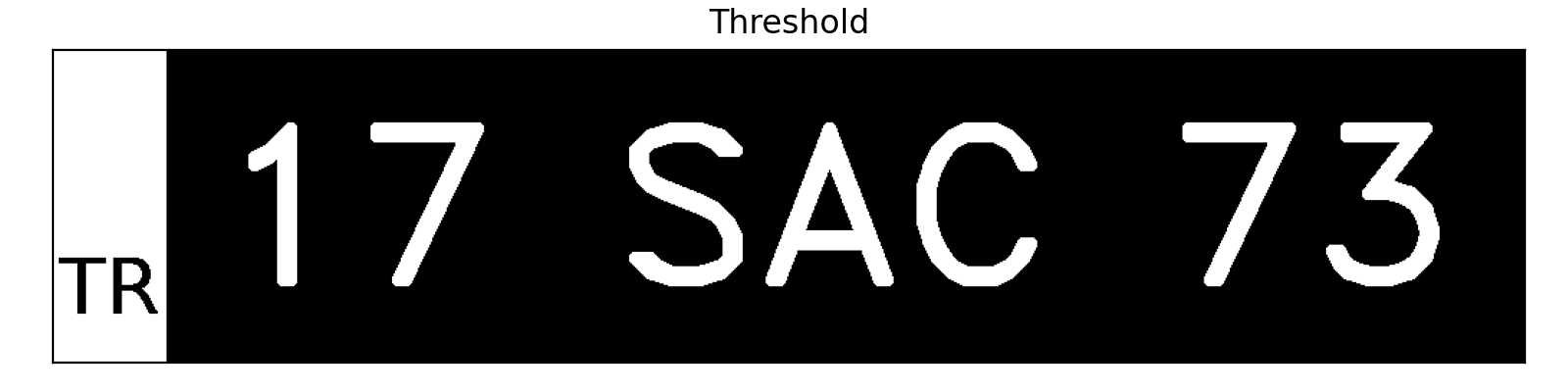

A threshold was then applied to increase contrast and strip away the more obvious noise in the image.

One of the first designs used a morphological operator, dilation, to smooth the thresholded images, but this also led the system to misidentify some adjacent characters as a single letter because the characters became so large and fuzzy.

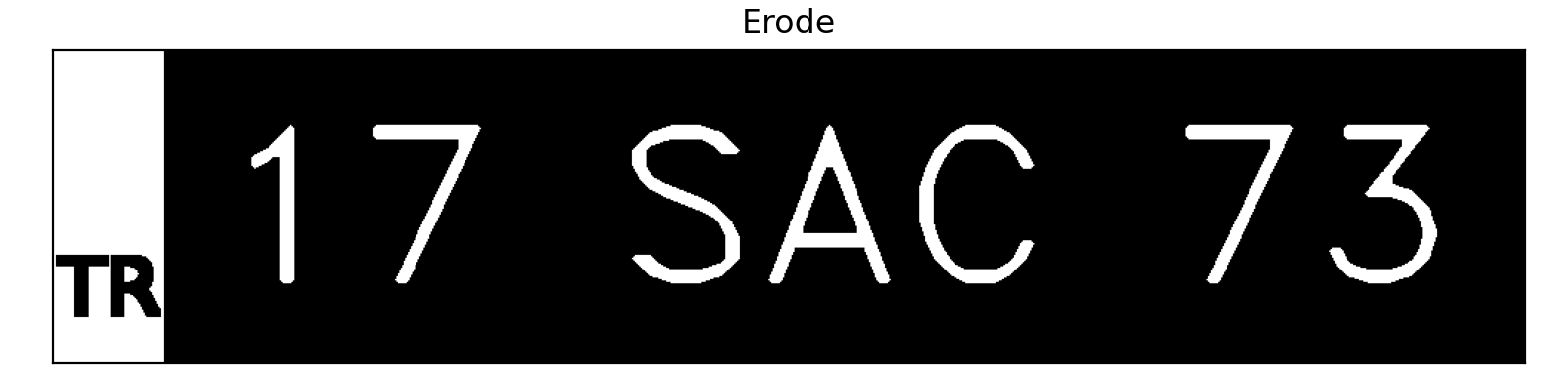

So I opted instead for erosion, a morphological operator that shrinks each character and so increases the distance between letters.

Next, a Gaussian blur was applied to smooth and soften the image.

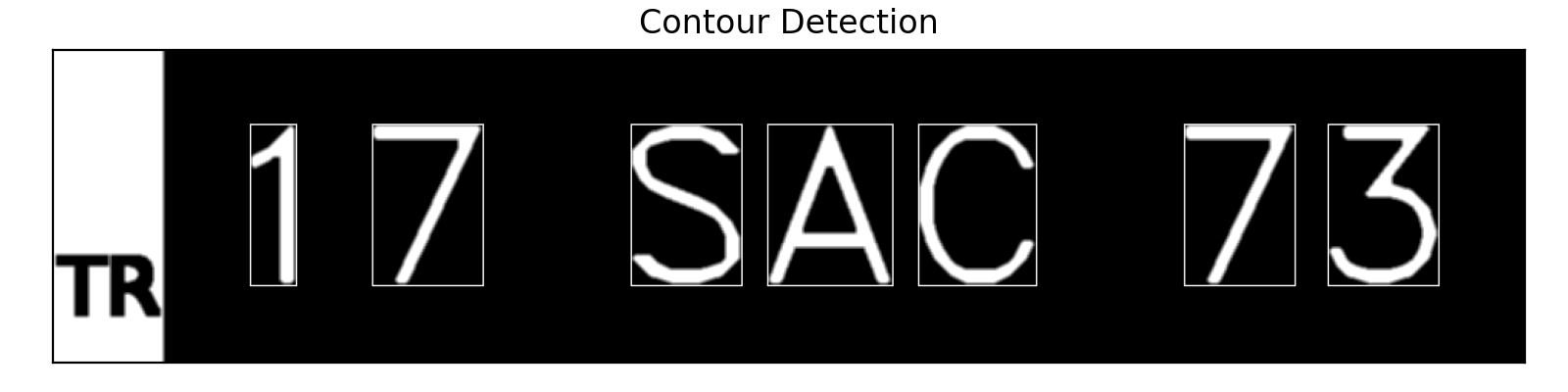

Finally, the image was sent through a segmentation function that uses contour detection to identify shapes in the image. Those shapes were then screened for obvious false positives by area and proportions.

The segmentation allows the CNN to specialize in classifying each segment as one of the 33 characters, instead of the entire license plate at once.

What remained were the individual character segments, all resized to a consistent (30 x 30) pixels (the CNN requires a fixed input size).

As a result, the data array was transformed into a shape of (700000, 30, 30, 1).

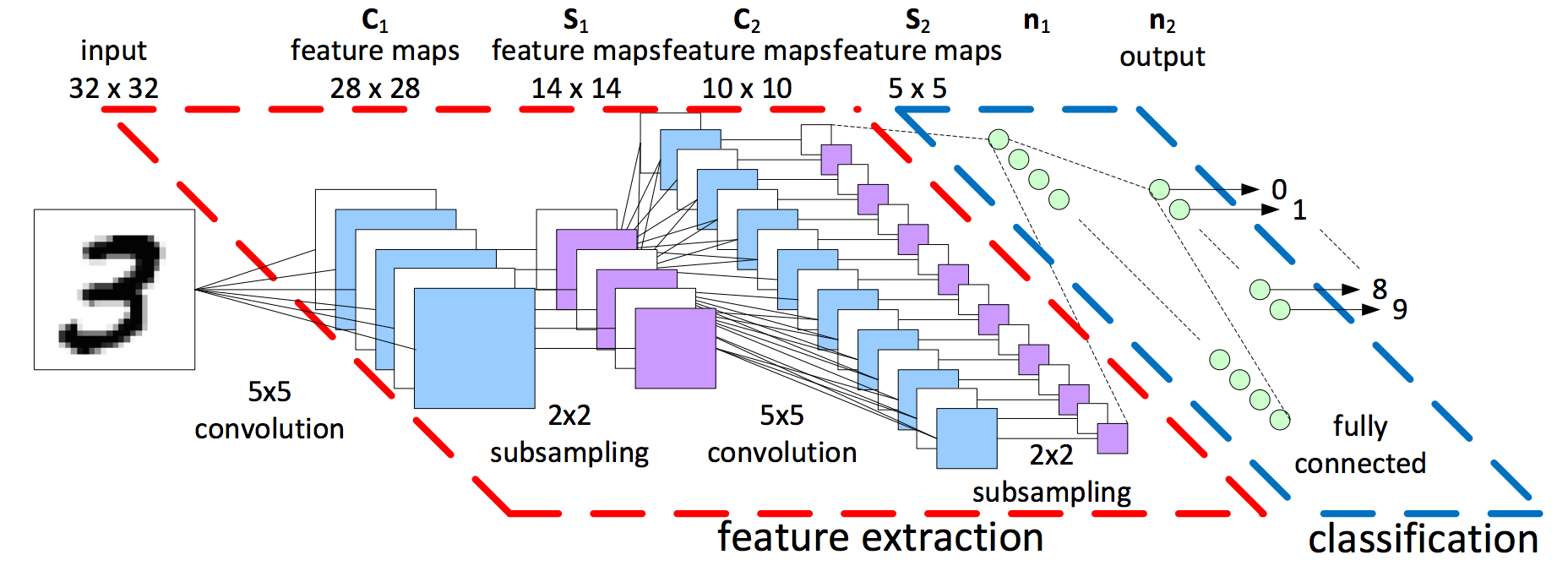

While testing multiple architecture designs, I realized the models would very easily get to 100% accuracy for this generated dataset. The final model for this phase of the project was selected for its simplicity, fast training time, and small size (< 3 MB) that could be deployed on mobile devices and web browsers.

This diagram is for illustrative purposes only, as the CNN model for this phase of the project could be much smaller and simpler.

1. Input Character Segment

- (30, 30, 1)

2. Convolution layer with 40 filters

- Kernel size (4, 4), no padding

- output (27, 27, 40)

3. Pooling (sub-sampling)

- output (13, 13, 40)

4. Pooling (sub-sampling)

- output (6, 6, 40)

5. Flatten

- (1440)

6. Dense Neural Network

- 20 neurons

- relu activation

7. Dense Neural Network

- 33 neurons

- softmax activation

- Loss function being optimized: categorical cross entropy

- This is used to calculate performance at each epoch

- Total Parameters: 30,193

- the number of feature weights this network is tweaking in order to improve its performance classifying the characters

- Optimization Method: Adam

- It combines gradient descent with momentum and per-parameter adaptive learning rates, which makes it a common default optimizer for general purposes
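The output shapes and the parameter total listed above can be checked with a few lines of arithmetic. The sketch assumes stride-1 convolution, 2 x 2 non-overlapping pooling, and one bias per filter or neuron, which is consistent with the listed shapes; `conv_output` and `pool_output` are helper names invented for this example.

```python
def conv_output(size: int, kernel: int) -> int:
    """Spatial size after a stride-1 convolution with no padding."""
    return size - kernel + 1


def pool_output(size: int, window: int = 2) -> int:
    """Spatial size after non-overlapping pooling (leftover pixels dropped)."""
    return size // window


side = conv_output(30, 4)   # 27
side = pool_output(side)    # 13
side = pool_output(side)    # 6
flat = side * side * 40     # 1440 values after flattening

conv_params = (4 * 4 * 1 + 1) * 40   # kernel weights + bias, per filter
dense1_params = (flat + 1) * 20      # input weights + bias, per neuron
dense2_params = (20 + 1) * 33
total = conv_params + dense1_params + dense2_params

print(flat, total)  # 1440 30193
```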

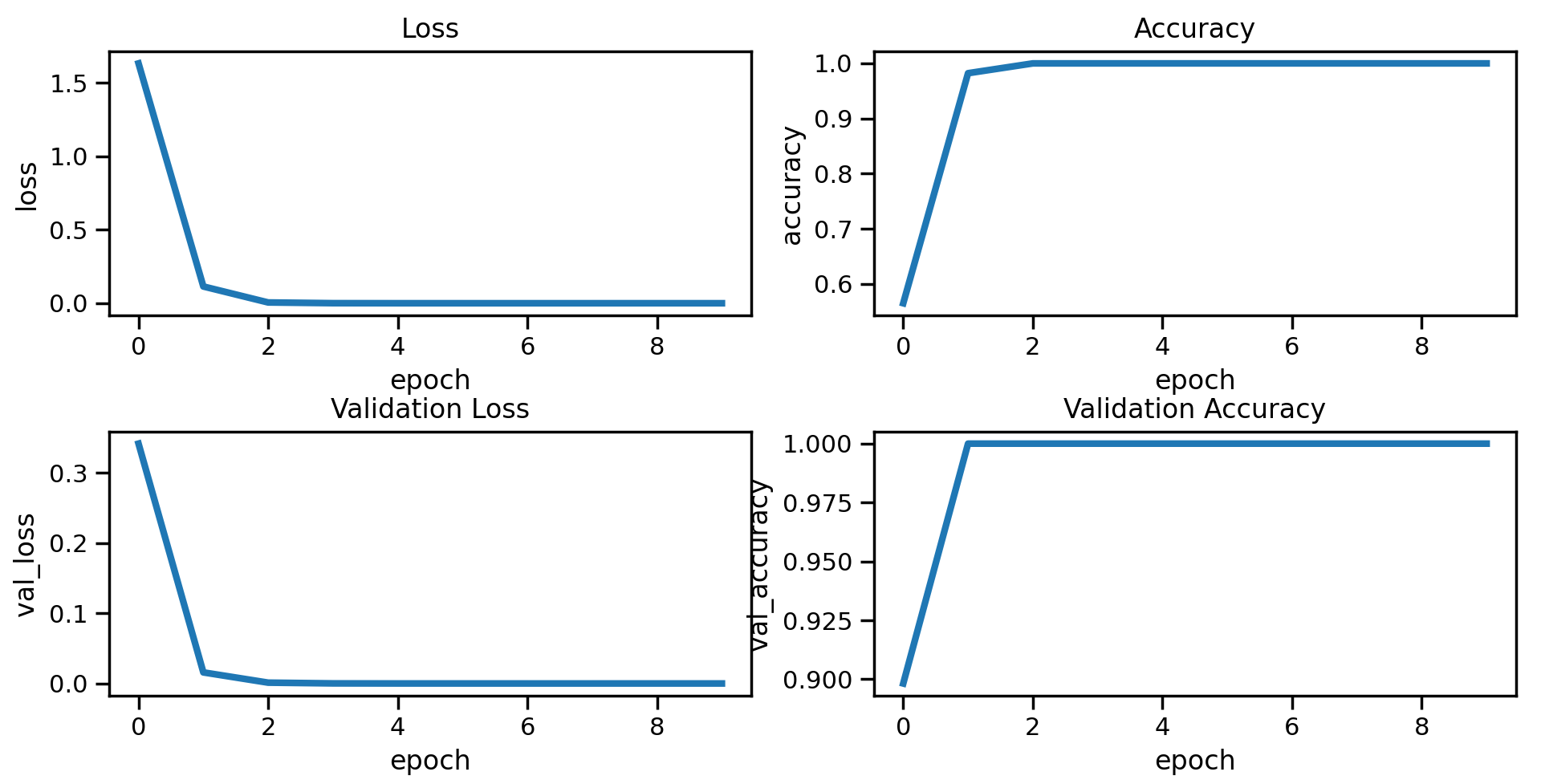

Images were sent through the CNN in batches of 20. After each batch, the optimizer updated the weights of every feature map and neuron to improve classification performance; one full pass over the training data made up an epoch. Training ran for 10 epochs in total, as the model reached > 90% accuracy within 5 epochs.
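Put together, the architecture and training settings might look like the Keras sketch below. It is an approximation under stated assumptions: max pooling and a ReLU activation on the convolution are guesses, and random arrays stand in for the real character segments, so the accuracy it reaches is meaningless.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Layer sizes follow the listed architecture; pooling type and the
# convolution activation are assumptions.
model = keras.Sequential([
    keras.Input(shape=(30, 30, 1)),
    layers.Conv2D(40, kernel_size=(4, 4), padding="valid", activation="relu"),
    layers.MaxPooling2D(pool_size=(2, 2)),
    layers.MaxPooling2D(pool_size=(2, 2)),
    layers.Flatten(),
    layers.Dense(20, activation="relu"),
    layers.Dense(33, activation="softmax"),
])
model.compile(
    optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"]
)

# Random stand-in data: 200 fake segments with random one-hot labels.
rng = np.random.default_rng(0)
x_train = rng.random((200, 30, 30, 1), dtype=np.float32)
labels = rng.integers(0, 33, size=200)
y_train = keras.utils.to_categorical(labels, num_classes=33)

# Keras applies one weight update per batch of 20 images.
history = model.fit(x_train, y_train, batch_size=20, epochs=10, verbose=0)

print(model.count_params())          # 30193
print(len(history.history["loss"]))  # 10, one loss value per epoch
```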

This project was a long but fruitful success as far as building the first iteration of a license plate recognition package. It is fully possible for new users to clone the repository, run the setup files and begin using the package to process and read license plate images.

However, more work remains to make the repository more accessible to the general open source community, both for further development and for user experience.

- CNN created and validated

- flake8 compliant

- Documentation completed

- Pytest suite written

- Code refactoring review

- Pipeline rebuilt to process noisier images

- CNN rebuilt to classify variety of images

- python

- opencv

- numpy

- keras

- tensorflow

- matplotlib

- flake8

- `git clone https://github.com/Business-Wizard/License_Plate_Recognition.git`

- Move terminal to the newly made license_plate_recognition folder

- `pip install .`

- `pip install -r requirements.txt`

- Place new license plates into license_plate_recognition/data/raw/

- Use `predict_model.py` to make use of the pre-trained model or tweak the code to your purposes

Project Organization

├── LICENSE

├── README.md <- The top-level README for developers using this project.

├── data

│ ├── external <- Data from third party sources.

│ ├── interim <- Intermediate data that has been transformed.

│ ├── processed <- The final, canonical data sets for modeling.

│ └── raw <- New unprocessed images for reading.

│

├── models <- Trained and serialized models, model predictions, or model summaries

│

├── requirements.txt <- The requirements file for reproducing the analysis environment, e.g.

│ generated with `pip freeze > requirements.txt`

│

├── setup.py <- makes project pip installable (pip install -e .) so src can be imported

└── src <- Source code for use in this project.

├── __init__.py <- Makes src a Python module

│

├── data <- Scripts to download or generate data

│ └── make_dataset.py

│

├── models <- Scripts to train models and then use trained models to make

│ │ predictions

│ ├── predict_model.py

│ └── train_model.py

│

└── visualization <- Scripts to create exploratory and results oriented visualizations

└── visualize.py

Further Reading:

- Interactive - Spot the Surveillance

- Article - Texas adds ALPR to toll roads

- Article - Large budget needed for ALPR systems

Acknowledgements:

- image - Parking Garage

- image - Street Surveillance

- image - Fixed ALPR

- image - CNN diagram

Project based on the cookiecutter data science project template. #cookiecutterdatascience