PyTorch reimplementation of Style2Paints V3 (https://github.com/lllyasviel/style2paints/blob/master/papers/sa.pdf)

Modify simulate_step*.ipynb or simulate_step*.py with your own data path before running these scripts.

See script : simulate_step1.ipynb

See script : simulate_step2.ipynb

I merged this part into the PyTorch data loader. Refer to ./Pytorch-Style2paints/dataset_multi.py.

Figure: ground truth vs. color draft.

I randomly chose [0, 20) user hints from the ground truth and pasted them onto a hint map with 3 channels (RGB, whereas the paper used RGBA).
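The hint sampling described above can be sketched roughly as follows. This is a minimal illustration, not the code in dataset_multi.py: the function name `make_hint_map`, the square `patch` size, and the zero-valued background are all assumptions made for the example.

```python
import numpy as np

def make_hint_map(gt, max_hints=20, patch=4, rng=None):
    """Paste a random number of color patches from `gt` onto an empty RGB map.

    gt: (H, W, 3) uint8 ground-truth image.
    max_hints: exclusive upper bound, so the count is drawn from [0, max_hints).
    patch: side length of each pasted square (hypothetical value).
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w, _ = gt.shape
    hint = np.zeros_like(gt)  # assumed background: all zeros
    n_hints = int(rng.integers(0, max_hints))  # high end is exclusive
    for _ in range(n_hints):
        y = int(rng.integers(0, h - patch + 1))
        x = int(rng.integers(0, w - patch + 1))
        # Copy the ground-truth colors at this location into the hint map.
        hint[y:y + patch, x:x + patch] = gt[y:y + patch, x:x + patch]
    return hint

gt = np.full((256, 256, 3), 200, dtype=np.uint8)
hint = make_hint_map(gt, rng=np.random.default_rng(0))
print(hint.shape)
```

Passing a seeded generator makes the sampled hints reproducible, which can help when debugging the data loader.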

Figure: ground truth vs. user hints.

Just run train.py. Don't forget to modify the easydict in the train.py script!

All hyper-parameters are the same as in the original paper.

args = easydict.EasyDict({

'epochs' : 100,

'batch_size' : 16,

'train_path' : 'train data path'#'./your train data path/train',

'val_path' : 'val data path'#'./your val data path/val',

'sketch_path' : 'sketch path'#"./your sketch data path/sketch",

'draft_path' : 'STL path'#"./your STL data path/STL",

'save_path' : 'result path'#"./your save path/results" ,

'img_size' : 270,

're_size' : 256,

'learning_rate' : 1e-5,

'gpus' : '[0,1,2,3]',

'lr_steps' : [5, 10, 15, 20],

"lr_decay" : 0.1,

'lamda_L1' : 0.01,

'workers' : 16,

'weight_decay' : 1e-4

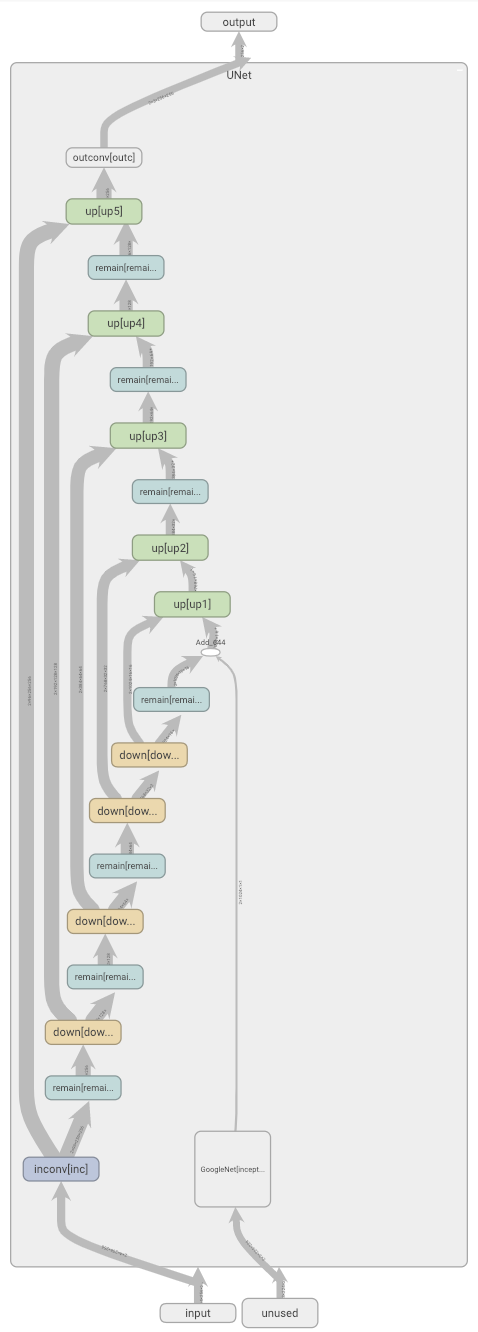

})for i, (input, df, gt) in enumerate(train_loader):Input : (batch_size, 4, 256, 256) : sketch(1 channel) + hint map(3 channels)

df : (batch_size, 3, 224, 224) : simulated data which is the input of Inception V1

gt: (batch_size, 3, 256, 256) : ground truth
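The `learning_rate`, `lr_steps`, and `lr_decay` entries in the config suggest a step-wise decay. A minimal sketch of that schedule, assuming the rate is multiplied by `lr_decay` once at each epoch listed in `lr_steps` (the same rule `torch.optim.lr_scheduler.MultiStepLR` applies with `milestones` and `gamma`); whether train.py does exactly this is an assumption, and `lr_at_epoch` is a hypothetical helper:

```python
def lr_at_epoch(epoch, base_lr=1e-5, lr_steps=(5, 10, 15, 20), lr_decay=0.1):
    """Learning rate in effect at a given epoch under step decay."""
    # Count how many milestones have been reached so far.
    n_decays = sum(1 for step in lr_steps if epoch >= step)
    return base_lr * lr_decay ** n_decays

for epoch in (0, 5, 10, 15, 20):
    print(epoch, lr_at_epoch(epoch))
```

Under this assumption the rate drops by four orders of magnitude by epoch 20 and stays constant for the remaining epochs.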

INPUT: df : (batch_size, 3, 224, 224) : simulated data, which is the input of Inception V1

OUTPUT: latent code : (batch_size, 1024, 1, 1)

INPUT: input : (batch_size, 4, 256, 256) : sketch (1 channel) + hint map (3 channels)

OUTPUT: output : (batch_size, 3, 256, 256) : colorized manuscripts
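As a quick shape check, the 4-channel input can be pictured as a channel-wise concatenation of the sketch and the hint map. The sketch below uses NumPy arrays as stand-ins for the PyTorch tensors (with tensors, `torch.cat(..., dim=1)` plays the same role); the sketch-first channel order and the variable names are assumptions for illustration.

```python
import numpy as np

batch_size = 2  # small value for illustration only

# Dummy arrays in (batch, channels, height, width) layout.
sketch = np.zeros((batch_size, 1, 256, 256), dtype=np.float32)
hint_map = np.zeros((batch_size, 3, 256, 256), dtype=np.float32)

# Stack along the channel axis: 1 sketch channel + 3 hint channels = 4.
net_input = np.concatenate([sketch, hint_map], axis=1)
print(net_input.shape)  # (2, 4, 256, 256)
```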