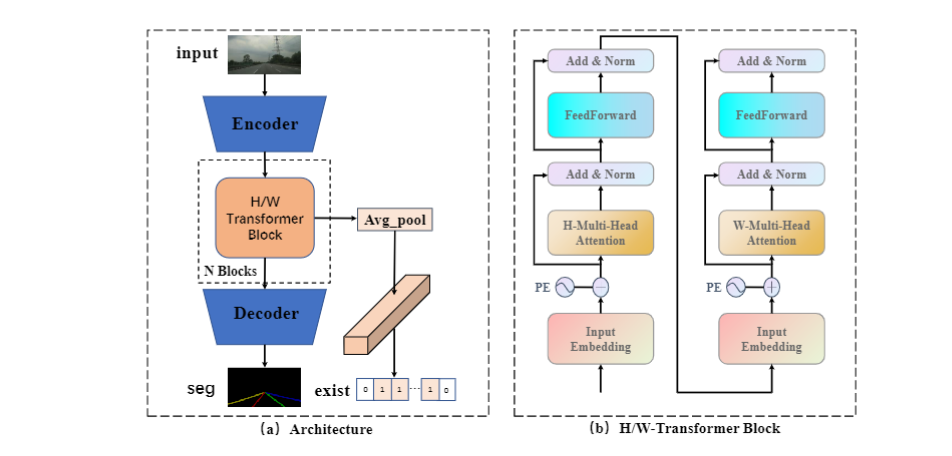

PyTorch implementation of the paper "HWLane: HW-Transformer for Lane Detection".


- HWLane achieves state-of-the-art (SOTA) results on the VIL-100, CULane, and Tusimple datasets.


Clone the HWLane repository:

`git clone https://github.com/Cuibaby/HWLane.git`

We refer to this directory as `$HWLane_ROOT`.

Create a conda virtual environment and activate it (conda is optional):

`conda create -n HWLane python=3.8 -y`

`conda activate HWLane`

Install dependencies. First install PyTorch; the `cudatoolkit` version should match the CUDA version on your system. You can install PyTorch and torchvision with conda:

`conda install pytorch torchvision cudatoolkit=10.1 -c pytorch`

or with pip:

`pip install torch torchvision`

Then install the remaining Python packages:

`pip install -r requirements.txt`
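To confirm that the installed PyTorch build matches your CUDA setup, a quick check such as the one below can help. It is a minimal sketch, not part of the repository; it only reads `torch.__version__`, `torch.version.cuda` (the CUDA version PyTorch was built against), `torch.cuda.is_available()`, and `torch.cuda.device_count()`. You can compare `torch_cuda` with the CUDA version your driver supports, as shown by `nvidia-smi`.

```python
import importlib.util


def cuda_report() -> dict:
    """Summarize the installed PyTorch/CUDA setup, or report that torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"torch": None, "cuda_available": False, "torch_cuda": None, "device_count": 0}
    import torch

    return {
        "torch": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
        # CUDA version PyTorch was compiled with (None for CPU-only builds).
        "torch_cuda": torch.version.cuda,
        "device_count": torch.cuda.device_count(),
    }


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

If `cuda_available` is `False` on a GPU machine, the PyTorch build and the system CUDA/driver most likely do not match.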

Data preparation

Download VIL100, CULane, and Tusimple, then extract them to `$VIL100ROOT`, `$CULANEROOT`, and `$TUSIMPLEROOT`, respectively. Create links to them in the `data` directory:

`cd $HWLane_ROOT`

`mkdir -p data`

`ln -s $VIL100ROOT data/VIL100`

`ln -s $CULANEROOT data/CULane`

`ln -s $TUSIMPLEROOT data/tusimple`

For CULane, you should have a structure like this:

- `$CULANEROOT/driver_xx_xxframe`: data folders (6 folders)
- `$CULANEROOT/laneseg_label_w16`: lane segmentation labels
- `$CULANEROOT/list`: data lists

For Tusimple, you should have a structure like this:

- `$TUSIMPLEROOT/clips`: data folders
- `$TUSIMPLEROOT/label_data_xxxx.json`: label JSON files (4 files)
- `$TUSIMPLEROOT/test_tasks_0627.json`: test tasks JSON file
- `$TUSIMPLEROOT/test_label.json`: test label JSON file

Since Tusimple does not provide segmentation annotations, generate them from the JSON annotations:

`python tools/generate_seg_tusimple.py --root $TUSIMPLEROOT`

This generates the `seg_label` directory.
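The idea behind this step can be sketched as follows. This is not the code of `tools/generate_seg_tusimple.py`; it is a minimal NumPy sketch assuming the standard Tusimple label format (one JSON object per line with `lanes`, `h_samples`, and `raw_file`, where `-2` marks a row without a lane point). The 720x1280 image size, the brush thickness, and the label values (lane *i* gets pixel value *i*) are illustrative assumptions.

```python
import json

import numpy as np


def load_records(path):
    """Yield one annotation dict per non-empty line of a Tusimple label_data_xxxx.json file."""
    with open(path) as f:
        for line in f:
            if line.strip():
                yield json.loads(line)


def rasterize_lanes(record, height=720, width=1280, thickness=5):
    """Draw every lane of one annotation into a mask; lane i (1-based) gets pixel value i.

    Assumptions (illustrative only): 720x1280 images and a square brush of `thickness` pixels.
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    r = thickness // 2
    for lane_id, xs in enumerate(record["lanes"], start=1):
        # Keep only the samples where the lane exists; Tusimple uses -2 for "no lane here".
        pts = [(x, y) for x, y in zip(xs, record["h_samples"]) if x >= 0]
        # Connect consecutive samples by stepping along each segment about one pixel at a time.
        for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
            steps = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
            for t in np.linspace(0.0, 1.0, steps):
                cx = int(round(x0 + t * (x1 - x0)))
                cy = int(round(y0 + t * (y1 - y0)))
                mask[max(cy - r, 0):cy + r + 1, max(cx - r, 0):cx + r + 1] = lane_id
    return mask


if __name__ == "__main__":
    # Toy record in the Tusimple format: one x per h_sample row, -2 where the lane is absent.
    record = {"lanes": [[-2, 600, 610], [700, 720, -2]], "h_samples": [240, 250, 260], "raw_file": "clips/example.jpg"}
    mask = rasterize_lanes(record)
    print(mask.shape, np.unique(mask))
```

To process real annotations, iterate over `load_records(...)` and save one mask per `raw_file`; the exact output layout of the repository's script is not reproduced here.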

For VIL100, you should have a structure like this:

- `$VIL100ROOT/Annotations`
- `$VIL100ROOT/data`
- `$VIL100ROOT/JPEGImages`
- `$VIL100ROOT/Json`
- `$VIL100ROOT/list`
- `$VIL100ROOT/test`
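Before training, it can help to verify that the linked `data` directory matches the structures listed above. The helper below is a hypothetical sketch (`missing_entries` and `EXPECTED` are not part of the repository). It checks each listed entry under `$HWLane_ROOT/data`, uses glob patterns for the `xx`/`xxxx` placeholders, and assumes that `seg_label` is generated directly under the Tusimple root.

```python
from pathlib import Path

# Entries expected under $HWLane_ROOT/data, taken from the structures listed above.
# Glob patterns stand in for the xx/xxxx placeholders.
EXPECTED = {
    "CULane": ["driver_*_*frame", "laneseg_label_w16", "list"],
    # Assumption: seg_label is created under the Tusimple root by generate_seg_tusimple.py.
    "tusimple": ["clips", "label_data_*.json", "test_tasks_0627.json", "test_label.json", "seg_label"],
    "VIL100": ["Annotations", "data", "JPEGImages", "Json", "list", "test"],
}


def missing_entries(data_root, expected=EXPECTED):
    """Return 'dataset/pattern' for every expected entry that has no match under data_root."""
    root = Path(data_root)
    missing = []
    for dataset, patterns in expected.items():
        base = root / dataset  # may be a symlink created with ln -s
        for pattern in patterns:
            if not base.is_dir() or not any(base.glob(pattern)):
                missing.append(f"{dataset}/{pattern}")
    return missing


if __name__ == "__main__":
    problems = missing_entries("data")
    print("\n".join(problems) if problems else "All expected entries found.")
```

Run it from `$HWLane_ROOT`; an empty result means every listed file or folder was found.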

Install the CULane evaluation tools.

This tool requires OpenCV for C++. Follow the OpenCV installation instructions to install it, or simply install OpenCV with:

`sudo apt-get install libopencv-dev`

Then compile the CULane evaluation tool:

`cd $HWLane_ROOT/runner/evaluator/culane/lane_evaluation`

`make`

`cd -`

Note that the default OpenCV version is 3. If you use OpenCV 2, change `OPENCV_VERSION := 3` to `OPENCV_VERSION := 2` in the `Makefile`. If you have trouble installing the C++ version, you can remove the `lane_evaluation` tool and set `type=Py_CULane` for the evaluator in the config file to use the pure Python evaluation instead.
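For reference, CULane results are usually reported as an F1 score: each lane is rendered as a thick line (30 pixels wide in the benchmark), a prediction counts as a true positive if its IoU with a ground-truth lane reaches the threshold (0.5 in the benchmark), and F1 = 2TP / (2TP + FP + FN). The sketch below illustrates this on boolean masks. It is a simplification, not the repository's C++ tool or its `Py_CULane` evaluator, and it swaps in greedy matching where the official tool uses optimal one-to-one (Hungarian) matching.

```python
import numpy as np


def mask_iou(a, b):
    """Intersection-over-union of two boolean lane masks with the same shape."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter) / float(union) if union else 0.0


def match_lanes(pred_masks, gt_masks, iou_thresh=0.5):
    """Greedy one-to-one matching by descending IoU; returns (tp, fp, fn).

    Simplification: the official CULane tool solves this assignment optimally (Hungarian).
    """
    pairs = sorted(
        ((mask_iou(p, g), i, j) for i, p in enumerate(pred_masks) for j, g in enumerate(gt_masks)),
        reverse=True,
    )
    used_pred, used_gt = set(), set()
    for iou, i, j in pairs:
        if iou < iou_thresh:
            break  # all remaining pairs have an even lower IoU
        if i not in used_pred and j not in used_gt:
            used_pred.add(i)
            used_gt.add(j)
    tp = len(used_pred)
    return tp, len(pred_masks) - tp, len(gt_masks) - tp


def f1_score(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


if __name__ == "__main__":
    h, w = 20, 40
    gt = [np.zeros((h, w), bool), np.zeros((h, w), bool)]
    gt[0][:, 5:10] = True
    gt[1][:, 25:30] = True
    pred = [np.zeros((h, w), bool)]
    pred[0][:, 6:11] = True  # overlaps gt[0] with IoU 4/6
    tp, fp, fn = match_lanes(pred, gt)
    print(tp, fp, fn, round(f1_score(tp, fp, fn), 3))
```

In the benchmark, TP, FP, and FN are accumulated over the whole test split before computing F1, rather than averaging per-image scores.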

For training, run:

`CUDA_VISIBLE_DEVICES=0,1,2,3,4 python main.py [configs/path_to_your_config] --gpus [gpu_ids]`

For example:

`CUDA_VISIBLE_DEVICES=0,1,2,3,4 python main.py configs/culane.py --gpus 0 1 2 3`

For testing, run:

`CUDA_VISIBLE_DEVICES=0,1,2,3,4 python main.py [configs/path_to_your_config] --validate --load_from [path_to_your_model] --gpus [gpu_ids]`

For example:

`CUDA_VISIBLE_DEVICES=0,1,2,3,4 python main.py configs/culane.py --validate --load_from culane.pth --gpus 0 1 2 3`

`CUDA_VISIBLE_DEVICES=0,1,2,3,4 python main.py configs/tusimple.py --validate --load_from tusimple.pth --gpus 0 1 2 3`

`CUDA_VISIBLE_DEVICES=0,1,2,3,4 python main.py configs/vilane.py --validate --load_from vilane.pth --gpus 0 1 2 3`

We provide three trained models (ResNet and VGG backbones) on VIL100, CULane, and Tusimple.

| Dataset | Backbone | Metric (paper) | Metric (this repo) | Model |
|---|---|---|---|---|
| VIL100 | ResNet34 | 91.9 | 91.9 | Coming soon |
| Tusimple | ResNet18 | 96.83 | 96.83 | Coming soon |
| CULane | VGG16 | 76.9 | 76.9 | Coming soon |
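Assuming the Tusimple number in the table is the benchmark's accuracy metric, its core can be sketched as below: a predicted point is correct if it lies within 20 pixels of the ground truth at the same row (`h_samples`), and accuracy averages the fraction of correct points. This simplified version is only illustrative; the official Tusimple evaluation script additionally scales the threshold by the lane angle and reports false-positive and false-negative rates, which are omitted here.

```python
import numpy as np


def line_accuracy(pred_xs, gt_xs, thresh=20.0):
    """Fraction of valid ground-truth samples whose predicted x lies within `thresh` pixels.

    Both lists hold one x per h_sample row; negative values (Tusimple uses -2) mean "no lane here".
    """
    pred = np.asarray(pred_xs, dtype=float)
    gt = np.asarray(gt_xs, dtype=float)
    valid = gt >= 0
    if not valid.any():
        return 0.0
    hits = (pred[valid] >= 0) & (np.abs(pred[valid] - gt[valid]) < thresh)
    return float(hits.mean())


def image_accuracy(pred_lanes, gt_lanes, thresh=20.0):
    """Average over ground-truth lanes of the best line accuracy achieved by any prediction."""
    if not gt_lanes:
        return 0.0
    best = [max((line_accuracy(p, g, thresh) for p in pred_lanes), default=0.0) for g in gt_lanes]
    return sum(best) / len(best)


if __name__ == "__main__":
    gt = [[100, 110, -2], [300, 310, 320]]
    pred = [[105, 130, -2], [301, 309, 322]]
    print(image_accuracy(pred, gt))
```

Averaging `image_accuracy` over all test images gives a number in the same spirit as the Tusimple column, though not necessarily identical to the official script's output.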

To visualize the results, just add `--view`. For example:

`python main.py configs/culane.py --validate --load_from culane.pth --gpus 0 1 2 3 --view`

The visualizations are saved in `work_dirs/[DATASET]/xxx/vis`.
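The training, testing, and visualization commands above share a handful of flags. The parser below is a hypothetical sketch that mirrors them with `argparse` to show how such a command line breaks down; it is not the repository's `main.py`, whose argument handling may differ. Note that CUDA numbers devices relative to `CUDA_VISIBLE_DEVICES`, so `--gpus 0 1 2 3` refers to the first four GPUs in that list.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags used in the commands above (not the repo's main.py)."""
    parser = argparse.ArgumentParser(description="HWLane train/test launcher (sketch)")
    parser.add_argument("config", help="path to a config file, e.g. configs/culane.py")
    parser.add_argument("--gpus", nargs="+", type=int, default=[0], help="GPU ids, relative to CUDA_VISIBLE_DEVICES")
    parser.add_argument("--validate", action="store_true", help="evaluate a checkpoint instead of training")
    parser.add_argument("--load_from", default=None, help="checkpoint to load, e.g. culane.pth")
    parser.add_argument("--view", action="store_true", help="save visualizations under work_dirs/")
    return parser


if __name__ == "__main__":
    # Parse the visualization command from above explicitly, instead of reading sys.argv.
    args = build_parser().parse_args(
        ["configs/culane.py", "--validate", "--load_from", "culane.pth", "--gpus", "0", "1", "2", "3", "--view"]
    )
    print(args)
```

With `nargs="+"`, the space-separated ids after `--gpus` are collected into a single list of integers.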

@ARTICLE{10507728,

author={Zhao, Jing and Qiu, Zengyu and Hu, Huiqin and Sun, Shiliang},

journal={IEEE Transactions on Intelligent Transportation Systems},

title={HWLane: HW-Transformer for Lane Detection},

year={2024},

volume={},

number={},

pages={1-11},

keywords={Lane detection;Feature extraction;Computational modeling;Task analysis;Visualization;Current transformers;Convolutional neural networks;Deep learning;lane detection;transformer;self-knowledge distillation},

doi={10.1109/TITS.2024.3386531}}

@ARTICLE{9872124,

author={Qiu, Zengyu and Zhao, Jing and Sun, Shiliang},

journal={IEEE Transactions on Intelligent Transportation Systems},

title={MFIALane: Multiscale Feature Information Aggregator Network for Lane Detection},

year={2022},

volume={},

number={},

pages={1-13},

doi={10.1109/TITS.2022.3195742}

}

The code is modified from RESA, SCNN, and the Tusimple Benchmark. We also recommend trying LaneDet.