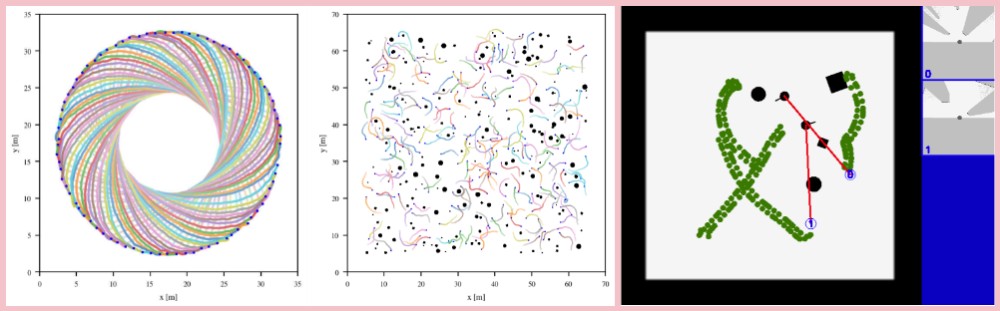

A customized grid map-based navigation simulation platform following the Gym API.

- Very fast, based on C++, customized for robot navigation.

- Accurate, not perform physical simulation and time simulation.

- Parallelization,support multi-scene and multi-robot.

- Pedestrian simulation,contains pedestrian simulation based on Pedsim and ORCA.

- Gym API, easy to use in conjunction with other reinforcement learning frameworks.

- Grid_map output, directly outputs grid_map or raw laser data.

- Parameterized configuration, easily configure environment information via yaml file, including robot shape and size, pedestrian strategy, environmental map, and more.

- Very lightweight, only depends on OpenCV for visualization. 200 robots and 200 obstacles have been simulated.

Recommended system environment: Ubuntu20 + ROS noetic + python3

sudo apt-get install libedit-dev

git clone git@github.com:DRL-Navigation/img_env.git

cd img_env

catkin_make --only-pkg-with-deps img_env

echo "source `pwd`/devel/setup.bash" >> ~/.bashrc

Enter envs/cfg to configure the yaml to be run. Note, set env_num parameter to run nodes simultaneously.

python create_launch.py test envs/cfg/test.yaml

the first param is task name, other params is yaml file,if you want to run different yaml files together,

python create_launch.py test A.yaml B.yaml C.yaml

the generated file are located at src/img_env/tmp

open up a new terminal

roslaunch img_env test.launch

python env_test.py

envs/state/state.py

following valuse are all type of numpy, and the first dim means robot_num.

vector_states, [robot_num, state_dim] vector,state_dim=3 which means the x,y position and yaw to target

sensor_maps, [robot_num, batch, 48, 48] egocentric sensor map.

is_collisions, [robot_num] 0 means no collision, 1 means get collision with static obstacles, 2 means get collision with pedestrians, 3 means get collision with other robots.

is_arrives, [robot_num] 1 means reach the goal while 0 is not.

lasers, [robot_num, laser_range_total] original laser scan data.

ped_vector_states, [robot_num, n] n = 1 + vector_info_number * max_ped_number, where 1 means the real ped number

ped_maps, [robot_num, 3, 48, 48] egocentric 3-channel pedestrians map, which means x,y velocity and position of the nearby pedestrains.

step_ds, [robot_num] the distance of getting close to the target each step.

ped_min_dists, [robot_num] the closest distance of nearby pedestrains, used in reward function.

To modify reward, action space and other operations, please wrapper the package according to the Gym API.

!!! NOTE: The order of filling in wrappers in yaml is very important. The first one is enclosure in the innermost layer and executed first, and the last one is enclosured in the outermost layer and executed last. Imagine preorder traversal, postorder traversal of the binary tree.

All parameters are set in yaml file, they are sent to C++

the 3 regular behaviour of pedestrains:

pedsim, rvo, ervo, thanks to their codes are all opensourced, we converge them and put in 3rd_party

-

pedsimis controled by SFM -

rvois controled by orca -

ervodenotes the emitional version of rvo

to change pedestrain behaviour, set yaml file ped_sim:type with one of [ pedscene,rvoscene,ervoscene]

parameters server

# close GUI

rosparam set /image_ped_circle0/show_gui false

# open GUI

rosparam set /image_ped_circle0/show_gui true

# `image_ped_circle0` is the node name, which is configured in the yaml file, `env_name`+env_id

# type `rosnode list` show all nodes

@Article{chen2020distributed,

title = {Distributed Non-Communicating Multi-Robot Collision Avoidance via Map-Based Deep Reinforcement Learning},

author = {Chen, Guangda and Yao, Shunyi and Ma, Jun and Pan, Lifan and Chen, Yu'an and Xu, Pei and Ji, Jianmin and Chen, Xiaoping},

journal = {Sensors},

volume = {20},

number = {17},

pages = {4836},

year = {2020},

publisher = {Multidisciplinary Digital Publishing Institute},

doi = {10.3390/s20174836},

url = {https://www.mdpi.com/1424-8220/20/17/4836}

};

@inproceedings{yao2021crowd,

title={Crowd-Aware Robot Navigation for Pedestrians with Multiple Collision Avoidance Strategies via Map-based Deep Reinforcement Learning},

author={Yao, Shunyi and Chen, Guangda and Qiu, Quecheng and Ma, Jun and Chen, Xiaoping and Ji, Jianmin},

booktitle={2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={8144--8150},

year={2021},

organization={IEEE}

}

@article{qiu2023training,

title={Training A Non-Cooperator to Identify Vulnerabilities and Improve Robustness for Robot Navigation},

author={Qiu, Quecheng and Zhang, Xuyang and Yao, Shunyi and Chen, Guangda and Hua, Bei and Ji, Jianmin and others},

journal={IEEE Robotics and Automation Letters},

year={2023},

publisher={IEEE}

}

chen2020distributed Sensors-20

git clone git@github.com:DRL-Navigation/DDRL4NAV.git --recurse-submodules

cd DDRL4NAV/USTC_lab/env/img_env

catkin_make --only-pkg-with-deps img_env

echo "source `pwd`/devel/setup.bash" >> ~/.bashrc

python create_launch.py sensor_map envs/cfg/sensor_nap.yaml

roslaunch img_env sensor_map.launch

# open a new terminal

cd DDRL4NAV/sh

bash start_redis.sh

bash start.sh config/config_sensor_map.sh

bash tfboard.sh config/config_sensor_map.sh

# show gui

rosparam set /sensor_map0/show_gui true

yao2021crowd IROS-21

git clone git@github.com:DRL-Navigation/DDRL4NAV.git --recurse-submodules

cd DDRL4NAV/USTC_lab/env/img_env

catkin_make --only-pkg-with-deps img_env

echo "source `pwd`/devel/setup.bash" >> ~/.bashrc

python create_launch.py ped_map envs/cfg/ped_nap.yaml

roslaunch img_env ped_map.launch

# open a new terminal

cd DDRL4NAV/sh

bash start_redis.sh

bash start.sh config/config_ped_map.sh

bash tfboard.sh config/config_ped_map.sh

# show gui

rosparam set /ped_map0/show_gui true