- 06/2021: check out our domain adaptation for panoptic segmentation paper Cross-View Regularization for Domain Adaptive Panoptic Segmentation (accepted to CVPR 2021). We design a domain adaptive panoptic segmentation network that exploits inter-style consistency and inter-task regularization for optimal domain adaptation in panoptic segmentation. Code available.

- 06/2021: check out our domain generalization paper FSDR: Frequency Space Domain Randomization for Domain Generalization (accepted to CVPR 2021). Inspired by the idea of JPEG that converts spatial images into multiple frequency components (FCs), we propose Frequency Space Domain Randomization (FSDR) that randomizes images in frequency space by keeping domain-invariant FCs (DIFs) and randomizing domain-variant FCs (DVFs) only. Code available.

- 06/2021: check out our domain adaptation for object detection paper Uncertainty-Aware Unsupervised Domain Adaptation in Object Detection (accepted to IEEE TMM 2021). We design an uncertainty-aware domain adaptation network (UaDAN) that introduces conditional adversarial learning to align well-aligned and poorly-aligned samples separately in different manners. Code available.

Scale variance minimization for unsupervised domain adaptation in image segmentation

Dayan Guan, Jiaxing Huang, Aoran Xiao, Shijian Lu

School of Computer Science and Engineering, Nanyang Technological University, Singapore

Pattern Recognition, 2021.

If you find this code useful for your research, please cite our paper:

@article{guan2021scale,

title={Scale variance minimization for unsupervised domain adaptation in image segmentation},

author={Guan, Dayan and Huang, Jiaxing and Lu, Shijian and Xiao, Aoran},

journal={Pattern Recognition},

volume={112},

pages={107764},

year={2021},

publisher={Elsevier}

}

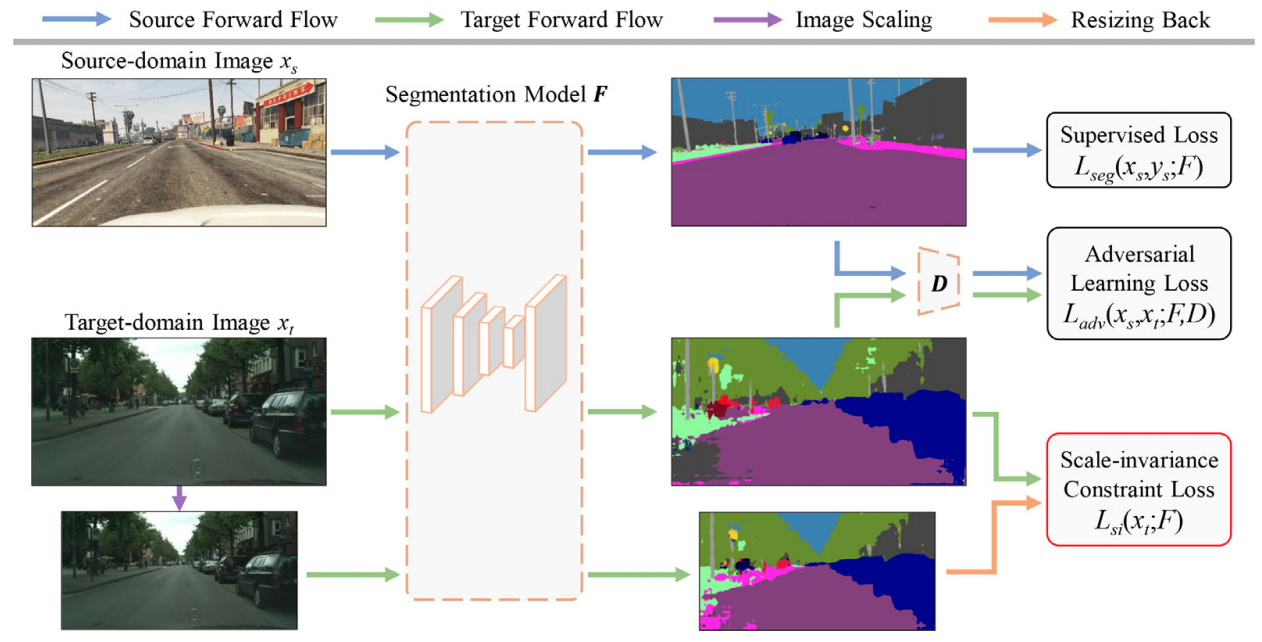

We focus on unsupervised domain adaptation (UDA) in image segmentation. Existing works address this challenge largely by aligning inter-domain representations, which may lead to over-alignment that impairs the semantic structures of images and, in turn, target-domain segmentation performance. We design a scale variance minimization (SVMin) method that enforces intra-image semantic structure consistency in the target domain. Specifically, SVMin leverages an intrinsic property: a simple scale transformation has little effect on the semantic structures of images. It thus introduces a form of supervision in the target domain by imposing a scale-invariance constraint while learning to segment an image and its scale-transformed version concurrently. Additionally, SVMin is complementary to most existing UDA techniques and can be easily incorporated with a consistent performance boost and few extra parameters. Extensive experiments show that our method achieves superior domain adaptive segmentation performance compared with the state of the art. Preliminary studies show that SVMin can be easily adapted for UDA-based image classification.
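The scale-invariance idea in the abstract can be illustrated with a small, dependency-free sketch. It is not the loss implemented in this repository: the function names, the nearest-neighbour upsampling, and the mean-squared-difference form are all assumptions chosen for readability. It only shows the general pattern of comparing predictions on an image with predictions on a down-scaled copy of the same image.

```python
# Hypothetical sketch of a scale-consistency penalty between two views of one
# target-domain image. Names and the squared-difference form are assumptions,
# not the SVMin repository's actual loss.

def upsample_nearest(prob_map, factor):
    """Nearest-neighbour upsampling of an [H][W][C] nested list by an integer factor."""
    height, width = len(prob_map), len(prob_map[0])
    return [
        [list(prob_map[y // factor][x // factor]) for x in range(width * factor)]
        for y in range(height * factor)
    ]


def scale_variance(probs_full, probs_small, factor):
    """Mean squared difference between full-scale class probabilities and the
    upsampled probabilities predicted on a down-scaled copy of the same image."""
    upsampled = upsample_nearest(probs_small, factor)
    total, count = 0.0, 0
    for row_full, row_up in zip(probs_full, upsampled):
        for p_full, p_up in zip(row_full, row_up):
            for a, b in zip(p_full, p_up):
                total += (a - b) ** 2
                count += 1
    return total / count


# Toy 2x2 prediction with 2 classes, and the prediction on its 1x1 down-scaled copy.
probs_full = [[[0.9, 0.1], [0.8, 0.2]],
              [[0.7, 0.3], [0.6, 0.4]]]
probs_small = [[[0.75, 0.25]]]

loss = scale_variance(probs_full, probs_small, factor=2)
```

In a training loop, a term of this kind would be computed on unlabeled target images and added to the supervised source-domain loss, so the model is penalized when its predictions change under rescaling.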

- Conda environment:

conda create -n svmin python=3.6

conda activate svmin

conda install -c menpo opencv

pip install torch==1.0.0 torchvision==0.2.1

- Clone ADVENT:

git clone https://github.com/valeoai/ADVENT.git

pip install -e ./ADVENT

- Clone CRST:

git clone https://github.com/yzou2/CRST.git

pip install packaging h5py

- Clone the repo:

git clone https://github.com/Dayan-Guan/SVMin.git

pip install -e ./SVMin

cp SVMin/crst/*py CRST

cp SVMin/crst/deeplab/*py CRST/deeplab

- GTA5: Please follow the instructions here to download images and semantic segmentation annotations. The GTA5 dataset directory should have this basic structure:

SVMin/data/GTA5/ % GTA dataset root

SVMin/data/GTA5/images/ % GTA images

SVMin/data/GTA5/labels/ % Semantic segmentation labels

...

- Cityscapes: Please follow the instructions in Cityscapes to download the images and validation ground-truths. The Cityscapes dataset directory should have this basic structure:

SVMin/data/Cityscapes/ % Cityscapes dataset root

SVMin/data/Cityscapes/leftImg8bit % Cityscapes images

SVMin/data/Cityscapes/leftImg8bit/val

SVMin/data/Cityscapes/gtFine % Semantic segmentation labels

SVMin/data/Cityscapes/gtFine/val

...

Pre-trained models can be downloaded here and put in SVMin/pretrained_models
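Before running evaluation or training, it can help to confirm that the directories listed above are in place. The following is a minimal sketch, not part of this repository; the helper name `missing_dirs` and the `EXPECTED_DIRS` list are hypothetical and simply mirror the paths shown in this README, relative to the SVMin checkout.

```python
from pathlib import Path

# Sub-directories named in this README, relative to the SVMin checkout.
# This list is an illustrative assumption; extend it if your setup differs.
EXPECTED_DIRS = [
    "data/GTA5/images",
    "data/GTA5/labels",
    "data/Cityscapes/leftImg8bit/val",
    "data/Cityscapes/gtFine/val",
    "pretrained_models",
]


def missing_dirs(root):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
```

Calling `missing_dirs("SVMin")` from the directory that contains the checkout returns an empty list when the layout matches, and otherwise the relative paths that still need to be created or downloaded.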

To evaluate SVMin:

python test.py --cfg configs/SVMin_pretrained.yml

To evaluate SVMin_AL:

python test.py --cfg configs/SVMin_AL_pretrained.yml

To evaluate SVMin_AL_TR:

python evaluate_advent.py --test-flipping --data-dir ../SVMin/data/Cityscapes --restore-from ../SVMin/pretrained_models/SVMin_AL_TR_pretrained.pth --save ../SVMin/experiments/GTA2Cityscapes_SVMin_AL_TR

To train SVMin:

python train.py --cfg configs/SVMin.yml

To test SVMin:

python test.py --cfg configs/SVMin.yml

This codebase borrows heavily from ADVENT and CRST.

If you have any questions, please contact: dayan.guan@ntu.edu.sg