This is a repository to help all readers who are interested in handling noisy labels.

If your papers are missing or you have other requests, please contact ghkswns91@gmail.com.

We will update this repository and the paper on a regular basis to keep them up to date.

- Feb 16, 2022: Our survey paper was accepted to TNNLS journal (IF=10.451) [arxiv version]

- Feb 17, 2022: Last update: including papers published in 2021 and 2022

@article{song2022survey,

title={Learning from Noisy Labels with Deep Neural Networks: A Survey},

author={Song, Hwanjun and Kim, Minseok and Park, Dongmin and Shin, Yooju and Lee, Jae-Gil},

journal={IEEE Transactions on Neural Networks and Learning Systems},

year={2022}}

All papers are sorted chronologically within the five categories below, so that you can find related papers more quickly.

We also provide a tabular summary of the methods with a methodological comparison (Table 2 in the paper). - [here]

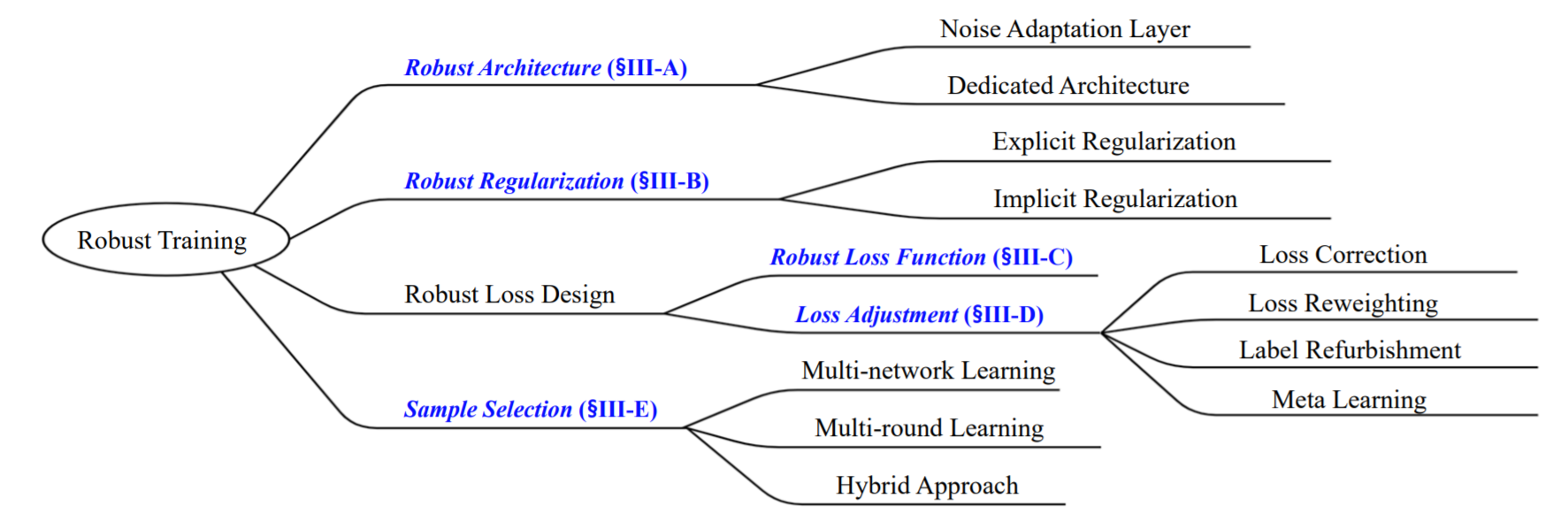

This is a brief summary of the categorization. Please see Section III in our survey paper for the details - [here]

[Index: Robust Architecture, Robust Regularization, Robust Loss Function, Loss Adjustment, Sample Selection]

Robust Learning for Noisy Labels

|--- A. Robust Architecture

|--- A.1. Noise Adaptation Layer: adding a noise adaptation layer at the top of an underlying DNN to learn the label transition process.

|--- A.2. Dedicated Architecture: developing a dedicated architecture to reliably support more diverse types of label noise.

|--- B. Robust Regularization

|--- B.1. Explicit Regularization: an explicit form that modifies the expected training loss, e.g., weight decay and dropout.

|--- B.2. Implicit Regularization: an implicit form that gives the effect of stochasticity, e.g., data augmentation and mini-batch SGD.

|--- C. Robust Loss Function: designing a new loss function robust to label noise.

|--- D. Loss Adjustment

|--- D.1. Loss Correction: multiplying the prediction by the estimated transition matrix for all the observable labels.

|--- D.2. Loss Reweighting: multiplying the example loss by the estimated example confidence (weight).

|--- D.3. Label Refurbishment: replacing the original label with another, more reliable one.

|--- D.4. Meta Learning: finding an optimal adjustment rule for loss reweighting or label refurbishment.

|--- E. Sample Selection

|--- E.1. Multi-network Learning: collaborative learning or co-training to identify clean examples from noisy data.

|--- E.2. Multi-round Learning: refining the selected clean set by training over multiple rounds.

|--- E.3. Hybrid Learning: combining a specific sample selection strategy with a specific semi-supervised learning model or other orthogonal directions.
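
As a rough illustration of the loss correction idea (D.1), the sketch below pushes the model's predicted class probabilities through a label transition matrix before computing cross-entropy against the noisy label. This variant is often called forward correction; it is a minimal example and not the implementation of any particular paper listed here. The function names, the 2-class matrix `T`, and the logits are all hypothetical, and the matrix is assumed to be known, whereas in practice it has to be estimated.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward_corrected_loss(logits, noisy_label, transition):
    """Cross-entropy against the noisy label after forward correction.

    transition[i][j] is the assumed probability P(noisy = j | clean = i).
    """
    clean_probs = softmax(logits)
    num_classes = len(clean_probs)
    # Predicted distribution over *noisy* labels: clean_probs times transition.
    noisy_probs = [
        sum(clean_probs[i] * transition[i][j] for i in range(num_classes))
        for j in range(num_classes)
    ]
    return -math.log(noisy_probs[noisy_label])


# Hypothetical 2-class setup in which 20% of the labels are flipped.
T = [[0.8, 0.2],
     [0.2, 0.8]]
logits = [2.0, 0.0]  # the model is fairly confident in class 0
plain = -math.log(softmax(logits)[1])          # uncorrected loss for noisy label 1
corrected = forward_corrected_loss(logits, 1, T)
```

With this matrix, the corrected loss for the disagreeing label is smaller than the plain cross-entropy, because part of the disagreement is attributed to label noise rather than to the model.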

In addition, there are some valuable theoretical or empirical papers for understanding the nature of noisy labels.

Go to Theoretical or Empirical Understanding.

| Year | Venue | Title | Implementation |

|---|---|---|---|

| 2015 | ICCV | Webly supervised learning of convolutional networks | Official (Caffe) |

| 2015 | ICLRW | Training convolutional networks with noisy labels | Unofficial (Keras) |

| 2016 | ICDM | Learning deep networks from noisy labels with dropout regularization | Official (MATLAB) |

| 2016 | ICASSP | Training deep neural-networks based on unreliable labels | Unofficial (Chainer) |

| 2017 | ICLR | Training deep neural-networks using a noise adaptation layer | Official (Keras) |

| Year | Venue | Title | Implementation |

|---|---|---|---|

| 2015 | CVPR | Learning from massive noisy labeled data for image classification | Official (Caffe) |

| 2018 | NeurIPS | Masking: A new perspective of noisy supervision | Official (TensorFlow) |

| 2018 | TIP | Deep learning from noisy image labels with quality embedding | N/A |

| 2019 | ICML | Robust inference via generative classifiers for handling noisy labels | Official (PyTorch) |

| Year | Venue | Title | Implementation |

|---|---|---|---|

| 2017 | TNNLS | Multiclass learning with partially corrupted labels | Unofficial (PyTorch) |

| 2017 | NeurIPS | Active Bias: Training more accurate neural networks by emphasizing high variance samples | Unofficial (TensorFlow) |
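
The loss reweighting idea (D.2 in the categorization above) can be sketched in a few lines: each example's loss is scaled by an estimated confidence before averaging. The snippet is only an illustration under assumptions. The function name and the weight values are hypothetical, how the weights are estimated is exactly what the individual papers differ on, and normalizing by the sum of the weights is one possible design choice rather than a fixed rule.

```python
def reweighted_loss(losses, weights):
    """Weighted mean of per-example losses.

    weights[i] is the estimated confidence that example i is correctly labeled.
    """
    if len(losses) != len(weights):
        raise ValueError("losses and weights must have the same length")
    total_weight = sum(weights)
    if total_weight == 0:
        return 0.0  # no example is trusted, so nothing contributes
    return sum(w * l for w, l in zip(weights, losses)) / total_weight


# Hypothetical mini-batch: the third example has a large loss and is
# suspected to be mislabeled, so it receives a small weight.
losses = [0.2, 0.3, 2.5]
weights = [1.0, 1.0, 0.1]
batch_loss = reweighted_loss(losses, weights)
```

With uniform weights the function reduces to the ordinary mean loss; down-weighting the suspicious example lowers its influence on the batch loss and therefore on the gradient.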

| Year | Venue | Title | Implementation |

|---|---|---|---|

| 2017 | NeurIPSW | Learning to learn from weak supervision by full supervision | Unofficial (TensorFlow) |

| 2017 | ICCV | Learning from noisy labels with distillation | N/A |

| 2018 | ICML | Learning to reweight examples for robust deep learning | Official (TensorFlow) |

| 2019 | NeurIPS | Meta-Weight-Net: Learning an explicit mapping for sample weighting | Official (PyTorch) |

| 2020 | CVPR | Distilling effective supervision from severe label noise | Official (TensorFlow) |

| 2021 | AAAI | Meta label correction for noisy label learning | Official (PyTorch) |

| 2021 | ICCV | Adaptive Label Noise Cleaning with Meta-Supervision for Deep Face Recognition | N/A |

| Year | Venue | Title | Implementation |

|---|---|---|---|

| 2019 | ICML | SELFIE: Refurbishing unclean samples for robust deep learning | Official (TensorFlow) |

| 2020 | ICLR | SELF: Learning to filter noisy labels with self-ensembling | N/A |

| 2020 | ICLR | DivideMix: Learning with noisy labels as semi-supervised learning | Official (PyTorch) |

| 2021 | ICLR | Robust curriculum learning: from clean label detection to noisy label self-correction | N/A |

| 2021 | NeurIPS | Understanding and Improving Early Stopping for Learning with Noisy Labels | Official (PyTorch) |
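
Many sample selection approaches (category E above) build on a common heuristic: examples with a small training loss are more likely to be clean. The sketch below shows only that selection step, under assumptions. The function name and `keep_ratio` parameter are hypothetical, the losses are made up, and real methods add further machinery such as multiple networks, multiple rounds, or semi-supervised learning on the rejected examples.

```python
def select_small_loss(losses, keep_ratio):
    """Return the indices of the examples with the smallest losses.

    keep_ratio is the assumed fraction of clean examples to keep (0 to 1).
    """
    if not 0.0 <= keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be between 0 and 1")
    num_keep = round(len(losses) * keep_ratio)
    # Rank example indices from smallest to largest loss.
    ranked = sorted(range(len(losses)), key=lambda i: losses[i])
    return sorted(ranked[:num_keep])


# Hypothetical per-example losses for a mini-batch of five examples.
losses = [0.1, 2.3, 0.4, 1.9, 0.2]
clean_idx = select_small_loss(losses, keep_ratio=0.6)  # -> [0, 2, 4]
```

Only the selected indices would then be used for the parameter update; the remaining examples are either discarded or, in hybrid methods, treated as unlabeled data.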

How Does a Neural Network’s Architecture Impact Its Robustness to Noisy Labels, NeurIPS 2021 [Link]

Beyond Class-Conditional Assumption: A Primary Attempt to Combat Instance-Dependent Label Noise, AAAI 2021 [Link]

Understanding Instance-Level Label Noise: Disparate Impacts and Treatments, ICML 2021 [Link]

Learning with Noisy Labels Revisited: A Study Using Real-World Human Annotations, ICLR 2022 [Link]

Some studies address more realistic setups associated with noisy labels.

- Online Continual Learning on a Contaminated Data Stream with Blurry Task Boundaries, CVPR 2022, [code]

This paper addresses the problem of noisy labels in the online continual learning setup.

- Learning from Multiple Annotator Noisy Labels via Sample-wise Label Fusion, ECCV 2022, [code]

This paper addresses the scenario in which each data instance has multiple noisy labels from annotators (instead of a single label).