This is a collection of papers on leveraging Large Language Models (LLMs) for graph tasks. It is based on our survey paper: A Survey of Graph Meets Large Language Model: Progress and Future Directions.

We will try to keep this list updated frequently. If you find any errors or missing papers, please don't hesitate to open an issue or a pull request.

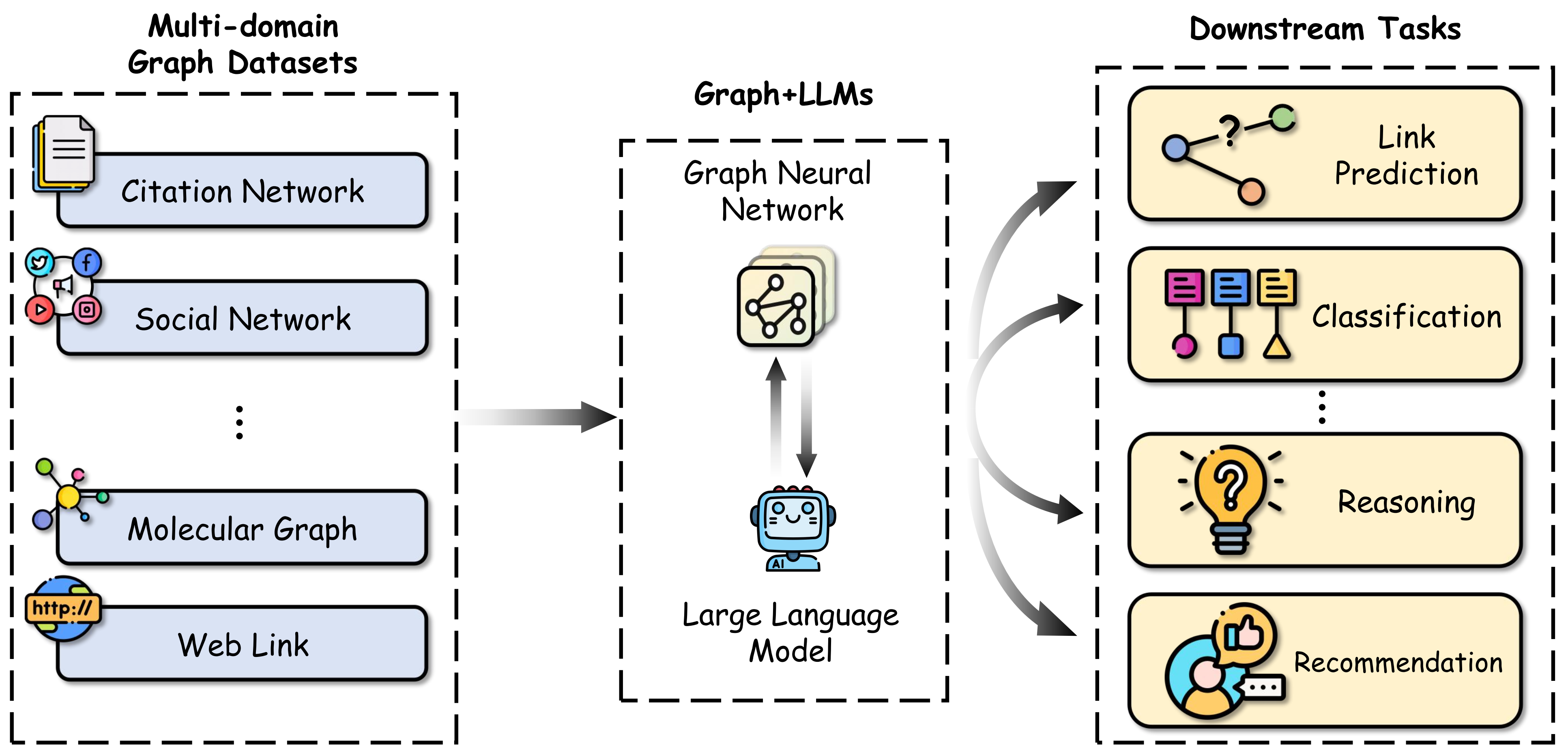

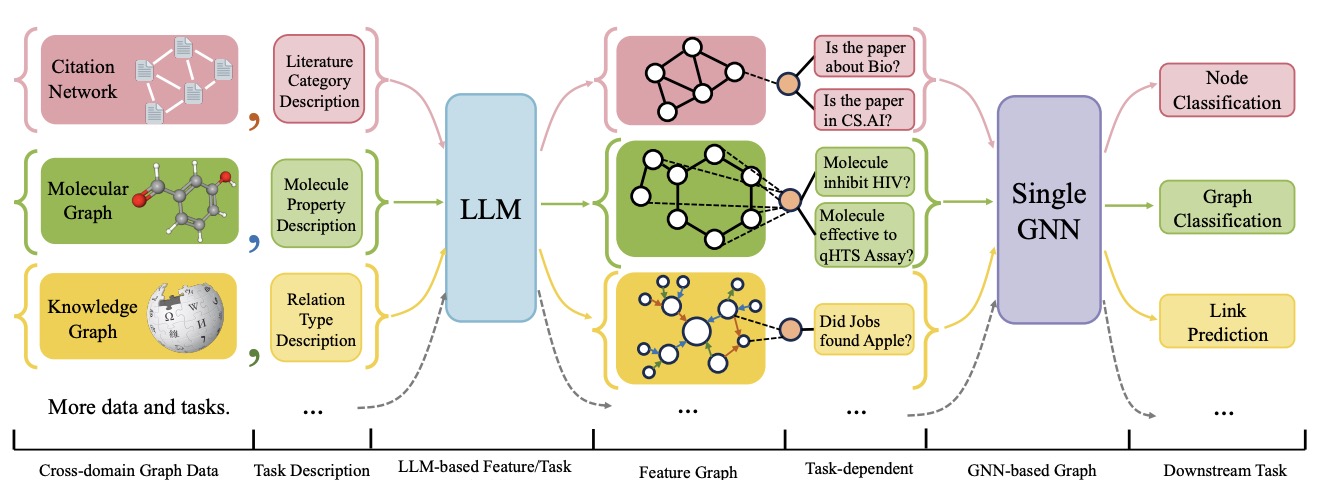

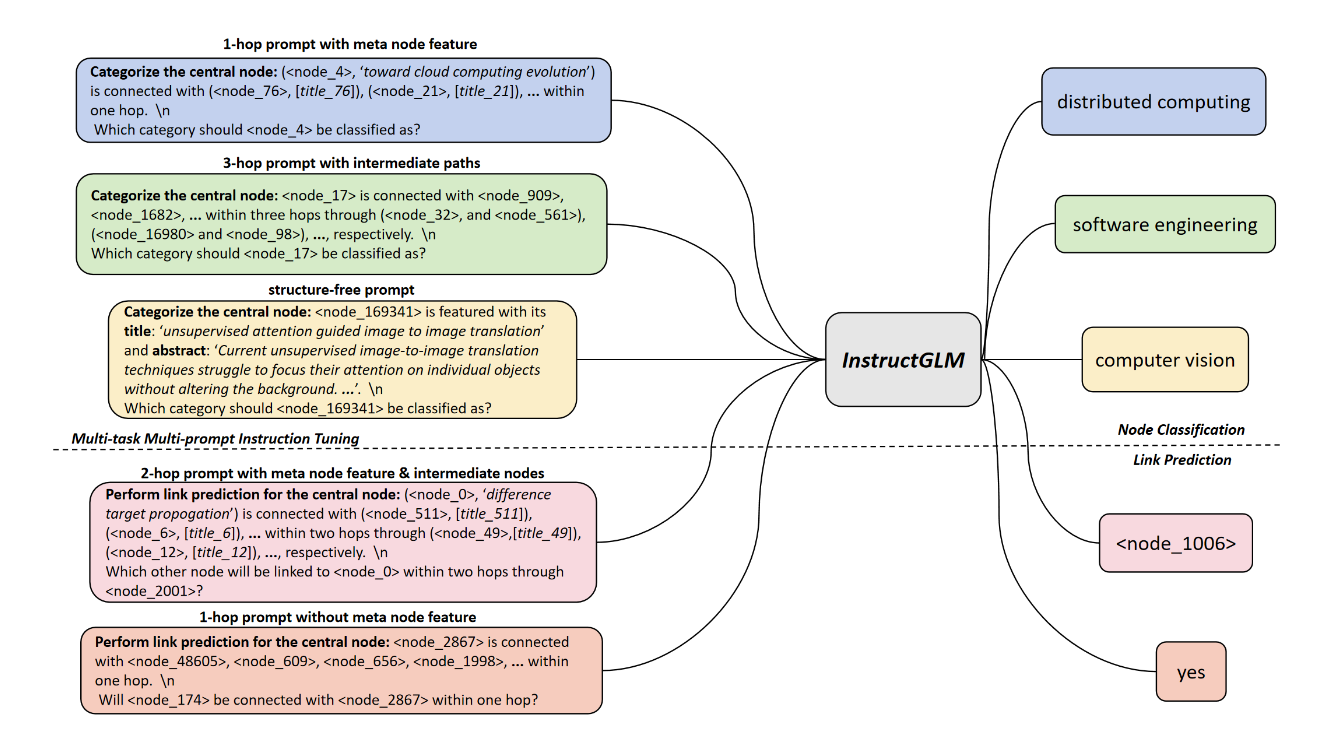

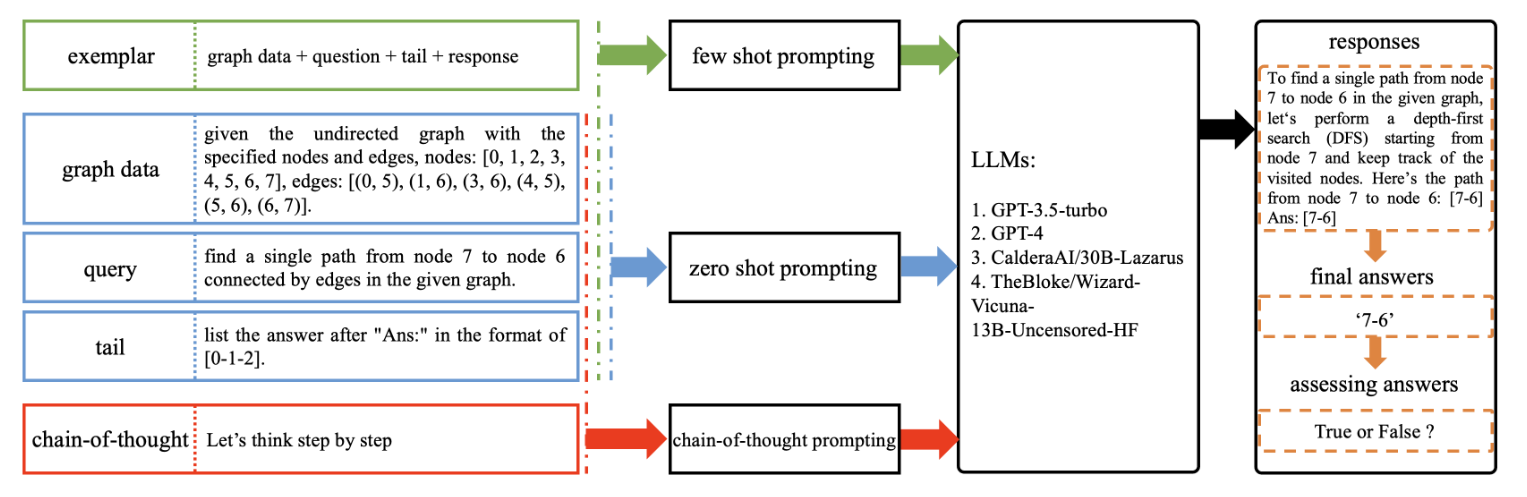

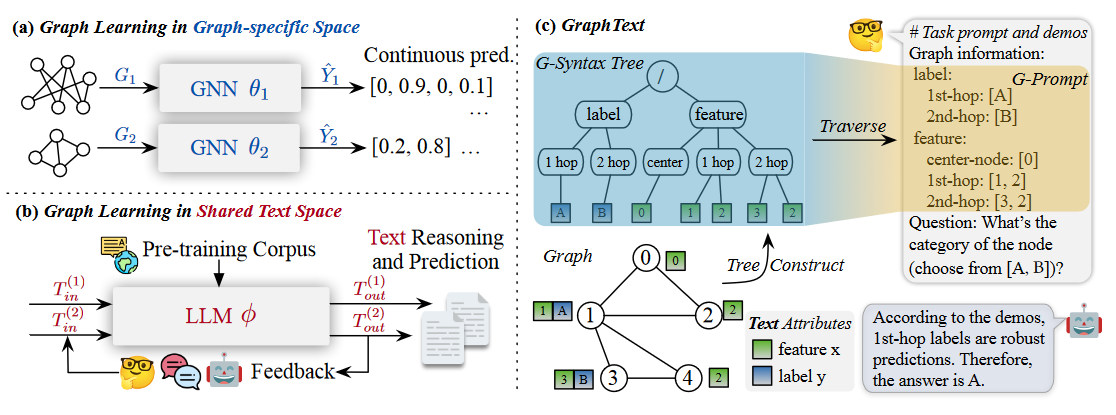

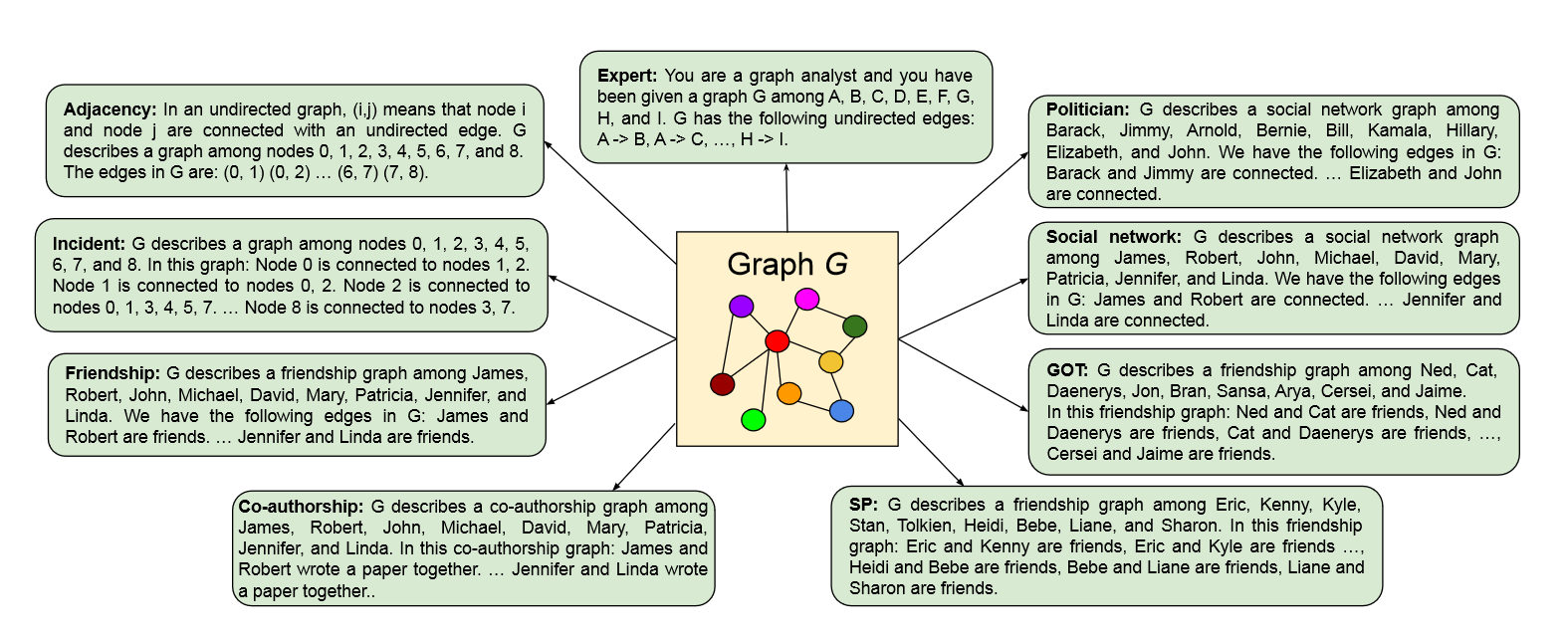

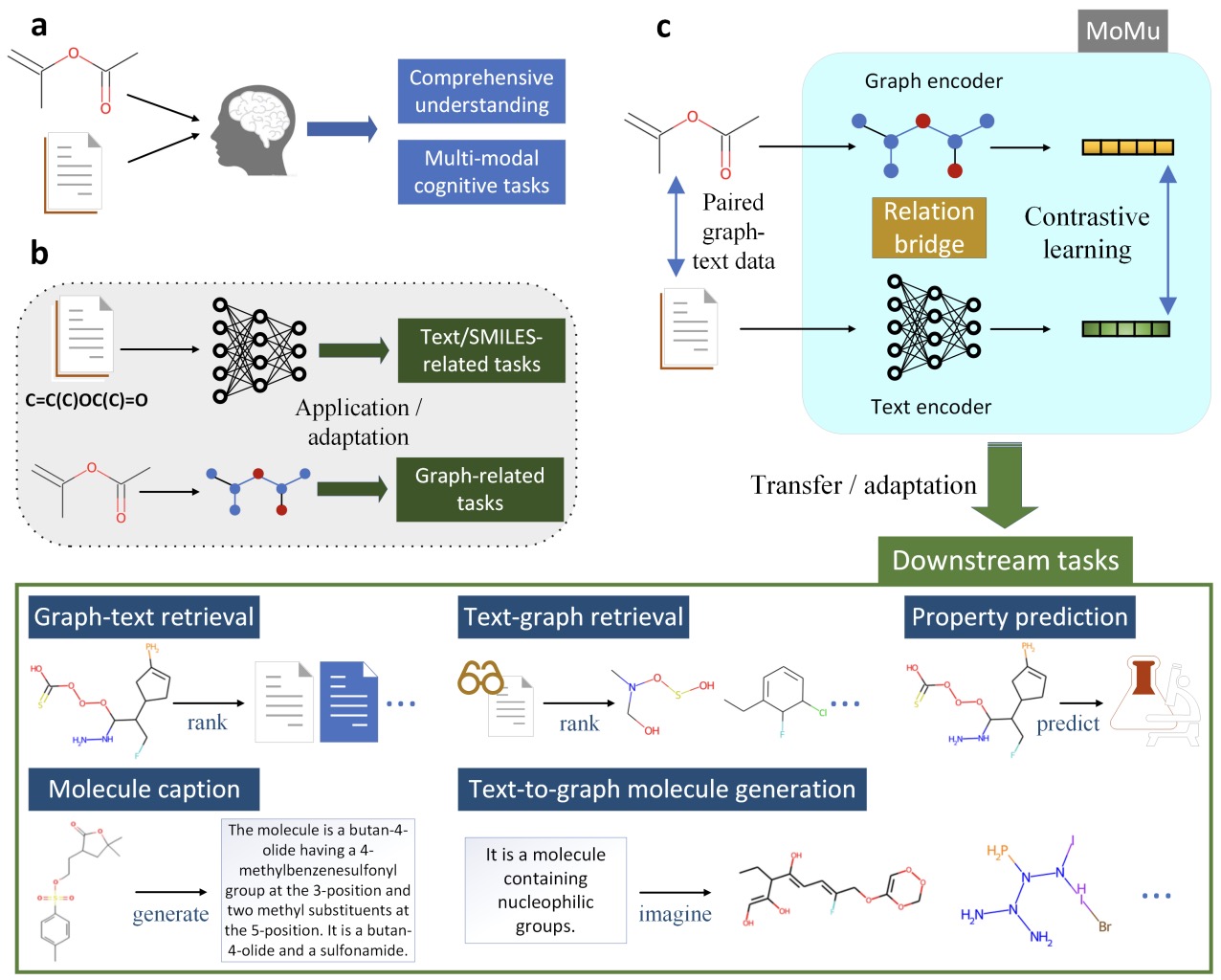

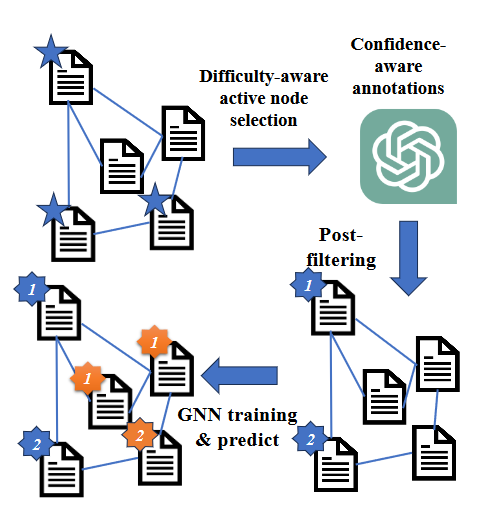

With the help of LLMs, there has been a notable shift in the way we interact with graphs, particularly graphs whose nodes carry text attributes. Integrating LLMs with traditional GNNs can be mutually beneficial and enhance graph learning. GNNs are proficient at capturing structural information, but they primarily rely on semantically constrained embeddings as node features, which limits their ability to express the full complexity of the nodes. By incorporating LLMs, GNNs can be equipped with stronger node features that, combined with message passing, capture both structural and contextual aspects. Conversely, LLMs excel at encoding text but often struggle to capture the structural information present in graph data. Combining GNNs with LLMs leverages the robust textual understanding of LLMs together with the ability of GNNs to capture structural relationships, leading to more comprehensive and powerful graph learning.
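To make this division of labour concrete, here is a minimal sketch under toy assumptions: each node's text is turned into a vector by a text encoder, and one GCN-style propagation step (self-loops plus symmetric degree normalisation) mixes each node's vector with those of its neighbours. `embed_text` is a hypothetical stand-in that hashes tokens instead of calling an LLM, and the learnable weight matrix and nonlinearity of a real GNN layer are deliberately left out.

```python
import hashlib
import math
from typing import Dict, List, Tuple

Vector = List[float]


def embed_text(text: str, dim: int = 8) -> Vector:
    """Hypothetical stand-in for an LLM text encoder.

    Hashes each lower-cased token into one of `dim` buckets and
    L2-normalises the counts. A real pipeline would call a language
    model here instead.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def gcn_propagate(features: Dict[int, Vector],
                  edges: List[Tuple[int, int]]) -> Dict[int, Vector]:
    """One GCN-style step with self-loops and symmetric normalisation.

    h_n = sum over m in N(n) plus n itself of x_m / sqrt(d_n * d_m),
    where each degree d counts the self-loop. No learnable weights.
    """
    neighbours = {n: {n} for n in features}  # start with self-loops
    for u, v in edges:  # treat edges as undirected
        neighbours[u].add(v)
        neighbours[v].add(u)
    degree = {n: len(nbrs) for n, nbrs in neighbours.items()}

    out: Dict[int, Vector] = {}
    for n, nbrs in neighbours.items():
        agg = [0.0] * len(features[n])
        for m in nbrs:
            weight = 1.0 / math.sqrt(degree[n] * degree[m])
            for i, value in enumerate(features[m]):
                agg[i] += weight * value
        out[n] = agg
    return out


# Toy text-attributed graph; node 3 is isolated.
node_text = {
    0: "graph neural networks for citation networks",
    1: "large language models encode text",
    2: "message passing on graphs",
    3: "an unrelated isolated node",
}
edges = [(0, 1), (0, 2)]

x = {n: embed_text(t) for n, t in node_text.items()}  # text -> features
h = gcn_propagate(x, edges)  # features -> structure-aware features
```

In this split the text encoder supplies the contextual signal and the propagation step supplies the structural one; an isolated node simply keeps its own text features.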

Figure 1. The overview of Graph Meets LLMs.

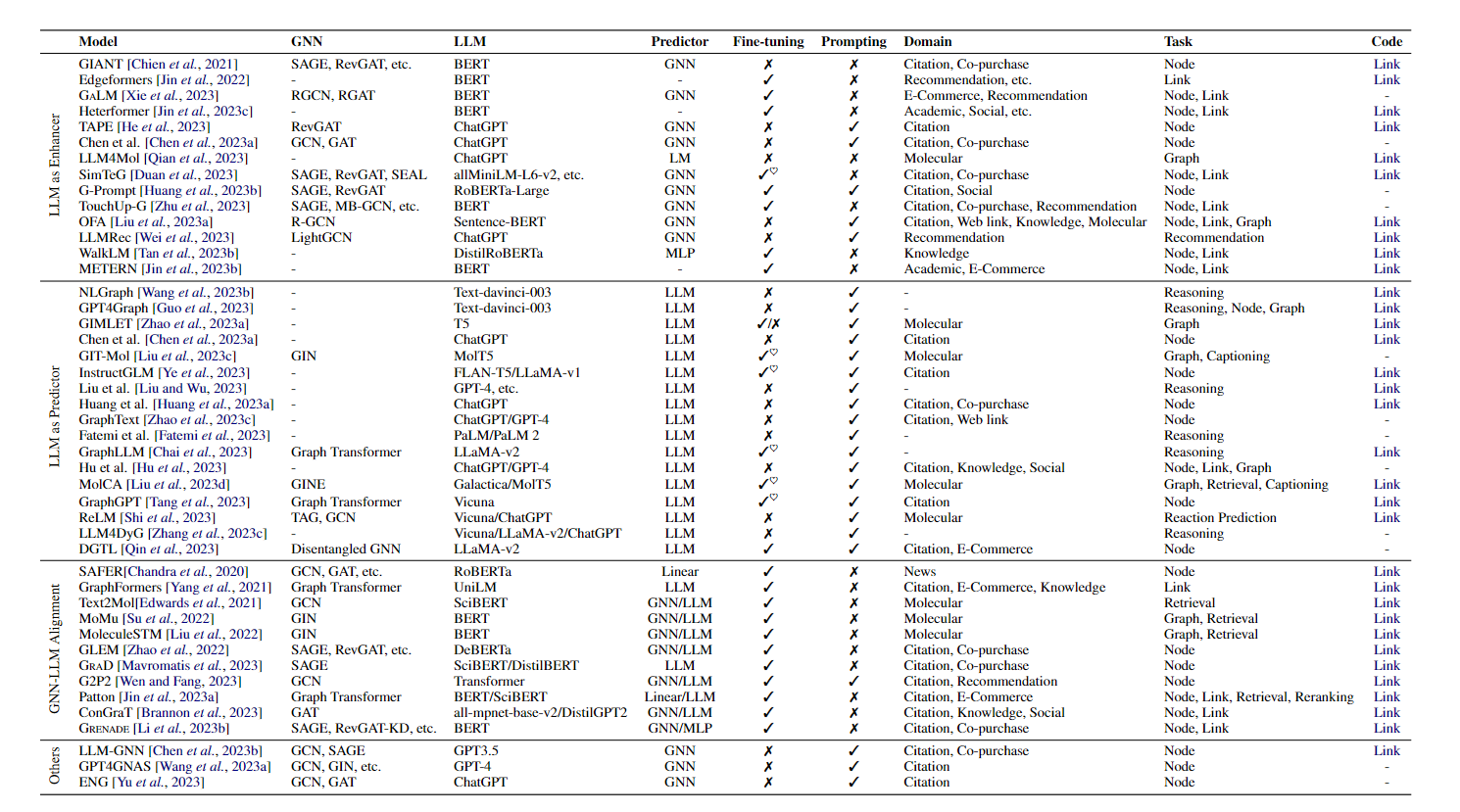

Table 1. A summary of models that leverage LLMs to assist graph-related tasks in the literature, ordered by their release time. Fine-tuning denotes whether it is necessary to fine-tune the parameters of LLMs, and ♥ indicates that models employ parameter-efficient fine-tuning (PEFT) strategies, such as LoRA and prefix tuning. Prompting indicates the use of text-formatted prompts in LLMs, constructed manually or automatically. Acronyms in Task: Node refers to node-level tasks; Link refers to link-level tasks; Graph refers to graph-level tasks; Reasoning refers to Graph Reasoning; Retrieval refers to Graph-Text Retrieval; Captioning refers to Graph Captioning.

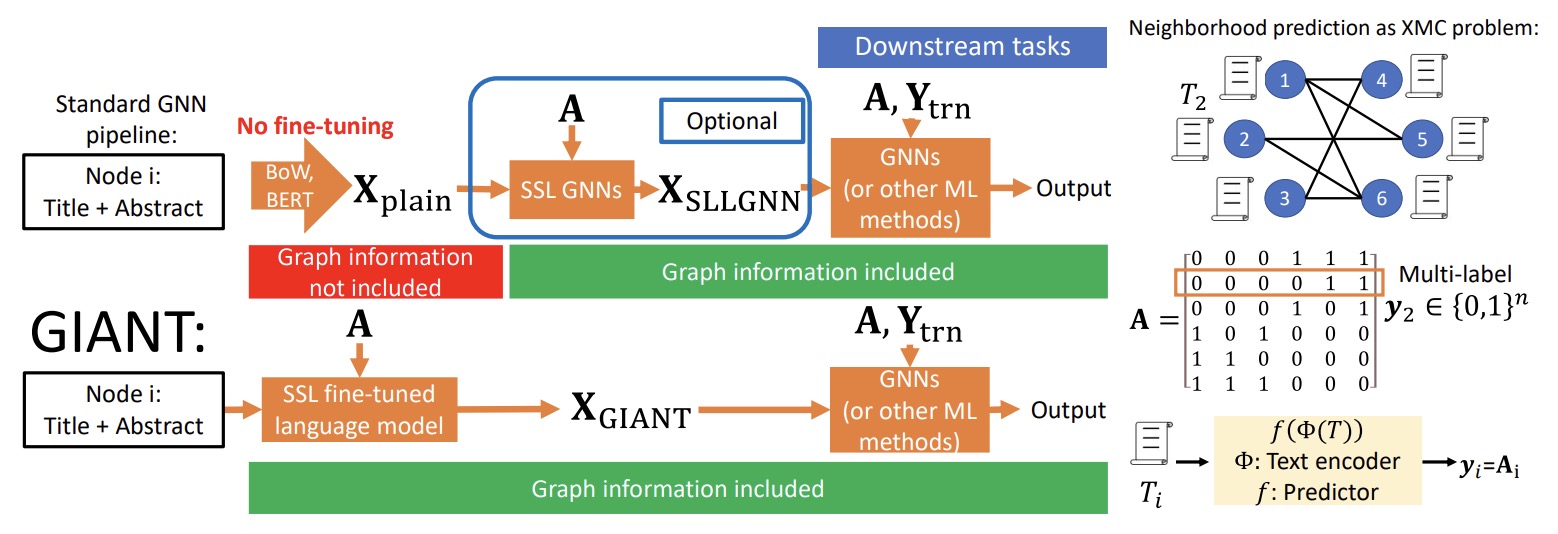
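The Prompting column in Table 1 refers to text-formatted prompts. As a hedged illustration of what a manually constructed prompt could look like, the sketch below serialises a small graph (node texts plus an edge list) into a node-classification question for an LLM. The function name `graph_to_prompt`, the template wording, and the label set are hypothetical; the papers listed below use their own, often more elaborate, encodings.

```python
from typing import Dict, List, Tuple


def graph_to_prompt(node_text: Dict[int, str],
                    edges: List[Tuple[int, int]],
                    target: int,
                    labels: List[str]) -> str:
    """Serialise a small text-attributed graph into a node-classification prompt.

    The wording is an illustrative, hand-written template (an assumption),
    not a prompt taken from any specific paper.
    """
    lines = ["The following graph has nodes with text descriptions."]
    for node in sorted(node_text):  # list node texts in a stable order
        lines.append(f"Node {node}: {node_text[node]}")
    lines.append("Edges: " + ", ".join(f"({u}, {v})" for u, v in edges))
    lines.append(
        f"Question: Which category does node {target} belong to? "
        f"Choose one of: {', '.join(labels)}."
    )
    lines.append("Answer:")  # the LLM is expected to complete this line
    return "\n".join(lines)


prompt = graph_to_prompt(
    node_text={0: "A paper on graph neural networks.",
               1: "A paper on large language models."},
    edges=[(0, 1)],
    target=1,
    labels=["Machine Learning", "Databases"],
)
print(prompt)
```

An automatically constructed prompt would replace this fixed template with one that is searched or learned, but the output is still plain text passed to the LLM.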

- (2022.03) [ICLR' 2022] Node Feature Extraction by Self-Supervised Multi-scale Neighborhood Prediction [Paper | Code]

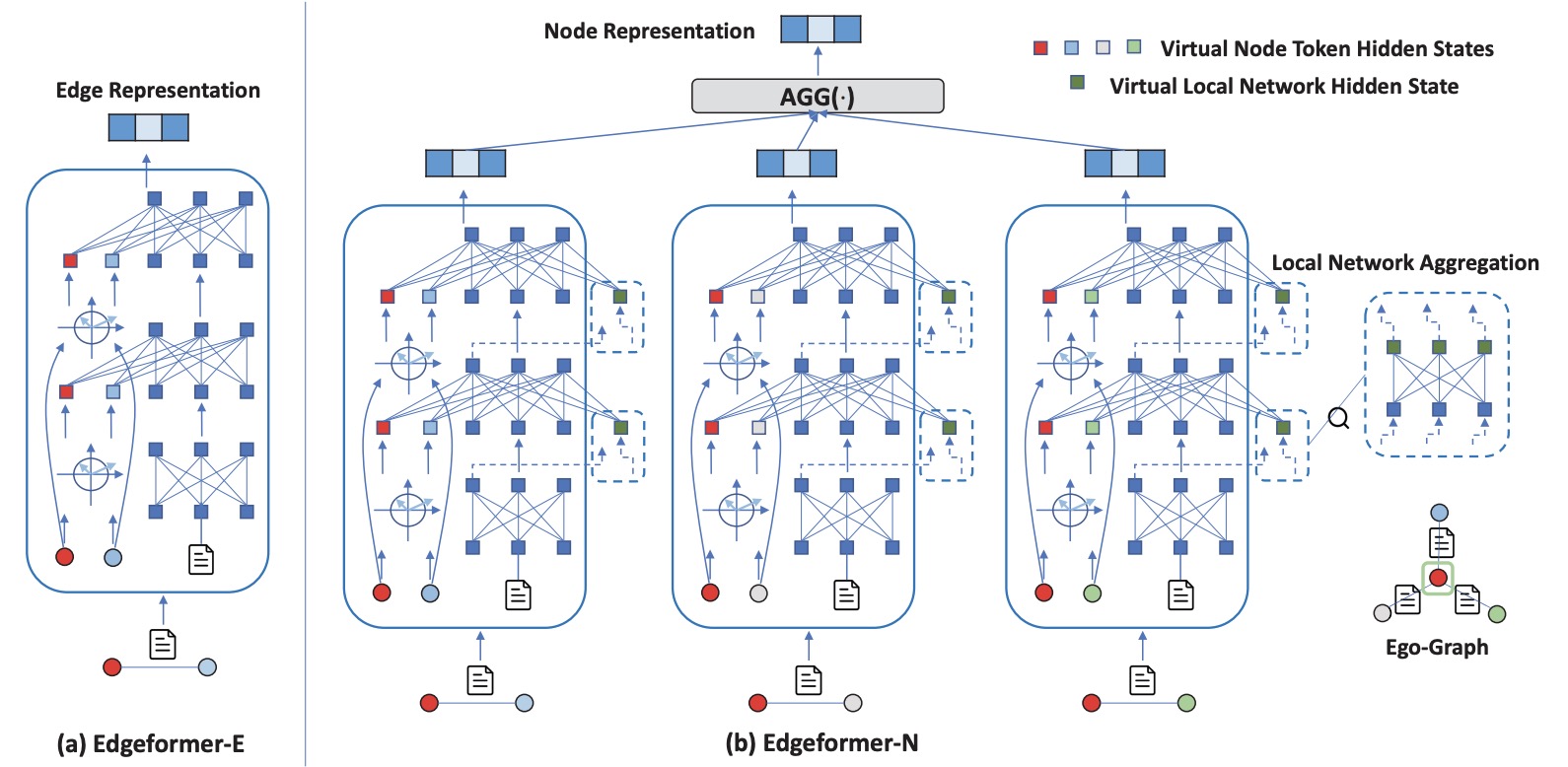

- (2023.02) [ICLR' 2023] Edgeformers: Graph-Empowered Transformers for Representation Learning on Textual-Edge Networks [Paper | Code]

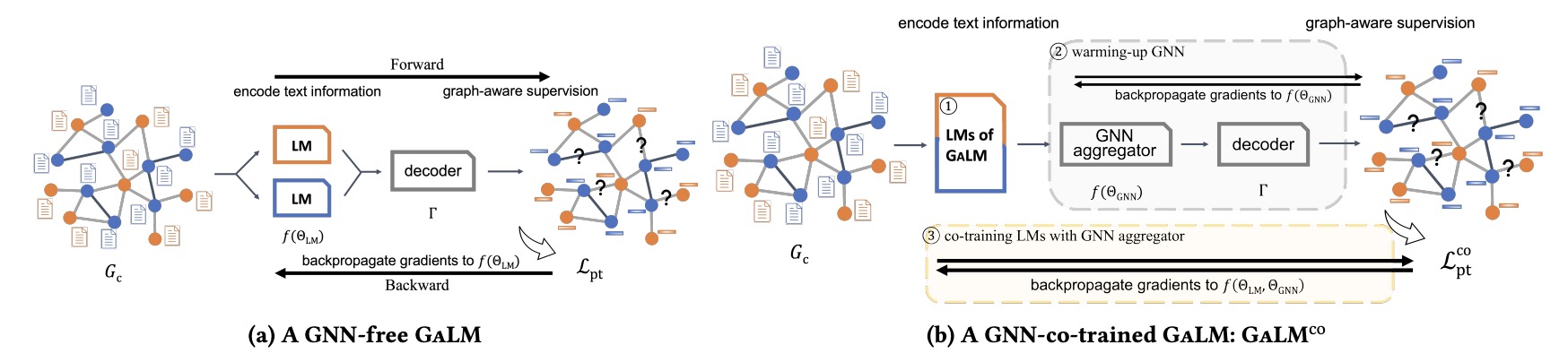

- (2023.05) [KDD' 2023] Graph-Aware Language Model Pre-Training on a Large Graph Corpus Can Help Multiple Graph Applications [Paper]

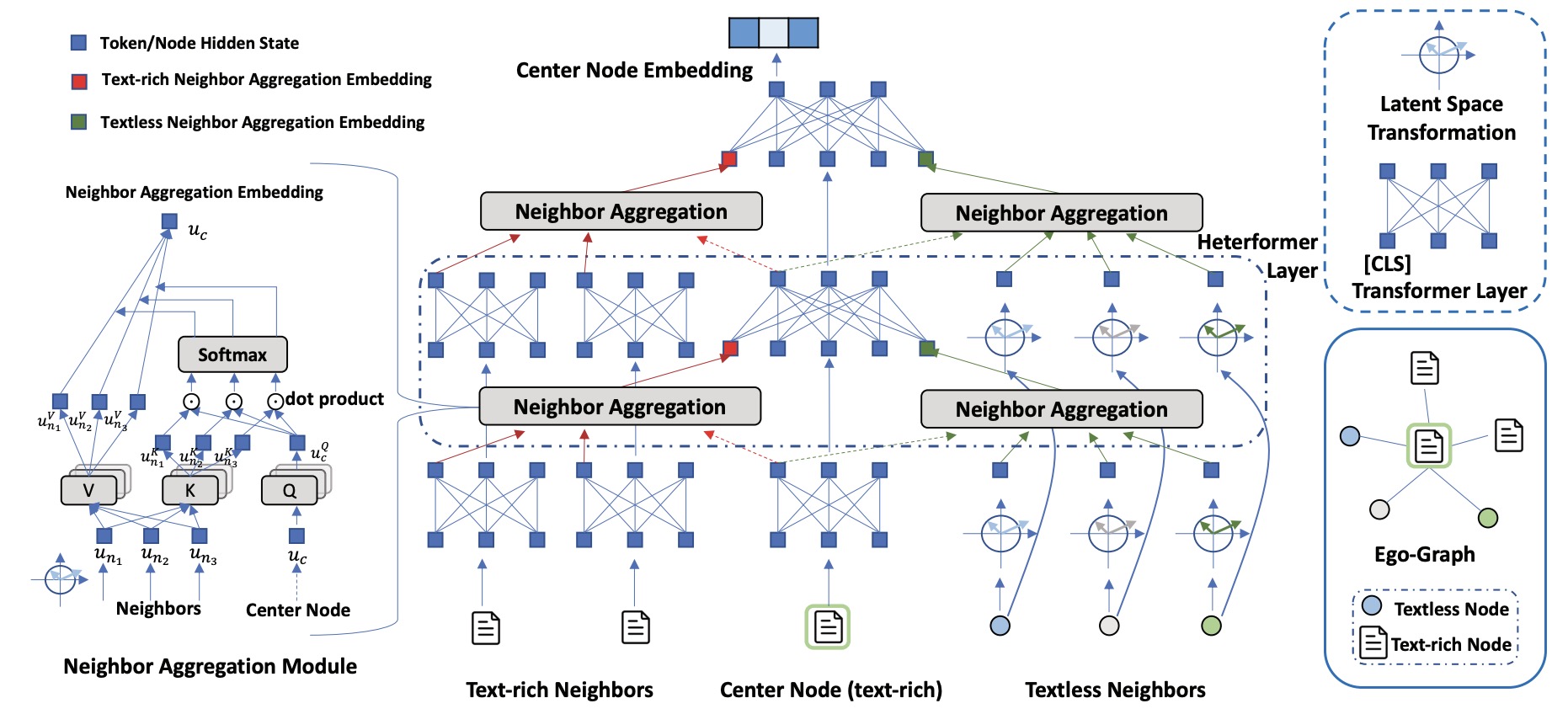

- (2023.06) [KDD' 2023] Heterformer: Transformer-based Deep Node Representation Learning on Heterogeneous Text-Rich Networks [Paper | Code]

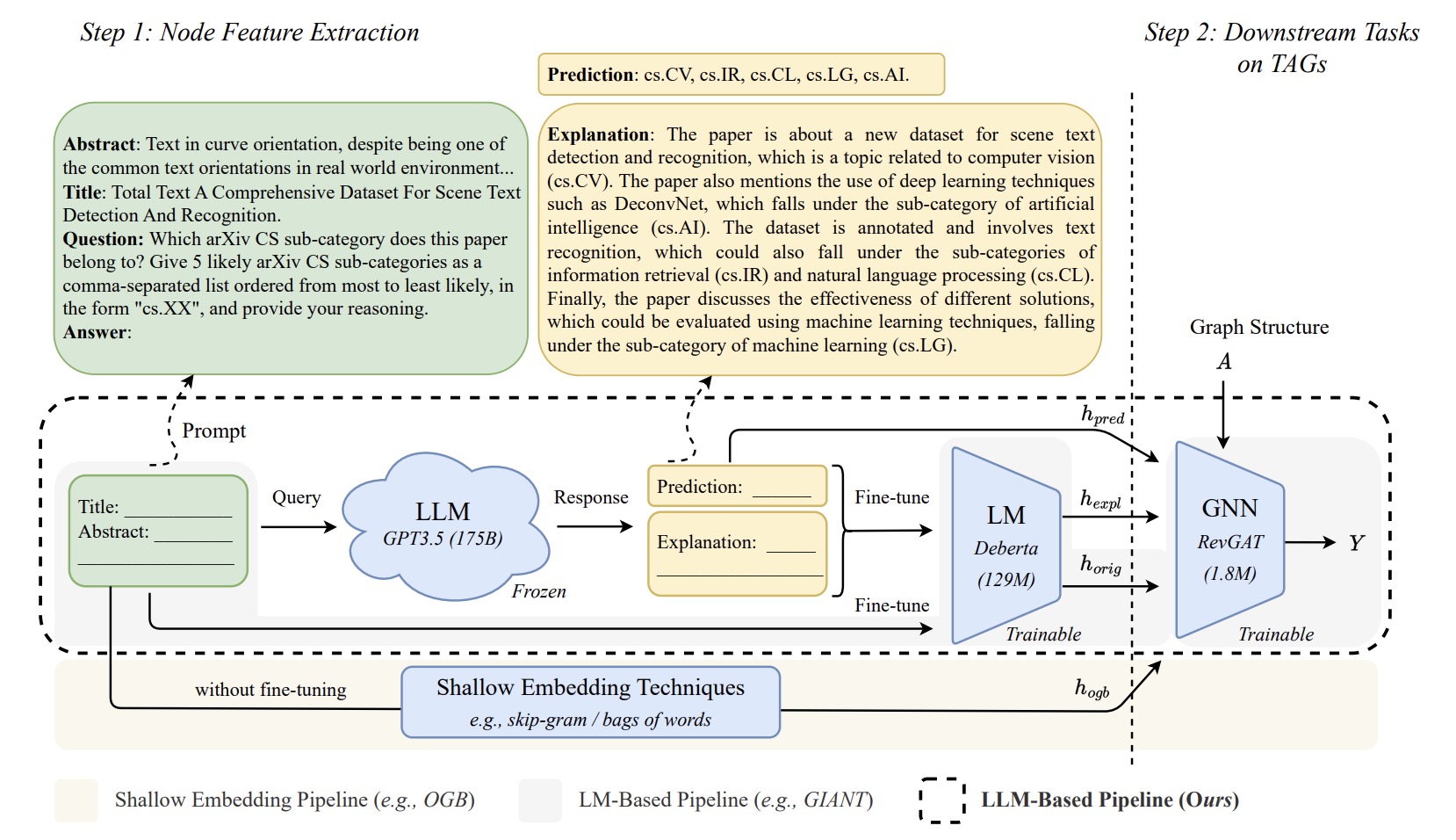

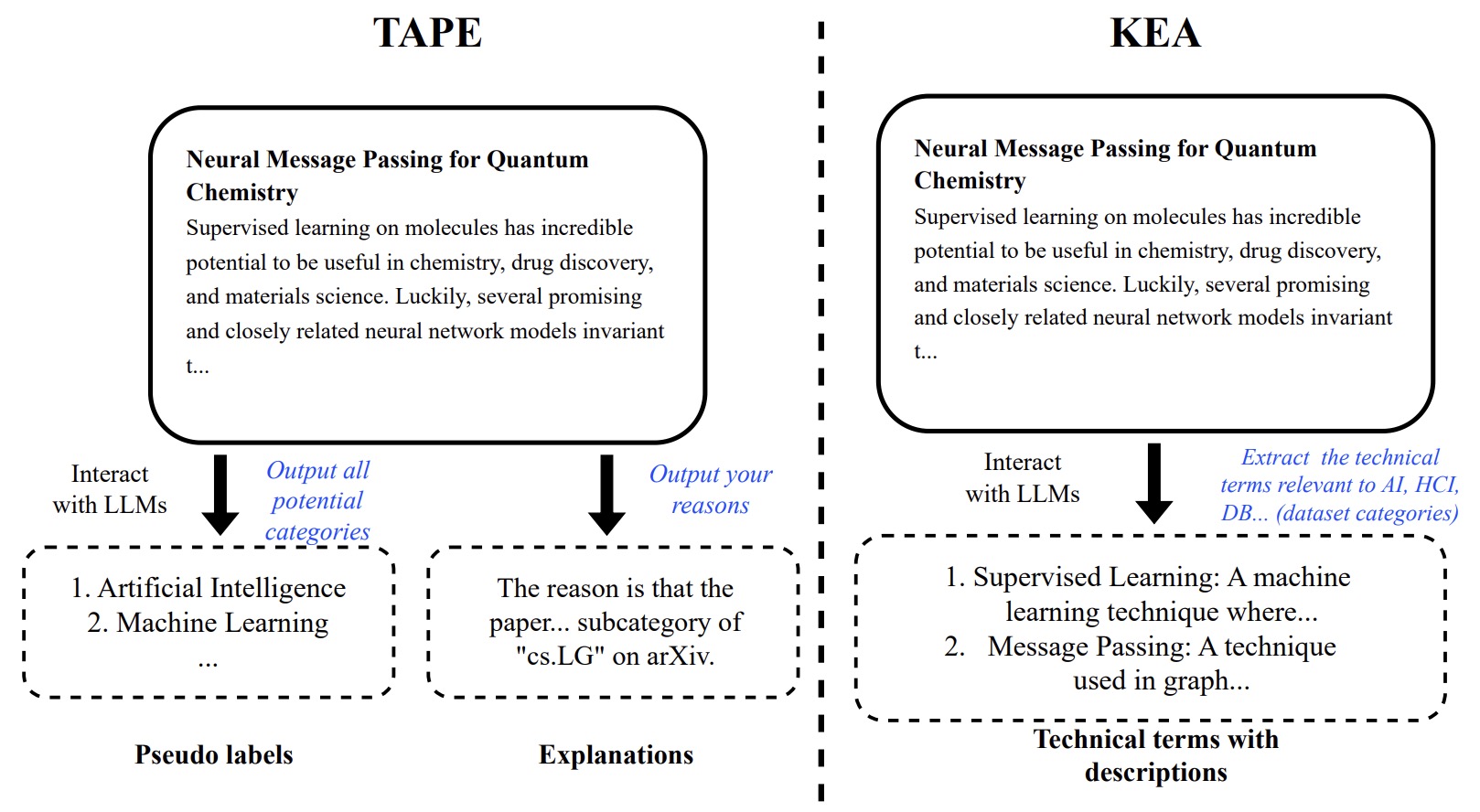

- (2023.05) [Arxiv' 2023] Harnessing Explanations: LLM-to-LM Interpreter for Enhanced Text-Attributed Graph Representation Learning [Paper | Code]

- (2023.08) [Arxiv' 2023] Exploring the Potential of Large Language Models (LLMs) in Learning on Graphs [Paper]

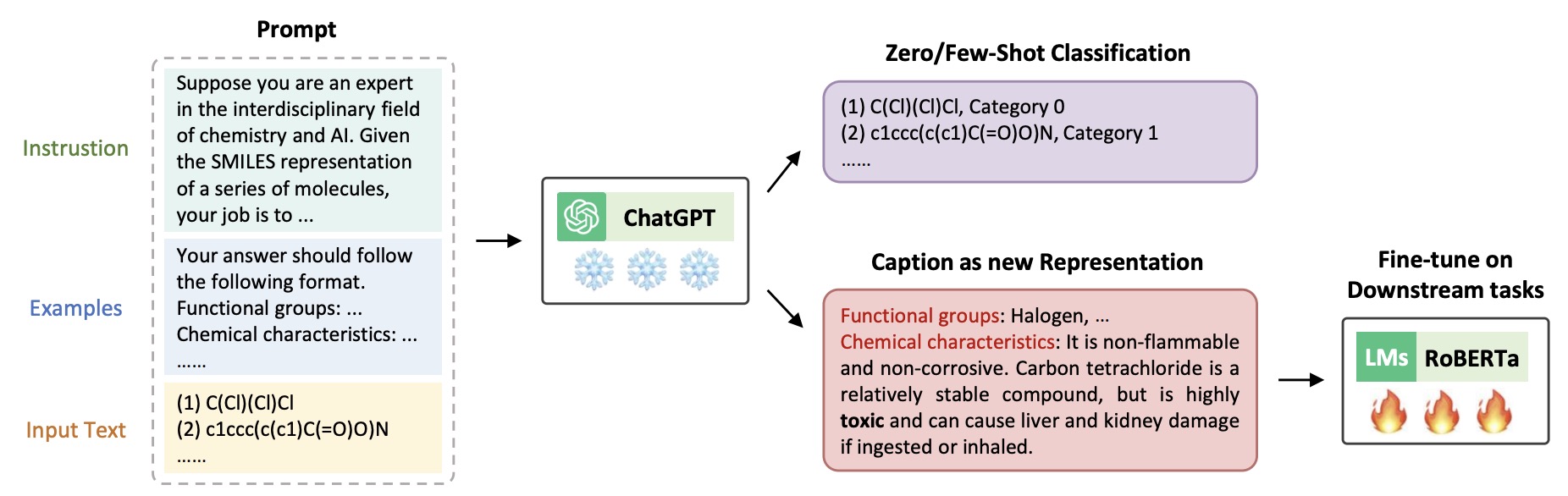

- (2023.07) [Arxiv' 2023] Can Large Language Models Empower Molecular Property Prediction? [Paper | Code]

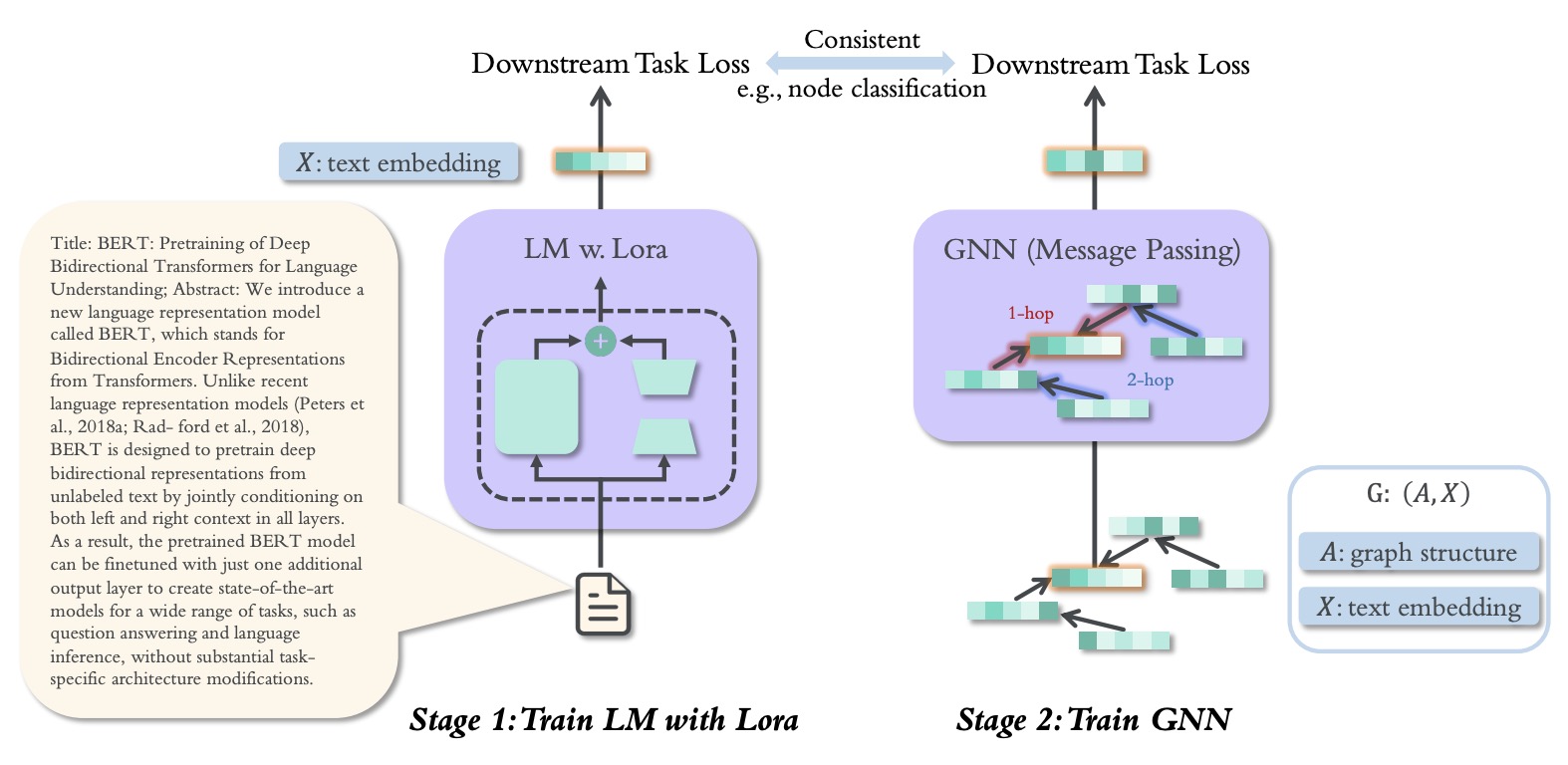

- (2023.08) [Arxiv' 2023] SimTeG: A Frustratingly Simple Approach Improves Textual Graph Learning [Paper | Code]

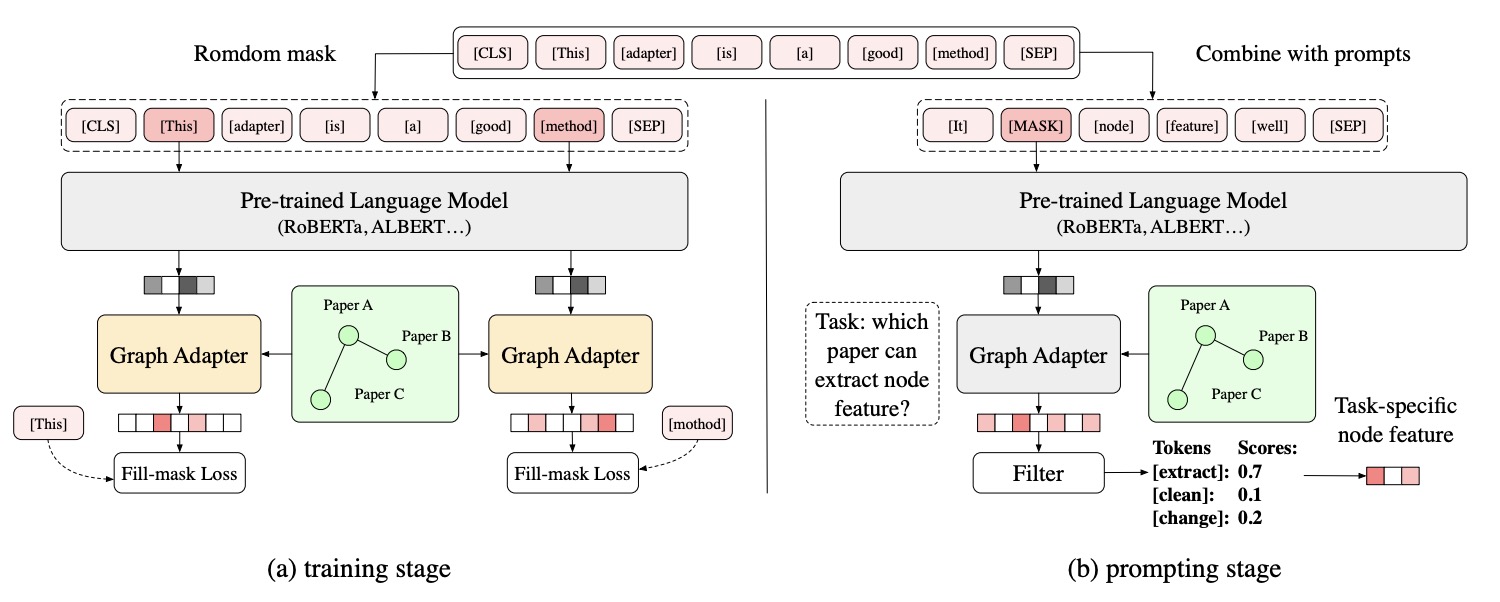

- (2023.09) [Arxiv' 2023] Prompt-based Node Feature Extractor for Few-shot Learning on Text-Attributed Graphs [Paper]

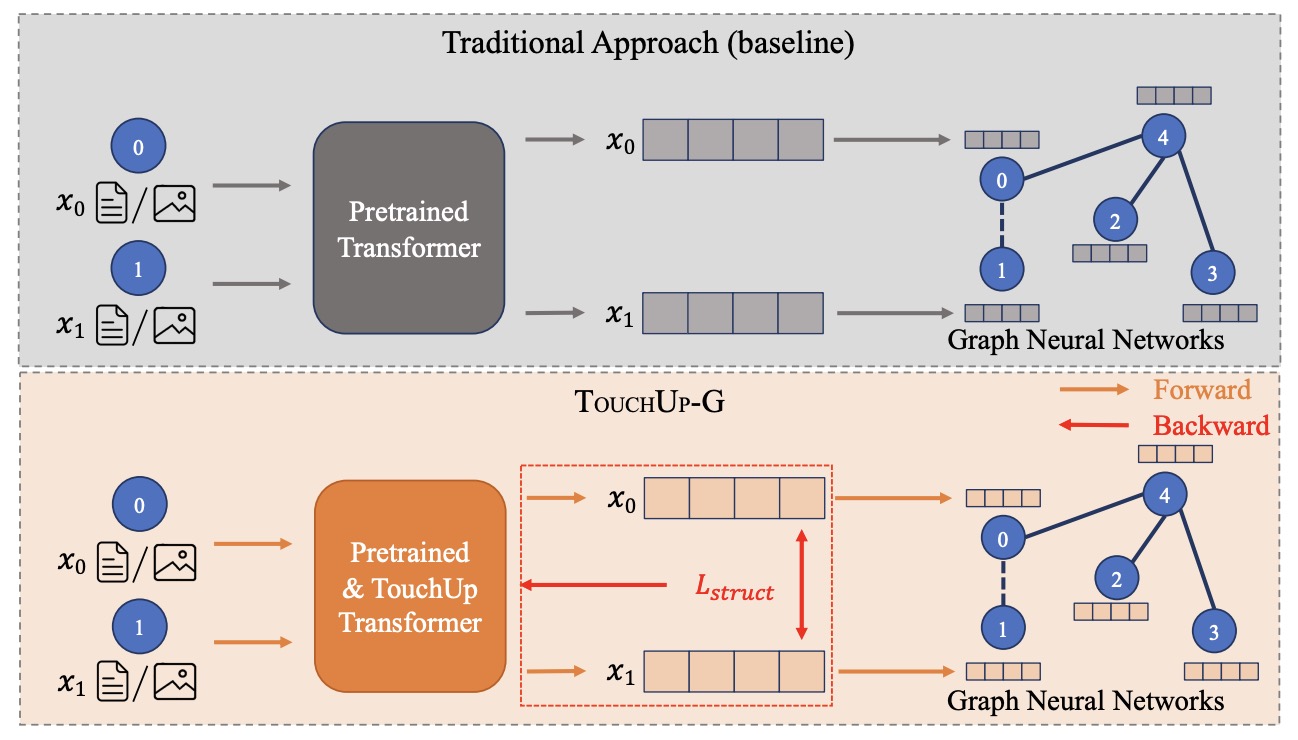

- (2023.09) [Arxiv' 2023] TouchUp-G: Improving Feature Representation through Graph-Centric Finetuning [Paper]

- (2023.09) [Arxiv' 2023] One for All: Towards Training One Graph Model for All Classification Tasks [Paper | Code]

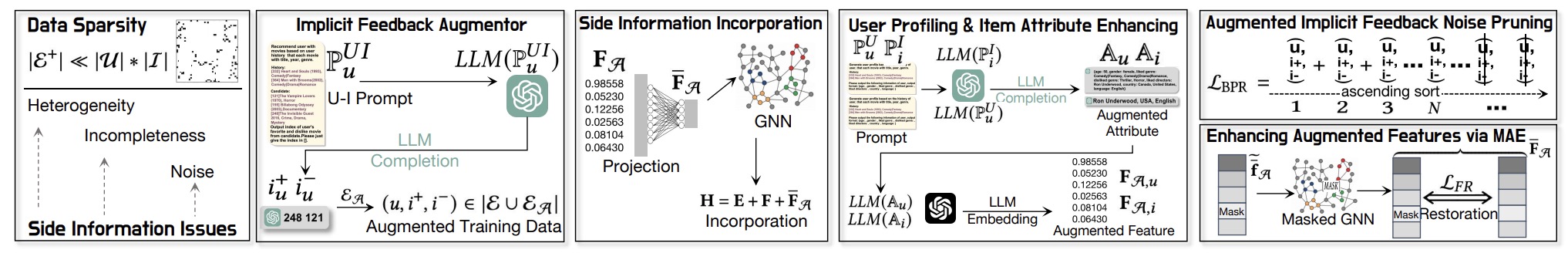

- (2023.11) [WSDM' 2024] LLMRec: Large Language Models with Graph Augmentation for Recommendation [Paper | Code]

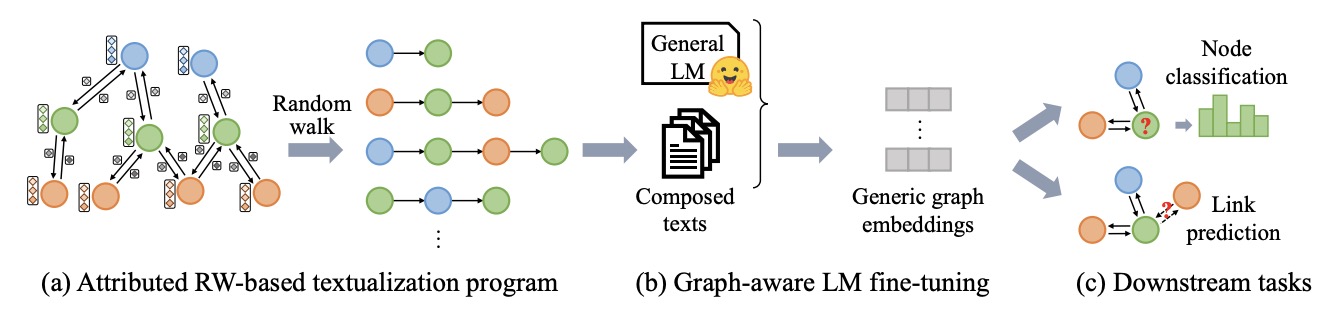

- (2023.11) [NeurIPS' 2023] WalkLM: A Uniform Language Model Fine-tuning Framework for Attributed Graph Embedding [Paper | Code]

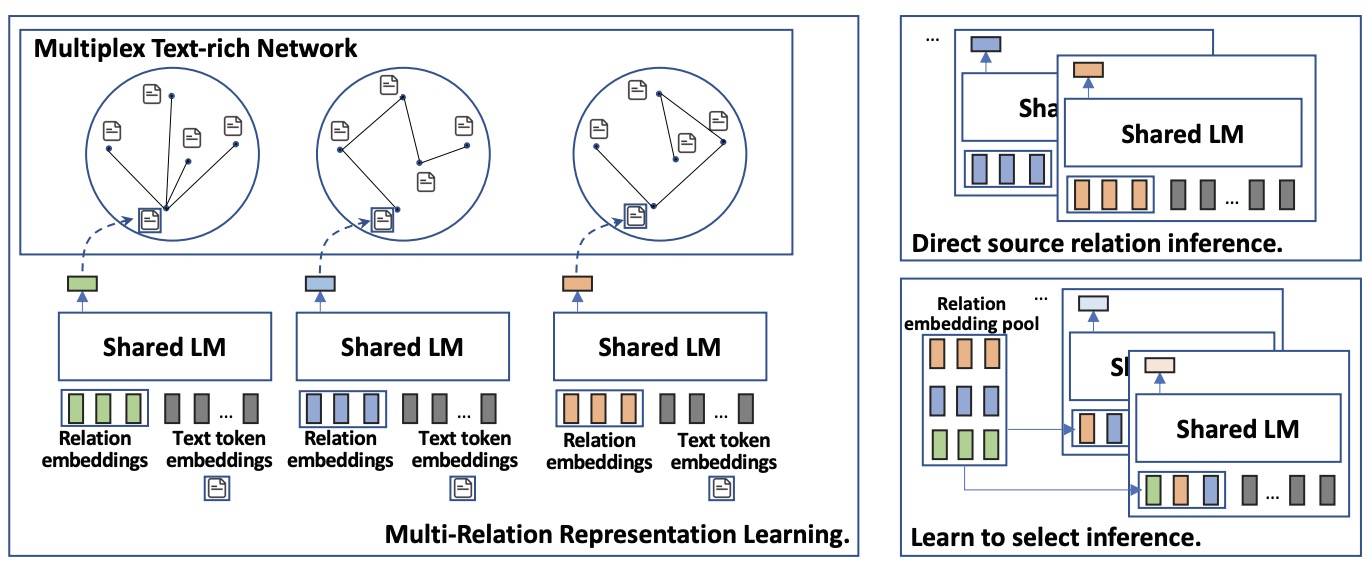

- (2023.10) [Arxiv' 2023] Learning Multiplex Embeddings on Text-rich Networks with One Text Encoder [Paper | Code]

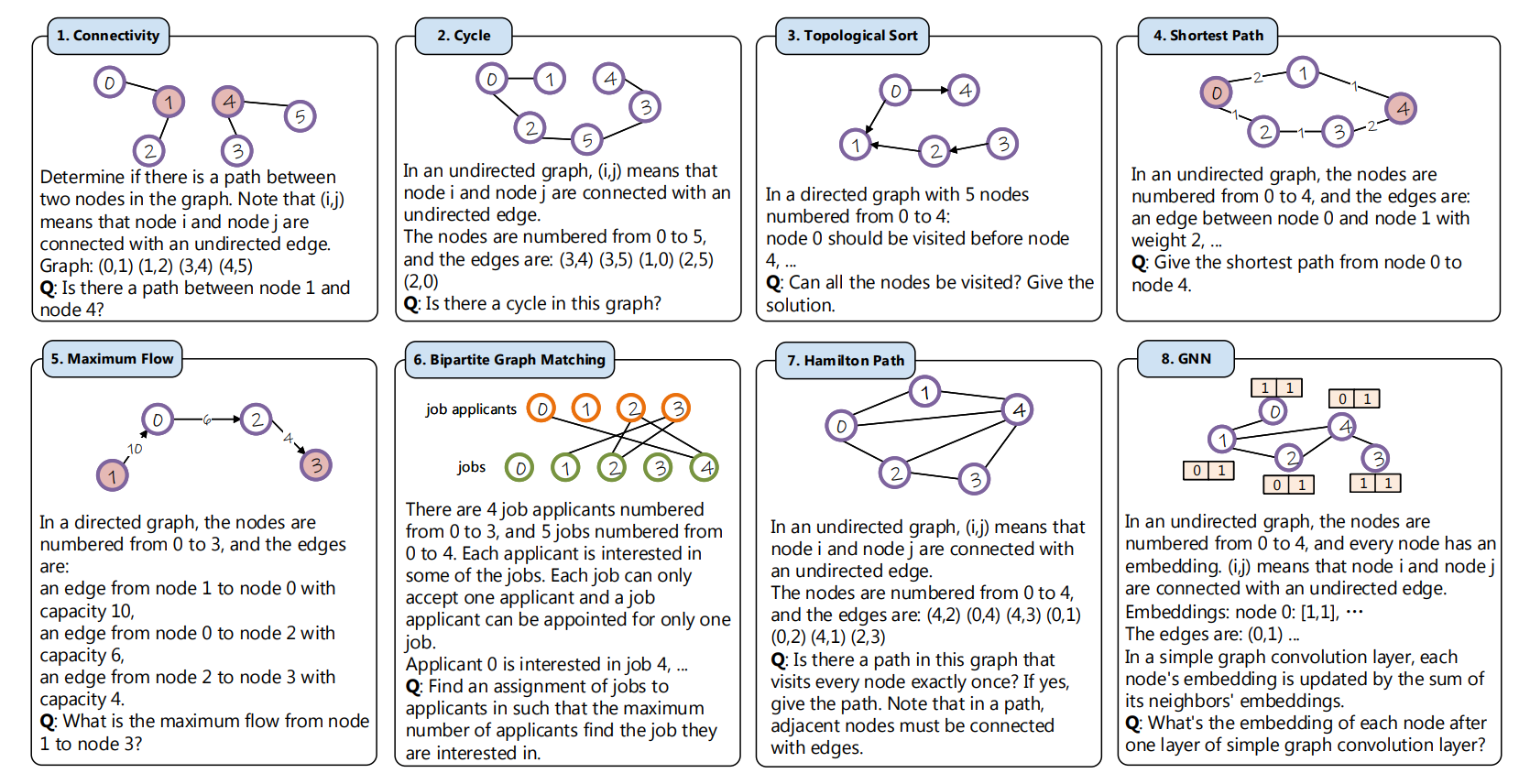

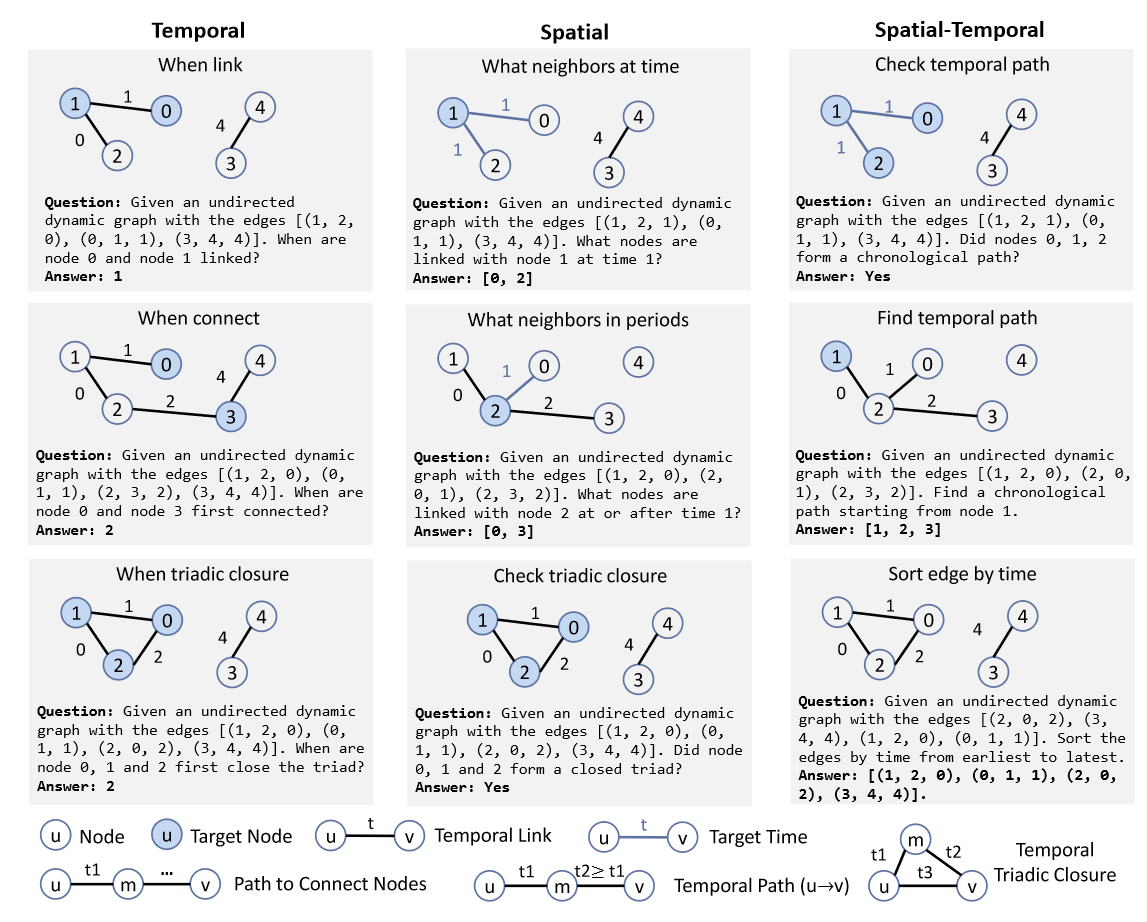

- (2023.05) [NeurIPS' 2023] Can Language Models Solve Graph Problems in Natural Language? [Paper | Code]

- (2023.05) [Arxiv' 2023] GPT4Graph: Can Large Language Models Understand Graph Structured Data? An Empirical Evaluation and Benchmarking [Paper | Code]

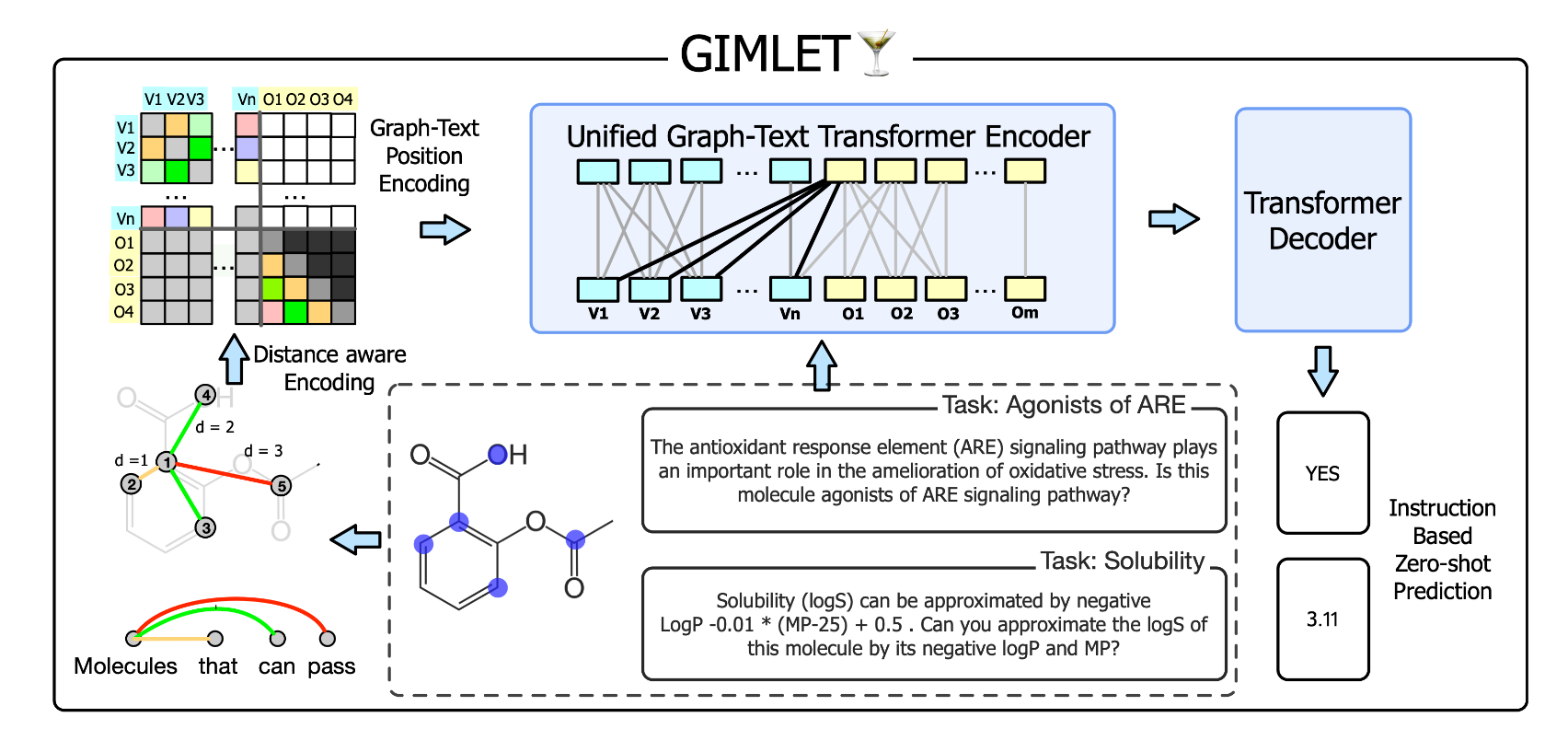

- (2023.06) [NeurIPS' 2023] GIMLET: A Unified Graph-Text Model for Instruction-Based Molecule Zero-Shot Learning [Paper | Code]

- (2023.07) [Arxiv' 2023] Exploring the Potential of Large Language Models (LLMs) in Learning on Graphs [Paper | Code]

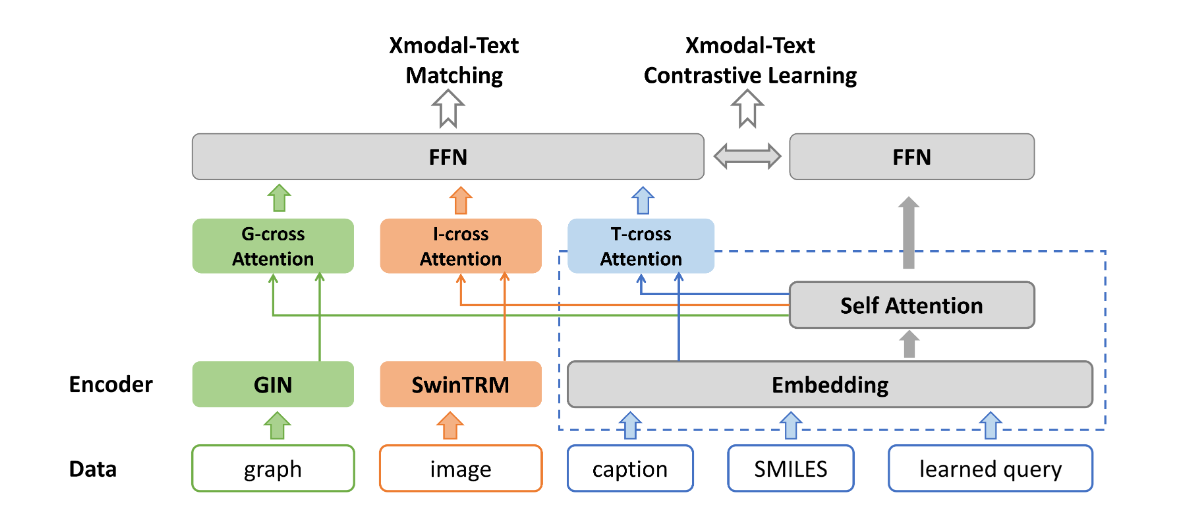

- (2023.08) [Arxiv' 2023] GIT-Mol: A Multi-modal Large Language Model for Molecular Science with Graph, Image, and Text [Paper]

- (2023.08) [Arxiv' 2023] Natural Language is All a Graph Needs [Paper | Code]

- (2023.08) [Arxiv' 2023] Evaluating Large Language Models on Graphs: Performance Insights and Comparative Analysis [Paper | Code]

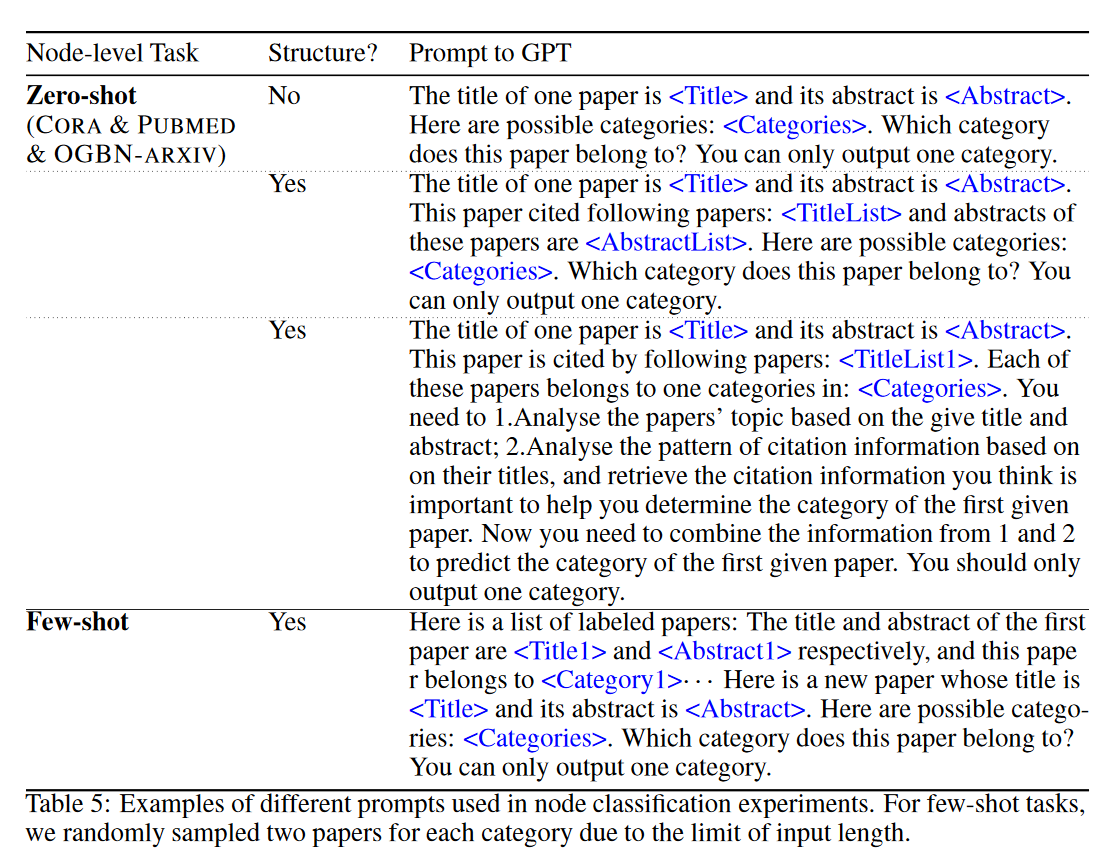

- (2023.09) [Arxiv' 2023] Can LLMs Effectively Leverage Graph Structural Information: When and Why [Paper | Code]

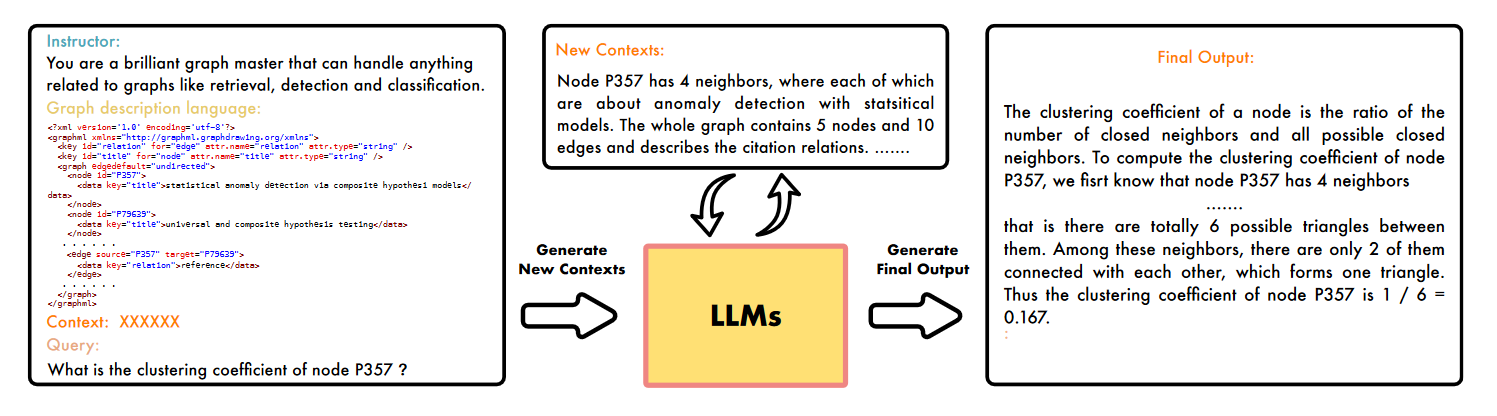

- (2023.10) [Arxiv' 2023] GraphText: Graph Reasoning in Text Space [Paper]

- (2023.10) [Arxiv' 2023] Talk like a Graph: Encoding Graphs for Large Language Models [Paper]

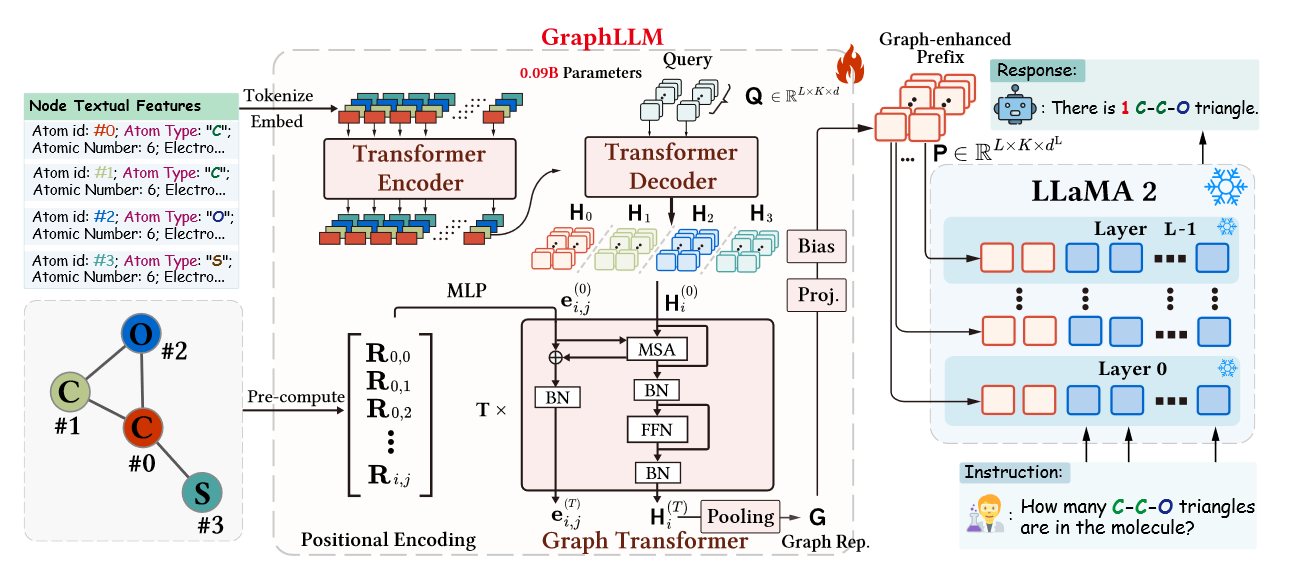

- (2023.10) [Arxiv' 2023] GraphLLM: Boosting Graph Reasoning Ability of Large Language Model [Paper | Code]

- (2023.10) [Arxiv' 2023] Beyond Text: A Deep Dive into Large Language Model [Paper]

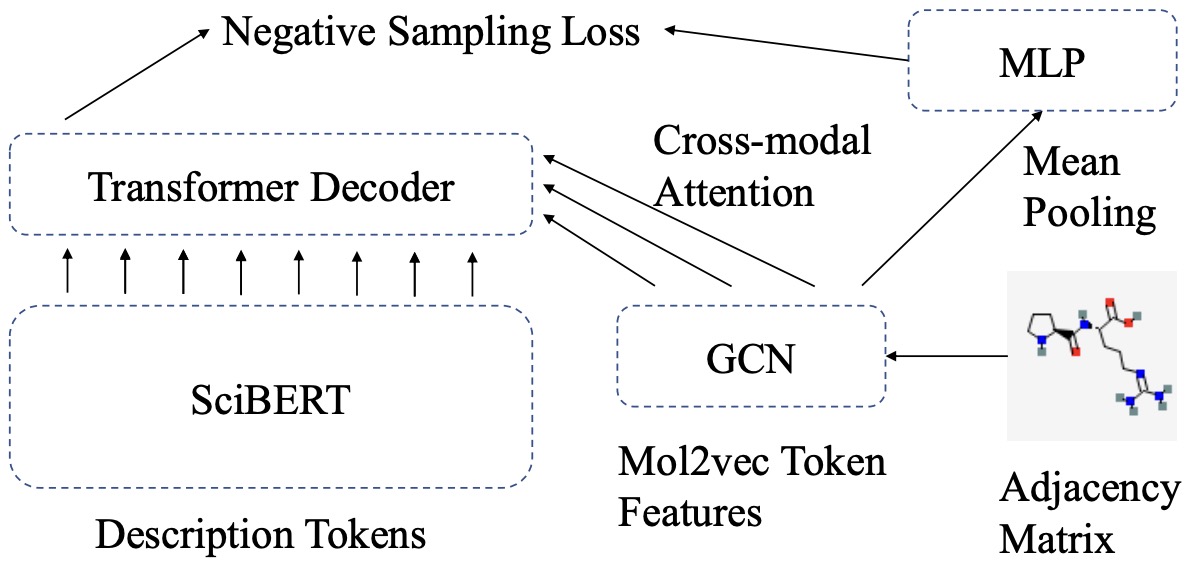

- (2023.10) [EMNLP' 2023] MolCA: Molecular Graph-Language Modeling with Cross-Modal Projector and Uni-Modal Adapter [Paper | Code]

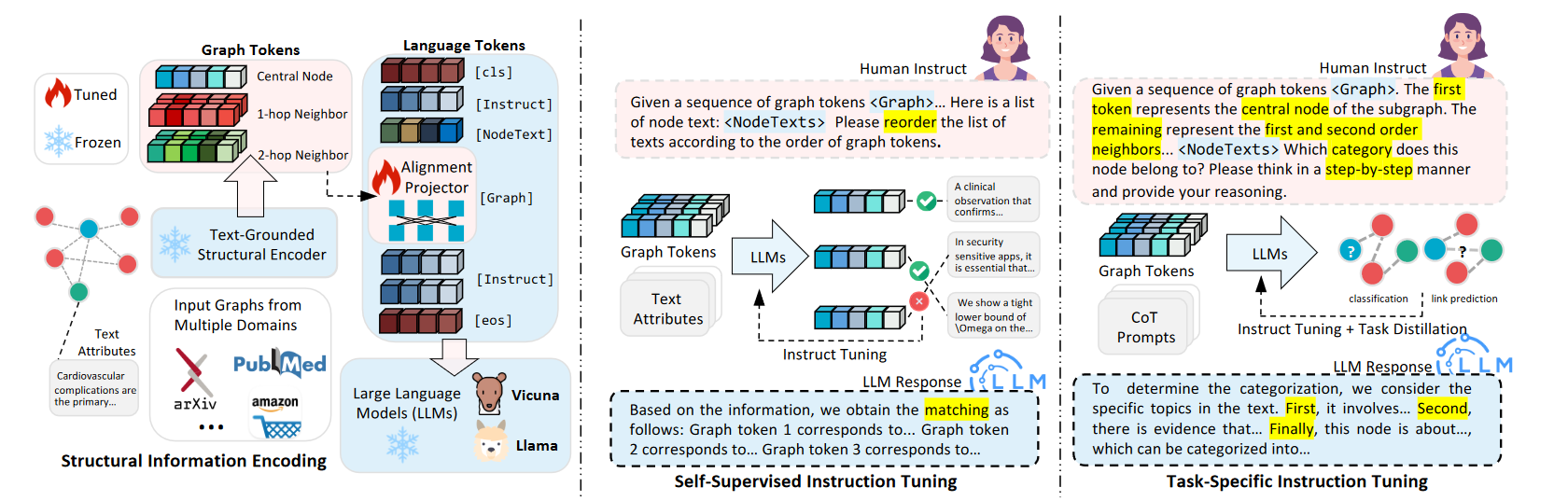

- (2023.10) [Arxiv' 2023] GraphGPT: Graph Instruction Tuning for Large Language Models [Paper | Code]

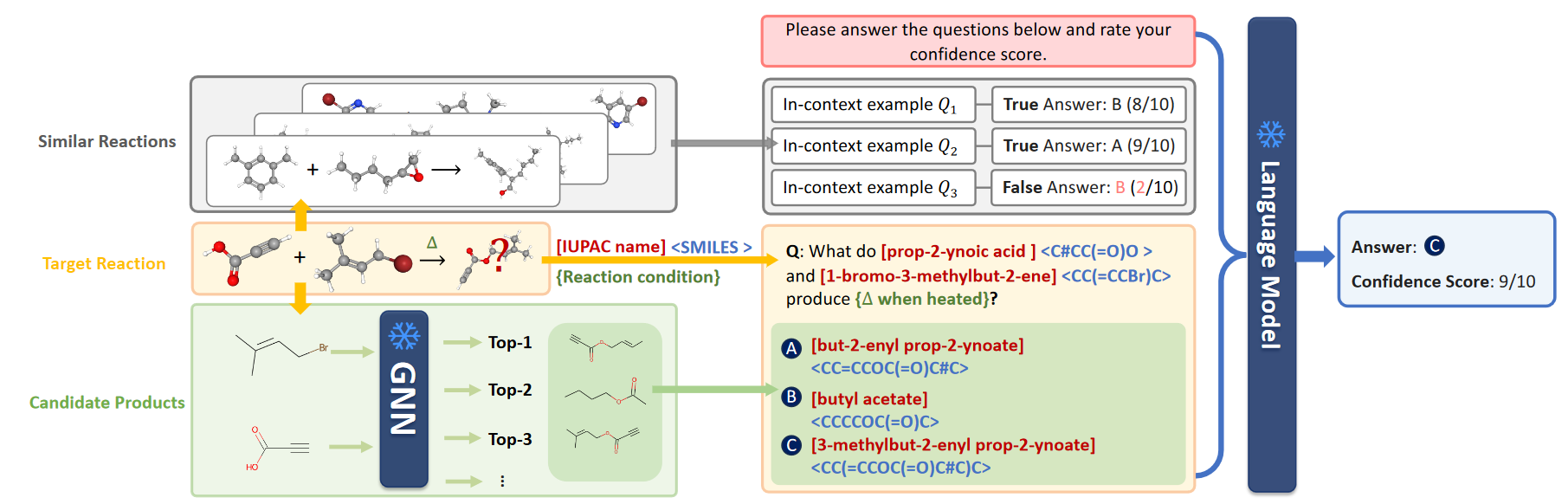

- (2023.10) [EMNLP' 2023] ReLM: Leveraging Language Models for Enhanced Chemical Reaction Prediction [Paper | Code]

- (2023.10) [Arxiv' 2023] LLM4DyG: Can Large Language Models Solve Problems on Dynamic Graphs? [Paper]

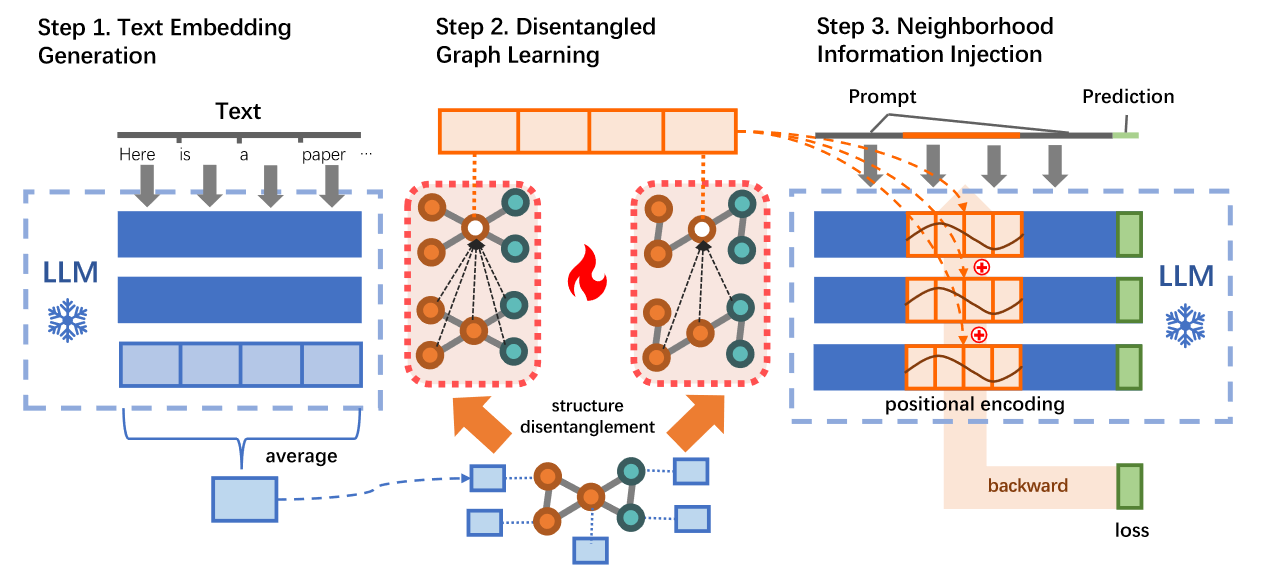

- (2023.10) [Arxiv' 2023] Disentangled Representation Learning with Large Language Models for Text-Attributed Graphs [Paper]

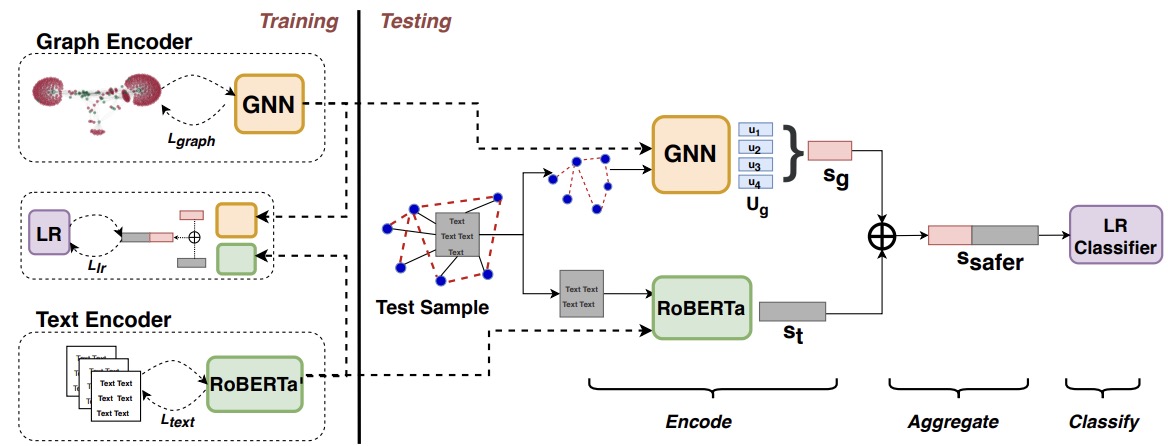

- (2020.08) [Arxiv' 2020] Graph-based Modeling of Online Communities for Fake News Detection [Paper | Code]

- (2021.05) [NeurIPS' 2021] GraphFormers: GNN-nested Transformers for Representation Learning on Textual Graph [Paper | Code]

- (2021.11) [EMNLP' 2021] Text2Mol: Cross-Modal Molecule Retrieval with Natural Language Queries [Paper | Code]

- (2022.09) [Arxiv' 2022] A Molecular Multimodal Foundation Model Associating Molecule Graphs with Natural Language [Paper | Code]

- (2022.10) [ICLR' 2023] Learning on Large-scale Text-attributed Graphs via Variational Inference [Paper | Code]

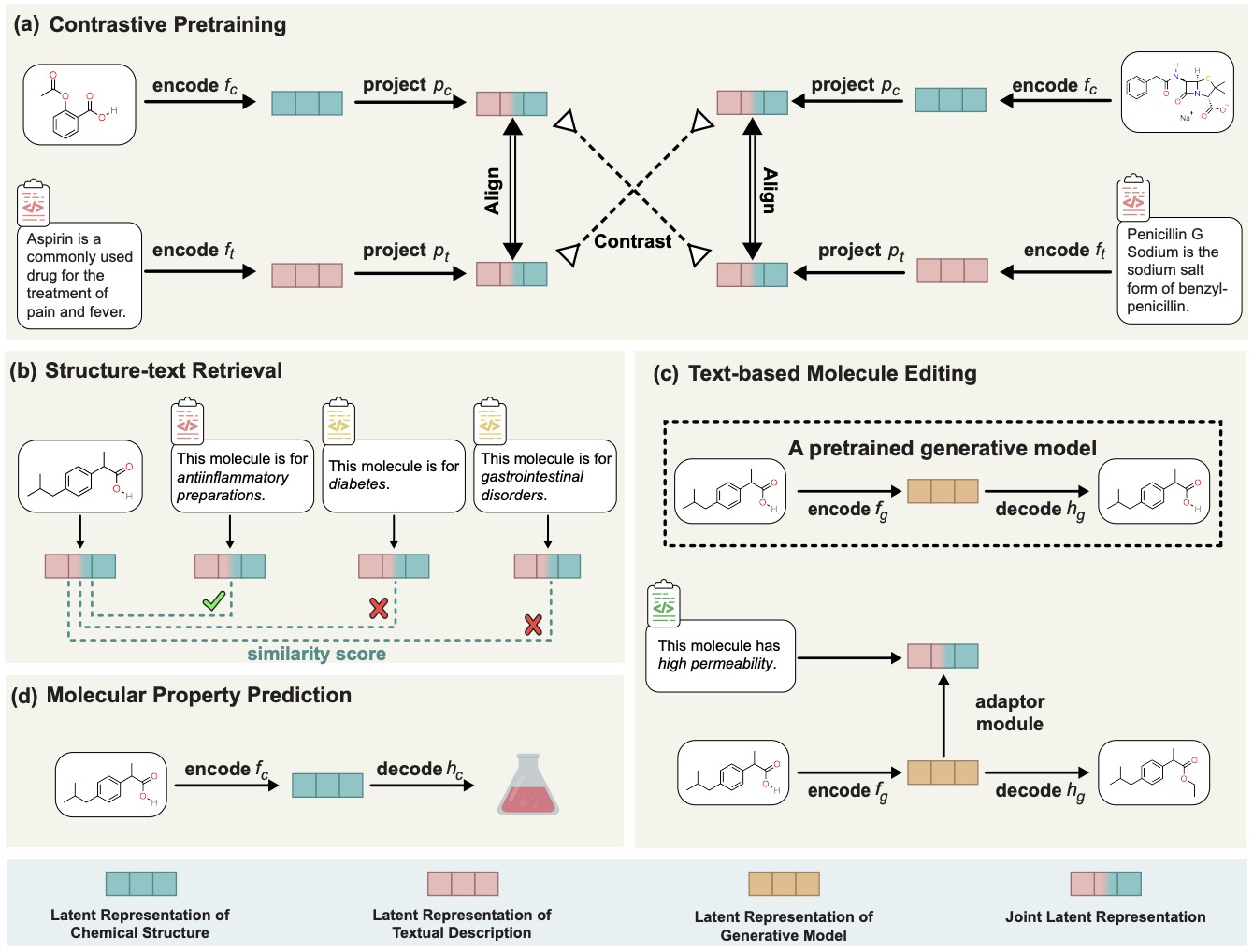

- (2022.12) [NMI' 2023] Multi-modal Molecule Structure-text Model for Text-based Editing and Retrieval [Paper | Code]

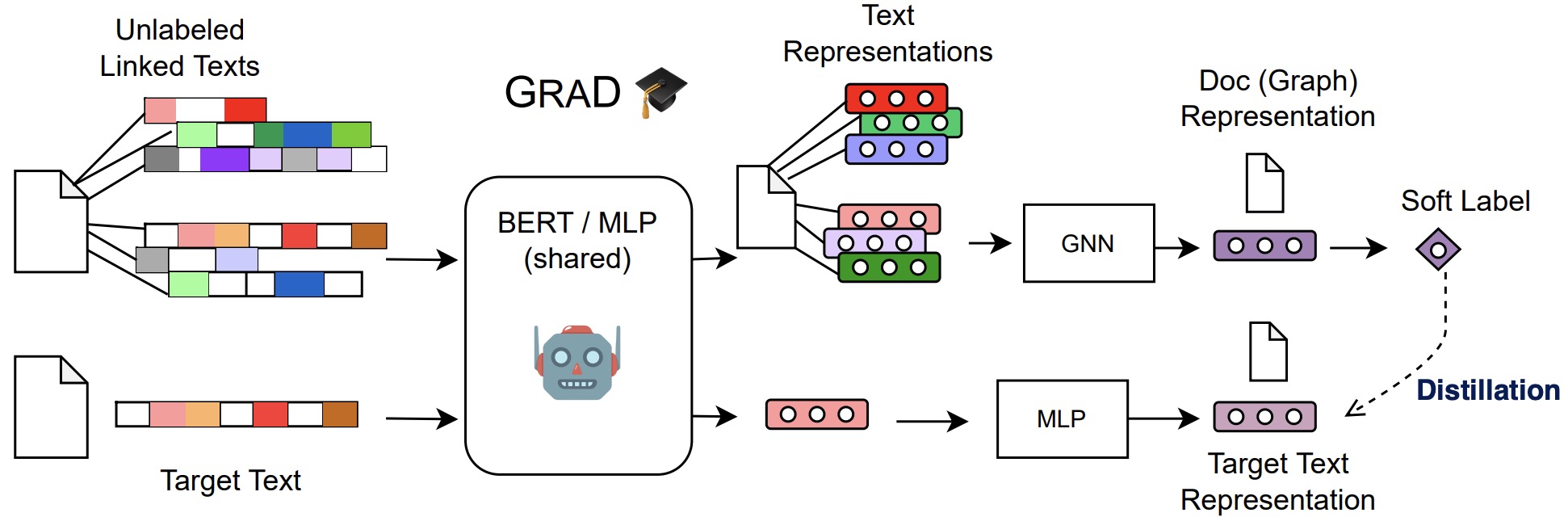

- (2023.04) [Arxiv' 2023] Train Your Own GNN Teacher: Graph-Aware Distillation on Textual Graphs [Paper | Code]

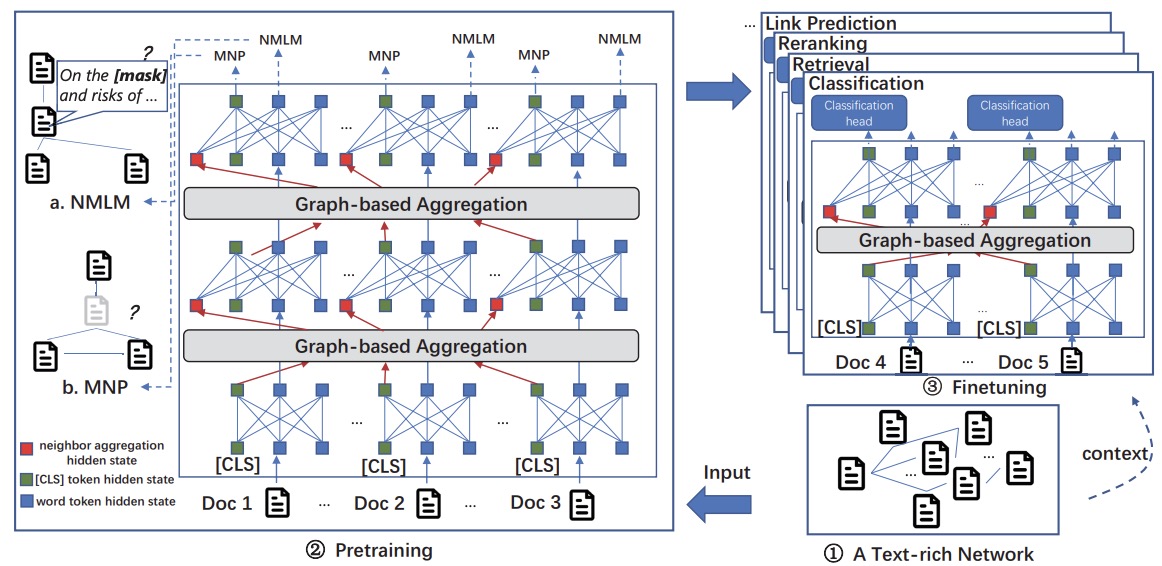

- (2023.05) [ACL' 2023] PATTON: Language Model Pretraining on Text-Rich Networks [Paper | Code]

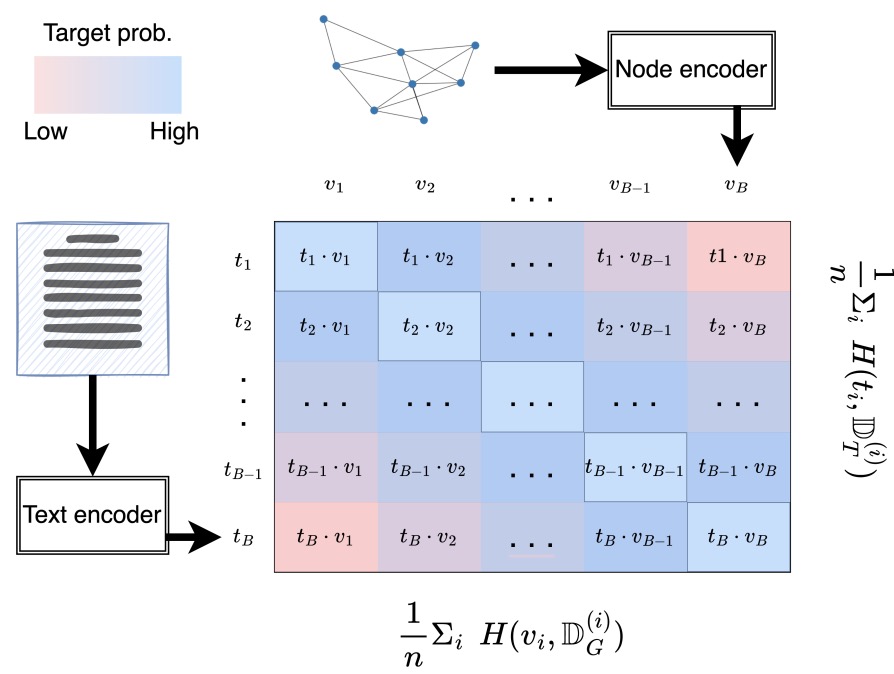

- (2023.05) [Arxiv' 2023] ConGraT: Self-Supervised Contrastive Pretraining for Joint Graph and Text Embeddings [Paper | Code]

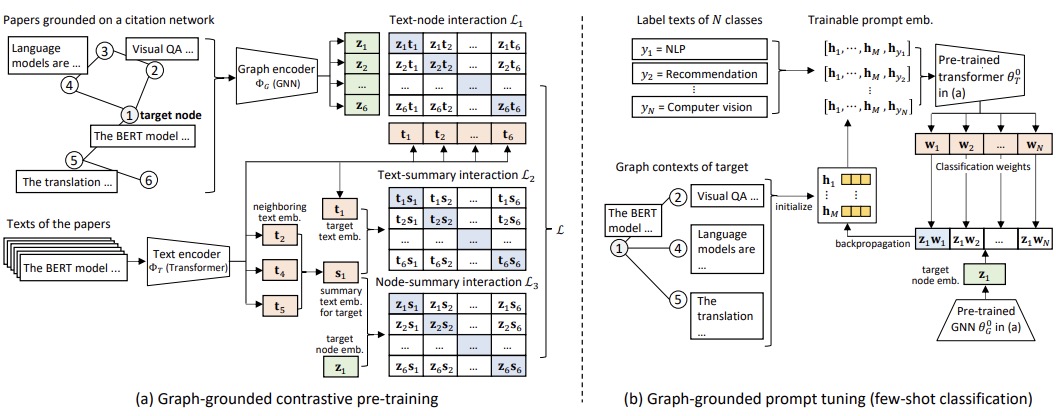

- (2023.07) [Arxiv' 2023] Prompt Tuning on Graph-augmented Low-resource Text Classification [Paper | Code]

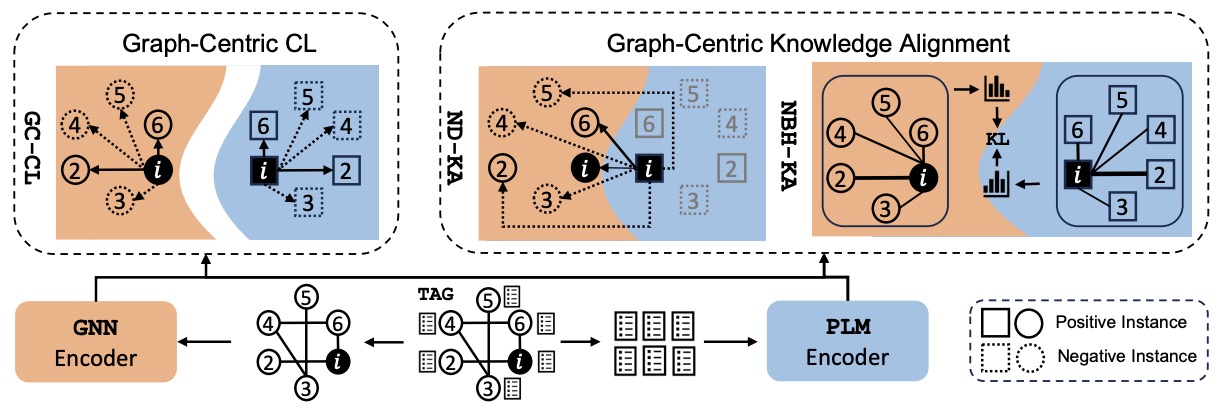

- (2023.10) [Arxiv' 2023] GRENADE: Graph-Centric Language Model for Self-Supervised Representation Learning on Text-Attributed Graphs [Paper | Code]

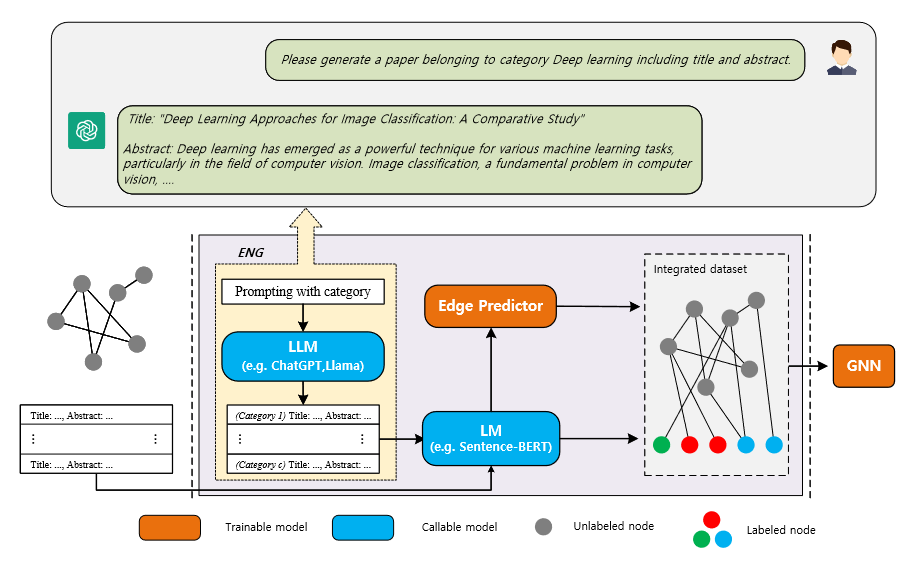

- (2023.10) [Arxiv' 2023] Label-free Node Classification on Graphs with Large Language Models (LLMs) [Paper | Code]

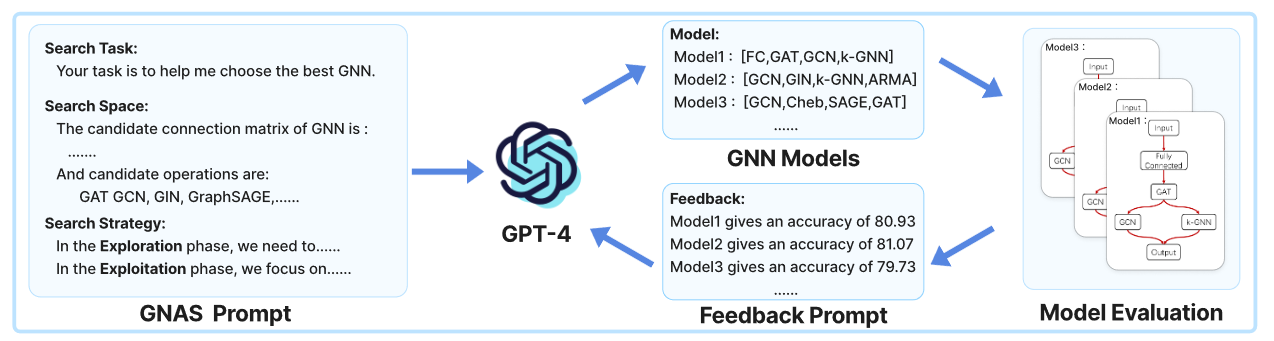

- (2023.10) [Arxiv' 2023] Graph Neural Architecture Search with GPT-4 [Paper]

- (2023.10) [Arxiv' 2023] Empower Text-Attributed Graphs Learning with Large Language Models (LLMs) [Paper]

We note that several other repositories also summarize papers on the integration of LLMs and graphs. We differentiate ourselves by organizing these papers under a new, more granular taxonomy. We recommend that researchers also explore the following repositories for a comprehensive survey.

- Awesome-Graph-LLM, created by Xiaoxin He from NUS.
- Awesome-Large-Graph-Model, created by Ziwei Zhang from THU.

We also highly recommend a repository that summarizes work on Graph Prompt, a topic closely related to Graph-LLM.

- Awesome-Graph-Prompt, created by Xixi Wu from FDU.

If you have come across relevant resources, feel free to open an issue or submit a pull request using the following format:

```markdown
* (_time_) [conference] **paper_name** [[Paper](link) | [Code](link)]

<details close>
<summary>Model name</summary>
<p align="center"><img width="75%" src="Figures/xxx.jpg" /></p>
<p align="center"><em>The framework of model name.</em></p>
</details>
```

Feel free to cite this work if you find it useful!

```bibtex
@article{li2023survey,
  title={A Survey of Graph Meets Large Language Model: Progress and Future Directions},
  author={Li, Yuhan and Li, Zhixun and Wang, Peisong and Li, Jia and Sun, Xiangguo and Cheng, Hong and Yu, Jeffrey Xu},
  journal={arXiv preprint arXiv:2311.12399},
  year={2023}
}
```