Note: This is an example of adapting the original implementation (Panda arm) to a different robot arm (UR5e). For details, see the Robot Grasping (UR5e) section.

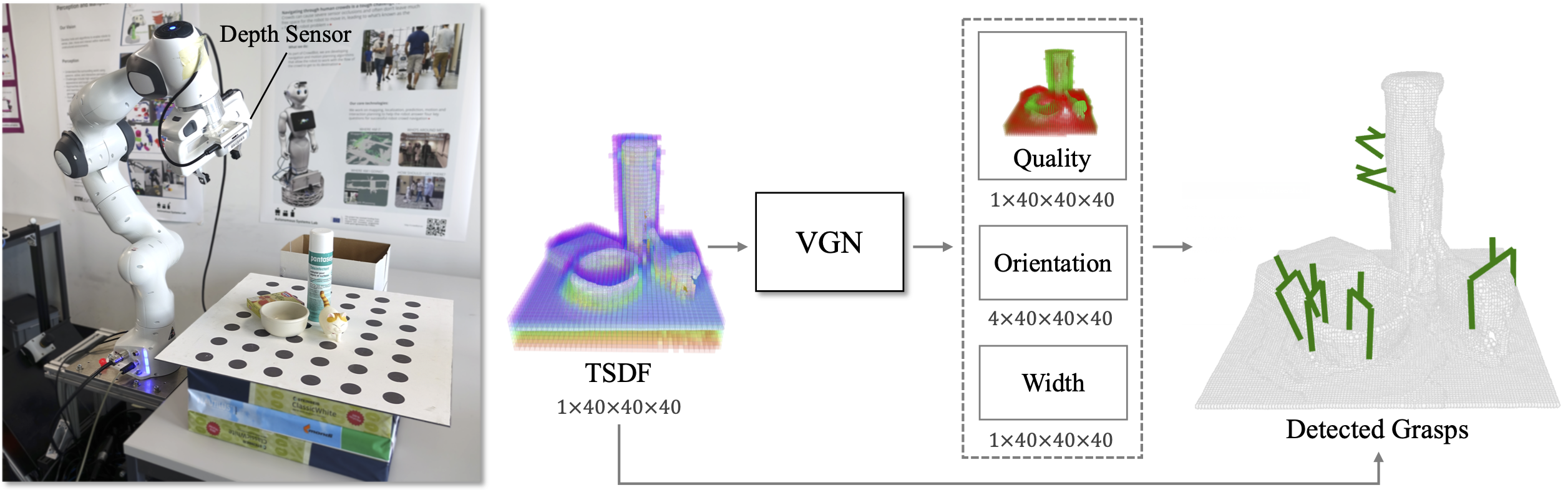

VGN is a 3D convolutional neural network for real-time 6 DOF grasp pose detection. The network accepts a Truncated Signed Distance Function (TSDF) representation of the scene and outputs a volume of the same spatial resolution, where each cell contains the predicted quality, orientation, and width of a grasp executed at the center of the voxel. The network is trained on a synthetic grasping dataset generated with physics simulation.
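The following sketch makes the output format concrete. It turns predicted quality and width volumes into a ranked list of grasp candidates using only the Python standard library. It rests on several assumptions:

- the volumes are nested lists indexed `[i][j][k]`;
- voxel centers lie at `(index + 0.5) * voxel_size` in the workspace frame;
- the quality threshold is a hypothetical 0.9.

The name `select_grasps` is illustrative and not part of the VGN code. Orientation is left out for brevity, and VGN's actual post-processing may differ.

```python
from typing import List, Tuple

Grid = List[List[List[float]]]
Grasp = Tuple[Tuple[float, float, float], float, float]


def select_grasps(
    quality: Grid, width: Grid, voxel_size: float, threshold: float = 0.9
) -> List[Grasp]:
    """Return (position, width, quality) for every voxel whose predicted
    quality reaches `threshold`, sorted from best to worst.

    Hypothetical helper: orientation is omitted, and voxel centers are
    assumed to lie at (index + 0.5) * voxel_size.
    """
    grasps: List[Grasp] = []
    for i, plane in enumerate(quality):
        for j, row in enumerate(plane):
            for k, q in enumerate(row):
                if q >= threshold:
                    # Grasp position = center of voxel (i, j, k).
                    pos = ((i + 0.5) * voxel_size,
                           (j + 0.5) * voxel_size,
                           (k + 0.5) * voxel_size)
                    grasps.append((pos, width[i][j][k], q))
    # Highest predicted quality first.
    grasps.sort(key=lambda g: g[2], reverse=True)
    return grasps


# Hypothetical usage on a tiny 2 x 2 x 2 volume. A 0.3 m workspace split into
# 40 voxels per side is an assumed configuration.
voxel_size = 0.3 / 40
quality = [[[0.1, 0.95], [0.2, 0.3]], [[0.92, 0.0], [0.5, 0.4]]]
width = [[[0.0, 0.04], [0.0, 0.0]], [[0.06, 0.0], [0.0, 0.0]]]
for pos, w, q in select_grasps(quality, width, voxel_size):
    print(f"quality={q:.2f} width={w:.3f} position={pos}")
```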

This repository contains the implementation of the following publication:

- M. Breyer, J. J. Chung, L. Ott, R. Siegwart, and J. Nieto. Volumetric Grasping Network: Real-time 6 DOF Grasp Detection in Clutter. Conference on Robot Learning (CoRL 2020), 2020.

If you use this work in your research, please cite accordingly.

The next sections provide instructions for getting started with VGN.

The following instructions were tested on Ubuntu 20.04 with ROS Noetic.

Clone the repository, along with the `robot_helpers` package, into the `src` folder of a catkin workspace.

```bash
git clone https://github.com/ethz-asl/vgn
git clone https://github.com/mbreyer/robot_helpers
```

Optionally, install OpenMPI to distribute data generation over multiple cores or machines.

```bash
sudo apt install libopenmpi-dev
```

Create and activate a new virtual environment.

```bash
cd /path/to/vgn
python3 -m venv --system-site-packages /path/to/venv
source /path/to/venv/bin/activate
```

Install the Python dependencies within the activated virtual environment.

```bash
pip3 install -r requirements.txt
```

Build and source the catkin workspace.

```bash
catkin build vgn
source /path/to/catkin_ws/devel/setup.zsh  # use setup.bash if your shell is bash
```

Finally, download the URDFs and the pretrained model and place them inside the `assets` folder.

Generate a database of labeled grasp configurations.

```bash
mpirun -np <num-workers> python3 scripts/generate_data.py --root=data/grasps/blocks
```
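A generic way to spread a fixed amount of work over `<num-workers>` MPI processes is to give each rank a near-equal share and a distinct random seed. The sketch below illustrates only that idea in plain Python. The names `split_work` and `worker_plan` and the seed scheme are hypothetical and not taken from `generate_data.py`.

```python
from typing import List, Tuple


def split_work(total: int, workers: int) -> List[int]:
    """Split `total` items over `workers` so that shares differ by at most one."""
    base, extra = divmod(total, workers)
    return [base + (1 if rank < extra else 0) for rank in range(workers)]


def worker_plan(total: int, workers: int, base_seed: int = 0) -> List[Tuple[int, int, int]]:
    """Return (rank, number of items, seed) for each worker.

    A distinct seed per rank keeps workers from generating identical scenes.
    """
    return [
        (rank, count, base_seed + rank)
        for rank, count in enumerate(split_work(total, workers))
    ]


if __name__ == "__main__":
    for rank, count, seed in worker_plan(total=10, workers=4):
        print(f"rank {rank}: {count} items, seed {seed}")
```

Under `mpirun`, each process would select its own entry by rank. With mpi4py, for example, the rank comes from `MPI.COMM_WORLD.Get_rank()`.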

Next, clean and balance the data using the `process_data.ipynb` notebook.
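The notebook's exact steps are not reproduced here. For binary grasp labels, balancing typically means downsampling whichever class is more frequent, successful (`1`) or failed (`0`) grasps, until both classes are equally represented. The sketch below illustrates that approach. The `label` key and the `balance` helper are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def balance(samples: Sequence[Dict], label_key: str = "label", seed: int = 0) -> List[Dict]:
    """Downsample the majority class so positives and negatives are equal in number."""
    positives = [s for s in samples if s[label_key] == 1]
    negatives = [s for s in samples if s[label_key] == 0]
    n = min(len(positives), len(negatives))
    rng = random.Random(seed)  # fixed seed for a reproducible subset
    balanced = rng.sample(positives, n) + rng.sample(negatives, n)
    rng.shuffle(balanced)
    return balanced


if __name__ == "__main__":
    data = [{"scene": i, "label": int(i < 3)} for i in range(10)]  # 3 positives, 7 negatives
    out = balance(data)
    print(len(out), sum(s["label"] for s in out))  # prints: 6 3
```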

You can also visualize a scene and the associated grasp configurations.

```bash
python3 scripts/visualize_data.py data/grasps/blocks
```

Finally, generate the voxel grid and grasp target pairs used to train VGN.

```bash
python3 scripts/create_dataset.py data/grasps/blocks data/datasets/blocks
```
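Building a training target requires mapping each labeled grasp position to the voxel that contains it. The sketch below assumes that positions are expressed in the workspace frame, with the grid's corner at the origin, and that the grid is cubic with `resolution` voxels per side. `position_to_index` is a hypothetical helper, and the indexing convention in `create_dataset.py` may differ.

```python
import math
from typing import Optional, Sequence, Tuple


def position_to_index(
    position: Sequence[float], voxel_size: float, resolution: int
) -> Optional[Tuple[int, int, int]]:
    """Return the (i, j, k) voxel containing `position`, or None if it lies outside the grid."""
    i, j, k = (int(math.floor(p / voxel_size)) for p in position)
    if all(0 <= idx < resolution for idx in (i, j, k)):
        return (i, j, k)
    return None


if __name__ == "__main__":
    voxel_size = 0.3 / 40  # assumed 0.3 m workspace, 40 voxels per side
    print(position_to_index((0.004, 0.0, 0.29), voxel_size, 40))
    print(position_to_index((0.31, 0.1, 0.1), voxel_size, 40))
```

Flooring the scaled coordinate maps a voxel center `(index + 0.5) * voxel_size` back to `index`. Positions outside the workspace are dropped rather than clamped.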

Train VGN on the generated dataset.

```bash
python3 scripts/train_vgn.py --dataset data/datasets/blocks --augment
```
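The `--augment` flag presumably enables data augmentation, but its exact transforms are not described here. For tabletop scenes, one common choice is a random rotation about the vertical axis through the workspace center, applied consistently to both the TSDF volume and the grasp labels.

The sketch below shows only the label side, rotating a grasp position in plain Python. The matching rotation of the grasp orientation and of the volume is omitted. `rotate_about_z` and `random_z_rotation` are hypothetical helpers.

```python
import math
import random
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def rotate_about_z(point: Sequence[float], angle: float, center: Sequence[float]) -> Point:
    """Rotate `point` by `angle` radians about the vertical axis through `center`."""
    x, y = point[0] - center[0], point[1] - center[1]
    c, s = math.cos(angle), math.sin(angle)
    # Height is unchanged by a rotation about the vertical axis.
    return (center[0] + c * x - s * y, center[1] + s * x + c * y, point[2])


def random_z_rotation(point: Sequence[float], center: Sequence[float], rng: random.Random) -> Point:
    """Apply a uniformly random rotation about the vertical axis through `center`."""
    return rotate_about_z(point, rng.uniform(0.0, 2.0 * math.pi), center)


if __name__ == "__main__":
    center = (0.15, 0.15, 0.0)  # assumed center of a 0.3 m workspace
    rng = random.Random(42)
    print(random_z_rotation((0.2, 0.15, 0.1), center, rng))
```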

Run grasping experiments in simulation.

```bash
python3 scripts/sim_grasp.py
```
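Simulated clutter-removal experiments are usually summarized by two metrics:

- the grasp success rate, which is successful grasps divided by attempts;
- the percentage of objects cleared from the scene.

The sketch below computes both, assuming each round records its initial object count, attempts, and successes. The `Round` record and the function names are hypothetical, and `sim_grasp.py` may log and aggregate results differently.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Round:
    """Outcome of one simulated clutter-removal round (hypothetical record)."""
    num_objects: int  # objects in the scene at the start of the round
    attempts: int     # grasps executed
    successes: int    # grasps that removed an object


def success_rate(rounds: Sequence[Round]) -> float:
    """Fraction of grasp attempts that succeeded, across all rounds."""
    attempts = sum(r.attempts for r in rounds)
    return sum(r.successes for r in rounds) / attempts if attempts else 0.0


def percent_cleared(rounds: Sequence[Round]) -> float:
    """Percentage of all initial objects removed, across all rounds."""
    objects = sum(r.num_objects for r in rounds)
    return 100.0 * sum(r.successes for r in rounds) / objects if objects else 0.0


if __name__ == "__main__":
    rounds = [Round(5, 6, 4), Round(5, 5, 5)]
    print(f"success rate: {success_rate(rounds):.3f}")  # 9 successes / 11 attempts
    print(f"percent cleared: {percent_cleared(rounds):.1f}%")  # 9 removed / 10 objects
```

Pooling attempts and objects over all rounds, rather than averaging per-round ratios, weights every grasp equally.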

This package contains an example of open-loop grasp execution with a Franka Emika Panda and a wrist-mounted Intel RealSense D435.

Start a roscore and launch the ROS nodes.

```bash
roscore &
roslaunch vgn panda_grasp.launch
```

Run a grasping experiment.

```bash
python3 scripts/panda_grasp.py
```
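A common pattern in open-loop execution is to first move the gripper to a pre-grasp pose, backed off along its approach direction, before closing in on the target grasp. The sketch below computes that offset under two assumptions: the grasp orientation is a 3x3 rotation matrix whose third column is the approach axis, and the standoff is 5 cm. Both conventions and the `pregrasp_position` name are hypothetical, not taken from `panda_grasp.py`.

```python
from typing import Sequence, Tuple


def pregrasp_position(
    position: Sequence[float],
    rotation: Sequence[Sequence[float]],
    distance: float = 0.05,
) -> Tuple[float, float, float]:
    """Back the grasp position off by `distance` meters along the approach axis.

    Assumes `rotation` is a row-major 3x3 matrix whose third column is the
    gripper's approach direction expressed in the base frame.
    """
    approach = (rotation[0][2], rotation[1][2], rotation[2][2])
    return (
        position[0] - distance * approach[0],
        position[1] - distance * approach[1],
        position[2] - distance * approach[2],
    )


if __name__ == "__main__":
    # Top-down grasp: the gripper z-axis points straight down (-z in the base frame).
    top_down = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
    print(pregrasp_position((0.4, 0.0, 0.1), top_down))
```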

The following example adapts the original implementation (Panda arm) to a different robot arm (UR5e). For the specific changes, see commits `70ee0de` and `8e5f6f8`.

Launch the ROS nodes and run a grasping experiment with the UR5e.

```bash
roslaunch vgn ur5e_grasp.launch
python3 scripts/ur5e_grasp.py
```

```bibtex
@inproceedings{breyer2020volumetric,
  title={Volumetric Grasping Network: Real-time 6 DOF Grasp Detection in Clutter},
  author={Breyer, Michel and Chung, Jen Jen and Ott, Lionel and Siegwart, Roland and Nieto, Juan},
  booktitle={Conference on Robot Learning},
  year={2020},
}
```