This repository contains a PyTorch implementation of the paper:

Learning Feature Descriptors using Camera Pose Supervision [Project page] [Arxiv]

Qianqian Wang, Xiaowei Zhou, Bharath Hariharan, Noah Snavely

ECCV 2020 (Oral)

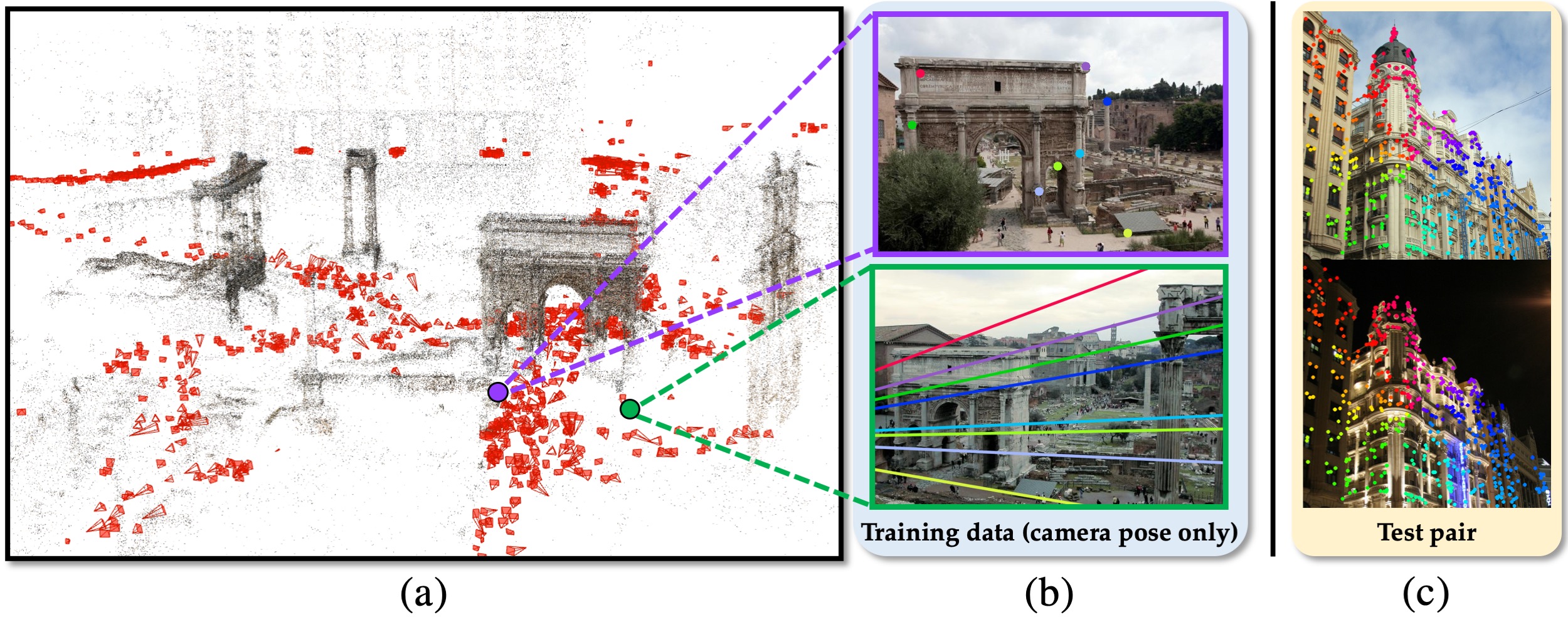

Recent research on learned visual descriptors has shown promising improvements in correspondence estimation, a key component of many 3D vision tasks. However, existing descriptor learning frameworks typically require ground-truth correspondences between feature points for training, which are challenging to acquire at scale. In this paper we propose a novel weakly-supervised framework that can learn feature descriptors solely from relative camera poses between images. To do so, we devise both a new loss function that exploits the epipolar constraint given by camera poses, and a new model architecture that makes the whole pipeline differentiable and efficient. Because we no longer need pixel-level ground-truth correspondences, our framework opens up the possibility of training on much larger and more diverse datasets for better and unbiased descriptors. We call the resulting descriptors CAmera Pose Supervised, or CAPS, descriptors. Though trained with weak supervision, CAPS descriptors outperform even prior fully-supervised descriptors and achieve state-of-the-art performance on a variety of geometric tasks.
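To make the epipolar constraint mentioned above concrete, here is a minimal NumPy sketch of the underlying geometry: given a relative pose, it measures how far a candidate match in the second image lies from the epipolar line of a point in the first image. This is an illustration only and is not taken from this repository's code. The function names (`skew`, `fundamental_from_pose`, `epipolar_distance`, `project`), the pose convention `X2 = R @ X1 + t`, and the example intrinsics are all assumptions, and the loss used in the paper may differ in its details.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def fundamental_from_pose(K1, K2, R, t):
    """Fundamental matrix F with x2^T F x1 = 0, assuming X2 = R @ X1 + t."""
    E = skew(t) @ R  # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)

def epipolar_distance(F, pts1, pts2):
    """Pixel distance from each point in pts2 to the epipolar line of the
    corresponding point in pts1. Both inputs have shape (N, 2)."""
    ones = np.ones((pts1.shape[0], 1))
    x1 = np.hstack([pts1, ones])   # homogeneous coordinates
    x2 = np.hstack([pts2, ones])
    lines = x1 @ F.T               # row i is F @ x1[i], a line in image 2
    num = np.abs(np.sum(lines * x2, axis=1))
    den = np.linalg.norm(lines[:, :2], axis=1)
    return num / den

def project(K, X):
    """Pinhole projection of (N, 3) camera-frame points to (N, 2) pixels."""
    x = X @ K.T
    return x[:, :2] / x[:, 2:3]

# Hypothetical camera setup, used only to exercise the functions.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
angle = np.deg2rad(10.0)
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([1.0, 0.0, 0.2])

X1 = np.array([[0.2, -0.1, 4.0], [-0.5, 0.3, 6.0]])  # points in camera-1 frame
X2 = X1 @ R.T + t                                    # same points in camera-2 frame
pts1, pts2 = project(K, X1), project(K, X2)

F = fundamental_from_pose(K, K, R, t)
d_true = epipolar_distance(F, pts1, pts2)                         # true matches
d_off = epipolar_distance(F, pts1, pts2 + np.array([0.0, 5.0]))   # shifted matches
```

True correspondences give a distance close to zero, while the shifted points do not. A pose-supervised loss can penalize this kind of distance without knowing where along the epipolar line the true match lies.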

# Create conda environment with torch 1.0.1 and CUDA 10.0

conda env create -f environment.yml

conda activate caps

If you encounter problems with OpenCV, try uninstalling your current OpenCV packages and reinstalling them:

pip uninstall opencv-python

pip uninstall opencv-contrib-python

pip install opencv-python==3.4.2.17

pip install opencv-contrib-python==3.4.2.17

The pretrained model can be downloaded using this link.

Please download the preprocessed MegaDepth dataset using this link.

To start training, please download our training data, and run the following command:

# example usage

python train.py --config configs/train_megadepth.yaml

We provide code for extracting CAPS descriptors on the HPatches dataset. To download and use the HPatches Sequences, please refer to this link.

To extract CAPS features on the HPatches dataset, download the pretrained model, modify the paths in configs/extract_features_hpatches.yaml, and run:

python extract_features.py --config configs/extract_features_hpatches.yaml

We provide an interactive demo where you can click on locations in the first image and see their predicted correspondences in the second image.

Please refer to jupyter/visualization.ipynb for more details.
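As a rough illustration of how a clicked location can be mapped to a correspondence, the sketch below does a nearest-neighbor lookup over dense descriptor maps using cosine similarity. It is a simplified stand-in and not the notebook's implementation, which may work differently. The function name `match_location` and the assumption that descriptors are available as `(H, W, C)` NumPy arrays are hypothetical.

```python
import numpy as np

def match_location(desc1, desc2, y, x):
    """Return the (row, col) in image 2 whose descriptor is most similar
    (by cosine similarity) to the descriptor at (y, x) in image 1.

    desc1, desc2: dense descriptor maps of shape (H, W, C).
    """
    query = desc1[y, x]
    query = query / np.linalg.norm(query)

    h, w, c = desc2.shape
    flat = desc2.reshape(-1, c)
    flat = flat / np.linalg.norm(flat, axis=1, keepdims=True)

    scores = flat @ query             # cosine similarity to every pixel
    best = int(np.argmax(scores))
    return divmod(best, w)            # flat index -> (row, col)

# Synthetic check: image 2 is image 1 shifted by (2, 3) with wrap-around.
rng = np.random.default_rng(0)
desc_a = rng.normal(size=(10, 12, 16))
desc_b = np.roll(desc_a, shift=(2, 3), axis=(0, 1))
match = match_location(desc_a, desc_b, 4, 5)
```

In this synthetic case the descriptor at `(4, 5)` in the first map reappears at `(6, 8)` in the second, so the lookup recovers the shift.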

Please cite our work if you find it useful:

@inproceedings{wang2020learning,

Title = {Learning Feature Descriptors using Camera Pose Supervision},

Author = {Qianqian Wang and Xiaowei Zhou and Bharath Hariharan and Noah Snavely},

booktitle = {Proc. European Conference on Computer Vision (ECCV)},

Year = {2020},

}

Acknowledgements. We thank Kai Zhang, Zixin Luo, Zhengqi Li for helpful discussion and comments. This work was partly supported by a DARPA LwLL grant, and in part by the generosity of Eric and Wendy Schmidt by recommendation of the Schmidt Futures program.