NTable: A Dataset for Camera-based Table Detection

Most existing table detection methods are designed for scanned document images or Portable Document Format (PDF) files, and tables photographed in the real world are rarely included in the current mainstream table detection datasets. We therefore construct NTable, a dataset for camera-based table detection. NTable consists of a smaller-scale dataset NTable-ori, an augmented dataset NTable-cam, and a generated dataset NTable-gen. More details are available in our paper "NTable: A Dataset for Camera-based Table Detection".

Description

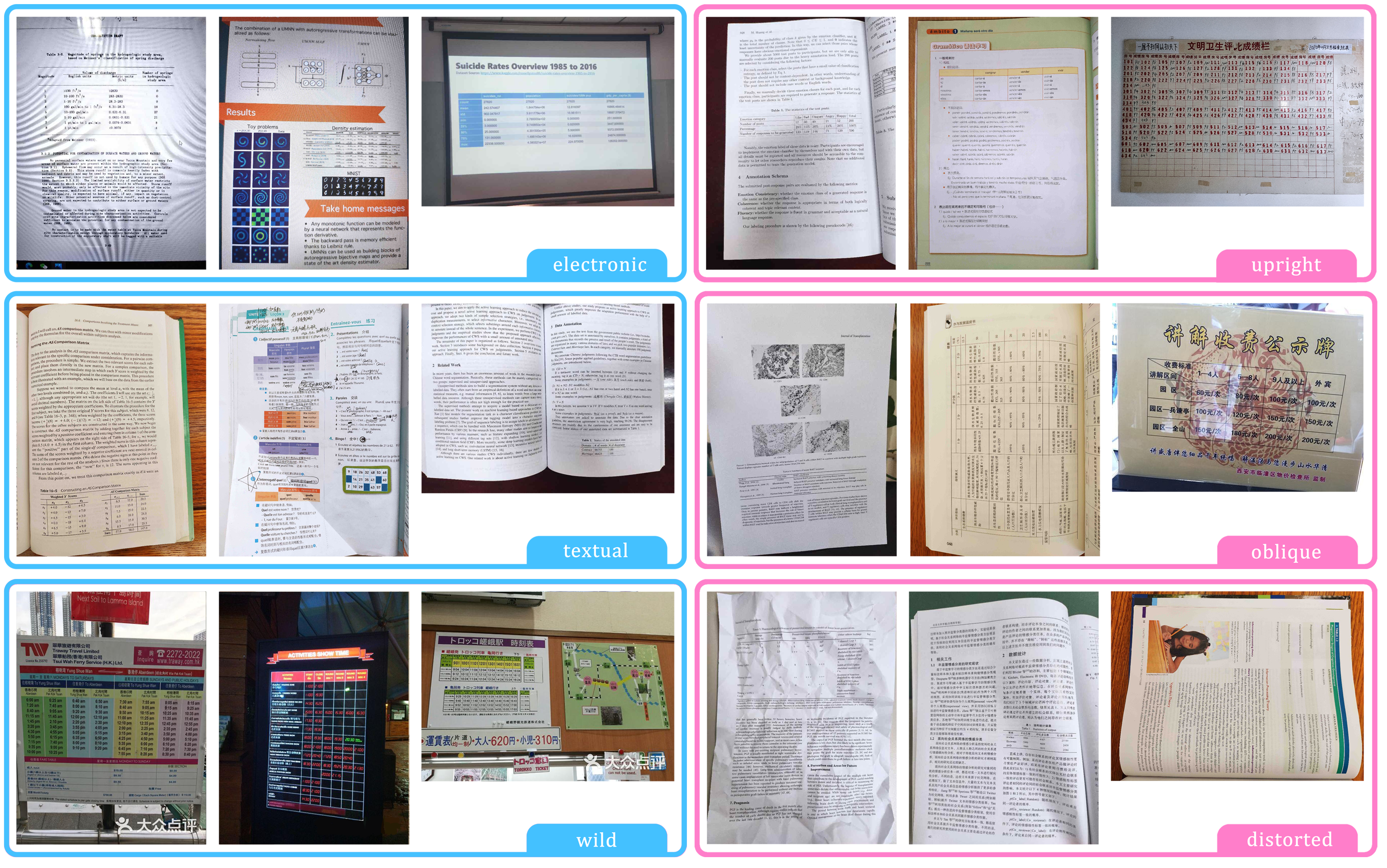

NTable-ori is made up of 2.1k+ images taken by different cameras and mobile phones. We provide two classification schemes: one based on the source and the other based on the shape (see Examples). By source, NTable-ori is divided into textual, electronic and wild. By shape, it is divided into upright, oblique and distorted. Table 1 summarizes the counts per category.

| category | source | | | shape | | |
| --- | --- | --- | --- | --- | --- | --- |
| subcategory | textual | electronic | wild | upright | oblique | distorted |
| # of pages | 1674 | 254 | 198 | 758 | 421 | 947 |

NTable-cam is augmented from NTable-ori. By changing rotation, brightness and contrast, the original 2.1k+ images are expanded eightfold to 17k+ images (see Examples).
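To illustrate how these augmentations interact with box annotations, here is a minimal sketch. It is not the authors' augmentation code: the function names, the mid-gray pivot of 128, the rotation about the image centre and the clipping to the original canvas are all assumptions made for the example. Brightness and contrast leave the boxes untouched, while rotation requires recomputing an axis-aligned box around the rotated table.

```python
import math


def adjust_pixel(value, brightness=0.0, contrast=1.0):
    """Scale an 8-bit intensity about mid-gray, add an offset, clamp to [0, 255]."""
    out = (value - 128) * contrast + 128 + brightness
    return int(max(0, min(255, round(out))))


def rotate_yolo_box(box, angle_deg, img_w, img_h):
    """Rotate a normalized (x, y, w, h) box about the image centre.

    Returns the axis-aligned envelope of the rotated box, clipped to the
    original canvas (hypothetical choice: the canvas is not enlarged).
    """
    x, y, w, h = box
    cx, cy, bw, bh = x * img_w, y * img_h, w * img_w, h * img_h
    corners = [
        (cx - bw / 2, cy - bh / 2),
        (cx + bw / 2, cy - bh / 2),
        (cx + bw / 2, cy + bh / 2),
        (cx - bw / 2, cy + bh / 2),
    ]
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    ox, oy = img_w / 2, img_h / 2
    xs, ys = [], []
    for px, py in corners:
        dx, dy = px - ox, py - oy
        # Standard 2D rotation of each corner, then clip to the canvas.
        xs.append(min(max(ox + dx * cos_t - dy * sin_t, 0.0), img_w))
        ys.append(min(max(oy + dx * sin_t + dy * cos_t, 0.0), img_h))
    left, right, top, bottom = min(xs), max(xs), min(ys), max(ys)
    return (
        (left + right) / 2 / img_w,
        (top + bottom) / 2 / img_h,
        (right - left) / img_w,
        (bottom - top) / img_h,
    )
```

For example, rotating a centred box of size 0.4 x 0.2 by 90 degrees in a square image swaps its width and height. For large angles the envelope is looser than the table itself, which is one reason to keep rotation angles moderate.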

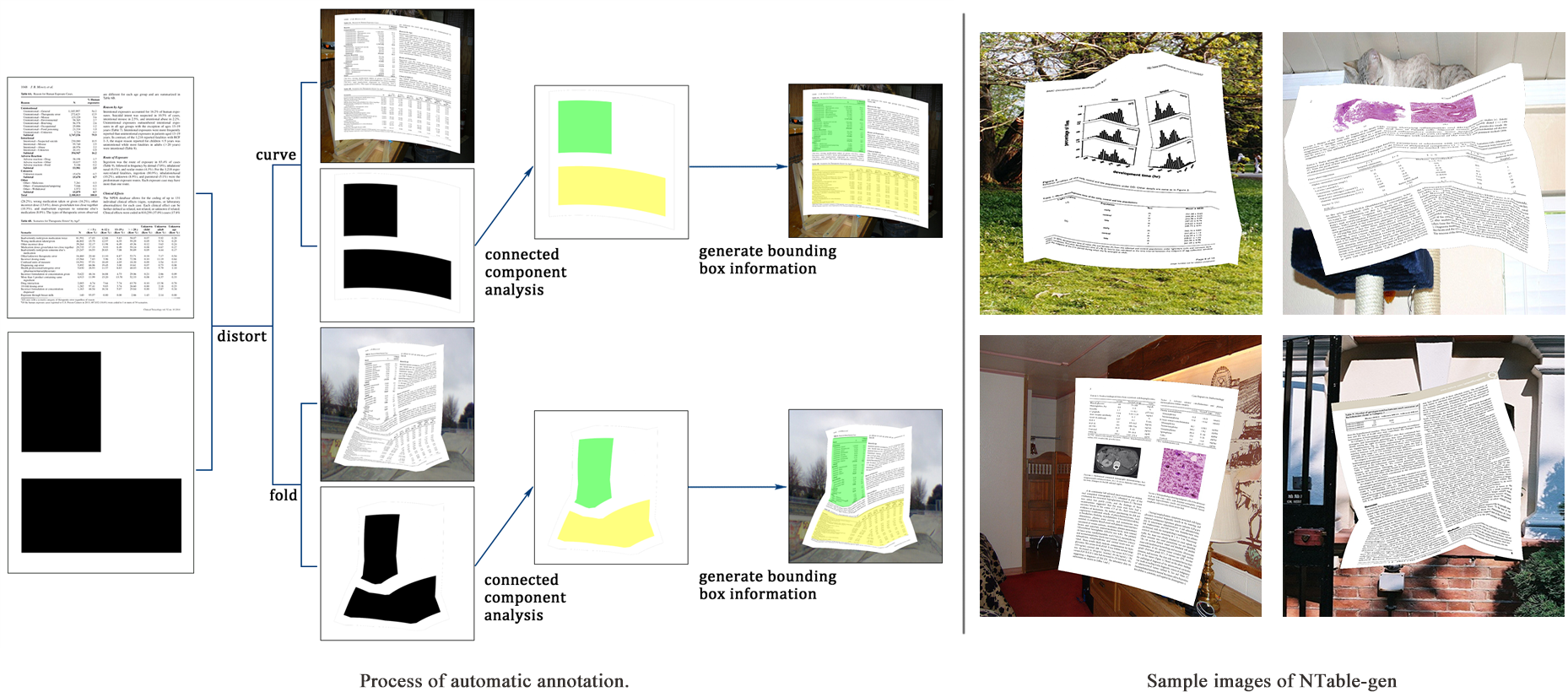

NTable-gen is a synthetic dataset that simulates as many deformation conditions as possible, in order to address the limitations of the collected data and further improve data richness. We use PubLayNet as the source of the original document images. PubLayNet's training set contains 86950 pages with at least one table, from which we randomly select 8750 pages. Background images are taken from the VOC2012 dataset (see Examples).

Get data

NTable-gen: Link 1 (Google Drive), Link 2 (Baidu Disk)

The original NTable-ori and NTable-cam: Link 1 (Google Drive), Link 2 (Baidu Disk)

We have since collected 607 new images containing 1000 tables. The statistics are shown in Table 2. Download links for the updated NTable-ori and NTable-cam: Link 1 (Google Drive), Link 2 (Baidu Disk)

| category | source | | | shape | | |
| --- | --- | --- | --- | --- | --- | --- |
| subcategory | textual | electronic | wild | upright | oblique | distorted |
| # of pages | 285 | 195 | 484 | 396 | 221 | 347 |

Annotation format

The annotation files follow the YOLO format. Each bounding box is given as [x, y, w, h], where (x, y) is the coordinate of the center of the bounding box and w and h are its normalized width and height: w is the width of the bbox divided by the width of the image, and h is the height of the bbox divided by the height of the image.
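The conversion between this format and pixel corner coordinates can be sketched as follows. The sketch assumes that x and y are normalized by the image width and height in the same way as w and h, as in the usual YOLO convention; the function names are hypothetical and not part of the NTable code.

```python
def yolo_to_corners(x, y, w, h, img_w, img_h):
    """Convert a normalized center-format box to pixel (left, top, right, bottom)."""
    cx, cy = x * img_w, y * img_h   # center in pixels
    bw, bh = w * img_w, h * img_h   # box size in pixels
    return (cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)


def corners_to_yolo(left, top, right, bottom, img_w, img_h):
    """Convert pixel corners back to a normalized (x, y, w, h) box."""
    return (
        (left + right) / 2 / img_w,
        (top + bottom) / 2 / img_h,
        (right - left) / img_w,
        (bottom - top) / img_h,
    )


if __name__ == "__main__":
    # A box centered in a 640x480 image, half as wide and a quarter as tall.
    print(yolo_to_corners(0.5, 0.5, 0.5, 0.25, 640, 480))
```

Working in pixel corners is convenient for drawing or cropping, while the normalized form stays valid when an image is resized.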

Add new images

We provide the code to add new images into NTable. Here are the steps to enlarge NTable:

- Use Labelme to annotate the images. It generates a JSON file for every image.

- Put the images and annotations into ./orimage.

- Run anno_aug.py. It appends the original images to ./NTable-ori and the augmented images to ./NTable-cam, each with the corresponding annotations.
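For readers who want to inspect the intermediate step, the sketch below shows one way a Labelme JSON record could be turned into YOLO-style annotation lines. It is an illustration only and does not reproduce anno_aug.py: the function name, the single class index 0 for tables, the leading class index on each line and the six-decimal formatting are assumptions. It relies on the `imageWidth`, `imageHeight`, `shapes` and `points` fields that Labelme writes.

```python
import json


def labelme_to_yolo(record, class_id=0):
    """Turn one parsed Labelme record into YOLO-style text lines.

    Each shape (rectangle or polygon) is reduced to its axis-aligned
    bounding box, then normalized by the image size.
    """
    img_w = record["imageWidth"]
    img_h = record["imageHeight"]
    lines = []
    for shape in record["shapes"]:
        xs = [point[0] for point in shape["points"]]
        ys = [point[1] for point in shape["points"]]
        left, right = min(xs), max(xs)
        top, bottom = min(ys), max(ys)
        x = (left + right) / 2 / img_w
        y = (top + bottom) / 2 / img_h
        w = (right - left) / img_w
        h = (bottom - top) / img_h
        lines.append(f"{class_id} {x:.6f} {y:.6f} {w:.6f} {h:.6f}")
    return lines


if __name__ == "__main__":
    # Inline example instead of a real file; the label name is made up.
    text = """{
        "imageWidth": 640, "imageHeight": 480,
        "shapes": [{"label": "table", "shape_type": "rectangle",
                    "points": [[160, 180], [480, 300]]}]
    }"""
    for line in labelme_to_yolo(json.loads(text)):
        print(line)
```

Taking the minimum and maximum over all points means the same function handles both rectangles (two points) and polygons drawn around oblique or distorted tables.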

Examples

Note

- The classification is subjective. For example, you may find that some tables classified as 'upright' also have slight deformation or tilt.

- There are some clerical errors in the tables in our paper. The correct tables are as follows:

| | training | validation | test |
| --- | --- | --- | --- |
| NTable-cam | 11904 | 1696 | 3408 |
| NTable-gen | 11984 | 1712 | 3424 |
| total | 23888 | 3408 | 6832 |

| | source | | | shape | | |
| --- | --- | --- | --- | --- | --- | --- |
| | textual | electronic | wild | upright | oblique | distorted |
| train | 7152 | 1336 | 3416 | 3072 | 2136 | 6696 |
| validation | 944 | 200 | 552 | 464 | 304 | 928 |
| test | 2064 | 288 | 1056 | 912 | 600 | 1896 |
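The per-category counts appear to describe NTable-cam: in each split, the source columns and the shape columns both sum to the NTable-cam figure. The short check below makes that explicit. The numbers are copied from the tables above and from Table 1; the dictionary layout and function name are only illustrative.

```python
# Counts copied from the corrected tables: NTable-cam size per split,
# followed by (textual, electronic, wild) and (upright, oblique, distorted).
SPLITS = {
    "train": (11904, (7152, 1336, 3416), (3072, 2136, 6696)),
    "validation": (1696, (944, 200, 552), (464, 304, 928)),
    "test": (3408, (2064, 288, 1056), (912, 600, 1896)),
}

# NTable-ori size, summed from the source columns of Table 1.
NTABLE_ORI = 1674 + 254 + 198


def check_splits(splits):
    """Return, per split, whether both category groups sum to the split size."""
    return {
        name: sum(source) == size and sum(shape) == size
        for name, (size, source, shape) in splits.items()
    }


if __name__ == "__main__":
    print(check_splits(SPLITS))
    cam_total = sum(size for size, _, _ in SPLITS.values())
    # The eightfold expansion of NTable-ori should match the NTable-cam total.
    print(cam_total, 8 * NTABLE_ORI)
```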

Acknowledgement

I would like to thank @Jotaro-Kujo, Zhang Meiqing and Zhu Chaowen, who offered great help in collecting the data.