Use eye gaze to control the movements of a vehicle in ROS

- A robot with camera sensor

- Face detection and analysis

- Eye gaze estimation

- Mouth status estimation

- Control signal rendering to the robot
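The last step in the list above, turning an estimated gaze into a control signal, might look roughly like the sketch below. It is not the repository's implementation: the function name `gaze_to_velocity`, the dead zone, the gains, and the sign convention (positive yaw means looking left and turning left) are all assumptions made for illustration. The returned pair is meant to fill the `linear.x` and `angular.z` fields of a ROS `geometry_msgs/Twist` message.

```python
import math


def gaze_to_velocity(yaw_rad, move, max_linear=0.5, max_angular=1.0,
                     dead_zone_rad=math.radians(5),
                     max_yaw_rad=math.radians(30)):
    """Map a horizontal gaze angle to a (linear, angular) velocity pair.

    Hypothetical helper; every name and default here is an assumption.
    yaw_rad: horizontal gaze angle in radians, positive = looking left.
    move:    True while the user issues the "move" command.
    """
    if not move:
        # No move command: keep the robot still regardless of gaze.
        return 0.0, 0.0
    if abs(yaw_rad) < dead_zone_rad:
        # Looking roughly straight ahead: drive forward without turning.
        return max_linear, 0.0
    # Clamp the gaze angle, then normalize it to [-1, 1].
    clamped = max(-max_yaw_rad, min(max_yaw_rad, yaw_rad))
    turn = clamped / max_yaw_rad
    # Turn faster and drive slower the further the gaze is off-center.
    angular = max_angular * turn
    linear = max_linear * (1.0 - abs(turn))
    return linear, angular
```

The dead zone is there so that small gaze-estimation noise around the center does not make the robot wobble; its width would need tuning against the actual gaze model.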

- Install ROS and Gazebo

- Install tensorflow-gpu==1.14.0 (CUDA 10.0, cuDNN 7.4)

- Install Python libraries: dlib, scipy

- Download the eye gaze models and extract them to $ROOT_REPO
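Before building, it can help to confirm that the Python dependencies listed above are importable. The snippet below is a small sketch using only the standard library; the helper name `missing_modules` is hypothetical, and it only checks that each module can be found, not that the installed versions match.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [n for n in names if importlib.util.find_spec(n) is None]


if __name__ == "__main__":
    # Module names assumed from the install list: tensorflow, dlib, scipy.
    missing = missing_modules(["tensorflow", "dlib", "scipy"])
    if missing:
        print("Missing Python packages:", ", ".join(missing))
    else:
        print("All listed Python packages are importable.")
```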

cd $ROOT_REPO

catkin_make

source devel/setup.bash

roslaunch launch/one_of_the_files.launch

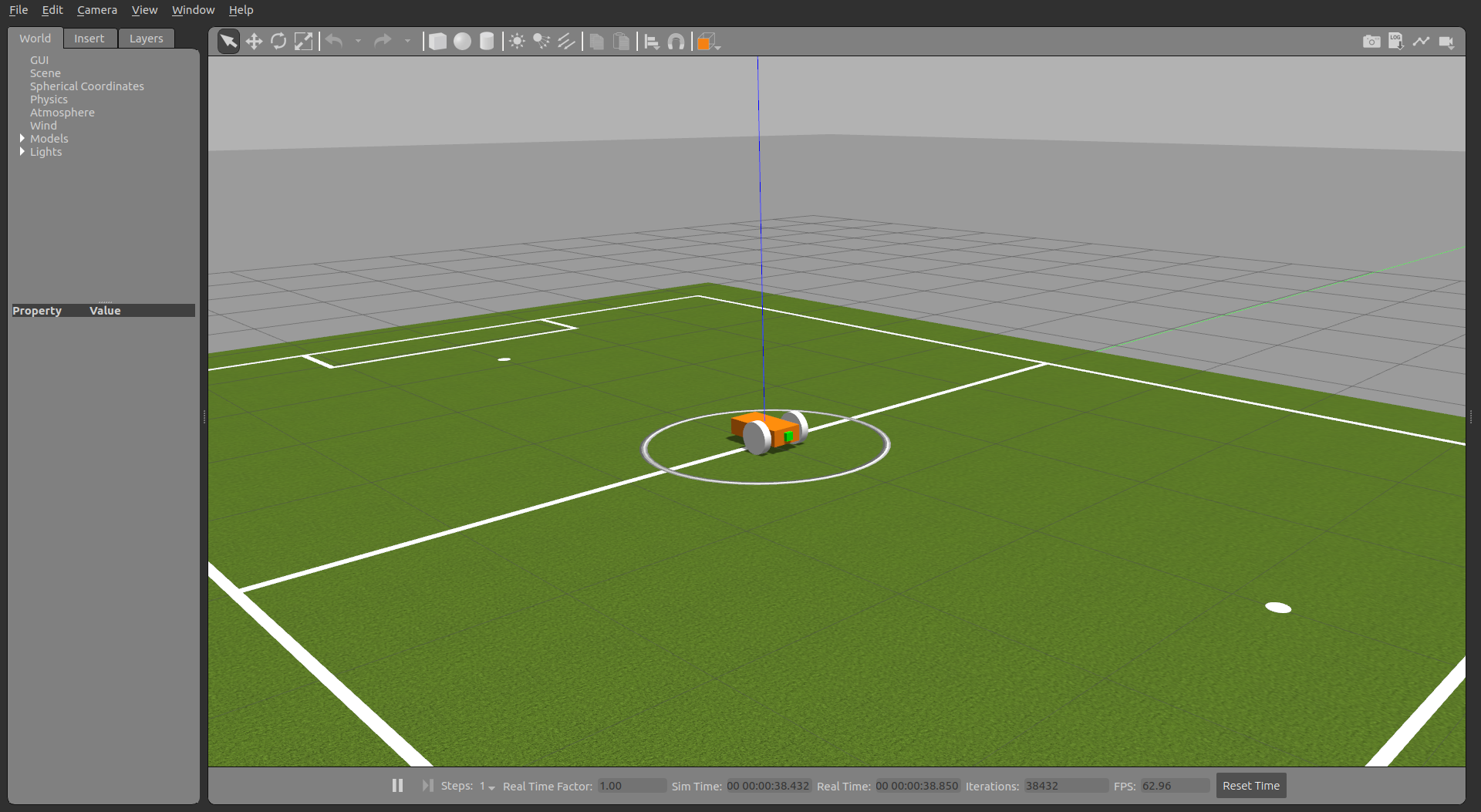

- Show robot in gazebo simulator

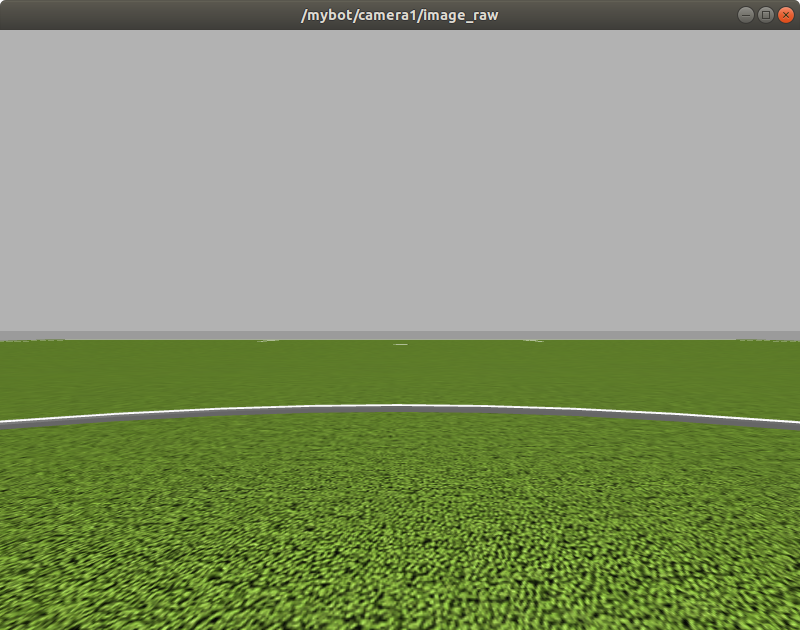

- Show image obtained by the camera on the robot

- Analyze mouth status and estimate eye gaze to control the robot

- Use keys as commands

- Use gaze as direction and a space key as a moving command

- Use gaze dwell to push the command buttons
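Dwell-based selection, as in the last mode above, is commonly implemented as a timer that fires once the gaze has rested on the same button long enough. The following is a minimal sketch of that idea, not the code used in this repo: the class name `DwellSelector`, the one-second threshold, and the button identifiers are assumptions.

```python
class DwellSelector:
    """Trigger a button once the gaze has stayed on it for `dwell_s` seconds.

    Hypothetical helper for illustration only.
    """

    def __init__(self, dwell_s=1.0):
        self.dwell_s = dwell_s
        self._target = None   # button currently under the gaze
        self._since = None    # time at which the gaze entered that button
        self._fired = False   # whether this dwell has already triggered

    def update(self, target, now):
        """Feed one gaze sample.

        target: id of the button under the gaze, or None if none is hit.
        now:    timestamp in seconds.
        Returns the button id at the moment it triggers, otherwise None.
        """
        if target != self._target:
            # Gaze moved to a different button (or off all buttons): restart.
            self._target = target
            self._since = now
            self._fired = False
            return None
        if target is None or self._fired:
            return None
        if now - self._since >= self.dwell_s:
            self._fired = True  # fire only once per continuous dwell
            return target
        return None
```

In use, `update` would be called once per camera frame with the button hit by the current gaze estimate; the user has to look away and back again to trigger the same button a second time.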

The models in this repo are from the papers below:

@inproceedings{poy2021multimodal,

title={A multimodal direct gaze interface for wheelchairs and teleoperated robots},

author={Poy, Isamu and Wu, Liang and Shi, Bertram E},

booktitle={2021 43rd Annual International Conference of the IEEE Engineering in Medicine \& Biology Society (EMBC)},

pages={4796--4800},

year={2021},

organization={IEEE}

}

@inproceedings{chen2018appearance,

title={Appearance-based gaze estimation using dilated-convolutions},

author={Chen, Zhaokang and Shi, Bertram E},

booktitle={Asian Conference on Computer Vision},

pages={309--324},

year={2018},

organization={Springer}

}