Hanlin Chen, Fangyin Wei, Chen Li, Tianxin Huang, Yunsong Wang, Gim Hee Lee

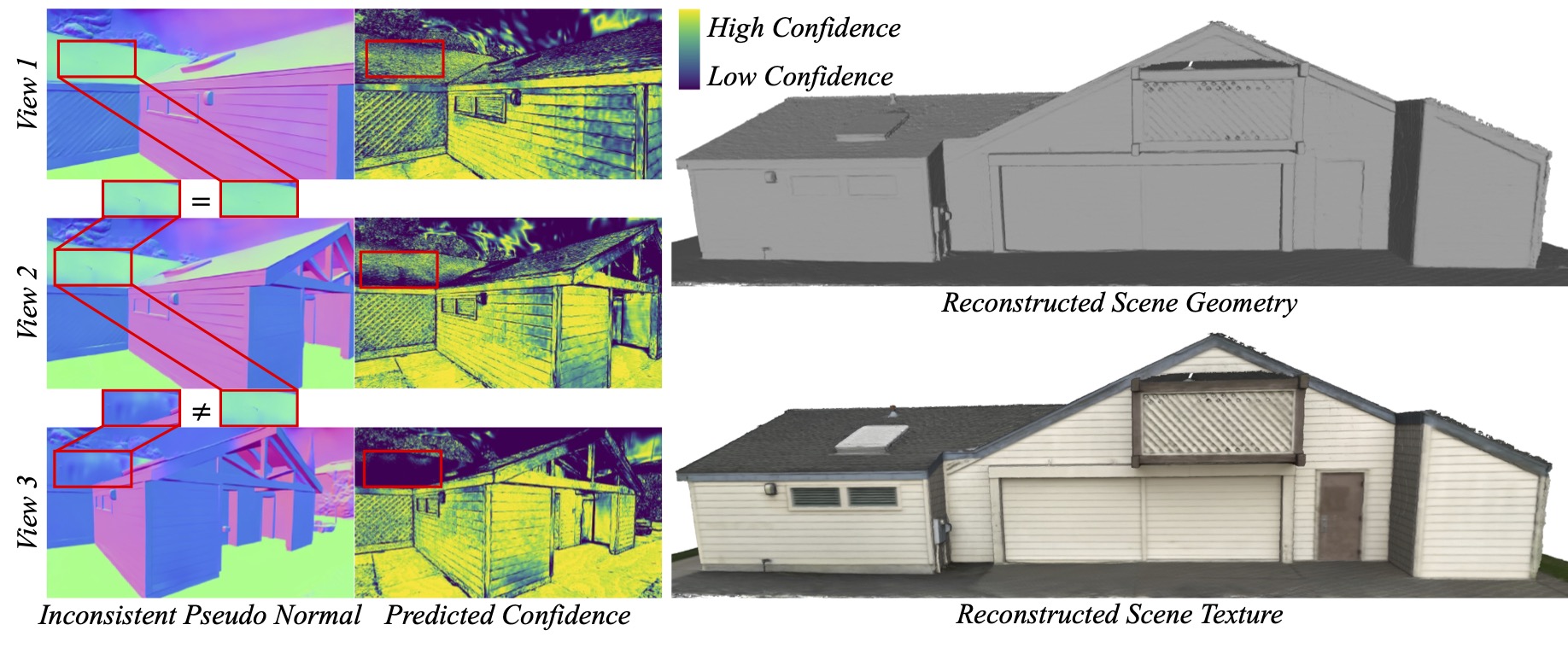

VCR-GauS formulates a novel multi-view D-Normal regularizer that enables full optimization of the Gaussian geometric parameters to achieve better surface reconstruction. We further design a confidence term to weigh our D-Normal regularizer to mitigate inconsistencies of normal predictions across multiple views.
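The idea can be illustrated with a minimal NumPy sketch of a confidence-weighted depth-normal penalty. It assumes a pinhole camera with intrinsics (fx, fy, cx, cy), a rendered depth map, a predicted normal map (e.g. from DSINE) in the same camera frame, and a per-pixel confidence map in [0, 1]. The function names and the cosine-distance form are illustrative choices, not the repository's implementation, and how the confidence is computed is left out. Because the normals here are derived from rendered depth, the same operations in an autodiff framework would send gradients to whatever determines depth (for Gaussians, presumably their positions as well as their orientations). NumPy only shows the arithmetic.

```python
import numpy as np


def depth_to_normal(depth, fx, fy, cx, cy):
    """Camera-space normals from an (H, W) depth map via finite differences."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w, dtype=float), np.arange(h, dtype=float))
    # Back-project every pixel to a 3D point (pinhole model, camera looks down +z).
    points = np.stack([(u - cx) / fx * depth, (v - cy) / fy * depth, depth], axis=-1)
    # Tangent vectors along image columns (u) and rows (v).
    d_du = np.gradient(points, axis=1)
    d_dv = np.gradient(points, axis=0)
    # This cross-product order makes a surface facing the camera get a negative-z normal.
    normals = np.cross(d_dv, d_du)
    norm = np.linalg.norm(normals, axis=-1, keepdims=True)
    return normals / np.maximum(norm, 1e-12)


def d_normal_loss(depth, normal_pred, confidence, intrinsics):
    """Hypothetical confidence-weighted cosine distance between depth-derived and predicted normals."""
    normal_from_depth = depth_to_normal(depth, *intrinsics)
    cosine = np.sum(normal_from_depth * normal_pred, axis=-1)
    return float(np.mean(confidence * (1.0 - cosine)))


# A fronto-parallel plane at depth 2: its depth-derived normal is (0, 0, -1) everywhere.
depth = np.full((8, 8), 2.0)
facing = np.broadcast_to([0.0, 0.0, -1.0], (8, 8, 3))
print(d_normal_loss(depth, facing, np.ones((8, 8)), (100.0, 100.0, 4.0, 4.0)))  # 0.0
```

Setting a pixel's confidence to 0 removes it from the penalty, which is the role the confidence term plays when normal predictions disagree across views.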

- [2024.09.24]: VCR-GauS is accepted to NeurIPS 2024.

Clone the repository and create an Anaconda environment:

```bash
git clone https://github.com/HLinChen/VCR-GauS.git --recursive
cd VCR-GauS
git pull --recurse-submodules

env=vcr
conda create -n $env -y python=3.10
conda activate $env
pip install -e ".[train]"

# you can specify your own cuda path
export CUDA_HOME=/usr/local/cuda-11.8
pip install -r requirements.txt
```

To evaluate on Tanks and Temples (TNT) with the official scripts, you need to build a separate environment with open3d==0.10:

```bash
env=f1eval
conda create -n $env -y python=3.8
conda activate $env
pip install -e ".[f1eval]"
```

To extract normal maps with DSINE, you need to build another environment:

```bash
conda create --name dsine python=3.10
conda activate dsine
conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia
python -m pip install geffnet
```

As in Gaussian Splatting, we use COLMAP to process the data; follow the COLMAP website to install it.

You can download the preprocessed Tanks and Temples dataset from here, or preprocess it yourself:

Download the data from the Tanks and Temples website.

You will also need to download the additional COLMAP/camera/alignment files and the images of each scene.

The file structure should look like this (move the downloaded images into the folder images_raw):

```
tanks_and_temples
├─ Barn
│  ├─ Barn_COLMAP_SfM.log   (camera poses)
│  ├─ Barn.json             (cropfiles)
│  ├─ Barn.ply              (ground-truth point cloud)
│  ├─ Barn_trans.txt        (colmap-to-ground-truth transformation)
│  └─ images_raw            (raw input images downloaded from Tanks and Temples website)
│     ├─ 000001.png
│     ├─ 000002.png
│     ...
├─ Caterpillar
│  ├─ ...
...
```
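To see what the pose and alignment files contain: Barn_COLMAP_SfM.log follows the trajectory .log layout read by the Tanks and Temples toolbox (for each camera, one line of three integers followed by a 4x4 matrix on four lines), and Barn_trans.txt holds a single 4x4 matrix that np.loadtxt can read. The sketch below parses such a log and applies a 4x4 homogeneous transform to points, e.g. to move COLMAP-frame geometry into the ground-truth frame. The helper names are ours, not the repository's.

```python
import numpy as np


def parse_trajectory_log(text):
    """Parse a trajectory .log: per camera, a metadata line then a 4x4 matrix."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if len(rows) % 5 != 0:
        raise ValueError("expected blocks of 5 non-empty lines per camera")
    poses = []
    for i in range(0, len(rows), 5):
        metadata = tuple(int(tok) for tok in rows[i])
        matrix = np.array([[float(tok) for tok in row] for row in rows[i + 1:i + 5]])
        poses.append((metadata, matrix))
    return poses


def apply_transform(points, transform):
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (homogeneous @ transform.T)[:, :3]


# Usage with the layout above:
# with open("tanks_and_temples/Barn/Barn_COLMAP_SfM.log") as f:
#     poses = parse_trajectory_log(f.read())
# trans = np.loadtxt("tanks_and_temples/Barn/Barn_trans.txt")
# aligned = apply_transform(points, trans)
```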

Run the following command to generate the JSON and COLMAP files:

```bash
# Modify --tnt_path to be the Tanks and Temples root directory.
sh bash_scripts/1_preprocess_tnt.sh
```

You need to download the code and model weights of DSINE first. Then, in the bash script, set CODE_PATH to the DSINE root directory, CKPT to the DSINE model path, and DATADIR to the TNT root directory. Run the following command to generate normal maps:

```bash
sh bash_scripts/2_extract_normal_dsine.sh
```

If you don't want to use the semantic masks, set optim.loss_weight.semantic=0 and skip the mask generation.

You need to download the code and model of Grounded-SAM first. Then, install its environment following 'Install without Docker' on its website. Next, in the bash script, set GSAM_PATH to the Grounded-SAM root directory and DATADIR to the TNT root directory. Run the following command to generate semantic masks:

```bash
sh bash_scripts/3_extract_mask.sh
```

Please download the Mip-NeRF 360 dataset from the official website and the preprocessed DTU dataset from 2DGS, then extract normal maps with DSINE following the scripts above. You can also use GeoWizard to extract normal maps with the script 'bash_scripts/4_extract_normal_geow.sh'; please install the corresponding environment and download its code and model weights first.

```bash
# you might need to update the data path in the script accordingly

# Tanks and Temples dataset
python python_scripts/run_tnt.py

# Mip-NeRF 360 dataset
python python_scripts/run_mipnerf360.py
```

We have uploaded the extracted meshes for TNT and DTU, so you can download and evaluate them yourself. You may need to update the mesh and data paths in the scripts accordingly, and set do_train and do_extract_mesh to False.

```bash
# Tanks and Temples dataset
python python_scripts/run_tnt.py

# DTU dataset
python python_scripts/run_dtu.py
```
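For orientation, both benchmarks compare the reconstructed mesh (sampled as points) with a ground-truth point cloud. The NumPy sketch below shows the core metrics under that assumption: a symmetric Chamfer distance in the spirit of DTU's accuracy/completeness, and precision/recall/F-score at a distance threshold tau as reported for Tanks and Temples. It uses brute-force nearest neighbours and omits the cropping, alignment, downsampling and outlier handling of the official scripts, so its numbers will not match theirs.

```python
import numpy as np


def nearest_distances(src, dst):
    """Distance from each point in src (N, 3) to its nearest neighbour in dst (M, 3)."""
    diff = src[:, None, :] - dst[None, :, :]
    return np.linalg.norm(diff, axis=-1).min(axis=1)


def chamfer_distance(pred, gt):
    """Mean of accuracy (pred -> gt) and completeness (gt -> pred)."""
    accuracy = nearest_distances(pred, gt).mean()
    completeness = nearest_distances(gt, pred).mean()
    return float((accuracy + completeness) / 2)


def f_score(pred, gt, tau):
    """Precision, recall and F-score at distance threshold tau."""
    precision = float(np.mean(nearest_distances(pred, gt) < tau))
    recall = float(np.mean(nearest_distances(gt, pred) < tau))
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
pred = np.array([[0.0, 0.0, 0.0], [5.0, 0.0, 0.0]])
print(chamfer_distance(pred, gt))  # 1.25
print(f_score(pred, gt, tau=0.5))  # (0.5, 0.5, 0.5)
```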

We also incorporate additional regularizations following 2DGS and GOF, such as the depth distortion loss and the normal consistency loss. You can enable them as follows:

- normal consistency loss: set optim.loss_weight.consistent_normal > 0;
- depth distortion loss:
  - set optim.loss_weight.depth_var > 0;
  - set NUM_DIST = 1 in submodules/diff-gaussian-rasterization/cuda_rasterizer/config.h, and reinstall diff-gaussian-rasterization.
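For a single ray with per-Gaussian blending weights w_i, intersection depths z_i and normals n_i, 2DGS defines the depth distortion loss as Σ_{i,j} w_i w_j |z_i − z_j| and the normal consistency loss as Σ_i w_i (1 − n_i · N), where N is the normal derived from the rendered depth. The sketch below evaluates these per-ray terms in NumPy. Because the flag here is named depth_var, it also shows a weight-normalized depth variance; that is our guess at a related variant, not the repository's confirmed formula.

```python
import numpy as np


def depth_distortion(weights, depths):
    """Pairwise distortion for one ray: sum_ij w_i * w_j * |z_i - z_j|."""
    w = np.asarray(weights, dtype=float)
    z = np.asarray(depths, dtype=float)
    return float(np.sum(np.outer(w, w) * np.abs(z[:, None] - z[None, :])))


def depth_variance(weights, depths):
    """Weight-normalized variance of the depths along one ray (assumed variant)."""
    w = np.asarray(weights, dtype=float)
    z = np.asarray(depths, dtype=float)
    mean = np.sum(w * z) / np.sum(w)
    return float(np.sum(w * (z - mean) ** 2) / np.sum(w))


def normal_consistency(weights, normals, depth_normal):
    """sum_i w_i * (1 - n_i . N) for one ray, with unit normals."""
    w = np.asarray(weights, dtype=float)
    cosine = np.asarray(normals, dtype=float) @ np.asarray(depth_normal, dtype=float)
    return float(np.sum(w * (1.0 - cosine)))


# Two equally weighted Gaussians at depths 1 and 3 along the same ray.
print(depth_distortion([0.5, 0.5], [1.0, 3.0]))  # 1.0
print(depth_variance([0.5, 0.5], [1.0, 3.0]))    # 1.0
```

Both depth terms vanish when all weight sits at one depth, which is why they push the Gaussians along a ray to concentrate on a thin surface.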

We use the same data format as 3DGS; please follow the instructions here to prepare your dataset. Then you can train your model and extract a mesh.

```bash
# Generate bounding box
python process_data/convert_data_to_json.py \
    --scene_type outdoor \
    --data_dir /your/data/path

# Extract normal maps
# Use DSINE:
python -W ignore process_data/extract_normal.py \
    --dsine_path /your/dsine/code/path \
    --ckpt /your/ckpt/path \
    --img_path /your/data/path/images \
    --intrins_path /your/data/path/ \
    --output_path /your/data/path/normals

# Or use GeoWizard
python process_data/extract_normal_geo.py \
    --code_path ${CODE_PATH} \
    --input_dir /your/data/path/images/ \
    --output_dir /your/data/path/ \
    --ensemble_size 3 \
    --denoise_steps 10 \
    --seed 0 \
    --domain ${DOMAIN_TYPE} # outdoor indoor object
```

```bash
# training
# --model.resolution=2 for using downsampled images with factor 2
# --model.use_decoupled_appearance=True to enable decoupled appearance modeling if your images have changing lighting conditions
python train.py \
    --config=configs/reconstruct.yaml \
    --logdir=/your/log/path/ \
    --model.source_path=/your/data/path/ \
    --model.data_device=cpu \
    --model.resolution=2 \
    --wandb \
    --wandb_name vcr-gaus
```
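Downsampling by a factor (as with --model.resolution=2) shrinks the image while keeping the field of view, so intrinsics expressed in pixels shrink by the same factor. A minimal sketch of that bookkeeping, with a hypothetical helper name; it scales the principal point linearly, which ignores the half-pixel offset some pixel-center conventions apply.

```python
def downsample_intrinsics(width, height, fx, fy, cx, cy, factor):
    """Scale image size and pinhole intrinsics for a downsampling factor."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return {
        "width": round(width / factor),
        "height": round(height / factor),
        "fx": fx / factor,
        "fy": fy / factor,
        "cx": cx / factor,
        "cy": cy / factor,
    }


# A 1920x1080 image with a 1000-pixel focal length, downsampled by 2.
print(downsample_intrinsics(1920, 1080, 1000.0, 1000.0, 960.0, 540.0, 2))
```

Halving the resolution cuts the pixel count, and roughly the per-image memory, by about 4x.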

```bash
# extract the mesh after training
python tools/depth2mesh.py \
    --voxel_size 5e-3 \
    --max_depth 8 \
    --clean \
    --cfg_path /your/gaussian/path/config.yaml
```
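The --voxel_size and --max_depth flags suggest that depth2mesh.py fuses rendered depth maps into a truncated signed distance function (TSDF) volume before meshing, as 2DGS does; we have not verified this against the script. The NumPy sketch below shows one TSDF integration step for a single depth map. For brevity it assumes the camera sits at the world origin looking down +z (real fusion would first transform voxel centers by each camera's world-to-camera pose), and the function name is hypothetical. Depths beyond max_depth are ignored, mirroring what such a cutoff would do; a mesh would then be extracted from the zero level set, e.g. with marching cubes.

```python
import numpy as np


def integrate_tsdf(tsdf, weight, origin, voxel_size, depth, intrinsics, max_depth, trunc):
    """Fuse one depth map into a TSDF grid (camera assumed at the origin, looking down +z)."""
    fx, fy, cx, cy = intrinsics
    # Voxel centers in camera coordinates, shape (X, Y, Z, 3).
    idx = np.indices(tsdf.shape).transpose(1, 2, 3, 0)
    centers = np.asarray(origin, dtype=float) + (idx + 0.5) * voxel_size
    x, y, z = centers[..., 0], centers[..., 1], centers[..., 2]
    # Project voxel centers to their nearest pixel; guard against z <= 0.
    z_safe = np.where(z > 0, z, 1.0)
    u = np.round(fx * x / z_safe + cx).astype(int)
    v = np.round(fy * y / z_safe + cy).astype(int)
    h, w = depth.shape
    valid = (z > 0) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    observed = np.zeros_like(z)
    observed[valid] = depth[v[valid], u[valid]]
    # Drop missing depth and depth beyond max_depth.
    valid &= (observed > 0) & (observed <= max_depth)
    # Signed distance along the viewing axis, positive in front of the surface.
    sdf = observed - z
    valid &= sdf >= -trunc  # voxels far behind the surface stay unobserved
    tsdf_obs = np.clip(sdf / trunc, -1.0, 1.0)
    # Running weighted average; each observation has weight 1.
    new_weight = weight + valid
    fused = (tsdf * weight + tsdf_obs) / np.maximum(new_weight, 1.0)
    return np.where(valid, fused, tsdf), new_weight


# A wall at depth 1 seen by a tiny 3x3 camera; one column of 20 voxels along +z.
tsdf, weight = integrate_tsdf(
    np.ones((1, 1, 20)), np.zeros((1, 1, 20)), origin=(-0.05, -0.05, 0.0),
    voxel_size=0.1, depth=np.full((3, 3), 1.0), intrinsics=(1.0, 1.0, 1.0, 1.0),
    max_depth=8.0, trunc=0.3)
print(tsdf[0, 0, 8:12])  # sign flips between z = 0.95 and z = 1.05
```

A smaller voxel size gives finer meshes at a cubic cost in memory, which is the trade-off --voxel_size controls in grid-based fusion.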

This project is built upon 3DGS. Evaluation scripts for the DTU and Tanks and Temples datasets are taken from DTUeval-python and TanksAndTemples, respectively. We also use the normal estimators DSINE and GeoWizard, and the segmentation models SAM and Grounded-SAM. In addition, we use the pruning method from LightGaussian. We thank all the authors for their great work and repos.

If you find our code or paper useful, please cite:

```bibtex
@article{chen2024vcr,
  author = {Chen, Hanlin and Wei, Fangyin and Li, Chen and Huang, Tianxin and Wang, Yunsong and Lee, Gim Hee},
  title = {VCR-GauS: View Consistent Depth-Normal Regularizer for Gaussian Surface Reconstruction},
  journal = {arXiv preprint arXiv:2406.05774},
  year = {2024},
}
```

If you find the flattened 3D Gaussian useful, please kindly cite:

```bibtex

@article{chen2023neusg,

title={Neusg: Neural implicit surface reconstruction with 3d gaussian splatting guidance},

author={Chen, Hanlin and Li, Chen and Lee, Gim Hee},

journal={arXiv preprint arXiv:2312.00846},

year={2023}

}