Gaussian Grouping: Segment and Edit Anything in 3D Scenes

[Project Page]

arXiv 2023

ETH Zurich

We propose Gaussian Grouping, which extends Gaussian Splatting to jointly reconstruct and segment anything in open-world 3D scenes by lifting 2D SAM mask predictions to 3D. It also efficiently supports versatile 3D scene editing tasks. Refer to our paper for more details.

🔥🔥 2024/01/16: We released the LERF-Mask dataset and evaluation code.

2024/01/06: We released the 3D Object Removal & Inpainting code.

2023/12/20: We released the Install Notes and Training & Rendering code.

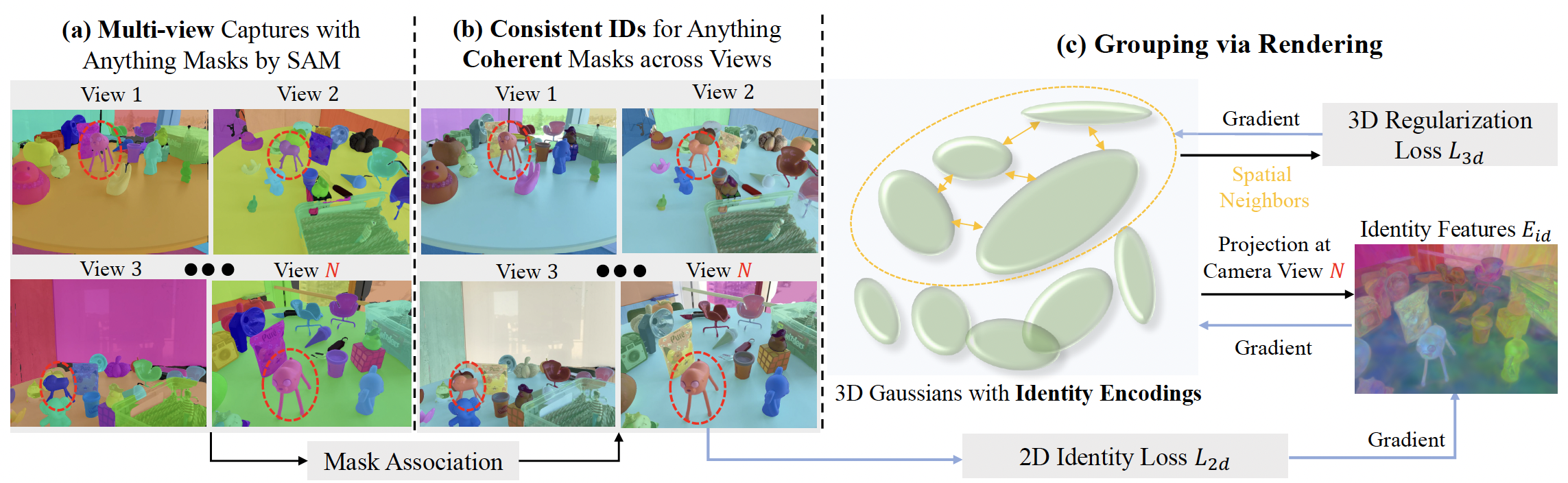

Recent Gaussian Splatting achieves high-quality, real-time novel-view synthesis of 3D scenes. However, it concentrates solely on appearance and geometry modeling and lacks fine-grained, object-level scene understanding. To address this issue, we propose Gaussian Grouping, which extends Gaussian Splatting to jointly reconstruct and segment anything in open-world 3D scenes. We augment each Gaussian with a compact Identity Encoding, allowing the Gaussians to be grouped according to their object instance or stuff membership in the 3D scene. Instead of resorting to expensive 3D labels, we supervise the Identity Encodings during differentiable rendering by leveraging 2D mask predictions from SAM, along with an introduced 3D spatial consistency regularization. Compared to the implicit NeRF representation, we show that the discrete and grouped 3D Gaussians can reconstruct, segment and edit anything in 3D with high visual quality, fine granularity and efficiency. Based on Gaussian Grouping, we further propose a local Gaussian Editing scheme, which shows efficacy in versatile scene editing applications, including 3D object removal, inpainting, colorization and scene recomposition.
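To make the supervision idea above more concrete, here is a minimal, dependency-free sketch of how a per-pixel identity feature might be rendered and scored. It is not the repository's implementation. The function names, the bias-free linear classifier, the cross-entropy form of the 2D term, and the KL-divergence form of the 3D consistency term are all assumptions chosen for illustration; see the paper and training code for the actual formulation.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def render_identity(encodings, alphas):
    """Front-to-back alpha compositing of per-Gaussian identity encodings
    for a single pixel. `encodings` and `alphas` are assumed to be sorted
    by depth, nearest Gaussian first."""
    dim = len(encodings[0])
    pixel = [0.0] * dim
    transmittance = 1.0
    for enc, alpha in zip(encodings, alphas):
        weight = alpha * transmittance
        for d in range(dim):
            pixel[d] += weight * enc[d]
        transmittance *= 1.0 - alpha
    return pixel

def classify(feature, weights):
    """Hypothetical linear classifier: one weight row per 2D mask identity."""
    logits = [sum(w * f for w, f in zip(row, feature)) for row in weights]
    return softmax(logits)

def identity_loss_2d(probs, target_id):
    """Cross-entropy against the mask identity that SAM assigns to the pixel
    (assumed form of the 2D supervision)."""
    return -math.log(probs[target_id])

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def consistency_loss_3d(probs, neighbour_probs):
    """Mean KL divergence between one Gaussian's identity distribution and
    those of its spatial neighbours (assumed form of the 3D regularizer)."""
    return sum(kl_divergence(probs, q) for q in neighbour_probs) / len(neighbour_probs)

# Toy usage: two Gaussians with 2-D identity encodings covering one pixel.
pixel_feature = render_identity([[1.0, 0.0], [0.0, 1.0]], [0.5, 0.5])
pixel_probs = classify(pixel_feature, [[1.0, 0.0], [0.0, 1.0]])
loss = identity_loss_2d(pixel_probs, 0) + consistency_loss_3d(pixel_probs, [[0.5, 0.5]])
```

The point of the sketch is that the identity feature is composited with the same weights as color, so a 2D mask loss on the rendered pixel can send gradients back to the individual Gaussians without any 3D labels.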

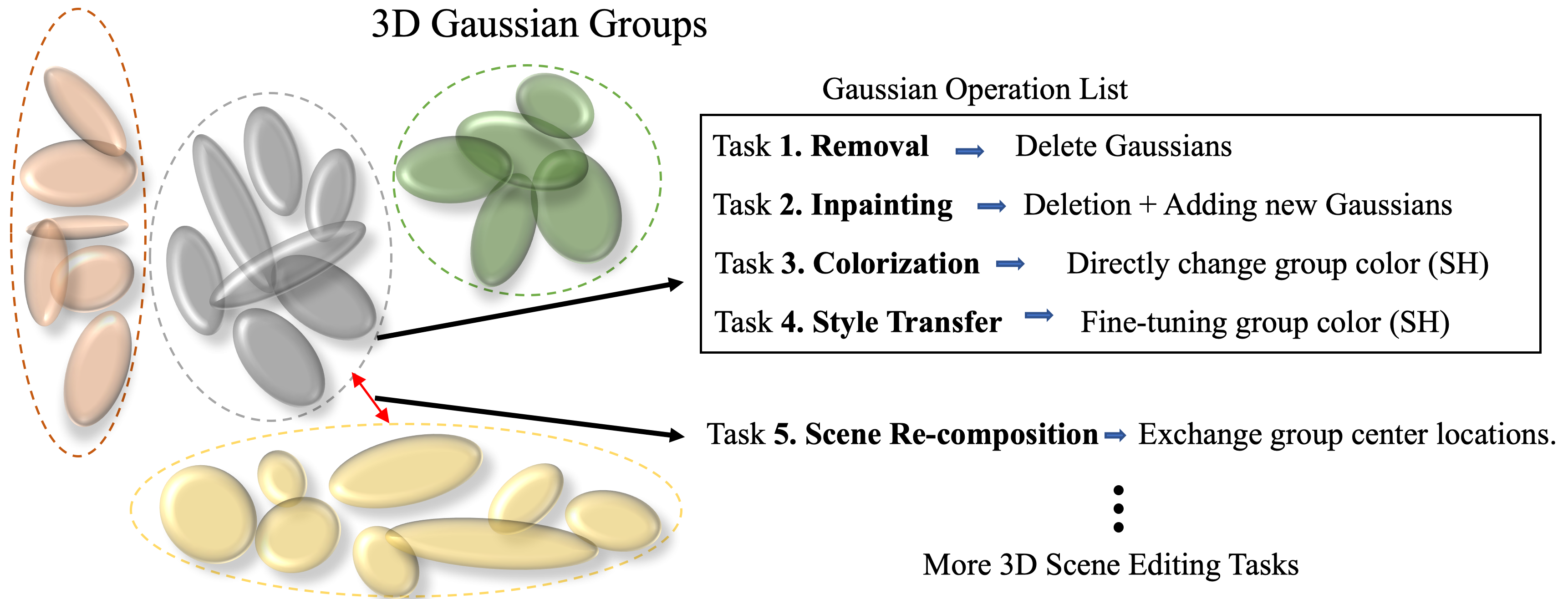

Local Gaussian Editing scheme: Grouped Gaussians after training. Each group represents a specific instance / stuff of the 3D scene and can be fully decoupled.
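Because each group can be decoupled from the rest of the scene, local edits reduce to selecting Gaussians by group and changing only those. The sketch below illustrates that idea on a toy data structure. The `Gaussian` class, its `group_id` field, and the three helper functions are hypothetical and are not part of this repository's API; the actual removal and inpainting workflow is described in the edit removal inpaint document.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Gaussian:
    position: tuple  # (x, y, z) centre
    color: tuple     # (r, g, b)
    group_id: int    # hypothetical: group assigned from the Identity Encoding

def remove_group(gaussians, group_id):
    """Object removal: drop every Gaussian that belongs to the group."""
    return [g for g in gaussians if g.group_id != group_id]

def recolor_group(gaussians, group_id, new_color):
    """Colorization: change the color of one group, leave the rest untouched."""
    return [
        replace(g, color=new_color) if g.group_id == group_id else g
        for g in gaussians
    ]

def translate_group(gaussians, group_id, offset):
    """Scene recomposition: move one group by a fixed offset."""
    return [
        replace(g, position=tuple(p + o for p, o in zip(g.position, offset)))
        if g.group_id == group_id else g
        for g in gaussians
    ]

# Toy scene: group 0 is background, group 1 is an object.
scene = [
    Gaussian((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), 0),
    Gaussian((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 1),
    Gaussian((2.0, 0.0, 0.0), (0.0, 1.0, 0.0), 1),
]
without_object = remove_group(scene, 1)
blue_object = recolor_group(scene, 1, (0.0, 0.0, 1.0))
```

Each helper returns a new list and leaves the input unchanged, which keeps edits to one group from affecting Gaussians in any other group.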

Gaussian Grouping can remove large-scale objects from whole 3D scenes in the Tanks & Temples dataset with greatly reduced artifacts. Zoom in for a better view.

github_object_removal1_short.mp4

Comparison on 3D object inpainting cases: SPIn-NeRF requires 5 hours of training, while our method achieves better inpainting quality with only 1 hour of training and 20 minutes of tuning.

github_inpainting_case1_short.mp4

github_inpainting_case2.mp4

Comparison on 3D object style transfer cases: Gaussian Grouping produces more coherent and natural transfer results across views, with a faithfully preserved background.

3d_object_style_transfer_case_short.mp4

Our Gaussian Grouping approach jointly reconstructs and segments anything in full open-world 3D scenes. The masks predicted by Gaussian Grouping have much sharper and more accurate boundaries than those of LERF.

github_3d_seg_case_short.mp4

Our Gaussian Grouping approach jointly reconstructs and segments anything in full open-world 3D scenes. We then perform 3D object editing on several objects concurrently.

github_multi_editing_case-2.mp4

You can refer to the install document to build the Python environment.

Then refer to the train document to train your own scene.

For evaluation on the LERF-Mask dataset proposed in our paper, you can refer to the dataset document.

You can select the 3D object for removal and inpainting after training. Details are in the edit removal inpaint document.

If you find Gaussian Grouping useful in your research or refer to the provided baseline results, please star ⭐ this repository and consider citing 📝:

@article{gaussian_grouping,
  title={Gaussian Grouping: Segment and Edit Anything in 3D Scenes},
  author={Ye, Mingqiao and Danelljan, Martin and Yu, Fisher and Ke, Lei},
  journal={arXiv preprint arXiv:2312.00732},
  year={2023}
}