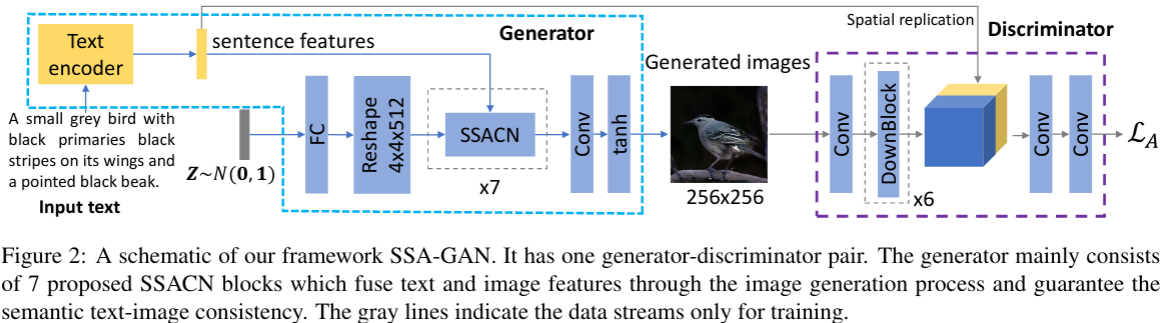

This repository contains the implementation of Text to Image Generation with Semantic-Spatial Aware GAN.

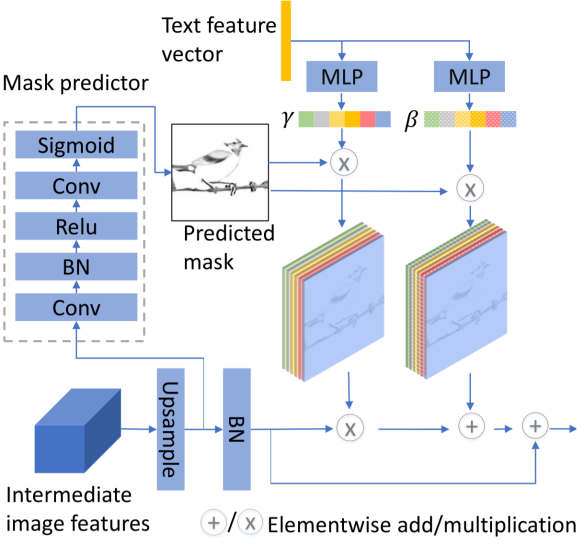

The structure of the semantic-spatial aware (SSA) block is shown below:

- python 3.6+

- pytorch 1.0+

- numpy

- matplotlib

- opencv
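A quick way to confirm that the packages listed above can be found is sketched below. It uses only the standard library. The helper name `check_dependencies` is hypothetical, and `torch` and `cv2` are the usual import names for PyTorch and OpenCV rather than something this repository specifies. The sketch does not check package versions.

```python
import importlib.util
import sys


def check_dependencies(modules=("torch", "numpy", "matplotlib", "cv2")):
    """Return {module_name: bool}, True when the module can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    # The requirements list asks for Python 3.6 or newer.
    print("python >= 3.6:", sys.version_info >= (3, 6))
    for name, found in check_dependencies().items():
        print(f"{name}: {'ok' if found else 'MISSING'}")
```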

- Download the preprocessed metadata for birds and coco and save them to data/

- Download the birds dataset and extract the images to data/birds/

- Download the coco dataset and extract the images to data/coco/

- Download the pre-trained DAMSM for CUB and save it to DAMSMencoders/

- Download the pre-trained DAMSM for coco and save it to DAMSMencoders/
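Before training, it can help to verify that the directories named in the download steps above exist. The following is a minimal sketch, assuming the commands are run from the repository root. The helper `missing_dirs` is hypothetical, and it checks only that the directories are present, not what they contain.

```python
from pathlib import Path

# Directories named in the setup steps; nothing is assumed about their contents.
EXPECTED_DIRS = ("data", "data/birds", "data/coco", "DAMSMencoders")


def missing_dirs(root=".", expected=EXPECTED_DIRS):
    """Return the expected directories that do not exist under `root`."""
    root = Path(root)
    return [d for d in expected if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    print("missing:", missing if missing else "none")
```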

You can download our trained models from our OneDrive repo.

Run main.py. Please adjust the args in the file as needed.

Run IS.py and test_lpips.py (remember to change the image path) to evaluate the IS and diversity scores, respectively.
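For reference, once class probabilities are available the Inception Score is the exponential of the mean KL divergence between each image's predicted distribution p(y|x) and the marginal p(y). The sketch below shows only that final step with NumPy. It is not the code in IS.py. The function name `inception_score` and the `probs` input (softmax outputs of an Inception classifier, one row per generated image) are assumptions made for illustration.

```python
import numpy as np


def inception_score(probs, eps=1e-12):
    """Compute exp(mean_x KL(p(y|x) || p(y))) from an (N, C) array of class probabilities."""
    probs = np.asarray(probs, dtype=np.float64)
    # Marginal class distribution p(y), averaged over all images.
    marginal = probs.mean(axis=0, keepdims=True)
    # Per-image KL divergence; eps guards against log(0).
    kl = np.sum(probs * (np.log(probs + eps) - np.log(marginal + eps)), axis=1)
    return float(np.exp(kl.mean()))
```

Identical predictions for every image give the minimum score of 1, while confident predictions spread evenly over C classes approach the maximum score of C.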

For evaluating the FID score, please use this repo https://github.com/bioinf-jku/TTUR.
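As background, FID is the Fréchet distance between two Gaussians fitted to Inception features of real and generated images: ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2)). The sketch below evaluates only this formula from precomputed means and covariances. It is an illustration under those assumptions, not the TTUR implementation, and the function name `frechet_distance` is hypothetical. The trace of the matrix square root is taken from the eigenvalues of S1 S2, which are real and non-negative when both covariances are positive semi-definite.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1, mu2 = np.asarray(mu1, dtype=np.float64), np.asarray(mu2, dtype=np.float64)
    sigma1 = np.asarray(sigma1, dtype=np.float64)
    sigma2 = np.asarray(sigma2, dtype=np.float64)
    diff = mu1 - mu2
    # Tr((S1 S2)^(1/2)) equals the sum of square roots of the eigenvalues of S1 S2.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    # Clip tiny negative values caused by floating-point error.
    tr_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)
```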

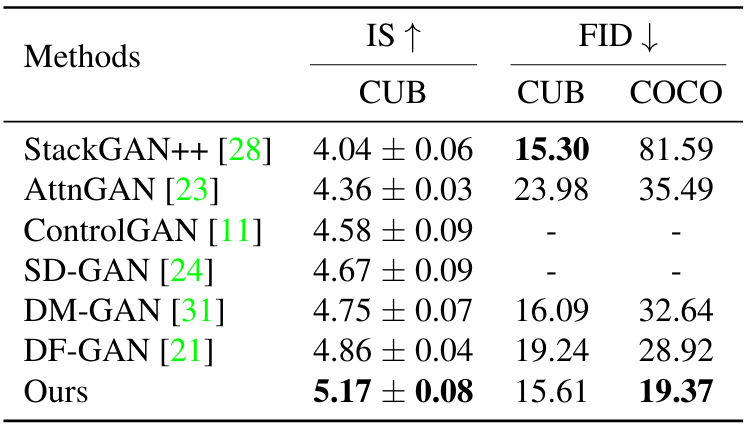

After training under xe loss for xxxxx epochs, you should get scores close to the following:

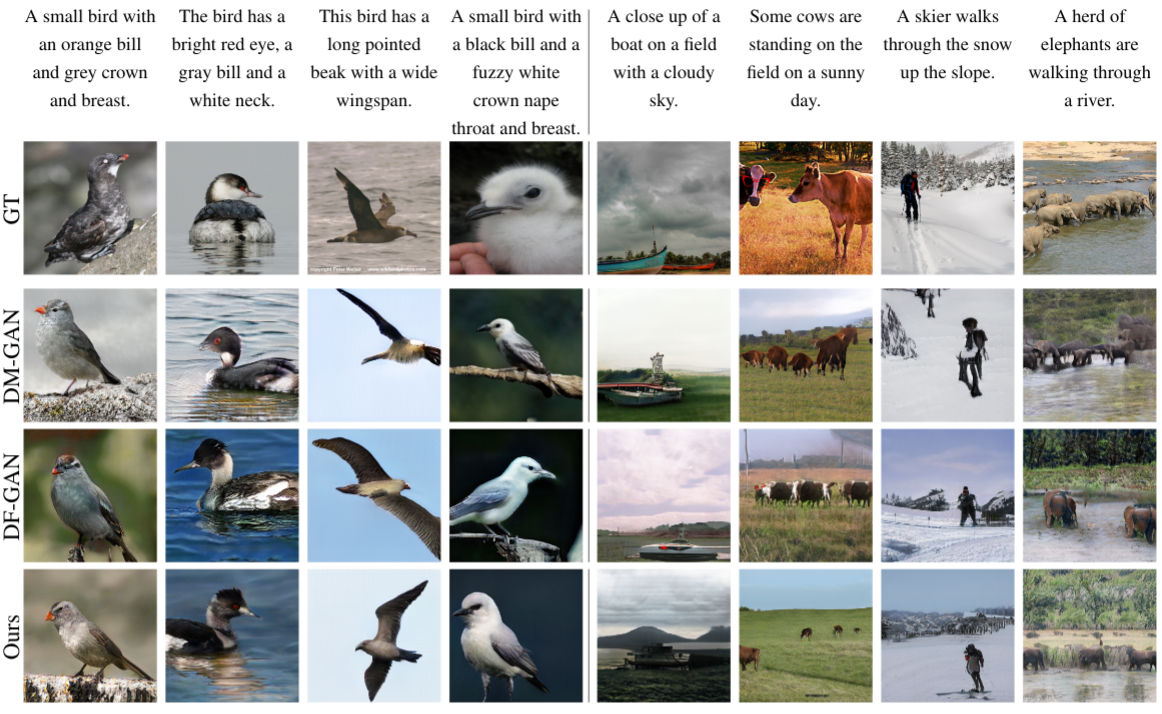

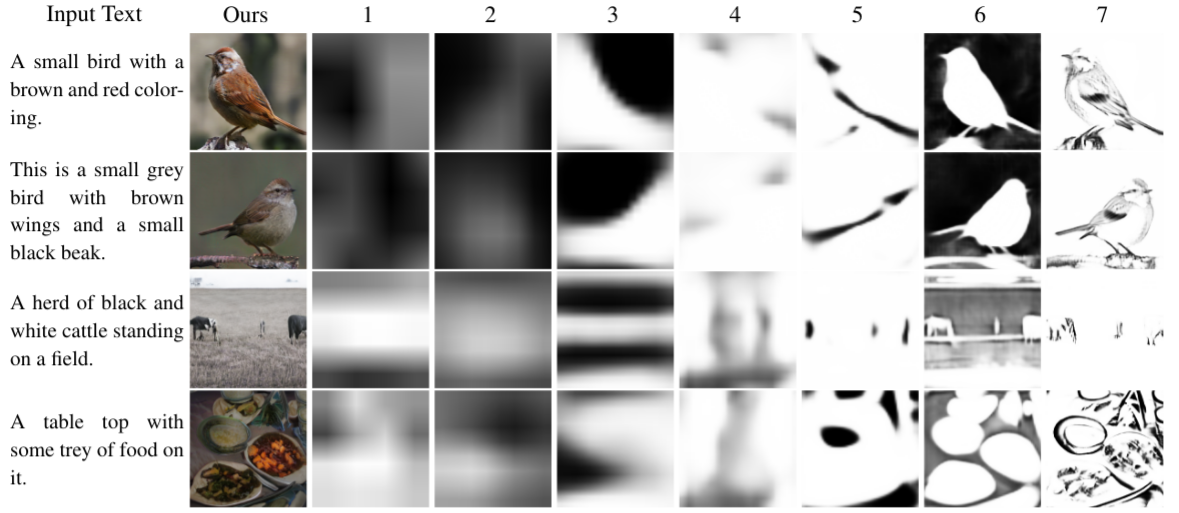

Some qualitative results on the coco and birds datasets from different methods are shown as follows:

The predicted mask maps at different stages are shown as follows:

If you find this repo helpful in your research, please consider citing our paper:

@article{liao2021text,
  title={Text to Image Generation with Semantic-Spatial Aware GAN},
  author={Liao, Wentong and Hu, Kai and Yang, Michael Ying and Rosenhahn, Bodo},
  journal={arXiv preprint arXiv:2104.00567},
  year={2021}
}

The code is released for academic research use only. For commercial use, please contact Wentong Liao.

This implementation borrows part of the code from DF-GAN.