This repo implements the system described in the paper:

Visual Odometry Revisited: What Should Be Learnt?

Huangying Zhan, Chamara Saroj Weerasekera, Jiawang Bian, Ian Reid

The demo video can be found here.

```bibtex
@article{zhan2019dfvo,
  title={Visual Odometry Revisited: What Should Be Learnt?},
  author={Zhan, Huangying and Weerasekera, Chamara Saroj and Bian, Jiawang and Reid, Ian},
  journal={arXiv preprint arXiv:1909.09803},
  year={2019}
}
```

This repo includes:

- the frame-to-frame tracking system DF-VO;

- evaluation scripts for visual odometry;

- trained models and VO results.

This code was tested with Python 3.6, CUDA 9.0, Ubuntu 16.04, and PyTorch.

We suggest using Anaconda to install the prerequisites.

```bash
conda env create -f requirement.yml -p dfvo # install prerequisites
conda activate dfvo # activate the environment [dfvo]
```

The main dataset used in this project is the KITTI Driving Dataset. After downloading the dataset, create a soft link to its sequences folder in the current repo:

```bash
ln -s KITTI_ODOMETRY/sequences dataset/kitti_odom/odom_data
```
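Before running DF-VO, it can help to confirm that the link resolves to the expected layout. The sketch below assumes the standard KITTI odometry structure, where each sequence folder (e.g. 00) holds the color images image_2 and image_3 plus calib.txt and times.txt, i.e. that the color images and calibration files were both downloaded and unpacked into the same sequences folder. The helper `check_kitti_odom` and the `EXPECTED_ITEMS` list are hypothetical, not part of this repo.

```python
from pathlib import Path

# Assumed contents of every KITTI odometry sequence folder:
# left/right color images, calibration and timestamps.
EXPECTED_ITEMS = ("image_2", "image_3", "calib.txt", "times.txt")


def check_kitti_odom(root="dataset/kitti_odom/odom_data", sequences=None,
                     expected=EXPECTED_ITEMS):
    """Return {sequence_id: [missing items]} for sequences that look incomplete.

    An empty dict means every requested sequence contains all expected items.
    """
    root = Path(root)
    if sequences is None:
        # Sequences 00-10 are the ones released with ground truth.
        sequences = [f"{i:02d}" for i in range(11)]
    problems = {}
    for seq in sequences:
        seq_dir = root / seq
        missing = [item for item in expected if not (seq_dir / item).exists()]
        if missing:
            problems[seq] = missing
    return problems


if __name__ == "__main__":
    issues = check_kitti_odom()
    if issues:
        for seq, missing in sorted(issues.items()):
            print(f"sequence {seq}: missing {', '.join(missing)}")
    else:
        print("KITTI odometry layout looks complete")
```

If only the stereo right images are unused by your configuration, pass a shorter `expected` tuple.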

Our trained models can be downloaded here; please save them into the directory model_zoo/.

The main algorithm is implemented in vo_moduels.py.

We provide several configurations for running the algorithm:

```bash
# Example 1: run default kitti setup
python run.py -d options/kitti_default_configuration.yml

# Example 2: Run custom kitti setup
# kitti_default_configuration.yml and kitti_stereo_0.yml are merged
python run.py -c options/kitti_stereo_0.yml
```
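One common way to "merge" a custom configuration into a default one is a recursive overlay: nested sections are merged key by key, and any other value in the custom file replaces the default. The sketch below assumes that behaviour; run.py may merge differently, so treat `merge_config` and `load_merged_config` as hypothetical helpers. Loading YAML uses PyYAML's `yaml.safe_load`, imported lazily so the merge logic works on plain dicts without it.

```python
import copy


def merge_config(default, custom):
    """Recursively overlay `custom` on `default`; neither input is modified.

    Nested dicts are merged key by key; any other value in `custom`
    (scalars, lists, None) replaces the default outright.
    """
    merged = copy.deepcopy(default)
    for key, value in custom.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def load_merged_config(default_path, custom_path):
    """Load two YAML files and overlay the second on the first (needs PyYAML)."""
    import yaml  # imported here so merge_config has no third-party dependency

    with open(default_path) as f:
        default = yaml.safe_load(f) or {}
    with open(custom_path) as f:
        custom = yaml.safe_load(f) or {}
    return merge_config(default, custom)


if __name__ == "__main__":
    # Hypothetical option names, for illustration only.
    default = {"seq": "10", "result_dir": "result/tmp/0",
               "tracking": {"mode": "mono", "max_frames": 100}}
    custom = {"tracking": {"mode": "stereo"}}
    print(merge_config(default, custom))
```

With this design, a custom file only needs to list the options it changes; everything else is inherited from the default file.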

The result (trajectory pose file) is saved in result_dir defined in the configuration file.
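Assuming the pose file follows the KITTI odometry format (one line per frame, 12 floats giving the top 3x4 block [R | t] of the camera pose relative to the first frame, row by row), it can be loaded and inspected as below. The functions `load_kitti_poses`, `relative_motions` and `path_length` are hypothetical helpers, not part of this repo.

```python
import numpy as np


def load_kitti_poses(path):
    """Read a KITTI-format pose file into an (N, 4, 4) array of SE(3) matrices."""
    raw = np.loadtxt(path, dtype=np.float64, ndmin=2)
    if raw.shape[1] != 12:
        raise ValueError(f"expected 12 values per line, got {raw.shape[1]}")
    # Start from identity so the bottom row is [0, 0, 0, 1].
    poses = np.tile(np.eye(4), (raw.shape[0], 1, 1))
    poses[:, :3, :] = raw.reshape(-1, 3, 4)
    return poses


def relative_motions(poses):
    """Frame-to-frame motions inv(T_k) @ T_{k+1}, shape (N-1, 4, 4)."""
    return np.linalg.inv(poses[:-1]) @ poses[1:]


def path_length(poses):
    """Total distance travelled by the camera centre, in the file's units."""
    steps = np.diff(poses[:, :3, 3], axis=0)
    return float(np.linalg.norm(steps, axis=1).sum())
```

The relative motions are what a frame-to-frame tracker estimates; chaining them (T_{k+1} = T_k @ rel_k) recovers the global trajectory stored in the file.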

Please check kitti_default_configuration.yml for more configuration options.

Note that we have cleaned and optimized the code for readability. This changes the randomness, so the quantitative results differ slightly from those reported in the paper.

The original results, including related works, can be found here.

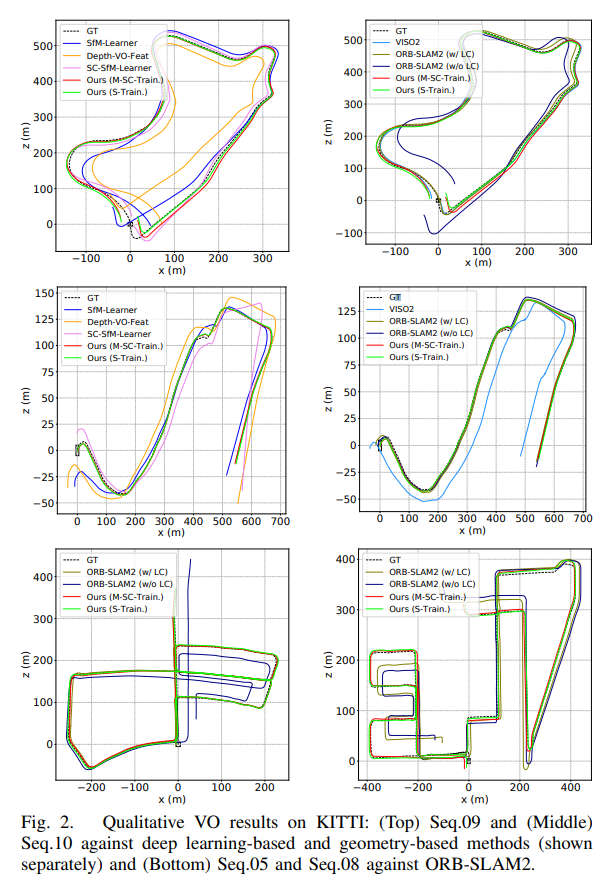

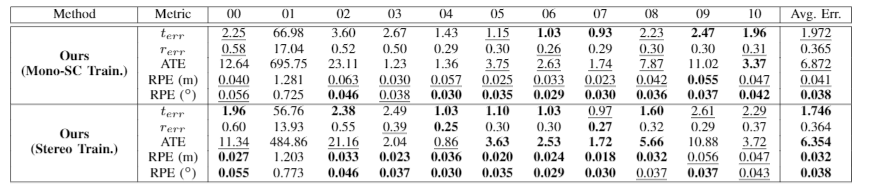

The KITTI Odometry benchmark contains 22 stereo sequences, 11 of which (00 to 10) are provided with ground truth. These 11 sequences are used for evaluating visual odometry:

```bash
python tool/evaluation/eval_odom.py --result result/tmp/0 --align 6dof
```
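We assume `--align 6dof` denotes a rigid alignment (rotation and translation, no scale) of the estimated trajectory to the ground truth before errors are computed; please check eval_odom.py for its exact behaviour. The sketch below shows such an alignment via the Kabsch/Umeyama SVD solution without the scale term, followed by the absolute trajectory error (ATE) as one simple example of an alignment-dependent metric. The official KITTI metrics are segment-based relative translation and rotation errors, which this sketch does not reproduce; `align_rigid` and `ate_rmse` are hypothetical helpers.

```python
import numpy as np


def align_rigid(est, gt):
    """Least-squares rigid alignment of matched (N, 3) position arrays.

    Returns R, t minimising sum_i ||R @ est_i + t - gt_i||^2.
    """
    est = np.asarray(est, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    mu_est, mu_gt = est.mean(axis=0), gt.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets
    H = (est - mu_est).T @ (gt - mu_gt)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_gt - R @ mu_est
    return R, t


def ate_rmse(est, gt):
    """Root-mean-square position error after rigid (6-DoF) alignment."""
    est = np.asarray(est, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    return float(np.sqrt(np.mean(np.sum((aligned - gt) ** 2, axis=1))))
```

A 7-DoF (similarity) alignment would additionally estimate a global scale, which matters for monocular methods whose trajectory scale is ambiguous.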

For more information about the evaluation toolkit, please check the toolbox page or the wiki page.

For academic usage, the code is released under the permissive MIT license. For any commercial purpose, please contact the authors.

Some of the code was borrowed from the excellent works monodepth2, LiteFlowNet, and pytorch-liteflownet. The borrowed files are licensed under their respective original licenses.

- Release more pretrained models

- Release more results

- (maybe) training code: cleaning it up will take longer, because the current training code depends on other projects.