- Aviral Singh (aviralksingh@gmail.com)

- Marco Marasca (marascamarco@gmail.com)

- Thomas Brakel (twbrakel@gmail.com)

- Bilal Elsheemy (belaalelsheemy93@gmail.com)

- Chuqiao Dong (chd415@lehigh.edu)

This repository contains the code for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car developed by the team SuperAI.

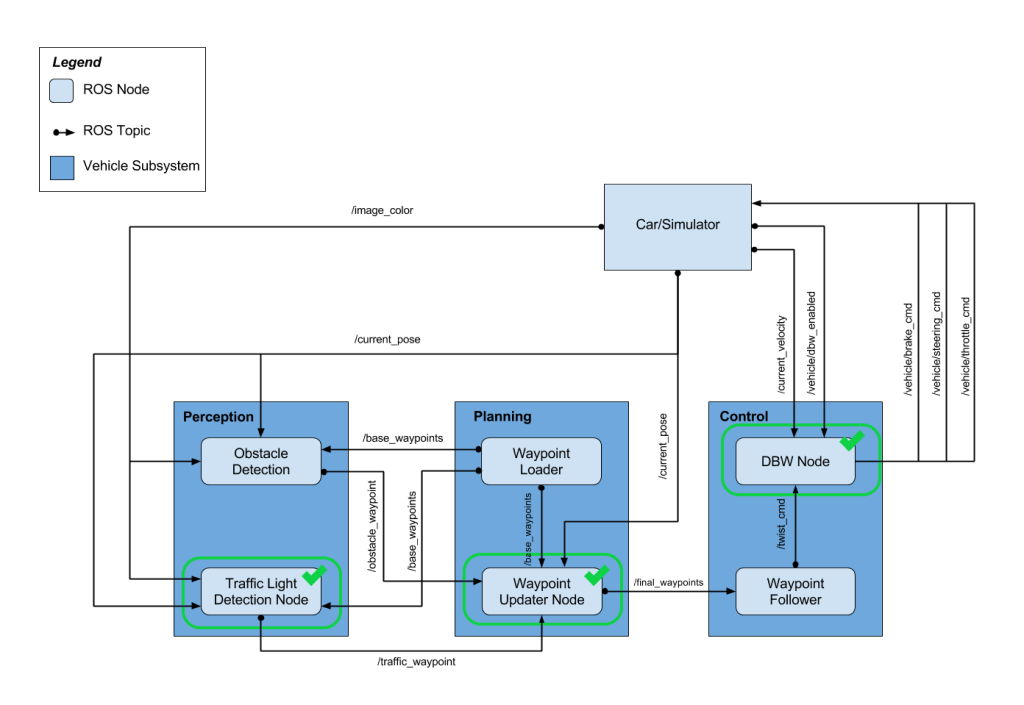

It consists of a set of components implemented and deployed as ROS nodes that work together in order to drive Carla (Udacity's self-driving car) autonomously around a test track. In particular the project tackles 3 different sub-system of the vehicle: Perception, Planning and Control:

System Architecture

Among the components depicted in the picture above, the team focused on the implementation and integration of the following 3 nodes:

- Traffic Light Detection: Part of the perception sub-system, the node takes care of detecting traffic lights as well as classifying their state

- Waypoint Updater: Part of the planning sub-system, takes care of generating a trajectory (as a set of waypoints with their respective target velocities) taking into account the detected traffic lights in the environment

- Drive by Wire Controller: Part of the control sub-system, takes care of translating the Twist Messages generated by the waypoint follower into throttle, brake and steering values

The traffic light detection node is in charge of the part of the perception sub-system that needs to detect incoming traffic lights and their state. In this simple implementation the detector computes the position (in term of waypoint index) of the upcoming traffic light if its state is currently detected as a red light, so that the waypoint updater can take the upcoming red light in generating a trajectory.

The node subscribes to 3 topics:

- /base_waypoints: The topic publishes the waypoints that the car should follow (in this project it contains all the waypoints)

- /vehicle/traffic_lights: The topic publishes the position of the traffic lights are and their state (only in simulator)

- /current_pose: The topic publishes the current position of the vehicle

- /image_color: The topic publishes the RGB image captured by the camera

With the information of the current pose and the traffic lights the node computes the distance to the closest traffic light and if within a reasonable range runs a detection on the whole image to establish the traffic light state (if any). If a traffic light is detected and its state is red the node publishes (topic /traffic_waypoint) the waypoint index at which the traffic light is detected.

Note: the simulator publishes the traffic light state, so in order to avoid running the real detector (e.g. for testing) is it possible to disable the detector, setting the value of the use_light_state to True in the sim_traffic_light_config.yaml configuration file.

The detector uses Tensorflow to run a variant of a Single Shot MultiBox Detector that uses as feature extractor MobileNetV2. The model weights used by the included graph are pre-trained on the COCO Dataset that already contains the traffic light category and provided by the Tensorflow Object Detection API.

The model was fine-tuned on 3 different classes corresponding to the various states of the traffic lights, in order to fold the object detection, localization and classification into a single end-to-end model.

The model was fine-tuned for the task at end using labelled images from various sources, including the Udacity Simulator, the Udacity Training Bag and images captured by Carla from real runs at the test lot of Udacity (Download here). Note that we deploy a single model that runs for both the simulator and for the test lot, as the model generalized well enough to recognize various images.

The images used for training were labelled semi-automatically first running a pre-trained high accuracy object detection model (Faster RCNN) and then manually validated using LabelImg. The final dataset used for both training, validation and testing can be downloaded from here.

Various models were fine-tuned and tested, for more details about the whole process and the evaluation results see the dedicated Traffic Light Detection Repository.

The waypoint updater node takes care of generating a trajectory in terms of waypoints so that the waypoint follower can generate the correct Twist Messages consumed by the DBW Node. Each of the waypoints are generated from a subset of the overall set of waypoints provided according to the vehicle position (published in the /current_pose topic). For each waypoint a target velocity is generated to smoothen the transition between each waypoint.

The node also subscribes to the /traffic_waypoint topic published by the Traffic Light Detection Node in order to adjust the waypoints in the presence of a red light:

Finally the node publishes the list of waypoints ahead of the vehicle to the /final_waypoints topic.

Udacity's self-driving car Carla is equipped with a drive-by-wire (DBW) system, which controls the throttle, brake, and steering electronically.

The DBW Node is responsible for the vehicle motion control by sending throttle, brake and steering commands. The node subscribes to the /current_velocity topic along with the /twist_cmd topic to receive target linear and angular velocities (generated by the waypoint follower node from the waypoints published by the waypoint updater. The DBW node primarily defines the communication interface. This node publishes throttle, brake, and steering commands to the /vehicle/throttle_cmd, /vehicle/brake_cmd, and /vehicle/steering_cmd topics. The twist_controller contains the algorithms used to generate the control values.

The default yaw_controller function was used to generate the steering target. No significant deviation or undesirable behaviour was experienced using this function.

The longitudinal control (throttle/brake) relies on the speed target generated by the waypoint updater node. A low pass filter is used to remove high-frequency noise from the measured vehicle velocity. Separate PID controllers generate the throttle and brake commands (see lines 72-79 in twist_controller), using different gains summarized in the Table below:

| P | I | D | |

|---|---|---|---|

| Throttle | 0.40 | 0.05 | 0.0 |

| Brake | 100 | 0.0 | 1.0 |

Note: the throttle demand is normalized to [0:1], whereas the brake command is a physical torque value so the difference in magnitude is due to the units. The main point of interest is that the braking is more aggressive and reactive to slow the vehicle, compared to the throttle response. The throttle and brake commands are generated, within maximal limits specifed by parameters (EgoParam.accel_limit for throttle and 5000 for brake).

Under acceleration, integral gain is used to achieve higher accuracy to the speed target while cruising. Only proportional and derivative gains are used under braking so no residual brake demand is present when the speed target changes (e.g. due to a green light).

The brake and throttle commands are exclusive through a conditional branch so they can not be applied simultaneously. In addition, the brake PID controller is reset under acceleration and the throttle controller is reset during a braking event, to ensure a smooth transition between the two controllers.

In order to hold the vehicle stationary, a fixed brake torque is requested when the vehicle speed is less than a threshold (0.2 m/s) and the speed target is 0.0.

-

Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahir. Ubuntu downloads can be found here.

-

Follow these instructions to install ROS

- ROS Kinetic if you have Ubuntu 16.04.

- ROS Indigo if you have Ubuntu 14.04.

Note: If using a server without GUI support ROS can be installed using the

ROS Baseoption:sudo apt-get install ros-kinetic-ros-base

In this case you will need to later add the cv_bridge module:

sudo apt-get install ros-(ROS version name)-cv-bridge

-

Install Dataspeed DBW, use the One Line SDK Install (binary) to install the SDK on a workstation that already has ROS installed.

-

Download the Udacity Simulator.

If using a Virtual Machine to install Ubuntu, use the following configuration as minimum:

- 2 CPU

- 2 GB system memory

- 25 GB of free hard drive space

Udacity provides a VM Disk Image that has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using it.

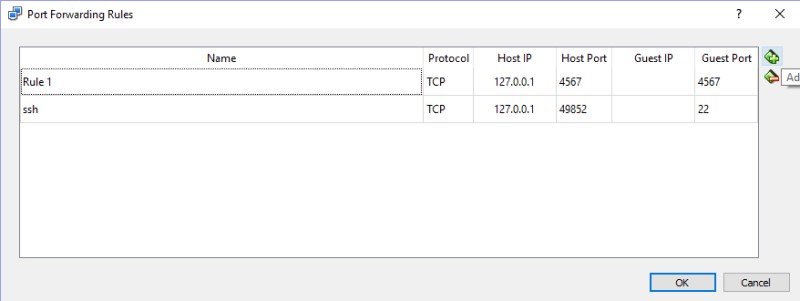

Note: If running in a VM port 4567 needs to be forwarded, for example in Virtual Box:

- First open up Oracle VM VirtualBox

- Click on the default session and select settings.

- Click on Network, and then Advanced.

- Click on Port Forwarding

- Click on the green plus, adds new port forwarding rule.

- Add a rule that assigns 4567 as both the host port and guest Port, as in the screenshot:

Build the docker container

docker build . -t capstoneRun the docker file

docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstoneROS can be installed in the Windows Subsystem for Linux, simply install Ubuntu Xenial 16.04 and follow the Native Installation instructions selected the ROS Base installation option.

To install multiple versions of Ubuntu (in case you already have a different version of Ubuntu) use LxRunOffline.

-

Clone the project repository

git clone https://github.com/marcomarasca/SDCND-SuperAI-Capstone.git

-

Install python dependencies

cd CarND-Capstone pip install -r requirements.txtNote: If you run into

IndexError: tuple index out of rangeplease update Pillow:sudo apt-get install --upgrade Pillow -

Make and run styx

cd ros catkin_make source devel/setup.sh roslaunch launch/styx.launch

-

Run the simulator