Created with the TechZone Accelerator Toolkit

This collection of Terraform automation bundles has been crafted from a set of Terraform modules created by Ecosystem Engineering.

- 08/2022 - Updated IBM Cloud Storage Class support

- 05/2022 - Usability updates and Bug Fixes

- 05/2022 - Bug Fix Release

- 04/2022 - Initial Release

This collection of Turbonomic Terraform automation layers has been crafted from a set of Terraform modules created by the IBM GSI Ecosystem Lab team, part of the IBM Partner Ecosystem organization. Please contact Matthew Perrins mjperrin@us.ibm.com, Vijay Sukthankar vksuktha@in.ibm.com, Sean Sundberg seansund@us.ibm.com, Tom Skill tskill@us.ibm.com, or Andrew Trice amtrice@us.ibm.com for more details, or raise an issue on the repository.

The automation supports the installation of Turbonomic on three cloud platforms: AWS, Azure, and IBM Cloud.

The Turbonomic automation assumes you already have an OpenShift cluster configured on your cloud of choice. The supported managed options are ROSA for AWS, ARO for Azure, and ROKS for IBM Cloud.

Before you start to install and configure Turbonomic, you will need to identify what your target infrastructure is going to be. You can start from scratch and use one of the pre-defined reference architectures from IBM, or bring your own. We recommend installing on an OpenShift cluster with two worker nodes, each with 4 CPUs and 16 GB of memory.

The reference architectures are provided in three different forms, with increasing security and associated sophistication to support production configuration. These three forms are as follows:

- Quick Start - a simple architecture to quickly get an OpenShift cluster provisioned

- Standard - a standard production deployment environment with typical security protections, private endpoints, VPN server, key management encryption, etc.

- Advanced - a more advanced deployment that employs network isolation to securely route traffic between the different layers.

For each of these reference architectures, we have provided a detailed set of automation to create the environment for the software. If you do not have an OpenShift environment provisioned, please use one of these; they are optimized for the installation of this solution.

| Cloud Platform | Automation and Documentation |
|---|---|
| TechZone for IBMers and Partners | You can provision ARO, ROSA, and ROKS through IBM TechZone. This is only supported for IBMers and IBM Partners. |
| IBM Cloud | IBM Cloud Quick Start, IBM Cloud Standard |
| AWS | AWS Quick Start, AWS Standard |
| Azure | Azure Quick Start, Azure Standard |
| Bring Your Own Infrastructure | You will need to set up GitOps and storage details in the following steps. |

Within this repository you will find a set of Terraform template bundles that embody best practices for provisioning Turbonomic in multiple cloud environments. This README.md describes the SRE steps required to provision the Turbonomic software.

This suite of automation can be used for a Proof of Technology environment, or used as a foundation for production workloads with a fully working end-to-end cloud-native environment. The software installs using GitOps best practices with Red Hat OpenShift GitOps.

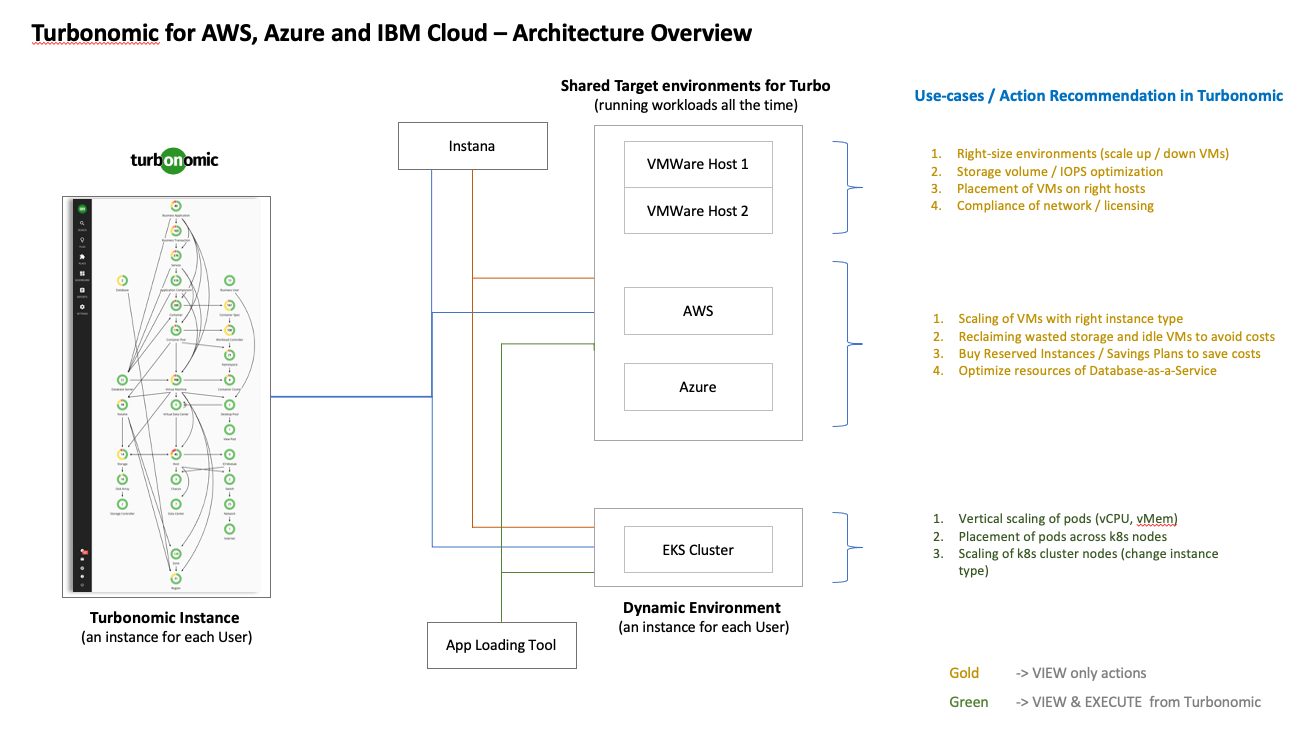

The following reference architecture represents the logical view of how Turbonomic works after it is installed. After obtaining a license key, you will need to register your data sources. They can range from other Kubernetes environments to VMware and virtual machines.

The following instructions will help you install Turbonomic into AWS, Azure, and IBM Cloud OpenShift Kubernetes environments.

To use Turbonomic, you are required to install a license key. For proofs of concept, IBM Partners and IBMers can obtain a license key using the steps provided below.

For Partners follow these steps:

- For PoCs/PoTs, Partners can download a license key from the PartnerWorld Software Catalog.

- Search the software catalog for M07FSEN Turbonomic Application Resource Management On-Prem 8.6 for installation on Kubernetes. Once found, download the installation package. Note: this file is 12 GB in size.

- Unzip the downloaded package to get the Turbonomic license key. The package contains the license file for Turbonomic, with a name similar to `TURBONOMIC-ARM.lic`.

- This file is covered by the Turbonomic ARM part numbers (P/N), which are currently available under IBM PPA terms and conditions.

For IBMers you can download a license key using these steps:

- Go to XL Leverage

- Search with keyword: turbonomic

- Select the package M07FSEN Turbonomic Application Resource Management On-Prem 8.6 for installation on Kubernetes and download the installation package. Note: this file is 12 GB in size.

- Unzip the downloaded package to get the Turbonomic license key. The package contains the license file for Turbonomic, with a name similar to `TURBONOMIC-ARM.lic`.

- This file is covered by the Turbonomic ARM part numbers (P/N), which are currently available under IBM PPA terms and conditions.

The Turbonomic automation is broken into several layers of automation. Each layer enables SRE activities to be optimized. This automation stack can be used stand-alone on an existing OpenShift cluster running on any cloud or on-premises infrastructure or in combination with provided infrastructure bundles.

| BOM ID | Name | Description | Run Time |
|---|---|---|---|
| 105 | 105 - Existing OpenShift | Logs into an existing OpenShift cluster and retrieves cluster configuration information. | 2 min |
| 200 | 200 - OpenShift GitOps | Sets up OpenShift GitOps infrastructure within the provided cluster and the GitOps repository that will hold the cluster configuration. By default, the automation will provision a GitOps repository on a Gitea instance running within the cluster. | 10 min |
| 250 | 250 - Turbonomic Multi Cloud | Provisions Turbonomic into a multi-cloud environment; AWS, Azure, and IBM Cloud are supported. | 10 min |

At this time, the most reliable way of running this automation is with Terraform on your local machine, either through a bootstrapped container image or with native tools installed. We provide a container image that has all the common SRE tools installed (see CLI Tools Image and Source Code for CLI Tools).

Before you start the installation, please install the prerequisite tools on your machine.

- Have access to an IBM Cloud account. An Enterprise account is best for workload isolation, but if you only have a Pay-As-You-Go account, this set of Terraform can be run in that level of account.

- At this time, the most reliable way of running this automation is with Terraform on your local machine, either through a bootstrapped Docker image or a virtual machine. We provide both a container image and a virtual machine cloud-init script that have all the common SRE tools installed.

We recommend using Docker Desktop if choosing the container image method, and Multipass if choosing the virtual machine method. Detailed instructions for downloading and configuring both Docker Desktop and Multipass can be found in RUNTIMES.md.

The installation process will use a standard GitOps repository that has been built using the modules to support the Turbonomic installation. The automation is consistent across three cloud environments: AWS, Azure, and IBM Cloud.

- The first step is to clone the automation code to your local machine. Run this git command in your favorite command-line shell.

```shell
git clone https://github.com/IBM/automation-turbonomic
```

- Navigate into the `automation-turbonomic` folder using your command line. The README.md has comprehensive instructions on how to install this into cloud environments other than TechZone. This document focuses on getting it running in an environment requested from TechZone.

- Next, you will need to set up your `credentials.properties` file. This will enable secure access to your cluster. Copy `credentials.template` to `credentials.properties`.

```shell
cp credentials.template credentials.properties
```

- Provide values for the variables in `credentials.properties`. (Note: `*.properties` has been added to `.gitignore` to ensure that the file containing the API key cannot be checked into Git.)

  - `TF_VAR_server_url` - The server URL of the existing OpenShift cluster where Turbonomic will be installed.
  - `TF_VAR_cluster_login_token` - The token used to log into the existing OpenShift cluster.
  - `TF_VAR_gitops_repo_host` - (Optional) The host for the git repository (e.g. github.com, bitbucket.org). Supported Git servers are GitHub, GitHub Enterprise, GitLab, Bitbucket, Azure DevOps, and Gitea. If this value is left commented out, the automation will default to using Gitea.
  - `TF_VAR_gitops_repo_username` - (Optional) The username on the git server host that will be used to provision and access the GitOps repository. If `gitops_repo_host` is blank, this value will be ignored and the Gitea credentials will be used.
  - `TF_VAR_gitops_repo_token` - (Optional) The personal access token that will be used to authenticate to the git server to provision and access the GitOps repository. The user should have the necessary access in the org to create the repository, and the token should have `delete_repo` permission. If the host is blank, this value will be ignored and the Gitea credentials will be used.
  - `TF_VAR_gitops_repo_org` - (Optional) The organization/owner/group on the git server where the GitOps repository will be provisioned/found. If not provided, the org will default to the username.
  - `TF_VAR_gitops_repo_project` - (Optional) The project on the Azure DevOps server where the GitOps repository will be provisioned/found. This value is only required for repositories on Azure DevOps.
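For illustration, a filled-in `credentials.properties` for a cluster whose GitOps repository is hosted on GitHub might look like the sketch below. It assumes the file is made of shell `export` statements, which is consistent with loading it via `source credentials.properties`; every value shown is a placeholder, not a real endpoint or token.

```shell
# credentials.properties: example only, every value below is a placeholder.
# Assumption: the file is plain shell, loaded with `source credentials.properties`.

# Required: the existing OpenShift cluster where Turbonomic will be installed.
export TF_VAR_server_url="https://api.mycluster.example.com:6443"
export TF_VAR_cluster_login_token="sha256~REPLACE_WITH_YOUR_TOKEN"

# Optional: use GitHub instead of the default in-cluster Gitea instance.
# Comment these out to fall back to Gitea.
export TF_VAR_gitops_repo_host="github.com"
export TF_VAR_gitops_repo_username="my-git-user"
export TF_VAR_gitops_repo_token="REPLACE_WITH_YOUR_PAT"
export TF_VAR_gitops_repo_org="my-git-org"

# Only needed when the repository lives on Azure DevOps.
# export TF_VAR_gitops_repo_project="my-devops-project"
```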

- We are now ready to start installing Turbonomic. Launch the automation runtime.

  - If using Docker Desktop, run `./launch.sh`. This will start a container image with the prompt opened in the `/terraform` directory.
  - If using Multipass, run `multipass shell cli-tools` to start the interactive shell, and `cd` into the `/automation/{template}` directory, where `{template}` is the folder you cloned this repo into. Be sure to run `source credentials.properties` once in the shell.

- Run the `setup-workspace.sh` command to configure a workspace environment that will provision an instance of Turbonomic. Two arguments should be provided: `-p` for the platform (which can be `azure`, `aws`, or `ibm`) and `-n` to supply a prefix name.

```shell
./setup-workspace.sh -p ibm -n turbo01
```
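If you script this step, a small guard can catch an unsupported platform value before the workspace is generated. The `check_workspace_args` helper below is hypothetical (it is not part of the repository) and only checks the two arguments described above: a platform of `azure`, `aws`, or `ibm`, and a non-empty prefix.

```shell
# Hypothetical helper (not shipped with the repository): validate the
# arguments before calling ./setup-workspace.sh.
# Usage: check_workspace_args <platform> <prefix>
check_workspace_args() {
  case "$1" in
    azure|aws|ibm) ;;  # the three platforms the automation supports
    *)
      echo "Unsupported platform '$1' (expected azure, aws, or ibm)" >&2
      return 1
      ;;
  esac
  if [ -z "${2:-}" ]; then
    echo "A name prefix is required (for example: turbo01)" >&2
    return 1
  fi
}
```

For example: `check_workspace_args ibm turbo01 && ./setup-workspace.sh -p ibm -n turbo01`.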

- Two configuration files have been created: `turbonomic.tfvars` and `gitops.tfvars`. `turbonomic.tfvars` contains the variables specific to the Turbonomic install, and `gitops.tfvars` contains the variables that define the GitOps configuration. Inspect both files to see if there are any variables that should be changed. (The `setup-workspace.sh` script has generated these two files with default values, and they can be used without updates if desired.)

To apply all of the layers in one pass, run the following from the `/workspace/current` directory:

```shell
./apply-all.sh
```

The script will run through each of the Terraform layers in sequence to provision the entire infrastructure.

Alternatively, to apply the layers one at a time, change from the `/workspace/current` directory into each of the layer subdirectories, in order, and run the following:

```shell
./apply.sh
```
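The layer-by-layer option can be scripted as a loop. This is a sketch, not the repository's `apply-all.sh`: it assumes each layer is a subdirectory of the workspace whose name starts with its BOM number (for example `105-...`, `200-...`, `250-...`) and contains an executable `apply.sh`, and it relies on the shell's sorted glob expansion to run the layers in numeric order.

```shell
# Sketch: run ./apply.sh in each numbered layer directory, in order,
# stopping at the first layer that fails.
# Assumption: layers are subdirectories named with a numeric prefix
# (e.g. 105-..., 200-..., 250-...) that each contain an apply.sh.
apply_layers() {
  workspace="${1:-/workspace/current}"
  for layer in "$workspace"/[0-9]*/; do
    # Skip anything that is not a layer with an executable apply.sh
    # (this also covers the unexpanded pattern when nothing matches).
    [ -x "${layer}apply.sh" ] || continue
    echo "==> Applying ${layer}"
    # Run in a subshell so the caller's working directory is unchanged.
    (cd "$layer" && ./apply.sh) || {
      echo "Layer ${layer} failed; stopping." >&2
      return 1
    }
  done
}
```

For example: `apply_layers /workspace/current`. Stopping at the first failure mirrors the requirement that the layers are applied in order, since later layers build on earlier ones.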

- Log into the OpenShift console for the cluster.

- You will see the first change as a purple banner describing what was installed.

- The next step is to validate whether everything has installed correctly. The link to the configured GitOps repository will be available from the application menu in the OpenShift console.

- Check that the payload folder has been created with the correct definitions for GitOps. Navigate to the `payload/2-services/namespace/turbonomic` folder and look at the content of the installation YAML files. You should see the Operator CR definitions.
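The same check can be made from a local clone of the GitOps repository. The `list_turbonomic_payload` helper below is hypothetical; it assumes you have already cloned the repository (its URL is the one shown in the OpenShift application menu) and simply lists the YAML files under the `payload/2-services/namespace/turbonomic` folder, failing if the folder is missing or contains no YAML files.

```shell
# Hypothetical helper: list the Turbonomic YAML definitions in a local
# clone of the GitOps repository.
# Usage: list_turbonomic_payload <path-to-gitops-repo-clone>
list_turbonomic_payload() {
  payload_dir="$1/payload/2-services/namespace/turbonomic"
  if [ ! -d "$payload_dir" ]; then
    echo "Missing folder: $payload_dir" >&2
    return 1
  fi
  # Collect .yaml/.yml files at any depth below the folder.
  files="$(find "$payload_dir" -type f \( -name '*.yaml' -o -name '*.yml' \) | sort)"
  if [ -z "$files" ]; then
    echo "No YAML files found under $payload_dir" >&2
    return 1
  fi
  printf '%s\n' "$files"
}
```

For example: `git clone <gitops-repo-url> gitops && list_turbonomic_payload gitops`, where `<gitops-repo-url>` is a placeholder for your repository's URL.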

- The final step is to open Argo CD (OpenShift GitOps) and check that it is correctly configured. Click the application menu (3x3 icon) in the header and select the Cluster Argo CD menu item.

- Complete the authorization with OpenShift, and then narrow the filters by selecting the `turbonomic` namespace.

- This will show you the GitOps dashboard of the software you have installed using GitOps techniques.

- Click on the `turbonomic-turboinst` tile.

- You will see all the microservices that Turbonomic installs, along with their enablement state.
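The microservice state shown in Argo CD can be cross-checked from a terminal. The sketch below assumes you are logged in with `oc` and that Turbonomic runs in the `turbonomic` namespace; it prints any pod whose STATUS column (the third column of `oc get pods --no-headers`) is neither `Running` nor `Completed`, so empty output means every pod has reached one of those two states. It does not inspect the READY column.

```shell
# Sketch: report Turbonomic pods whose STATUS is not Running or Completed.
# Assumes an active `oc` login and the default `turbonomic` namespace.
not_ready_turbonomic_pods() {
  namespace="${1:-turbonomic}"
  # Fail loudly if oc itself fails, instead of printing nothing.
  pods="$(oc get pods -n "$namespace" --no-headers)" || return 1
  # Columns of `oc get pods --no-headers`: NAME READY STATUS RESTARTS AGE
  printf '%s\n' "$pods" |
    awk 'NF >= 3 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}
```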

After installation, configure Turbonomic in your cluster with your downloaded license key.

- In the OpenShift console, navigate to Networking > Routes and change the project to `turbonomic`. You will see the route that launches the Turbonomic dashboard. Click on the Location URL to open Turbonomic.

- The first time you launch the dashboard, it will ask you to define an administrator password. Enter your new password and confirm it. Don't forget to store it in your password manager.

- Once the account has been created you will be greeted with the default screen.

- Make sure you have downloaded the license key following the instructions in the pre-requisites section at the front of this document.

- Click on Settings in the left menu, click on the License icon, and then click Import license.

- Drag your license key into the drop area; a screen will confirm that it has been added.

- Now we need to point Turbonomic at an environment for it to monitor.

- Click on the Add Targets button.

- Click on `Kubernetes-Turbonomic`, then click the Validate button to complete the validation.

- Then click on the On icon at the top of the left menu to see a monitor view of Turbonomic.
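The dashboard URL from the first configuration step can also be read with the `oc` CLI. This sketch assumes the dashboard route is the first (or only) route in the `turbonomic` namespace and that it is served over HTTPS; run `oc get routes -n turbonomic` to confirm if your installation exposes more than one route.

```shell
# Sketch: print the Turbonomic dashboard URL from the route in the namespace.
# Assumptions: an active `oc` login, the dashboard route is the first route
# in the namespace, and it is exposed over TLS (https).
turbonomic_url() {
  namespace="${1:-turbonomic}"
  host="$(oc get routes -n "$namespace" -o jsonpath='{.items[0].spec.host}')" || return 1
  if [ -z "$host" ]; then
    echo "No route found in namespace $namespace" >&2
    return 1
  fi
  echo "https://${host}"
}
```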

Please refer to the Troubleshooting Guide for uninstallation instructions and instructions to correct common issues.

This concludes the instructions for installing Turbonomic on AWS, Azure, and IBM Cloud.

This set of automation packages was generated using the open-source `iascable` tool. This tool enables a Bill of Materials YAML file to describe your software requirements. If you want upstream releases or versions, you can use `iascable` to generate a new Terraform module.

The `iascable` tool is targeted at advanced SRE developers. It requires deep knowledge of how the modules plug together into a customized architecture. This repository is a fully tested output from that tool, which makes it ready to consume for projects.

The following steps can be used to retrieve the server_url and cluster_login_token values from the console of an existing OpenShift cluster for use in the automation:

- Log into the OpenShift console.

- Click on the top-right menu and select "Copy login command". On the subsequent page, click on "Display Token".

- The API token is listed at the top of the page.

- The server URL is listed in the login command.

The following steps can be used to retrieve the server_url and cluster_login_token values of an existing OpenShift cluster from a command-line terminal for use in the automation:

- Open a terminal that is logged into the cluster.

- Print the server URL by running:

```shell
oc whoami --show-server
```

- Print the login token:

```shell
oc whoami --show-token
```
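The two commands can be combined to produce the entries for `credentials.properties` directly. The `print_cluster_credentials` helper below is hypothetical and assumes the file uses shell `export` statements; because later assignments win when the file is sourced, appending its output overrides any earlier placeholder values.

```shell
# Hypothetical helper: emit credentials.properties entries for the cluster
# that the current `oc` session is logged into.
print_cluster_credentials() {
  server="$(oc whoami --show-server)" || return 1
  token="$(oc whoami --show-token)" || return 1
  printf 'export TF_VAR_server_url="%s"\n' "$server"
  printf 'export TF_VAR_cluster_login_token="%s"\n' "$token"
}
```

For example: `print_cluster_credentials >> credentials.properties`.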