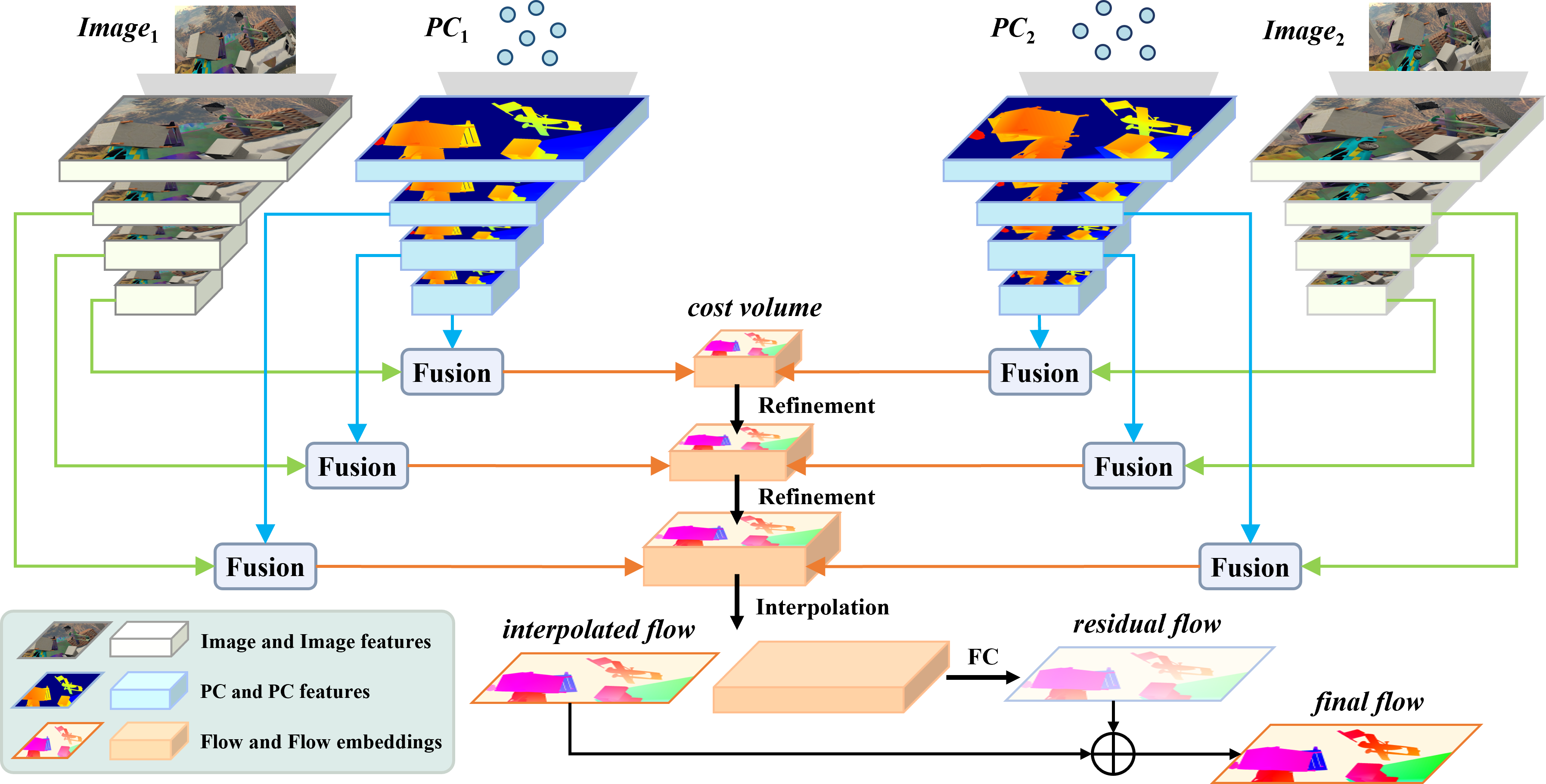

Point clouds are naturally sparse, while image pixels are dense. This inconsistency limits the fusion of features from both modalities for point-wise scene flow estimation. Previous methods rarely predict scene flow for the entire point cloud of a scene in a single inference pass, because of the memory inefficiency and the heavy overhead of the distance calculation and sorting involved in the commonly used farthest point sampling, KNN, and ball query algorithms for local feature aggregation.

To mitigate these issues in scene flow learning, we regularize raw points to a dense format by storing 3D coordinates in 2D grids. Unlike the sampling operation commonly used in existing works, the dense 2D representation

- preserves most points in the given scene,

- brings a significant boost in efficiency, and

- eliminates the density gap between points and pixels, allowing us to perform effective feature fusion.
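As a rough illustration of storing 3D coordinates in a 2D grid, the sketch below projects points through a pinhole camera model and writes each point's `(x, y, z)` into the cell it lands in. It is a minimal plain-Python sketch, not the repository's implementation: the function name `project_to_grid`, the intrinsics `fx, fy, cx, cy`, and the rule of keeping the closest point when two points share a cell are all assumptions made for this example.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def project_to_grid(
    points: Sequence[Point],
    height: int,
    width: int,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Tuple[List[List[Optional[Point]]], int]:
    """Store 3D points in a height x width grid via pinhole projection.

    Returns the grid (None marks an empty cell) and the number of points
    that were not stored: behind the camera, outside the image, or
    sharing a cell with another point.
    """
    grid: List[List[Optional[Point]]] = [[None] * width for _ in range(height)]
    dropped = 0
    for x, y, z in points:
        if z <= 0:  # behind the camera, cannot be projected
            dropped += 1
            continue
        u = math.floor(fx * x / z + cx)  # column index
        v = math.floor(fy * y / z + cy)  # row index
        if not (0 <= u < width and 0 <= v < height):
            dropped += 1
            continue
        cell = grid[v][u]
        if cell is None:
            grid[v][u] = (x, y, z)
        else:
            # Two points share a cell: one is lost. Keeping the closer
            # one is an assumption of this sketch.
            dropped += 1
            if z < cell[2]:
                grid[v][u] = (x, y, z)
    return grid, dropped
```

Once the coordinates live in an image-like grid, neighbors can be found by indexing adjacent cells, which avoids the distance computation and sorting that KNN or ball query would need.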

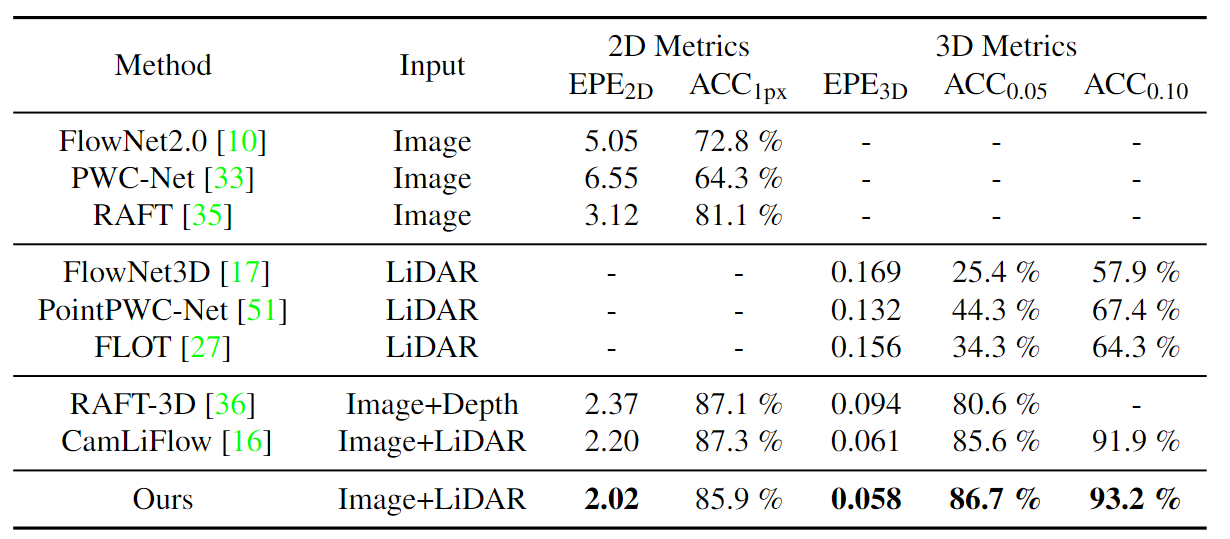

We also present a novel warping projection technique to alleviate the information loss that occurs when computing the cost volume, where multiple points may be mapped into the same grid cell during projection. Extensive experiments demonstrate the efficiency and effectiveness of our method, which outperforms prior art on the FlyingThings3D and KITTI datasets.
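The information loss described above can be seen in a toy example: once points are displaced by a flow estimate and projected again, several of them may fall into the same grid cell. The sketch below only counts such collisions; it does not implement the warping projection technique from the paper. The helper names and the pinhole intrinsics `fx, fy, cx, cy` are hypothetical, and all warped points are assumed to have positive depth.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def pixel_of(point: Point, fx: float, fy: float, cx: float, cy: float) -> Tuple[int, int]:
    """Pinhole projection of a 3D point (z > 0 assumed) to an integer cell."""
    x, y, z = point
    return (math.floor(fx * x / z + cx), math.floor(fy * y / z + cy))


def count_collisions(
    points: Sequence[Point],
    flows: Sequence[Point],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> int:
    """Count points that would be lost if warped points were re-projected
    naively, with each grid cell able to hold only one point."""
    warped = [
        (x + dx, y + dy, z + dz)
        for (x, y, z), (dx, dy, dz) in zip(points, flows)
    ]
    cells = Counter(pixel_of(p, fx, fy, cx, cy) for p in warped)
    # Every point beyond the first in a cell is a collision.
    return sum(n - 1 for n in cells.values())
```

For three points that start in different cells but are moved onto the same 3D location by their flow vectors, this function reports two collisions, which is the kind of loss the warping projection is meant to reduce.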

For more details, please refer to our paper on arXiv.

Create a PyTorch environment using conda:

git clone https://github.com/IRMVLab/DELFlow.git

cd DELFlow

conda env create -f env.yaml

conda activate delflow

Compile CUDA extensions:

cd models/csrc

python setup.py build_ext --inplace

cd ops_pytorch/fused_conv_select

python setup.py install

cd ops_pytorch/gpu_threenn_sample

python setup.py install

First, download and preprocess the FlyingThings3D subset dataset, then process it as follows (you may need to change the input_dir and output_dir):

python dataset/preprocess_data.py

For KITTI, first download the following parts:

- Main data: data_scene_flow.zip

- Calibration files: data_scene_flow_calib.zip

- Disparity estimation (from GA-Net): disp_ganet.zip

- Semantic segmentation (from DDR-Net): semantic_ddr.zip

Unzip them and organize the directory as follows:

dataset/kitti_scene_flow
├── testing
│   ├── calib_cam_to_cam
│   ├── calib_imu_to_velo
│   ├── calib_velo_to_cam
│   ├── disp_ganet
│   ├── flow_occ
│   ├── image_2
│   ├── image_3
│   └── semantic_ddr
└── training
    ├── calib_cam_to_cam
    ├── calib_imu_to_velo
    ├── calib_velo_to_cam
    ├── disp_ganet
    ├── disp_occ_0
    ├── disp_occ_1
    ├── flow_occ
    ├── image_2
    ├── image_3
    ├── obj_map
    └── semantic_ddr
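Before training, it may help to confirm that the unzipped files match the layout above. The following is a small sketch using only the Python standard library; the function name `missing_kitti_dirs` is hypothetical and is not part of the repository.

```python
from pathlib import Path
from typing import List, Union

# Subdirectories listed under both testing/ and training/ in the layout above.
COMMON = [
    "calib_cam_to_cam",
    "calib_imu_to_velo",
    "calib_velo_to_cam",
    "disp_ganet",
    "flow_occ",
    "image_2",
    "image_3",
    "semantic_ddr",
]
# Subdirectories listed only under training/.
TRAIN_ONLY = ["disp_occ_0", "disp_occ_1", "obj_map"]


def missing_kitti_dirs(root: Union[str, Path]) -> List[str]:
    """Return the expected subdirectories (relative to root) that do not exist."""
    root = Path(root)
    expected = [f"testing/{d}" for d in COMMON]
    expected += [f"training/{d}" for d in COMMON + TRAIN_ONLY]
    return [rel for rel in expected if not (root / rel).is_dir()]
```

Calling `missing_kitti_dirs("dataset/kitti_scene_flow")` from the repository root returns an empty list when every directory is in place, and otherwise lists the missing ones.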

Run the code using the following command:

python train.py config/$config_.yaml$

If you use this codebase or model in your research, please cite:

@inproceedings{peng2023delflow,
  title={Delflow: Dense efficient learning of scene flow for large-scale point clouds},
  author={Peng, Chensheng and Wang, Guangming and Lo, Xian Wan and Wu, Xinrui and Xu, Chenfeng and Tomizuka, Masayoshi and Zhan, Wei and Wang, Hesheng},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={16901--16910},
  year={2023}
}

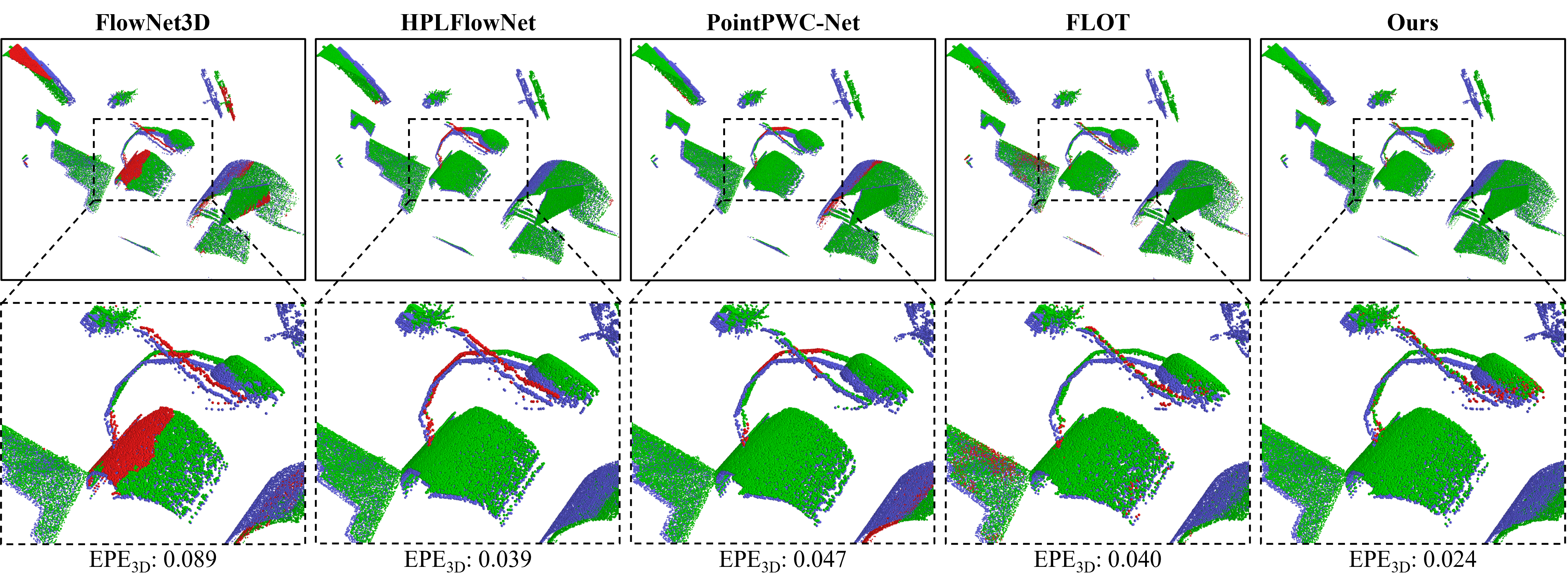

In this section, we present illustrative examples that demonstrate the effectiveness of our proposal.

This code benefits greatly from CamLiFlow. Thanks to its authors for making their code publicly available.