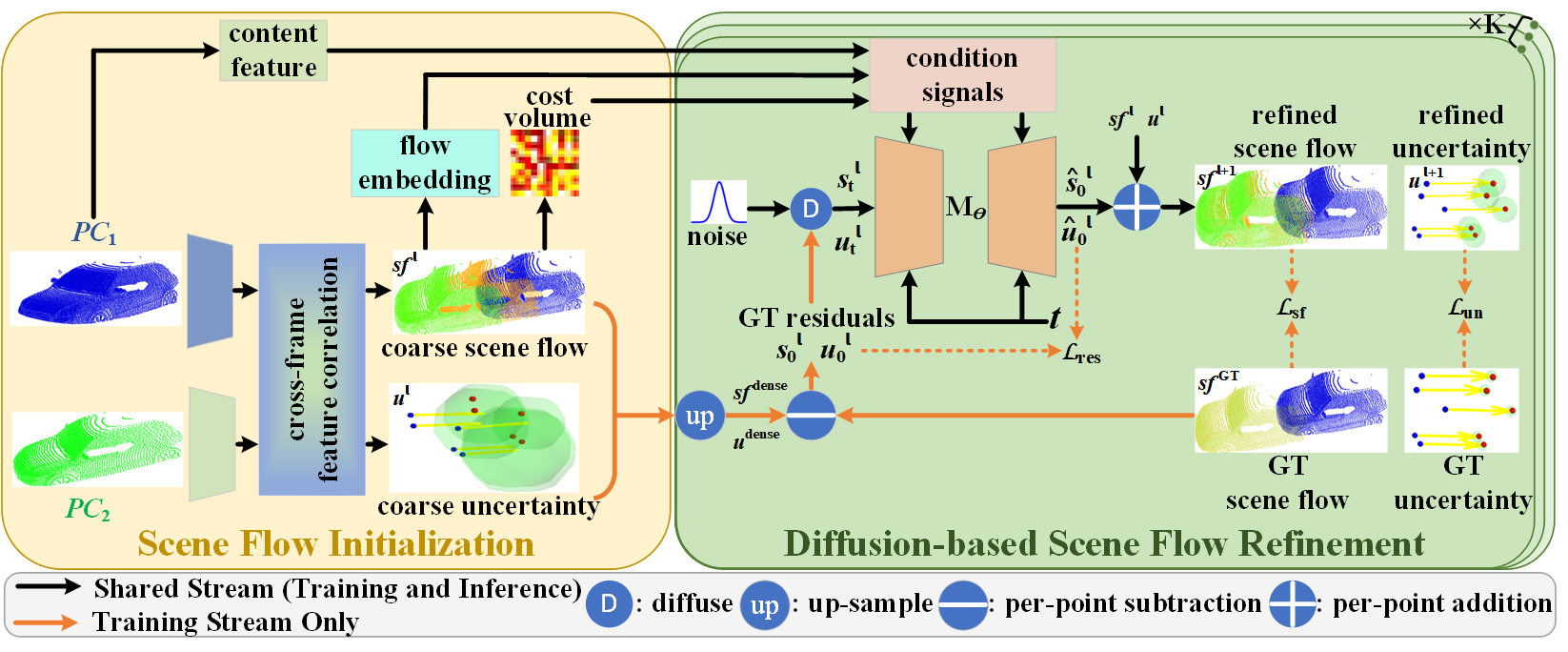

DifFlow3D: Toward Robust Uncertainty-Aware Scene Flow Estimation with Iterative Diffusion-Based Refinement

Jiuming Liu, Guangming Wang, Weicai Ye, Chaokang Jiang, Jinru Han, Zhe Liu, Guofeng Zhang, Dalong Du, Hesheng Wang#

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

- [19/Mar/2024] We have released our models and checkpoints based on MSBRN!

- [27/Feb/2024] Our paper has been accepted by CVPR 2024! 🥳🥳🥳

Our model is trained and tested under:

- Python 3.8.10

- NVIDIA GPU RTX3090 + CUDA CuDNN

- PyTorch (torch == 1.7.1+cu110)

- scipy

- tqdm

- sklearn

- numba

- cffi

- pypng

- pptk

- thop
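Before compiling the custom operators, it can help to confirm that the packages listed above are visible to the interpreter. The following is a minimal sketch, not part of the repository: the helper name `find_missing` is hypothetical, and the list uses import names rather than pip names (scikit-learn imports as `sklearn`, pypng as `png`).

```python
import importlib.util

# Import names for the packages listed above. Two differ from their
# pip names: scikit-learn imports as `sklearn`, pypng as `png`.
REQUIRED_MODULES = [
    "torch", "scipy", "tqdm", "sklearn", "numba",
    "cffi", "png", "pptk", "thop",
]


def find_missing(modules):
    """Return the module names that cannot be located in this environment."""
    missing = []
    for name in modules:
        # find_spec returns None when a top-level module is not installed.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All listed modules are importable.")
```

The check only verifies that each module can be found; it does not verify versions or that CUDA is usable.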

Please follow the instructions below to compile the furthest point sampling, grouping and gathering operations for PyTorch.

cd pointnet2

python setup.py install

cd ../

We adopt the same preprocessing steps as HPLFlowNet and PointPWC-Net.

- FlyingThings3D:

Download and unzip the "Disparity", "Disparity Occlusions", "Disparity change", "Optical flow", "Flow Occlusions" for DispNet/FlowNet2.0 dataset subsets from the FlyingThings3D website (we used the paths from this file, now they added torrent downloads). They will be unzipped into the same directory, RAW_DATA_PATH. Then run the following script for 3D reconstruction:

python3 data_preprocess/process_flyingthings3d_subset.py --raw_data_path RAW_DATA_PATH --save_path SAVE_PATH/FlyingThings3D_subset_processed_35m --only_save_near_pts

- KITTI Scene Flow 2015:

Download and unzip KITTI Scene Flow Evaluation 2015 to the directory RAW_DATA_PATH. Run the following script for 3D reconstruction:

python3 data_preprocess/process_kitti.py RAW_DATA_PATH SAVE_PATH/KITTI_processed_occ_final

Before evaluation, set data_root in the configuration file to the SAVE_PATH used in the data preprocessing step.
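The data_root value can simply be edited by hand. As an illustration only, the sketch below rewrites it programmatically, assuming the configuration file stores it as a top-level `data_root: ...` line (the layout of the actual config files is not shown here). The function name `set_data_root` and the example keys `gpu` and `batch_size` are hypothetical.

```python
import re


def set_data_root(config_text, save_path):
    """Return config_text with the value of the top-level `data_root` key replaced.

    Assumes a line of the form `data_root: <value>` starting at column 0.
    Anything else on that line (such as a trailing comment) is dropped.
    """
    pattern = re.compile(r"^data_root\s*:.*$", flags=re.MULTILINE)
    if not pattern.search(config_text):
        raise KeyError("no top-level 'data_root' key found")
    # A function replacement keeps backslashes in save_path literal.
    return pattern.sub(lambda _m: f"data_root: {save_path}", config_text, count=1)


# Hypothetical config content, used only to demonstrate the helper.
example = "gpu: 0\ndata_root: /old/path\nbatch_size: 1\n"
print(set_data_root(example, "/data/SAVE_PATH"))
```

To apply it to a real file, read the file's text, pass it through `set_data_root`, and write the result back. Working on plain text avoids a YAML dependency and leaves all other lines untouched.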

We provide pretrained models in pretrain_weights.

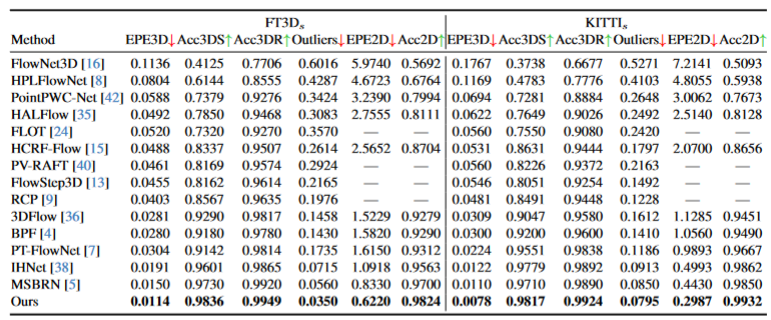

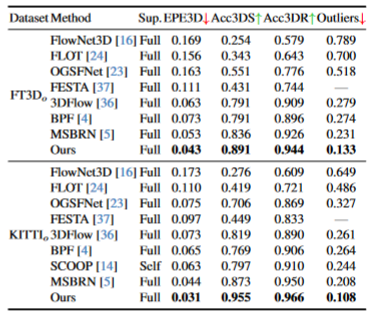

- model_difflow_355_0.0114.pth: checkpoint for flt3d_s and KITTI_s.

- model_difflow_occ_327_0.0428.pth: checkpoint for flt3d_o and KITTI_o.

Please run the following instructions for evaluation.

- For flt3d_s and KITTI_s

python3 evaluate.py config_evaluate.yaml

- For flt3d_o and KITTI_o

python3 evaluate_occ.py config_evaluate_occ.yaml

If you want to train from scratch, first set data_root in the configuration file to the SAVE_PATH used in the data preprocessing step. Then execute the following instructions.

- For flt3d_s and KITTI_s

python3 train_difflow.py config_train.yaml

- For flt3d_o and KITTI_o

python3 train_difflow_occ.py config_train_occ.yaml
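The evaluation and training commands above differ only in the script and configuration file chosen for each setting. The sketch below collects that mapping in one place. The script and config names are copied from the instructions above, while `COMMANDS`, `build_command`, and the short setting labels `"s"` (flt3d_s and KITTI_s) and `"o"` (flt3d_o and KITTI_o) are hypothetical conveniences, not part of the repository.

```python
# Script and config names come from the instructions above;
# the dictionary and function names are illustrative only.
COMMANDS = {
    ("evaluate", "s"): ["python3", "evaluate.py", "config_evaluate.yaml"],
    ("evaluate", "o"): ["python3", "evaluate_occ.py", "config_evaluate_occ.yaml"],
    ("train", "s"): ["python3", "train_difflow.py", "config_train.yaml"],
    ("train", "o"): ["python3", "train_difflow_occ.py", "config_train_occ.yaml"],
}


def build_command(mode, setting):
    """Return the argument list for a mode ('evaluate' or 'train') and setting ('s' or 'o')."""
    try:
        # Return a copy so callers cannot mutate the table.
        return list(COMMANDS[(mode, setting)])
    except KeyError:
        raise ValueError(f"unknown mode/setting: {mode!r}, {setting!r}") from None


print(" ".join(build_command("evaluate", "o")))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root to launch the run.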

@inproceedings{liu2024difflow3d,

title={DifFlow3D: Toward Robust Uncertainty-Aware Scene Flow Estimation with Iterative Diffusion-Based Refinement},

author={Liu, Jiuming and Wang, Guangming and Ye, Weicai and Jiang, Chaokang and Han, Jinru and Liu, Zhe and Zhang, Guofeng and Du, Dalong and Wang, Hesheng},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={15109--15119},

year={2024}

}

We thank the following open-source project for its help with the implementation: