Self-supervised object segmentation via multi-object tracking and mask propagation based on video object segmentation.

This is the object segmentation code for the following paper. arXiv, Project

The dataset we collected via robot interaction can be downloaded from here.

The code is released under the MIT License (refer to the LICENSE file for details).

If you find this package useful in your research, please consider citing:

@article{lu2023self,
  title={Self-Supervised Unseen Object Instance Segmentation via Long-Term Robot Interaction},
  author={Lu, Yangxiao and Khargonkar, Ninad and Xu, Zesheng and Averill, Charles and Palanisamy, Kamalesh and Hang, Kaiyu and Guo, Yunhui and Ruozzi, Nicholas and Xiang, Yu},
  journal={arXiv preprint arXiv:2302.03793},
  year={2023}
}

- Set up XMem for video object segmentation. Follow the instructions in the XMem repository. After that, create a symbolic link to XMem in the root folder of this repo.

- Set up MMFlow for optical flow estimation. Follow the MMFlow installation instructions.

- Install the Python packages:

pip install -r requirements.txt

- Download our sample dataset for testing (data.zip) from here, and unzip it into the root folder.

- Run the following Python script:

python object_tracking.py
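If the script fails on startup, it may help to confirm that the setup steps above left the expected entries in the root folder. The following is a minimal sketch and not part of the released code: the helper name `missing_prerequisites` is hypothetical, and it assumes that the symbolic link is named `XMem` and that data.zip unpacks into a folder named `data`.

```python
from pathlib import Path

# Entries the setup steps are assumed to leave in the repo root.
# The names "XMem" and "data" are assumptions based on the steps above.
EXPECTED_ENTRIES = {
    "XMem": "symbolic link to your XMem checkout",
    "data": "unzipped sample dataset",
}


def missing_prerequisites(repo_root="."):
    """Return the names of expected entries that are absent from repo_root.

    Path.exists() follows symbolic links, so a dangling XMem link is
    reported as missing as well.
    """
    root = Path(repo_root)
    return [name for name in EXPECTED_ENTRIES if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_prerequisites(".")
    if missing:
        for name in missing:
            print(f"Missing {name}: {EXPECTED_ENTRIES[name]}")
    else:
        print("Setup looks complete.")
```

Run it from the root folder of this repo. It only checks that the entries exist and does not verify that XMem or MMFlow are installed correctly.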

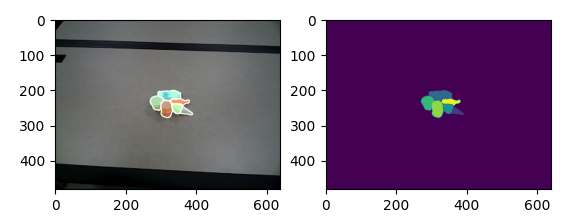

The code will run over the image sequences under the data folder, and generate the final segmentation masks of all the images. Here is one example output: