In NeurIPS 2020 (Spotlight) [Project Website] [Project Video]

Shikhar Bahl, Mustafa Mukadam, Abhinav Gupta, Deepak Pathak

Carnegie Mellon University & Facebook AI Research

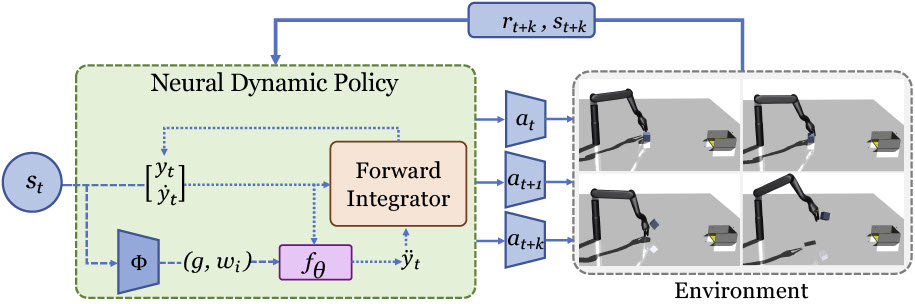

This is a PyTorch-based implementation of our NeurIPS 2020 paper on Neural Dynamic Policies for end-to-end sensorimotor learning. In this work, we embed dynamics structure into deep neural network-based policies by reparameterizing action spaces with differential equations. We propose Neural Dynamic Policies (NDPs), which make predictions in trajectory distribution space, as opposed to prior policy learning methods where actions represent the raw control space. The embedded structure allows us to perform end-to-end policy learning under both reinforcement and imitation learning setups.

If you find this work useful in your research, please cite:

    @inproceedings{bahl2020neural,
      Author = {Bahl, Shikhar and Mukadam, Mustafa and Gupta, Abhinav and Pathak, Deepak},
      Title = {Neural Dynamic Policies for End-to-End Sensorimotor Learning},
      Booktitle = {NeurIPS},
      Year = {2020}
    }
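To make the idea of reparameterizing actions with a differential equation more concrete, here is a minimal sketch of a one-dimensional dynamic movement primitive (DMP) rollout in plain Python. It is not the code from this repository: the function name `dmp_rollout`, the gains `alpha`, `beta`, `a_x`, the basis placement, and the Euler integrator are all assumptions chosen for illustration. In an NDP, quantities such as `weights` and `goal` would be produced by a network rather than passed in by hand.

```python
import math


def dmp_rollout(y0, goal, weights, n_steps=100, dt=0.01,
                alpha=25.0, beta=6.25, a_x=1.0):
    """Integrate a 1-D DMP with Euler steps and return the positions.

    Hypothetical helper: names and default values are illustrative
    assumptions, not the repository's API.
    """
    n_basis = len(weights)
    # Radial basis centers placed along the decay of the phase variable x.
    centers = [math.exp(-a_x * i / max(n_basis - 1, 1)) for i in range(n_basis)]
    # Heuristic widths so that neighbouring basis functions overlap.
    widths = [n_basis ** 1.5 / c / a_x for c in centers]

    y, dy, x = y0, 0.0, 1.0
    trajectory = [y]
    for _ in range(n_steps):
        psi = [math.exp(-h * (x - c) ** 2) for h, c in zip(widths, centers)]
        psi_sum = sum(psi) + 1e-10  # avoid division by zero
        # Learned forcing term, gated by the phase x and scaled by (goal - y0).
        forcing = (sum(p * w for p, w in zip(psi, weights)) / psi_sum
                   * x * (goal - y0))
        # Second-order system: a spring-damper pulling y toward the goal.
        ddy = alpha * (beta * (goal - y) - dy) + forcing
        dy += ddy * dt
        y += dy * dt
        # Canonical system: the phase decays from 1 toward 0.
        x += -a_x * x * dt
        trajectory.append(y)
    return trajectory


# With zero weights the forcing term vanishes and y settles at the goal.
plain = dmp_rollout(y0=0.0, goal=1.0, weights=[0.0] * 5)
# Non-zero weights reshape the path taken by the same dynamical system.
shaped = dmp_rollout(y0=0.0, goal=1.0, weights=[50.0] * 5)
```

The point of the sketch is that a handful of parameters determines a whole trajectory, so a policy that outputs those parameters is predicting in trajectory space rather than emitting one raw control at a time.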

- This code is based on PyTorch and needs MuJoCo 1.5 to run. To install and set up the code, run the following commands:

      # create directory for data and add dependencies
      cd neural-dynamic-policies; mkdir data/
      git clone https://github.com/rll/rllab.git
      git clone https://github.com/openai/baselines.git

      # create virtual env
      conda create --name ndp python=3.5
      source activate ndp

      # install requirements
      pip install -r requirements.txt

      # OR try
      conda env create -f ndp.yaml

- Training imitation learning:

      cd neural-dynamic-policies
      # name of the experiment
      python main_il.py --name NAME

- Training RL: run the script `run_rl.sh`. `ENV_NAME` is the environment (could be `throw`, `pick`, `push`, `soccer`, `faucet`). `ALGO-TYPE` is the algorithm (`dmp` for NDPs, `ppo` for PPO [Schulman et al., 2017], and `ppo-multi` for the multistep actor-critic architecture we present in our paper).

      sh run_rl.sh ENV_NAME ALGO-TYPE EXP_ID SEED

- In order to visualize trained models/policies, use the exact same arguments as used for training but call `vis_policy.sh`:

      sh vis_policy.sh ENV_NAME ALGO-TYPE EXP_ID SEED

We use the PPO infrastructure from: https://github.com/ikostrikov/pytorch-a2c-ppo-acktr-gail. We use environment code from: https://github.com/dibyaghosh/dnc/tree/master/dnc/envs, https://github.com/rlworkgroup/metaworld, https://github.com/vitchyr/multiworld. We use PyTorch and RL utility functions from: https://github.com/vitchyr/rlkit. We use the DMP skeleton code from https://github.com/abr-ijs/imednet, https://github.com/abr-ijs/digit_generator. We also use https://github.com/rll/rllab.git and https://github.com/openai/baselines.git.
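
Since `run_rl.sh` and `vis_policy.sh` take the same four positional arguments, it can be convenient to build both commands from one place. The helper below is a hypothetical sketch, not part of this repository: `build_command` is an invented name, and the allowed values are only the ones listed in this README, so the scripts themselves may accept others. It avoids f-strings so that it also runs under the Python 3.5 environment created above.

```python
# Values listed in this README for ENV_NAME and ALGO-TYPE (possibly not exhaustive).
ENV_NAMES = ("throw", "pick", "push", "soccer", "faucet")
ALGO_TYPES = ("dmp", "ppo", "ppo-multi")
SCRIPTS = ("run_rl.sh", "vis_policy.sh")


def build_command(script, env_name, algo_type, exp_id, seed):
    """Return the argument list for one of the two shell scripts.

    Hypothetical helper: it only checks the arguments against the values
    named in the README and does not run anything itself.
    """
    if script not in SCRIPTS:
        raise ValueError("unknown script: {}".format(script))
    if env_name not in ENV_NAMES:
        raise ValueError("unknown ENV_NAME: {}".format(env_name))
    if algo_type not in ALGO_TYPES:
        raise ValueError("unknown ALGO-TYPE: {}".format(algo_type))
    # EXP_ID and SEED are passed through unchanged as strings.
    return ["sh", script, env_name, algo_type, str(exp_id), str(seed)]


train_cmd = build_command("run_rl.sh", "throw", "dmp", "exp1", 0)
vis_cmd = build_command("vis_policy.sh", "throw", "dmp", "exp1", 0)
print(" ".join(train_cmd))
print(" ".join(vis_cmd))
```

Either list can be handed to `subprocess.run(cmd, check=True)` from the repository root, which keeps the training and visualization calls in sync as the text above requires.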