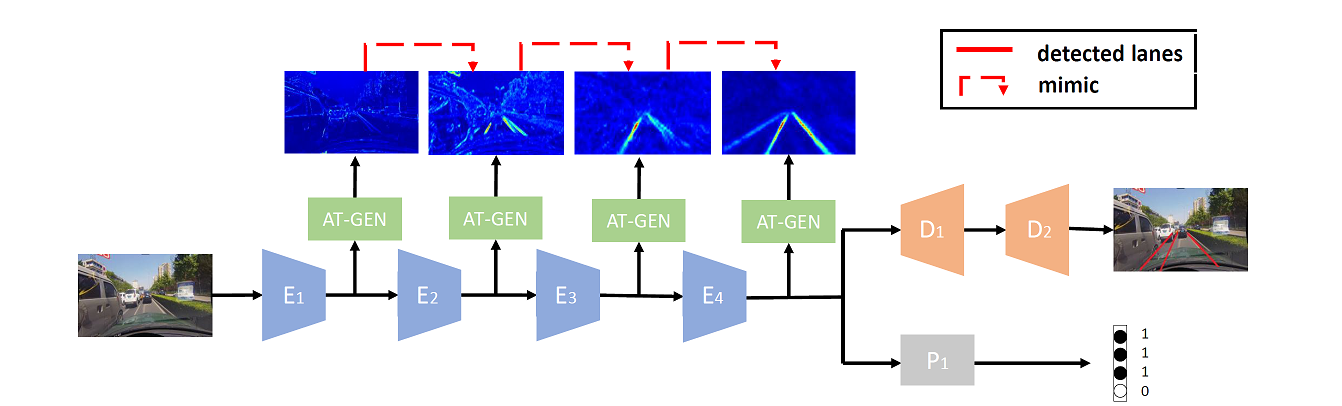

Pytorch implementation of "Learning Lightweight Lane Detection CNNs by Self Attention Distillation (ICCV 2019)"

- The BDD100K dataloader has been released; all three datasets (CULane, TuSimple, BDD100K) are now supported.
- The ENET_SAD model has been updated to be closer to the original implementation (the generated model is the same as before).
- Fixed an issue in the IoU calculation by taking the softmax excluding the background (BG) class.
- The evaluation code for the CULane and TuSimple datasets has been updated.

You can find the previous version here.

Demo: trained on the CULane dataset and tested on `\driver_193_90frame\06051123_0635.MP4`.

Runtime on an RTX 2080 Ti:

- gpu_runtime: 0.016253232955932617 s (61 FPS)
- total_runtime: 0.017553091049194336 s (56 FPS)
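The FPS figures match the reciprocal of each per-frame runtime truncated to an integer (rounding would give 62 and 57 instead). A small sketch of that conversion, assuming this is how the numbers were derived; `runtime_to_fps` is a hypothetical helper, not part of this repo:

```python
import math


def runtime_to_fps(runtime_s: float) -> int:
    """Convert a per-frame runtime in seconds to whole frames per second.

    Assumption: FPS = floor(1 / runtime), which reproduces the values above.
    """
    if runtime_s <= 0:
        raise ValueError("runtime must be positive")
    return math.floor(1.0 / runtime_s)


gpu_fps = runtime_to_fps(0.016253232955932617)    # GPU-only time per frame
total_fps = runtime_to_fps(0.017553091049194336)  # end-to-end time per frame
print(gpu_fps, total_fps)  # 61 56
```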

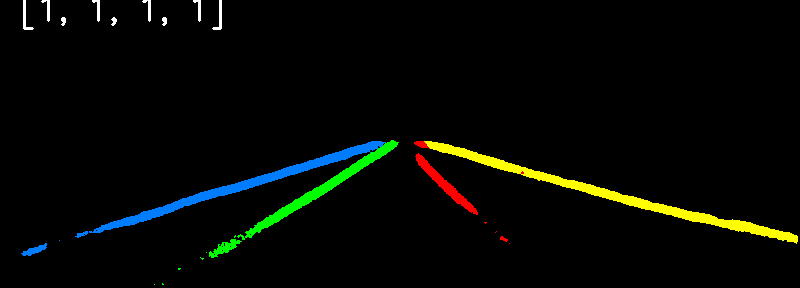

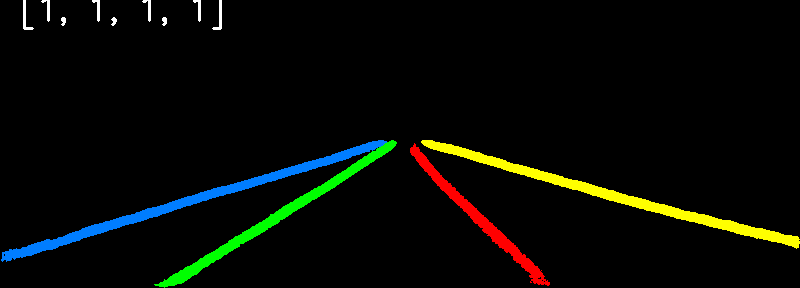

| Category | 40k episode (before SAD) | 60k episode (after SAD) |
|---|---|---|
| Image | | |
| Lane | | |

Requirements:

- PyTorch
- TensorFlow (for TensorBoard)
- OpenCV

CULane dataset path:

```
CULane_path
├─ driver_100_30frame
├─ driver_161_90frame
├─ driver_182_30frame
├─ driver_193_90frame
├─ driver_23_30frame
├─ driver_37_30frame
├─ laneseg_label_w16
├─ laneseg_label_w16_test
└─ list
```

TuSimple dataset path:

```
Tusimple_path
├─ clips
├─ label_data_0313.json
├─ label_data_0531.json
├─ label_data_0601.json
└─ test_label.json
```

For BDD100K, download the modified labels and lists generated with the author's method (train, val, and list), and place the BDD100K dataset in the desired folder.

BDD100K dataset path:

```
BDD100K_path
├─ images
│  ├─ 10k
│  └─ 100k
├─ list
│  ├─ test_gt_bdd.txt
│  ├─ train_gt_bdd.txt
│  └─ val_gt_bdd.txt
├─ train_label
│  └─ final_train
└─ val_label
   └─ final_val
```
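Before pointing `config.py` at a dataset, it can help to confirm that the folder matches the layouts above. A minimal sketch using only the standard library; `missing_entries` and `CULANE_EXPECTED` are hypothetical names (not part of this repo), and the expected entries are copied from the CULane tree:

```python
from pathlib import Path

# Top-level entries expected under CULane_path, copied from the tree above.
CULANE_EXPECTED = [
    "driver_100_30frame", "driver_161_90frame", "driver_182_30frame",
    "driver_193_90frame", "driver_23_30frame", "driver_37_30frame",
    "laneseg_label_w16", "laneseg_label_w16_test", "list",
]


def missing_entries(root, expected):
    """Return the names in `expected` that do not exist under `root`.

    Nested entries such as "images/10k" (BDD100K) also work, because
    each name is joined onto `root` as a relative path.
    """
    root = Path(root)
    return [name for name in expected if not (root / name).exists()]


# Hypothetical usage with the path from config.py:
# print(missing_entries("/workspace/CULANE_DATASET", CULANE_EXPECTED))
```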

Set the correct dataset paths in `./config.py`:

```python
Dataset_Path = dict(
    CULane="/workspace/CULANE_DATASET",
    Tusimple="/workspace/TUSIMPLE_DATASET",
    BDD100K="/workspace/BDD100K_DATASET",
    VPGNet="/workspace/VPGNet_DATASET"
)
```

To train, first change some hyperparameters in `./experiments/*/cfg.json`:

```
{
    "model": "enet_sad",           <- "scnn" or "enet_sad"
    "dataset": {
        "dataset_name": "CULane",  <- "CULane" or "Tusimple"
        "batch_size": 12,
        "resize_shape": [800, 288] <- [800, 288] with CULane, [640, 368] with Tusimple, and [640, 360] with BDD100K
                                      This size is defined in the ENet-SAD paper; any size is fine if it is a multiple of 8.
    },
    ...
}
```
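The note on `resize_shape` can be checked before training starts. The helper below is a hypothetical sketch, not code from this repo: `check_resize_shape` only verifies that both dimensions are positive multiples of 8 (presumably required because the ENet encoder downsamples its input by a factor of 8). The order of the two dimensions does not matter for this check, and the sample values are the per-dataset sizes listed above.

```python
def check_resize_shape(shape, factor=8):
    """Return True if shape has two positive integer dimensions divisible by factor."""
    return len(shape) == 2 and all(
        isinstance(d, int) and d > 0 and d % factor == 0 for d in shape
    )


# Sizes recommended above for each dataset.
RECOMMENDED = {
    "CULane": [800, 288],
    "Tusimple": [640, 368],
    "BDD100K": [640, 360],
}

for name, shape in RECOMMENDED.items():
    print(name, shape, check_resize_shape(shape))  # True for all three
```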

Then, start training with `train.py`:

```shell
python train.py --exp_dir ./experiments/exp1
```
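`--exp_dir` points at a folder containing `cfg.json`, so one way to set up a new experiment is to copy an existing config and override a few fields. A minimal standard-library sketch; `make_experiment` is a hypothetical helper, and it assumes the real `cfg.json` is plain JSON (the `<-` notes above are annotations, not part of the file). Keys it does not touch, including everything elided as `...`, are copied unchanged.

```python
import json
from pathlib import Path


def make_experiment(base_cfg, new_exp_dir, model=None, dataset_overrides=None):
    """Copy base_cfg (a cfg.json path) into new_exp_dir, applying overrides.

    Returns the path of the written cfg.json.
    """
    with open(base_cfg) as f:
        cfg = json.load(f)
    if model is not None:
        cfg["model"] = model  # "scnn" or "enet_sad"
    if dataset_overrides:
        cfg.setdefault("dataset", {}).update(dataset_overrides)
    out_dir = Path(new_exp_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    out_path = out_dir / "cfg.json"
    with open(out_path, "w") as f:
        json.dump(cfg, f, indent=4)
    return out_path


# Example (illustrative paths):
# make_experiment("./experiments/exp1/cfg.json", "./experiments/exp2",
#                 model="scnn",
#                 dataset_overrides={"dataset_name": "Tusimple",
#                                    "resize_shape": [640, 368]})
# then: python train.py --exp_dir ./experiments/exp2
```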

If you use the ENet-SAD model in your own code, you can initialize `ENet_SAD` with the following parameters:

```python
import torch.nn as nn


class ENet_SAD(nn.Module):
    """
    Generate the ENet-SAD model.

    Keyword arguments:
    - input_size (tuple): Size of the input image to segment.
    - encoder_relu (bool, optional): When ``True`` ReLU is used as the
      activation function in the encoder blocks/layers; otherwise, PReLU
      is used. Default: False.
    - decoder_relu (bool, optional): When ``True`` ReLU is used as the
      activation function in the decoder blocks/layers; otherwise, PReLU
      is used. Default: True.
    - sad (bool, optional): When ``True``, SAD is added to the model;
      otherwise, SAD is removed. Default: False.
    - weight_share (bool, optional): When ``True``, weights are shared
      with encoder blocks (E2, E3 and E4); otherwise, encoders will each
      learn with their own weights. Default: True.
    """
    # Layers and forward() are omitted here; see the model definition in this repo.
```

Will continue to be updated.

TuSimple dataset:

| Category | ENet-SAD PyTorch | ENet-SAD paper |
|---|---|---|
| Accuracy | 93.93% | 96.64% |
| FP | 0.2279 | 0.0602 |
| FN | 0.0838 | 0.0205 |
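For reference, the TuSimple benchmark states its metrics as accuracy = Σ C_clip / Σ S_clip (correctly predicted points over ground-truth points), FP = F_pred / N_pred (wrongly predicted lanes over predicted lanes), and FN = M_pred / N_gt (missed lanes over ground-truth lanes). The sketch below only applies these formulas to precomputed counts; the point-matching step is left out, and `LaneCounts` and `tusimple_metrics` are hypothetical names, not this repo's evaluation code.

```python
from dataclasses import dataclass


@dataclass
class LaneCounts:
    correct_points: int  # C: predicted points judged correct
    gt_points: int       # S: ground-truth points
    wrong_lanes: int     # F_pred: predicted lanes with no matching GT lane
    pred_lanes: int      # N_pred: all predicted lanes
    missed_lanes: int    # M_pred: GT lanes with no matching prediction
    gt_lanes: int        # N_gt: all ground-truth lanes


def tusimple_metrics(clips):
    """Return (accuracy, FP, FN) aggregated over a list of LaneCounts."""
    c = sum(x.correct_points for x in clips)
    s = sum(x.gt_points for x in clips)
    f = sum(x.wrong_lanes for x in clips)
    n_pred = sum(x.pred_lanes for x in clips)
    m = sum(x.missed_lanes for x in clips)
    n_gt = sum(x.gt_lanes for x in clips)
    accuracy = c / s if s else 0.0
    fp = f / n_pred if n_pred else 0.0
    fn = m / n_gt if n_gt else 0.0
    return accuracy, fp, fn


# Two made-up clips, only to show the arithmetic.
print(tusimple_metrics([LaneCounts(90, 100, 1, 4, 0, 4),
                        LaneCounts(45, 50, 0, 3, 1, 4)]))
```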

CULane dataset (F1-measure; FP measure for Crossroad):

| Category | ENet-SAD PyTorch | ENet-SAD paper |
|---|---|---|
| Normal | 86.8 | 90.1 |
| Crowded | 65.3 | 68.8 |
| Night | 54.0 | 66.0 |
| No line | 37.3 | 41.6 |
| Shadow | 52.4 | 65.9 |
| Arrow | 78.2 | 84.0 |
| Dazzle light | 51.0 | 60.2 |
| Curve | 58.6 | 65.7 |
| Crossroad | 2278 | 1995 |
| Total | 65.5 | 70.8 |
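The CULane figures are F1-measures in percent (Crossroad reports false positives instead). F1 combines precision and recall over matched lanes; in the usual CULane protocol, a predicted lane counts as a true positive when its IoU with a ground-truth lane (both drawn as 30-pixel-wide lines) exceeds 0.5. A minimal sketch of the F1 arithmetic from TP/FP/FN counts, with the lane-matching step omitted; `f1_score` is a hypothetical helper, not this repo's evaluation code.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R), with P = TP / (TP + FP) and R = TP / (TP + FN).

    Equivalent closed form: 2TP / (2TP + FP + FN). Returns 0.0 when TP is 0.
    """
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up counts: P = R = 0.8, so F1 = 0.8.
print(round(100 * f1_score(tp=80, fp=20, fn=20), 1))  # 80.0
```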

BDD100K dataset:

| Category | ENet-SAD PyTorch | ENet-SAD paper |
|---|---|---|
| Accuracy | 37.09% | 36.56% |
| IoU | 15.14 | 16.02 |
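The BDD100K results include an IoU. As a reminder of the definition, the IoU between a predicted mask P and a ground-truth mask G is |P ∩ G| / |P ∪ G|. The NumPy sketch below computes it for binary lane masks; it is illustrative only and does not reproduce this repo's evaluation code (for example, how the softmax output is turned into a mask or how the background class is excluded). `binary_iou` is a hypothetical name, and returning 0.0 when both masks are empty is a convention chosen here.

```python
import numpy as np


def binary_iou(pred, gt):
    """IoU of two same-shape binary masks; 0.0 if both masks are empty."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(pred, gt).sum() / union)


pred = np.array([[0, 1, 1],
                 [0, 1, 0]])
gt = np.array([[0, 1, 0],
               [0, 1, 1]])
print(binary_iou(pred, gt))  # 0.5 (2 shared pixels / 4 in the union)
```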

This repo is built upon the official ENet-SAD implementation and is based on PyTorch-ENet and SCNN_Pytorch.