Why can sparse loss functions supervise the generation of dense depth maps?

bcy1252 opened this issue · 11 comments

Thank you for your excellent work, network design gives me a lot of inspiration.

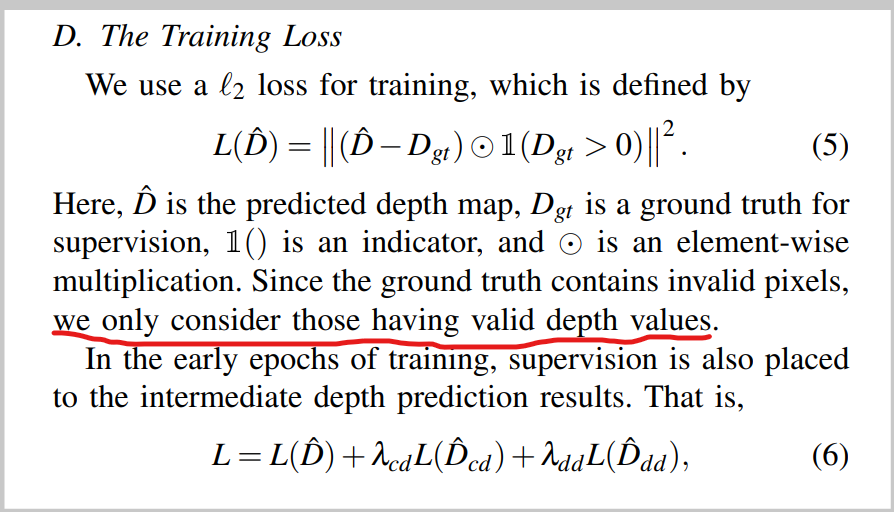

But there's one thing I never understood. I noticed that the training loss function in the paper only focuses on pixels with valid depth(sparse),so why can networks generate dense depth maps? How to ensure that pixels are accurate without supervision?

Thanks for your interest! For one specific (rgb, sd, gt) tuple, the supervision is semi-dense and there're many pixels where supervision doesn't exist. However, for the whole dataset, on most of the pixels in the 2D-image coordinate the network receives supervision from different data samples. And we should also notice some pixels where there're truly no supervision, like those in the top of the full image (out of the FoV of the LiDAR).

However, for the whole dataset, on most of the pixels in the 2D-image coordinate the network receives supervision from different data samples

I am so happy to receive your prompt reply!

I still don't quite understand, as shown in the picture below, how to ensure the accuracy of this depth in the absence of supervision(no GT)? Could you be more detailed? Thank you!

One of my interpretations is that the network randomly generates dense depth maps and then constrains only the supervised parts, and when the supervised parts are accurate, the rest(no GT) should also be accurate (possibly taking advantage of some hidden properties of the image). I don't know if I'm right.

However, for the whole dataset, on most of the pixels in the 2D-image coordinate the network receives supervision from different data samples

I am so happy to receive your prompt reply! I still don't quite understand, as shown in the picture below, how to ensure the accuracy of this depth in the absence of supervision(no GT)? Could you be more detailed? Thank you!

In this case, we cannot guaranty the depth values there is accurate as there're no nearby input LiDAR points. But it does not mean that it owes to the lack of supervision that we can't get reliable depth here. The accuracy of depth values of a specific predicted depth is more related to the input sparse depth. It has very minor relationship with its supervision. However, it is related to the overall distribution of supervision data in the whole dataset.

Hi, haven't seen you for a while.

I'm still interested in this problem, and this Friday, I trained a simple deep completion model using sparse loss functions (paper).

But here's what you get.

As shown in the figure below, no reliable depth is generated in the unsupervised part.

Why is that?

Ask for help,thank you!!!

(GT at the top and results at the bottom)

Hi, haven't seen you for a while. I'm still interested in this problem, and this Friday, I trained a simple deep completion model using sparse loss functions (paper). But here's what you get. As shown in the figure below, no reliable depth is generated in the unsupervised part. Why is that? Ask for help,thank you!!!

(GT at the top and results at the bottom)

How do you get ground truth data in the object detection dataset? In supervised depth completion, models can predict relatively reliable depth maps because the groundtruth data is multiple times denser than the inputs. In unsupervised depth completion, addition loss items such as photometric loss (minimum the re-projection error between sequential frames) and smoothness loss is required. If you take the inputs as the groundtruth data for training a single-frame depth completion model, I think the model will probably not work.

I projected a depth map (0 < value < 80) through lidar points, and then concated it with RGB and sent it directly to the network for learning. I don't know much about deep completion, and there may be some common sense mistakes.So, how should I make it work? I understand. After downsampling the original depth map(GT)(e.g 12w point decreased 4w point), concated it with RGB and sending it to the network for learning, is that right?

I have not conducted experiments under a similar setting. But I guess there could be two main reasons: (1) The groundtruth data you used is not dense enough to support the convergence of a single-image depth completion model. (2) Not all pixels are reliable. The noisy pixels should be filtered out before adapting to downstream tasks. You could refer to a previous issue about this.

You could refer to a previous issue about this.

hello, I read your paper found kitti depth complete task raw datasets and 3d object datasets have large differents.

raw datasets have 86k image and temporal(5% sparse depth and 16% depth GT). 3d object datasets have 7.8k and non-temporal. At this point, I understand your first reply.

Now,I'd like to share with you my latest progress. I seem to have made the network work through sampling and data enhancement in some ways, as shown in the picture, the car's 3D points are starting to dense.But there is still some noise (this seems to be the general noise of depth completion?)..I am interested in confidence map solve noise (I want to try some simple methods first), can you recommend this article, there is a code is best > < thank you!!!

You could refer to [1]【PNConv-CNN】CVPR2020 Uncertainty Aware CNNs for Depth Completion Uncertainty from Beginning to End. [2] 【PwP】ICCV2019 Depth Completion From Sparse LiDAR Data With Depth-Normal Constraints. for better understanding of predicting a confidence map.