[Project Page] [Paper] [Models] [Processed Dataset] [Raw GoPro Videos (Coming Soon)]

Fanqi Lin1,2,3*, Yingdong Hu1,2,3*, Pingyue Sheng1, Chuan Wen1,2,3, Jiacheng You1, Yang Gao1,2,3

1Tsinghua University, 2Shanghai Qi Zhi Institute, 3Shanghai Artificial Intelligence Laboratory

* indicates equal contributions

See the UMI repository for installation.

We release data for all four of our tasks: pour water, arrange mouse, fold towel, and unplug charger. You can view or download all raw GoPro videos from this link (Coming Soon), and generate the dataset for training by running:

bash run_slam.sh && bash run_generate_dataset.sh

Alternatively, we provide a processed dataset here, ready for direct use in training.

You can visualize the dataset with a simple script:

python visualize_dataset.py

For the hardware setup, please refer to the UMI repo (note: we remove the mirror from the gripper, see link).

For each task, we release a policy trained on data collected from 32 unique environment-object pairs, with 50 demonstrations per environment. These policies generalize well to new environments and new objects. You can download them from link and run real-world evaluation using:

bash eval.sh

The temporal_agg parameter in eval.sh refers to the temporal ensemble strategy mentioned in our paper, which enables smoother robot actions.
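To illustrate what a temporal ensemble does, here is a minimal sketch. It assumes the policy predicts a chunk of future actions at every step, so several overlapping chunks contain a prediction for the current step, and it blends them with exponentially decaying weights. The function name `ensemble_action`, the decay rate `k`, and the weighting scheme are assumptions for illustration; they are not taken from eval.sh or the released code.

```python
import numpy as np


def ensemble_action(chunks, step, k=0.01):
    """Blend every available prediction for `step` into one action.

    chunks: list of (start_step, actions) ordered oldest first, where
            actions has shape (horizon, action_dim).
    k:      hypothetical decay rate; k=0 gives a plain average, and
            larger k puts more weight on the oldest prediction.
    """
    # Collect the prediction each overlapping chunk made for this step.
    preds = [
        actions[step - start]
        for start, actions in chunks
        if 0 <= step - start < len(actions)
    ]
    if not preds:
        raise ValueError(f"no chunk covers step {step}")
    preds = np.stack(preds)

    # Exponential weights, index 0 is the oldest prediction.
    weights = np.exp(-k * np.arange(len(preds)))
    weights /= weights.sum()
    return weights @ preds


# Two overlapping chunks that disagree: all zeros vs. all ones.
chunk_a = np.zeros((4, 2))
chunk_b = np.ones((4, 2))
chunks = [(0, chunk_a), (1, chunk_b)]
blended = ensemble_action(chunks, step=1, k=0.0)
```

Averaging overlapping predictions dampens step-to-step jumps between consecutive chunks, which is why this kind of strategy tends to produce smoother motion.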

Additionally, you can use the -j parameter to reset the robot arm to a fixed initial position (make sure that the initial joint configuration specified in example/eval_robots_config.yaml is safe for your robot!).

After downloading the processed dataset, you can train a policy by running:

cd train_scripts && bash <task_name>.sh

For multi-GPU training, configure your setup with accelerate config, then replace python with accelerate launch in the <task_name>.sh script. Additionally, you can speed up training without sacrificing policy performance by adding the --mixed_precision 'bf16' argument.

Note that for the pour_water and unplug_charger tasks, the policy uses one additional step of historical observation during both training and inference.
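As a rough illustration of conditioning on observation history, the sketch below keeps a fixed-length window of recent observations and pads it at the start of an episode by repeating the first observation. The class name `ObsHistory`, the `n_steps` argument, and the padding rule are hypothetical; the actual history length and handling are defined by the training configs in this repository.

```python
from collections import deque

import numpy as np


class ObsHistory:
    """Fixed-length window over the most recent observations."""

    def __init__(self, n_steps):
        # n_steps is a hypothetical name for the history length.
        self.buffer = deque(maxlen=n_steps)

    def push(self, obs):
        """Add one observation and return the stacked window, oldest first."""
        obs = np.asarray(obs)
        if not self.buffer:
            # Episode start: fill the window with copies of the first frame.
            for _ in range(self.buffer.maxlen):
                self.buffer.append(obs)
        else:
            self.buffer.append(obs)
        return np.stack(list(self.buffer))


history = ObsHistory(n_steps=2)
first = history.push([1.0])
second = history.push([2.0])
third = history.push([3.0])
```

The same window would have to be built identically at training and inference time, since the policy input shape depends on the number of history steps.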

The current parameters in the <task_name>.sh scripts correspond to our released models, but you can customize training:

- Use

policy.obs_encoder.model_nameto specify the type of vision encoder for the diffusion policy. Other options includevit_base_patch14_dinov2.lvd142m(DINOv2 ViT-Base) andvit_large_patch14_clip_224.openai(CLIP ViT-Large). - To adjust the number of training environment-object pairs (up to a maximum of 32), modify

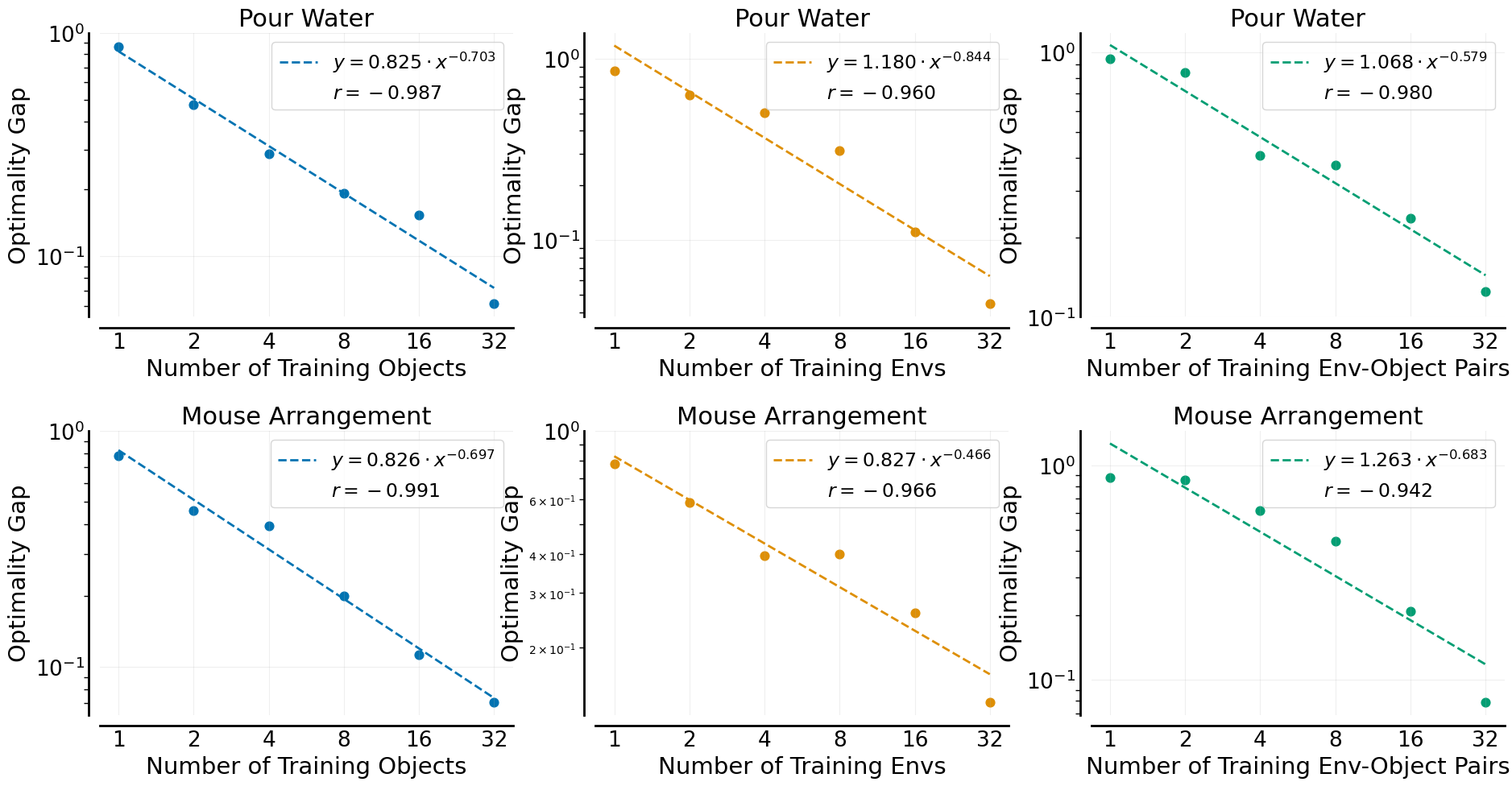

task.dataset.dataset_idx. You can change the proportion of demonstrations used by adjustingtask.dataset.use_ratiowithin the range (0, 1]. Training policies on data from different environment-object pairs, using 100% of the demonstrations, generates scaling curves similar to the following:

The curve (third column) shows that the policy’s ability to generalize to new environments and objects scales approximately as a power law with the number of training environment-object pairs.
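If you want to check for a power-law trend in your own runs, a straight-line fit in log-log space is a common first step. The sketch below assumes a positive metric y that varies with the number of training environment-object pairs n as y ≈ a · n^b. The function name `fit_power_law` is hypothetical, and the data points are synthetic values generated from an exact power law purely to exercise the code; they are not results from the paper.

```python
import numpy as np


def fit_power_law(n, y):
    """Fit y ≈ a * n**b by least squares in log-log space; return (a, b)."""
    n = np.asarray(n, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    # log y = log a + b * log n, so a degree-1 fit gives (b, log a).
    slope, intercept = np.polyfit(np.log(n), np.log(y), deg=1)
    return np.exp(intercept), slope


# Synthetic placeholder data, NOT measurements: an exact power law
# with a = 0.8 and b = -0.5 evaluated at six training-set sizes.
n_pairs = np.array([1, 2, 4, 8, 16, 32], dtype=np.float64)
metric = 0.8 * n_pairs ** -0.5

a, b = fit_power_law(n_pairs, metric)
```

With real evaluation numbers the fit will not be exact, so it is worth also plotting the points on log-log axes and checking how closely they follow a straight line.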

We thank the authors of UMI for sharing their codebase.