The official PyTorch implementation of the paper "Multi-granularity Localization Transformer with Collaborative Understanding for Referring Multi-Object Tracking".

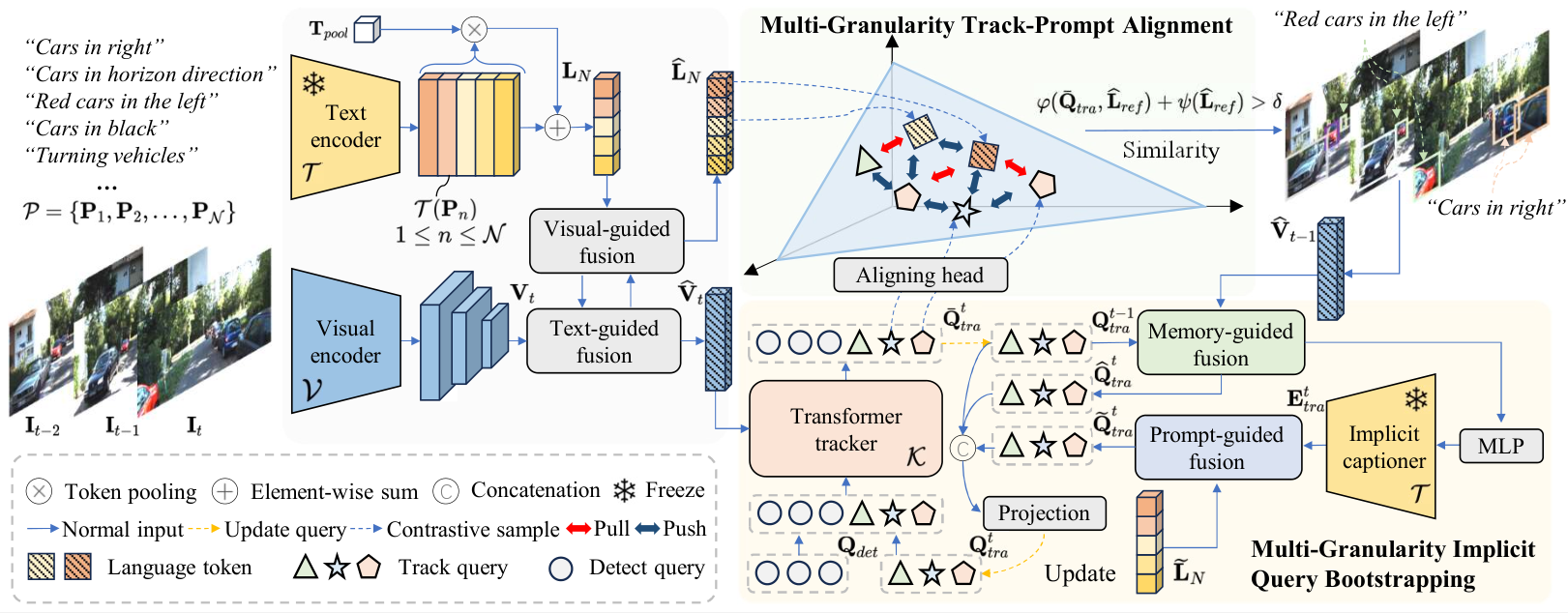

Referring Multi-Object Tracking (RMOT) involves localizing and tracking specific objects in video frames using linguistic prompts as references. To make linguistic prompts more effective during training, we introduce a novel Multi-Granularity Localization Transformer with collaborative understanding, termed MGLT. Unlike previous methods that focus on visual-language fusion and post-processing, MGLT revisits RMOT by addressing two problems: the attenuation of linguistic cues as they propagate through the network, and poor collaborative localization ability. MGLT comprises two key components: Multi-Granularity Implicit Query Bootstrapping (MGIQB) and Multi-Granularity Track-Prompt Alignment (MGTPA). MGIQB preserves tracking and linguistic features in the later layers of the network by bootstrapping the model to generate text-relevant and temporally enhanced track queries. Simultaneously, MGTPA uses multi-granularity linguistic prompts to enhance the model's localization ability by understanding the relative positions of different referred objects within a frame. Extensive experiments on well-recognized benchmarks demonstrate that MGLT achieves state-of-the-art performance. Notably, on the Refer-KITTI dataset it improves HOTA, AssA, and IDF1 by 2.73%, 7.95%, and 3.18%, respectively.

Prepare the data for Refer-KITTI and Refer-BDD.

Before training, download the pretrained weights of Deformable DETR and CLIP-R50.

Then organize the project as follows:

```
├── refer-kitti
│   ├── KITTI
│   ├── training
│   ├── labels_with_ids
│   └── expression
├── refer-bdd
│   ├── BDD
│   ├── training
│   ├── labels_with_ids
│   └── expression
├── weights
│   ├── r50_deformable_detr_plus_iterative_bbox_refinement-checkpoint.pth
│   ├── RN50.pt
...
```
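A quick way to confirm the layout before launching training is a small check script. The sketch below is a hypothetical helper, not part of the MGLT repository. It checks the dataset roots and the two weight files by exact path and, to tolerate extra nesting levels, only requires `training`, `labels_with_ids`, and `expression` to exist somewhere below each dataset directory.

```python
from pathlib import Path

# Hypothetical helper (not part of the MGLT repository): reports which
# entries from the project layout are missing under a given root.
REQUIRED_PATHS = [
    "refer-kitti/KITTI",
    "refer-bdd/BDD",
    "weights/r50_deformable_detr_plus_iterative_bbox_refinement-checkpoint.pth",
    "weights/RN50.pt",
]
# Folder names that only need to exist somewhere below each dataset directory,
# so that an extra nesting level (e.g. under KITTI/ or BDD/) is accepted.
DATASET_SUBDIRS = ["training", "labels_with_ids", "expression"]


def missing_entries(root: str) -> list[str]:
    base = Path(root)
    missing = [p for p in REQUIRED_PATHS if not (base / p).exists()]
    for dataset in ("refer-kitti", "refer-bdd"):
        dataset_dir = base / dataset
        for name in DATASET_SUBDIRS:
            # rglob yields lazily; any() stops at the first matching directory.
            found = dataset_dir.is_dir() and any(
                p.is_dir() for p in dataset_dir.rglob(name)
            )
            if not found:
                missing.append(f"{dataset}/**/{name}")
    return missing


if __name__ == "__main__":
    import sys

    problems = missing_entries(sys.argv[1] if len(sys.argv) > 1 else ".")
    for entry in problems:
        print("missing:", entry)
    print("layout OK" if not problems else f"{len(problems)} entries missing")
```

Run it from the project root (e.g. `python check_layout.py .`, where the file name is arbitrary); an empty report means every entry listed above was found.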

To train MGLT with 4 GPUs, run:

```bash
sh configs/r50_rmot_train.sh
```

To evaluate MGLT with 1 GPU, run:

```bash
sh configs/r50_rmot_test.sh
```

The main results of MGLT:

| Method | Dataset | HOTA | DetA | AssA | DetRe | DetPr | AssRe | AssPr | LocA | MOTA | IDF1 | IDS | URL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MGLT | Refer-KITTI | 49.25 | 37.09 | 65.50 | 49.28 | 58.72 | 69.88 | 88.23 | 91.10 | 21.13 | 55.91 | 2442 | model |
| MGLT | Refer-BDD | 40.26 | 28.44 | 57.59 | 37.24 | 52.48 | 63.52 | 81.87 | 86.98 | 11.68 | 44.41 | 12935 | model |
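The HOTA column is tied to DetA and AssA: in the HOTA metric, each localization threshold α gives HOTA_α = sqrt(DetA_α · AssA_α), and the reported HOTA averages this over α. The sketch below (an illustration, not code from this repository) applies the geometric mean to the averaged DetA and AssA from the table. Because the per-α values are not listed here, this is only an approximation of the reported score.

```python
import math

# Values copied from the results table above (percent).
RESULTS = {
    "Refer-KITTI": {"HOTA": 49.25, "DetA": 37.09, "AssA": 65.50},
    "Refer-BDD": {"HOTA": 40.26, "DetA": 28.44, "AssA": 57.59},
}


def approx_hota(det_a: float, ass_a: float) -> float:
    """Geometric mean of DetA and AssA.

    HOTA is computed as sqrt(DetA_alpha * AssA_alpha) per threshold alpha and
    then averaged, so using the averaged DetA/AssA gives an approximation.
    """
    return math.sqrt(det_a * ass_a)


for name, r in RESULTS.items():
    est = approx_hota(r["DetA"], r["AssA"])
    print(f"{name}: reported HOTA {r['HOTA']:.2f}, sqrt(DetA*AssA) = {est:.2f}")
```

For both rows the estimate lands within a quarter of a point of the reported HOTA. This makes the geometric mean a quick sanity check when reading the detection and association columns side by side.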

This project is under the MIT license. See LICENSE for details.

- 2024.5.8 Release code and checkpoint.
- 2024.3.25 Init repository.

Our project is based on RMOT and CO-MOT. Many thanks to these excellent works.