The official PyTorch implementation of the NeurIPS 2023 paper GenPose.

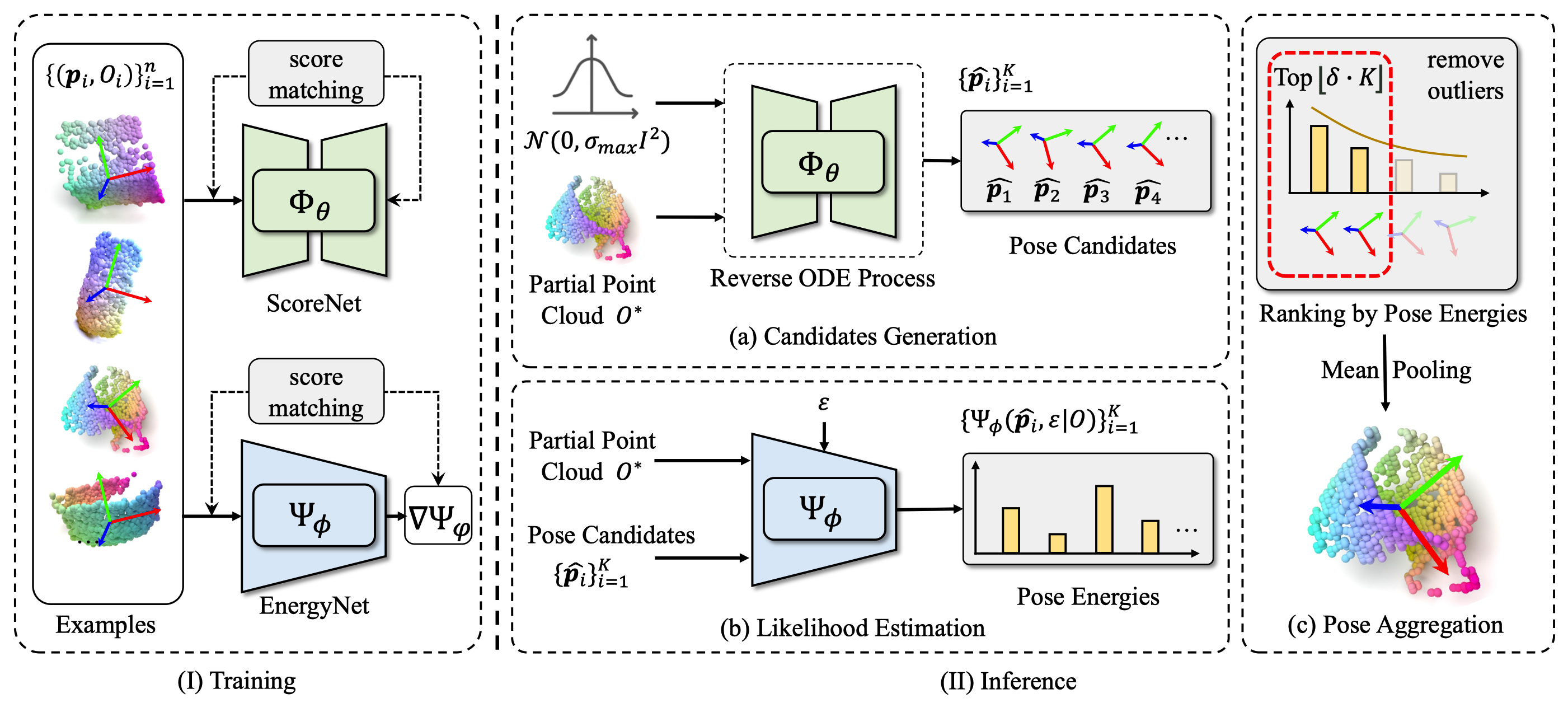

(I) A score-based diffusion model and an energy-based diffusion model are trained via denoising score matching. (II) a) We first generate pose candidates from the score-based model, then b) compute the pose energy of each candidate via the energy-based model. c) Finally, we rank the candidates by energy and filter out the low-ranking ones. The remaining candidates are aggregated into the final output by mean-pooling.
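The rank, filter, and mean-pool steps in (II) can be sketched in a few lines of plain Python. This is only an illustration, not the repository's code: the function name `aggregate_candidates`, the `keep_ratio` parameter and its value, and the sample numbers are all hypothetical. It assumes that a higher energy marks a better candidate, and it treats each candidate as a plain vector (for example a translation); averaging rotations needs a proper rotation mean, which is not shown here.

```python
from typing import List, Sequence


def aggregate_candidates(
    candidates: Sequence[Sequence[float]],
    energies: Sequence[float],
    keep_ratio: float = 0.6,  # hypothetical value, not taken from the paper
) -> List[float]:
    """Rank candidates by energy, drop the low-ranking ones, mean-pool the rest."""
    if len(candidates) != len(energies) or not candidates:
        raise ValueError("need at least one candidate and one energy per candidate")
    # Candidate indices sorted from highest to lowest energy
    # (assumption: higher energy means a better candidate).
    order = sorted(range(len(candidates)), key=lambda i: energies[i], reverse=True)
    # Always keep at least one candidate.
    n_keep = max(1, int(len(order) * keep_ratio))
    kept = [candidates[i] for i in order[:n_keep]]
    dim = len(kept[0])
    # Mean-pool the surviving candidates coordinate by coordinate.
    return [sum(c[d] for c in kept) / n_keep for d in range(dim)]


# Four made-up translation candidates; the last one is an obvious outlier.
candidates = [[0.0, 0.0, 1.0], [0.2, 0.0, 1.0], [0.1, 0.1, 1.0], [5.0, 5.0, 5.0]]
energies = [0.9, 0.8, 0.7, -3.0]
pose = aggregate_candidates(candidates, energies, keep_ratio=0.5)
print(pose)
```

With `keep_ratio=0.5` only the two highest-energy candidates survive, so the outlier does not affect the pooled result.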

Contents of this repo are as follows:

- Overview

- TODO

- Requirements

- Installation

- Download dataset and models

- Training

- Evaluation

- Citation

- Contact

- License

- Release the code for object pose tracking.

- Release the preprocessed data for object pose tracking.

- Ubuntu 20.04

- Python 3.8.15

- PyTorch 1.12.0

- PyTorch3D 0.7.2

- CUDA 11.3

- 1 * NVIDIA RTX 3090
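A quick way to compare an existing environment against the versions listed above is sketched below. It is a convenience check, not part of the repository; the distribution names `torch` and `pytorch3d` and the helper `installed_versions` are assumptions made for illustration.

```python
import sys
from importlib import metadata
from typing import Dict, Iterable, Optional

# Versions copied from the list above; the distribution names are assumptions.
REQUIRED = {"torch": "1.12.0", "pytorch3d": "0.7.2"}


def installed_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Return the installed version of each distribution, or None if absent."""
    found: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


print("python", ".".join(map(str, sys.version_info[:3])), "(listed: 3.8.15)")
for name, have in installed_versions(REQUIRED).items():
    want = REQUIRED[name]
    # CUDA builds carry a local suffix such as "+cu113", so compare by prefix.
    status = "ok" if have is not None and have.startswith(want) else "check"
    print(f"{name}: installed={have} listed={want} [{status}]")
```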

pip install torch==1.12.0+cu113 torchvision==0.13.0+cu113 torchaudio==0.12.0 --extra-index-url https://download.pytorch.org/whl/cu113

git clone https://github.com/facebookresearch/pytorch3d.git

cd pytorch3d

git checkout -f v0.7.2

pip install -e .

pip install -r requirements.txt

cd networks/pts_encoder/pointnet2_utils/pointnet2

python setup.py install

- Download camera_train, camera_val, real_train, real_test, ground-truth annotations and mesh models provided by NOCS and unzip the data. Then move the file "mug_handle.pkl" from this repository's "data/Real/train" folder to the corresponding unzipped folders. The file "mug_handle.pkl" is provided by GPV-Pose. Organize these files in $ROOT/data as follows:

data

├── CAMERA

│ ├── train

│ └── val

├── Real

│ ├── train

│ │ ├── mug_handle.pkl

│ │ └── ...

│ └── test

├── gts

│ ├── val

│ └── real_test

└── obj_models

  ├── train

  ├── val

  ├── real_train

  └── real_test

- Preprocess NOCS files following SPD.
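Before preprocessing or training, it can help to confirm that the data folder matches the layout above. The sketch below is not part of the repository; the helper name `missing_entries` is hypothetical, and it assumes that `train`, `val`, `real_train`, and `real_test` at the end of the tree are sub-folders of `obj_models`.

```python
from pathlib import Path
from typing import List

# Entries from the layout above, relative to $ROOT/data.
EXPECTED_DIRS = [
    "CAMERA/train", "CAMERA/val",
    "Real/train", "Real/test",
    "gts/val", "gts/real_test",
    # Assumption: these four folders live under obj_models.
    "obj_models/train", "obj_models/val",
    "obj_models/real_train", "obj_models/real_test",
]
EXPECTED_FILES = ["Real/train/mug_handle.pkl"]


def missing_entries(data_root: str) -> List[str]:
    """List the expected folders and files that are absent under data_root."""
    root = Path(data_root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing
```

Calling `missing_entries("data")` from `$ROOT` returns an empty list when every expected folder and `mug_handle.pkl` are in place, and otherwise the relative paths that still need to be created or moved.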

We provide the preprocessed testing data (REAL275) and checkpoints here for a quick evaluation. Download and organize the files in $ROOT/results as follows:

results

├── ckpts

│ ├── EnergyNet

│ │ └── ckpt_genpose.pth

│ └── ScoreNet

│   └── ckpt_genpose.pth

├── evaluation_results

│ ├── segmentation_logs_real_test.txt

│ └── segmentation_results_real_test.pkl

└── mrcnn_results

  ├── aligned_real_test

  ├── real_test

  └── val

The ckpts are the trained models of GenPose.

The evaluation_results are the preprocessed testing data, which contain the segmentation results of Mask R-CNN, the segmented point clouds of objects, and the ground-truth poses.

The folder mrcnn_results contains the segmentation results provided by SPD; you can also find it here. Note that the folder mrcnn_results/aligned_real_test contains the manually aligned segmentation results, which are used for object pose tracking.

Note: To evaluate on the CAMERA dataset, you first need to preprocess the dataset as described above.

Set the parameter --data_path in scripts/train_score.sh and scripts/train_energy.sh to the path of your NOCS dataset.

Train the score network to generate the pose candidates.

bash scripts/train_score.sh

Train the energy network to aggregate the pose candidates.

bash scripts/train_energy.sh

Set the parameter --data_path in scripts/eval_single.sh and scripts/eval_tracking.sh to the path of your NOCS dataset.

Set the parameter --test_source in scripts/eval_single.sh to 'real_test' and run:

bash scripts/eval_single.sh

Set the parameter --test_source in scripts/eval_single.sh to 'val' and run:

bash scripts/eval_single.sh

bash scripts/eval_tracking.sh

If you find our work useful in your research, please consider citing:

@article{zhang2023genpose,

title={GenPose: Generative Category-level Object Pose Estimation via Diffusion Models},

author={Jiyao Zhang and Mingdong Wu and Hao Dong},

booktitle={Thirty-seventh Conference on Neural Information Processing Systems},

year={2023},

url={https://openreview.net/forum?id=l6ypbj6Nv5}

}

If you have any questions, please feel free to contact us:

Jiyao Zhang: jiyaozhang@stu.pku.edu.cn

Mingdong Wu: wmingd@pku.edu.cn

This project is released under the MIT license. See LICENSE for additional details.