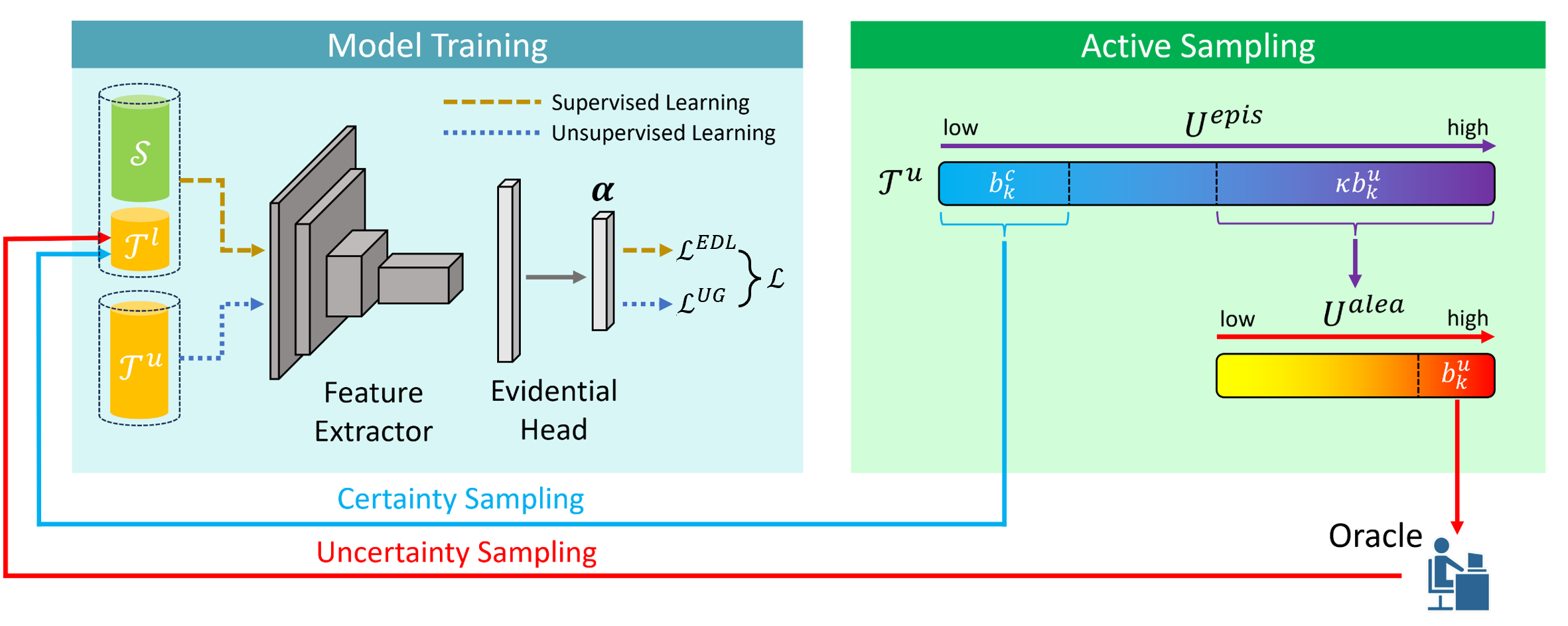

An active domain adaptation framework based on evidential deep learning (EDL), implemented with:

- two sampling strategies: uncertainty sampling and certainty sampling

- two uncertainty quantification methods: entropy-based and variance-based

- three EDL loss functions: negative log-likelihood, cross-entropy, and sum-of-squares
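
For reference, the three loss names above usually refer to the following forms in the EDL literature. This is a sketch under the standard notation, not a transcription of src/loss.py, which may add further terms such as a regularizer. Here $y$ is the one-hot label, $e_k \ge 0$ is the evidence predicted for class $k$, $\alpha_k = e_k + 1$ are the Dirichlet parameters, $S = \sum_k \alpha_k$, $\hat{p}_k = \alpha_k / S$, and $\psi$ is the digamma function.

$$\mathcal{L}_{\mathrm{NLL}} = \sum_{k=1}^{K} y_k \left( \log S - \log \alpha_k \right)$$

$$\mathcal{L}_{\mathrm{CE}} = \sum_{k=1}^{K} y_k \left( \psi(S) - \psi(\alpha_k) \right)$$

$$\mathcal{L}_{\mathrm{SSE}} = \sum_{k=1}^{K} \left[ (y_k - \hat{p}_k)^2 + \frac{\hat{p}_k (1 - \hat{p}_k)}{S + 1} \right]$$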

Official implementation of

Evidential Uncertainty Quantification: A Variance-Based Perspective [WACV 2024]

Ruxiao Duan¹, Brian Caffo¹, Harrison X. Bai², Haris I. Sair², Craig Jones¹

¹Johns Hopkins University, ²Johns Hopkins University School of Medicine


- src/config.py: parameter configurations.

- src/data.py: data preprocessing and loading.

- src/loss.py: loss functions.

- src/model.py: model architecture.

- src/sampling.py: uncertainty and certainty sampling strategies.

- src/train.py: model training.

- src/transforms.py: image transformations.

- src/uncertainty.py: uncertainty quantification.

- src/utils.py: utility functions.
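
To make the roles of src/uncertainty.py and src/sampling.py concrete, here is a minimal pure-Python sketch of how Dirichlet parameters could be turned into an uncertainty score and then used for selection. It is an illustration under assumptions, not the repository's code: the function names are hypothetical, the entropy score is the Shannon entropy of the expected class probabilities, and the variance score is the sum of the Dirichlet marginal variances, which may differ from the exact formulation used in the paper.

```python
import math
from typing import List, Sequence


def expected_probs(alpha: Sequence[float]) -> List[float]:
    """Mean of a Dirichlet distribution with concentration parameters alpha."""
    total = sum(alpha)
    return [a / total for a in alpha]


def entropy_uncertainty(alpha: Sequence[float]) -> float:
    """Shannon entropy of the expected class probabilities."""
    return -sum(p * math.log(p) for p in expected_probs(alpha) if p > 0)


def variance_uncertainty(alpha: Sequence[float]) -> float:
    """Sum over classes of the marginal variances p_k * (1 - p_k) / (S + 1)."""
    total = sum(alpha)
    return sum(p * (1 - p) / (total + 1) for p in expected_probs(alpha))


def uncertainty_sampling(scores: Sequence[float], k: int) -> List[int]:
    """Indices of the k samples with the highest uncertainty scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


def certainty_sampling(scores: Sequence[float], k: int) -> List[int]:
    """Indices of the k samples with the lowest uncertainty scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    return order[:k]


# Toy pool of three unlabeled samples, each described by its Dirichlet parameters.
pool = [[1.0, 1.0, 1.0], [98.0, 1.0, 1.0], [5.0, 4.0, 1.0]]
scores = [variance_uncertainty(alpha) for alpha in pool]
most_uncertain = uncertainty_sampling(scores, k=1)
most_certain = certainty_sampling(scores, k=1)
```

In this toy pool the sample with no evidence (all parameters equal to 1) is the most uncertain under both scores, and the sample with strong evidence for one class is the most certain. What is done with the selected samples is outside the scope of this sketch.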

- Install environment:

git clone https://github.com/KerryDRX/EvidentialADA.git

cd EvidentialADA

conda create -y --name active python=3.7.5

conda activate active

pip install -r requirements.txt

- Download the Office-Home or VisDA-2017 dataset to your local environment. Image files should be stored in the hierarchy `<dataset-folder>/<domain>/<class>/<image-filename>`.
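
As a quick sanity check of the folder hierarchy described above, the following sketch walks one domain folder and collects (image path, class name) pairs. It is not part of the repository: `list_images` is a hypothetical helper, and the `Art` domain and the dataset path in the usage note are only examples.

```python
from pathlib import Path
from typing import List, Tuple


def list_images(dataset_dir: str, domain: str) -> List[Tuple[str, str]]:
    """Return (image_path, class_name) pairs found under <dataset_dir>/<domain>/<class>/."""
    domain_dir = Path(dataset_dir) / domain
    samples = []
    # Each sub-folder of the domain folder is treated as one class.
    for class_dir in sorted(p for p in domain_dir.iterdir() if p.is_dir()):
        # Each file inside a class folder is treated as one image of that class.
        for image_path in sorted(p for p in class_dir.iterdir() if p.is_file()):
            samples.append((str(image_path), class_dir.name))
    return samples
```

For example, `list_images("/path/to/Office-Home", "Art")` would return one entry per image in the `Art` domain, provided the dataset follows the layout above. An empty result or a `FileNotFoundError` suggests the folder structure does not match.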

- In src/config.py:

  - Set `DATASET.NAME` to `"Office-Home"` or `"Visda-2017"`.

  - Set `PATHS.DATA_DIR` to `<dataset-folder>`.

  - Set `PATHS.OUTPUT_DIR` to the output folder.

  - Set other parameters.
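
Before training, it can help to check the three settings named above. The sketch below is only an illustration: the actual structure of the object in src/config.py is not shown here, so a `SimpleNamespace` stands in for it, and `check_config` and `ALLOWED_DATASETS` are hypothetical names.

```python
import os
from types import SimpleNamespace
from typing import List

# Dataset names accepted by DATASET.NAME according to the instructions above.
ALLOWED_DATASETS = ("Office-Home", "Visda-2017")


def check_config(cfg) -> List[str]:
    """Return a list of human-readable problems; an empty list means the checks passed."""
    problems = []
    if cfg.DATASET.NAME not in ALLOWED_DATASETS:
        problems.append(f"DATASET.NAME must be one of {ALLOWED_DATASETS}")
    if not os.path.isdir(cfg.PATHS.DATA_DIR):
        problems.append(f"PATHS.DATA_DIR is not a directory: {cfg.PATHS.DATA_DIR}")
    if not cfg.PATHS.OUTPUT_DIR:
        problems.append("PATHS.OUTPUT_DIR is empty")
    return problems


# Stand-in configuration object (assumption: the real one exposes the same dotted names).
example_cfg = SimpleNamespace(
    DATASET=SimpleNamespace(NAME="Office-Home"),
    PATHS=SimpleNamespace(DATA_DIR=os.getcwd(), OUTPUT_DIR="outputs"),
)
problems = check_config(example_cfg)
```

An empty `problems` list means the dataset name is one of the two supported values, the data folder exists, and an output folder has been set.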

- Train the model:

python src/train.py

- Results are saved to `PATHS.OUTPUT_DIR`.

The active learning framework is partially adapted from Dirichlet-based Uncertainty Calibration.

    @inproceedings{duan2024evidential,
      title={Evidential Uncertainty Quantification: A Variance-Based Perspective},
      author={Duan, Ruxiao and Caffo, Brian and Bai, Harrison X and Sair, Haris I and Jones, Craig},
      booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
      year={2024}
    }