The official repository of SimSwap, implemented in PyTorch.

Our method can perform arbitrary face swapping on images and videos with a single trained model.

Training and test code are now available!

We are working on our upcoming paper, SimSwap++. Stay tuned!

The high-resolution version, SimSwap-HQ, is supported!

Our paper can be downloaded from [arXiv] [ACM DOI].

This project is for technical and academic use only. Please do not use it in illegal or unethical scenarios.

If a user violates the legal or ethical requirements of their country or region, this code repository is exempt from liability.

Please do not ignore the content at the end of this README!

If you find this project useful, please star it. That is the greatest appreciation of our work.

2023-04-25: We have fixed the "AttributeError: 'SGD' object has no attribute 'defaults'" bug. If you have already downloaded arcface_checkpoint.tar, please download it again. You also need to update the scripts in ./models/.

2022-04-21: For resource-limited users, we provide the cropped VGGFace2-224 dataset: [Google Drive] VGGFace2-224 (10.8 GB) [Baidu Drive] [Password: lrod].

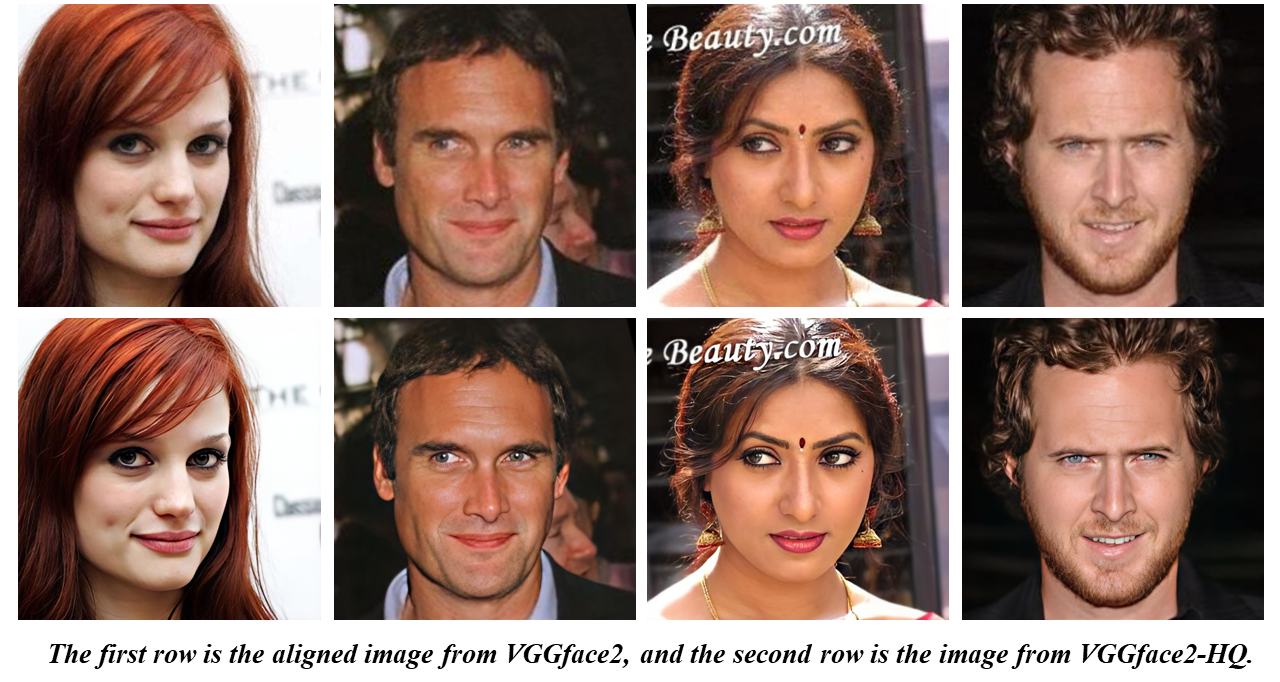

2022-04-20: Training scripts are now available. We highly recommend training the SimSwap model with our released high-quality dataset, VGGFace2-HQ.

2021-11-24: We have trained a beta version of SimSwap-HQ on VGGFace2-HQ and open-sourced the checkpoint of this model (if you think SimSwap 512 is cool, please star our VGGFace2-HQ repo). Please don’t forget to check Preparation and Inference for image or video face swapping for the latest setup.

2021-11-23: The Google Drive link for VGGFace2-HQ has been released.

2021-11-17: We released a high-resolution face dataset, VGGFace2-HQ, together with the method used to generate it. This dataset is for research purposes.

2021-08-30: Docker is now supported; please refer here for details.

2021-08-17: We have updated Preparation. The main change is that the GPU version of ONNX is now installed by default, which greatly reduces the time needed to process a video.

2021-07-19: Obvious abrupt borders have been resolved. We added the option to use a mask and upgraded the old algorithm for better visual results; please go to Inference for image or video face swapping for details. Please don’t forget to check Preparation for the latest setup. (Thanks to @woctezuma and @instant-high for their help.)
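The mask option mentioned above is not described in detail in this README. As a generic illustration only (not SimSwap's actual blending code), the sketch below shows one common way a mask removes abrupt borders: soften a binary face mask with a box blur, then use it as a per-pixel alpha when pasting the swapped face back into the frame. The function names `box_blur` and `feathered_blend`, the blur radius, and the use of NumPy are assumptions made for this example.

```python
import numpy as np

# Generic feathered-mask blending, for illustration only; this is NOT the
# repository's implementation. Function names and parameters are hypothetical.


def box_blur(mask: np.ndarray, radius: int) -> np.ndarray:
    """Separable box blur of a 2-D mask; borders are padded by edge replication."""
    if radius <= 0:
        return mask.astype(np.float64)
    k = 2 * radius + 1
    kernel = np.ones(k) / k
    padded = np.pad(mask.astype(np.float64), radius, mode="edge")
    # Blur along rows, then along columns; mode="valid" trims the padding off again.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def feathered_blend(original: np.ndarray, swapped: np.ndarray,
                    mask: np.ndarray, radius: int = 5) -> np.ndarray:
    """Alpha-blend `swapped` into `original` using a softened binary mask.

    original, swapped: float arrays of shape (H, W, 3).
    mask: array of shape (H, W), 1 inside the face region and 0 outside.
    """
    alpha = np.clip(box_blur(mask, radius), 0.0, 1.0)[..., None]  # (H, W, 1)
    return alpha * swapped + (1.0 - alpha) * original


# Toy example: a black "original" frame and a white "swapped" frame.
h, w = 64, 64
original = np.zeros((h, w, 3))
swapped = np.ones((h, w, 3))
mask = np.zeros((h, w))
mask[16:48, 16:48] = 1.0
out = feathered_blend(original, swapped, mask, radius=4)

# Face centre, far corner, and a pixel on the mask edge (a soft transition).
print(round(float(out[32, 32, 0]), 3), round(float(out[0, 0, 0]), 3),
      round(float(out[32, 16, 0]), 3))  # prints: 1.0 0.0 0.556
```

Instead of a hard jump from 0 to 1 at the mask edge, pixels near the boundary take intermediate values, which is what hides the seam. Larger radii give wider, smoother transitions at the cost of letting more of the original face show through near the edge.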

High-Resolution Dataset VGGFace2-HQ

Dependencies

- Python 3.6+

- PyTorch 1.5+

- torchvision

- opencv

- pillow

- numpy

- imageio

- moviepy

- insightface
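To check an environment against the list above before running anything, a small script can look up each package's import name and compare version numbers. This is a minimal sketch, not a script shipped with the repository; the helper names `parse_version`, `meets_minimum`, and `missing_modules` are hypothetical. Note that some import names differ from the package names (opencv is imported as `cv2`, pillow as `PIL`).

```python
import importlib.util
import sys

# Package name from the dependency list -> module name used in `import`.
REQUIRED_MODULES = {
    "pytorch": "torch",
    "torchvision": "torchvision",
    "opencv": "cv2",
    "pillow": "PIL",
    "numpy": "numpy",
    "imageio": "imageio",
    "moviepy": "moviepy",
    "insightface": "insightface",
}


def parse_version(text: str) -> tuple:
    """Turn e.g. '1.13.1+cu117' into (1, 13, 1).

    Drops any '+local' suffix, keeps the leading digits of each dot-separated
    part, and stops at the first part that has no leading digits.
    """
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(version: str, minimum: tuple) -> bool:
    """Compare a version string against a minimum such as (1, 5)."""
    return parse_version(version) >= minimum


def missing_modules(modules: dict = REQUIRED_MODULES) -> list:
    """Return the packages whose import name cannot be found (nothing is imported)."""
    return [pkg for pkg, mod in modules.items() if importlib.util.find_spec(mod) is None]


if __name__ == "__main__":
    print("Python >= 3.6:", sys.version_info >= (3, 6))
    missing = missing_modules()
    print("Missing:", ", ".join(missing) if missing else "none")
    if "pytorch" not in missing:
        import torch
        print("PyTorch >= 1.5:", meets_minimum(torch.__version__, (1, 5)))
```

Comparing integer tuples rather than raw strings matters here: as strings, "1.10" sorts before "1.5", while as tuples (1, 10) correctly compares greater than (1, 5).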

The training script differs slightly from the original version. For example, we replace the patch discriminator with a projected discriminator, which greatly reduces hardware overhead and achieves slightly better results.

To ensure normal training, the batch size must be greater than 1.
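If you wrap the training command in your own launcher, the batch-size requirement above can be enforced up front rather than discovered mid-run. The sketch below is hypothetical: it is not part of `train.py`, only reuses the `--batchSize` flag name from the example commands, and its default of 16 is an illustrative choice based on the batch size recommended in this README.

```python
import argparse


def batch_size_type(value: str) -> int:
    """argparse `type` callable that rejects batch sizes of 1 or less."""
    size = int(value)  # a non-integer raises ValueError, which argparse also reports
    if size <= 1:
        raise argparse.ArgumentTypeError(f"batch size must be greater than 1, got {size}")
    return size


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical pre-flight parser; only validates the batch size option."""
    parser = argparse.ArgumentParser(description="Pre-flight check for training options")
    parser.add_argument("--batchSize", type=batch_size_type, default=16)
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print("batch size OK:", args.batchSize)
```

Raising `argparse.ArgumentTypeError` from the `type` callable makes argparse print a usage error and exit with status 2, so an invalid value such as `--batchSize 1` stops the launcher before any model or dataset is loaded.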

Friendly reminder: due to differences in training settings, models trained by users will show subtle visual differences from the pre-trained models we provide.

- Train 224 models with VGGFace2 224×224: [Google Drive] VGGFace2-224 (10.8 GB) [Baidu Drive] [Password: lrod]

For faster convergence and better results, a large batch size (more than 16) is recommended!

We recommend training for more than 400K iterations (with a batch size of 16). 600K to 800K iterations will give better results; training for more iterations than that is not recommended.
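The iteration recommendation above is stated for a batch size of 16, while the example command that follows uses `--batchSize 8`. A quick calculation of how many samples are drawn helps when adapting the schedule. This is a rough sketch with hypothetical helper names; keeping the number of samples constant is only a heuristic, since a smaller batch also changes gradient noise and the README recommends large batches for faster convergence.

```python
def images_seen(iterations: int, batch_size: int) -> int:
    """Total number of training samples drawn (repeats counted)."""
    return iterations * batch_size


def equivalent_iterations(iterations: int, ref_batch: int, new_batch: int) -> int:
    """Iterations at `new_batch` that draw as many samples as `iterations` at `ref_batch`.

    Uses integer ceiling division so the new schedule never draws fewer samples.
    """
    return (iterations * ref_batch + new_batch - 1) // new_batch


for its in (400_000, 600_000, 800_000):
    print(its, images_seen(its, 16))
# prints:
# 400000 6400000
# 600000 9600000
# 800000 12800000

print(equivalent_iterations(400_000, 16, 8))  # prints: 800000
```

So the recommended 400K to 800K iterations at batch size 16 correspond to roughly 6.4M to 12.8M samples; halving the batch size to 8 would need about twice as many iterations to draw the same number.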

python train.py --name simswap224_test --batchSize 8 --gpu_ids 0 --dataset /path/to/VGGFace2HQ --Gdeep False

Colab demo for training the 224 model


- Train 512 models with VGGFace2-HQ (512×512): VGGFace2-HQ.

python train.py --name simswap512_test --batchSize 16 --gpu_ids 0 --dataset /path/to/VGGFace2HQ --Gdeep True
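The two example commands above differ only in `--name`, `--batchSize`, and `--Gdeep`. If you launch runs from a script, a small preset table keeps them consistent. This is a hypothetical helper, not part of the repository: it uses only the flags and values shown in the two commands, passes `--Gdeep` as the literal string `True`/`False` exactly as they do, and keeps `/path/to/VGGFace2HQ` as a placeholder you must replace.

```python
import shlex
import subprocess

# Presets copied from the two example commands; no other train.py flags are assumed.
PRESETS = {
    224: {"name": "simswap224_test", "batchSize": 8, "Gdeep": False},
    512: {"name": "simswap512_test", "batchSize": 16, "Gdeep": True},
}


def build_train_command(resolution: int, dataset: str, gpu_ids: str = "0") -> list:
    """Return the argument list for one of the example training commands."""
    preset = PRESETS[resolution]
    return [
        "python", "train.py",
        "--name", preset["name"],
        "--batchSize", str(preset["batchSize"]),
        "--gpu_ids", gpu_ids,
        "--dataset", dataset,
        "--Gdeep", str(preset["Gdeep"]),  # str(True) -> "True", as in the examples
    ]


def launch(cmd: list) -> None:
    """Run the command from the repository root; raises CalledProcessError on failure."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmd = build_train_command(512, "/path/to/VGGFace2HQ")
    print(shlex.join(cmd))  # review the command before calling launch(cmd)
```

Passing an argument list to `subprocess.run` (rather than a single shell string) avoids quoting problems when the dataset path contains spaces; `shlex.join` (Python 3.8+) is used only to print a copy-pasteable version.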

Inference for image or video face swapping

Colab for switching specific faces in multi-face videos

Image face swapping demo & Docker image on Replicate

High-quality videos can be found at the links below:

[Google Drive link for video 1]

[Google Drive link for video 2]

[Google Drive link for video 3]

[Baidu Drive link for video] Password: b26n

If you get interesting results with our project and are willing to share them, you can contact us by email or post them directly in an issue. We may later add a separate section to showcase these results.

If you have suggestions for our project, please feel free to raise them in an issue or contact us directly via email: email1, email2, email3 (any one of the three will do).

For academic and non-commercial use only. The whole project is under the CC-BY-NC 4.0 license. See LICENSE for additional details.

@inproceedings{DBLP:conf/mm/ChenCNG20,
  author    = {Renwang Chen and
               Xuanhong Chen and
               Bingbing Ni and
               Yanhao Ge},
  title     = {SimSwap: An Efficient Framework For High Fidelity Face Swapping},
  booktitle = {{MM} '20: The 28th {ACM} International Conference on Multimedia},
  year      = {2020}
}

Please visit our other ACM MM 2020 project on high-quality style transfer

Please visit our AAAI 2021 sketch-based rendering project

Please visit our high-resolution face dataset VGGFace2-HQ

Learn about our other projects