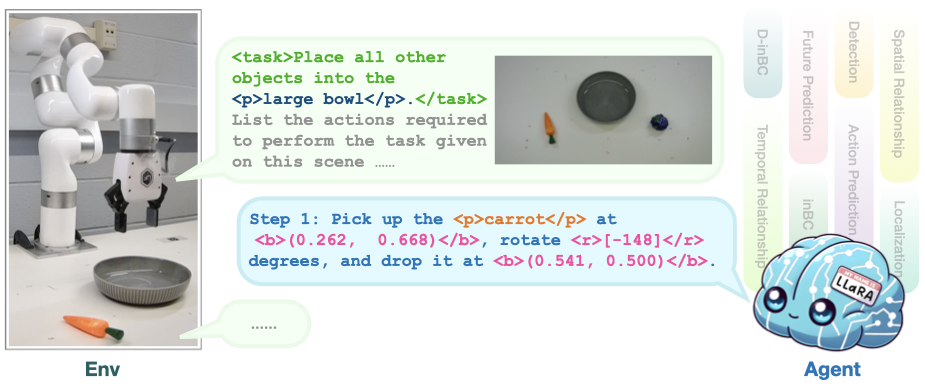

LLaRA: Supercharging Robot Learning Data for Vision-Language Policy [Arxiv]

Xiang Li¹, Cristina Mata¹, Jongwoo Park¹, Kumara Kahatapitiya¹, Yoo Sung Jang¹, Jinghuan Shang¹, Kanchana Ranasinghe¹, Ryan Burgert¹, Mu Cai², Yong Jae Lee², and Michael S. Ryoo¹

¹Stony Brook University, ²University of Wisconsin-Madison


Set Up Python Environment:

Follow LLaVA's installation instructions to set up the same Python environment that LLaVA uses. Details are available in the LLaVA repository.


Replace LLaVA Implementation:

Navigate to `train-llava` in this repo and install the `llava` package there:

```shell
cd train-llava && pip install -e .
```
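Because this step is meant to replace the upstream LLaVA implementation, it can be worth checking which copy of `llava` Python actually imports afterwards. The sketch below is a hypothetical helper, not part of this repo: `llava_is_local` is an illustrative name, and it assumes the editable install leaves `llava.__file__` pointing inside the `train-llava/` directory.

```shell
# Hypothetical helper (not part of the LLaRA repo).
# Succeeds (exit status 0) if the given module path lies inside a
# train-llava/ directory, i.e. the editable install from this repo is the
# copy of llava that Python resolves.
llava_is_local() {
  case "$1" in
    */train-llava/*) return 0 ;;
    *) return 1 ;;
  esac
}
```

One way to use it: `llava_is_local "$(python3 -c 'import llava; print(llava.__file__)')" || echo "llava is not imported from train-llava"`.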

Install VIMABench:

Complete the VIMABench setup so that its functionality is available in your environment.


Download the Pretrained Model:

Download the following model to `./checkpoints/`:

- llava-1.5-7b-D-inBC + Aux(B) trained on VIMA-80k (Hugging Face)

More models are available in the Model Zoo.
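Before running the evaluation, a quick pre-flight check can catch a missing or incomplete download. This is a minimal sketch with a hypothetical function name, `checkpoint_ready`: it only checks that the directory exists and is non-empty, and it assumes the model was saved under the directory name used by `--model-path` in the evaluation command (`llava-1.5-7b-llara-D-inBC-Aux-B-VIMA-80k`).

```shell
# Hypothetical pre-flight check (not part of the LLaRA repo): succeed only if
# the checkpoint directory exists and contains at least one entry.
checkpoint_ready() {
  ckpt_dir="$1"
  # -d: the path exists and is a directory
  [ -d "$ckpt_dir" ] || return 1
  # ls -A lists all entries except . and ..; empty output means an empty directory
  [ -n "$(ls -A "$ckpt_dir")" ]
}
```

For example, from the repository root: `checkpoint_ready ./checkpoints/llava-1.5-7b-llara-D-inBC-Aux-B-VIMA-80k || echo "checkpoint missing or empty"`.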


Run the evaluation:

```shell
cd eval
# evaluate the model with oracle object detector
python3 eval-llara.py D-inBC-AuxB-VIMA-80k \
  --model-path ../checkpoints/llava-1.5-7b-llara-D-inBC-Aux-B-VIMA-80k \
  --prompt-mode hso
# the results will be saved to ../results/[hso]D-inBC-AuxB-VIMA-80k.json
```
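The results file name combines the prompt mode and the experiment name. A hypothetical helper that reconstructs it is sketched below; it assumes the `../results/[<prompt-mode>]<name>.json` pattern from the example above holds for other runs, which this README does not state explicitly.

```shell
# Hypothetical helper (not part of the LLaRA repo): print the results path for
# a given prompt mode and experiment name, relative to the eval/ directory.
result_path() {
  prompt_mode="$1"
  exp_name="$2"
  printf '../results/[%s]%s.json\n' "$prompt_mode" "$exp_name"
}
```

Quote the path when using it, e.g. `cat "$(result_path hso D-inBC-AuxB-VIMA-80k)"`. Unquoted, `[hso]` is treated as a shell glob bracket expression that matches a single `h`, `s`, or `o` character rather than the literal text `[hso]`.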

Check the results:

Please refer to `llara-result.ipynb`.


Prepare the Dataset:

Visit the datasets directory to prepare your dataset for training.


Finetune a LLaVA Model:

To start finetuning a LLaVA model, refer to the instructions in train-llava.


Evaluate the Trained Model:

Follow the steps in eval to assess the performance of your trained model.


Train a MaskRCNN for Object Detection:

If your project requires training a MaskRCNN for object detection, check out train-maskrcnn for detailed steps.

If you encounter any issues or have questions about the project, please submit an issue on our GitHub issues page.

This project is licensed under the Apache-2.0 License - see the LICENSE file for details.

If you find this work useful in your research, please consider giving it a star ⭐ and cite our work:

```bibtex
@article{li2024llara,
  title={LLaRA: Supercharging Robot Learning Data for Vision-Language Policy},
  author={Li, Xiang and Mata, Cristina and Park, Jongwoo and Kahatapitiya, Kumara and Jang, Yoo Sung and Shang, Jinghuan and Ranasinghe, Kanchana and Burgert, Ryan and Cai, Mu and Lee, Yong Jae and Ryoo, Michael S.},
  journal={arXiv preprint arXiv:2406.20095},
  year={2024}
}
```

Thanks!