PyTorch implementation of HS-Pose: Hybrid Scope Feature Extraction for Category-level Object Pose Estimation. (Paper, Project)

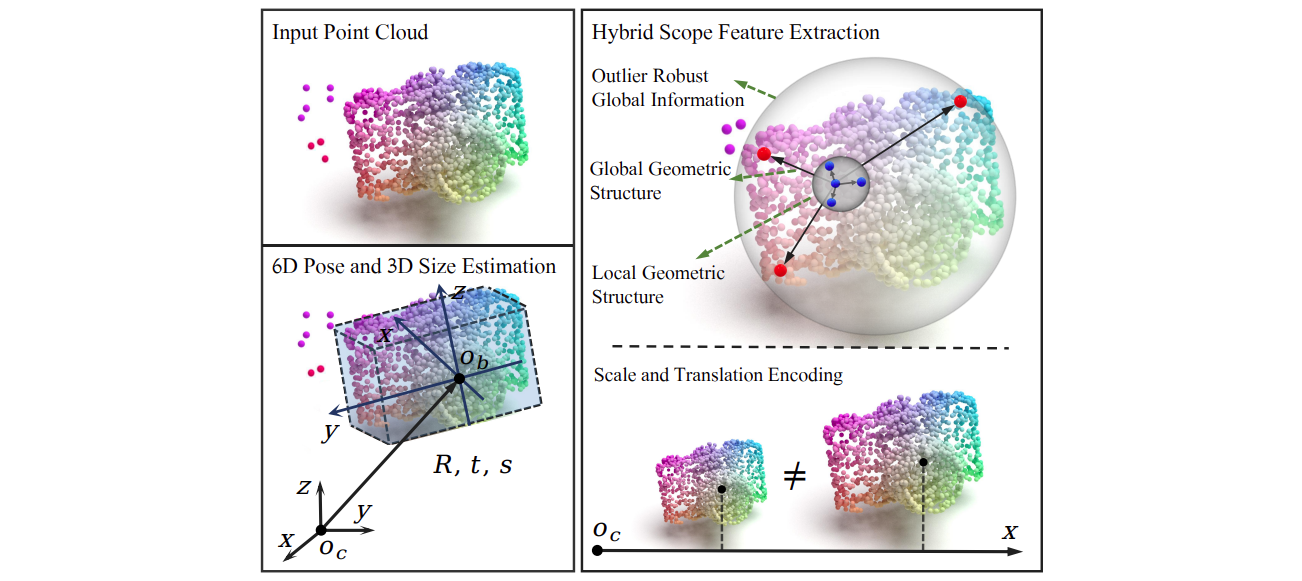

Illustration of the hybrid feature extraction.

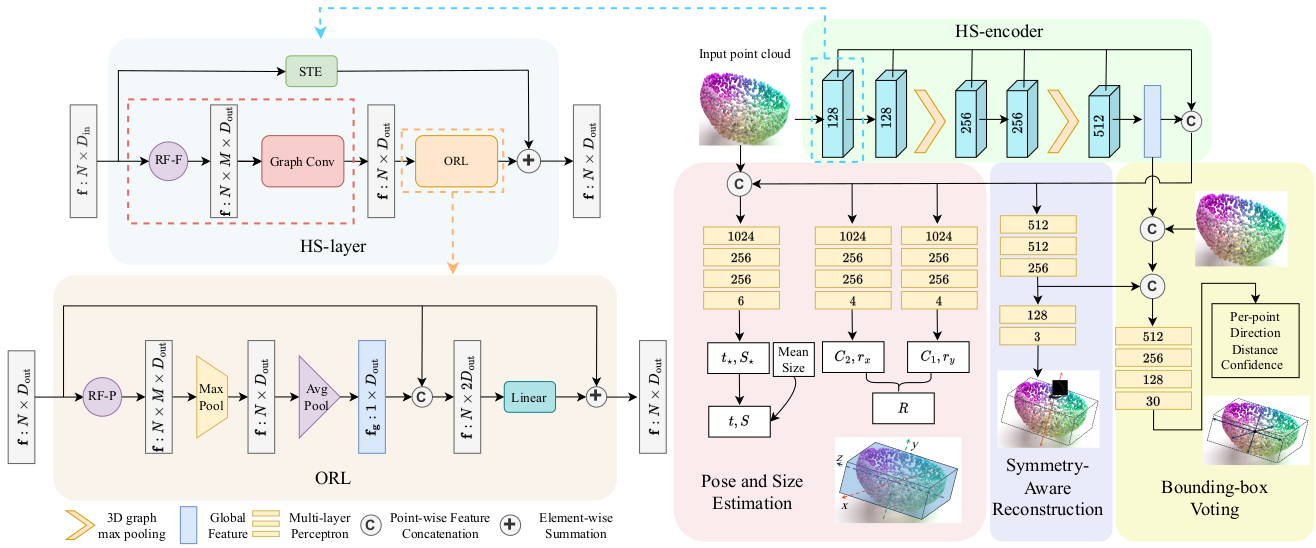

The overall framework.

- Ubuntu 18.04

- Python 3.8

- PyTorch 1.10.1

- CUDA 11.2

- 1 * RTX 3090
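As a quick sanity check against the list above, the following sketch compares the versions found on a machine with the tested ones. `TESTED`, `report_mismatches`, and `detect_versions` are illustrative names and not part of the repository; the check only reports differences, since other versions may still work.

```python
import platform

# Tested configuration from the list above (illustrative constant, not part of the repository).
TESTED = {"python": "3.8", "torch": "1.10.1", "cuda": "11.2"}


def report_mismatches(found, tested=TESTED):
    """Return a human-readable note for every component whose version differs."""
    notes = []
    for name, want in tested.items():
        have = found.get(name)
        if have is None:
            notes.append(f"{name}: not found (tested with {want})")
        elif not have.startswith(want):
            notes.append(f"{name}: found {have}, tested with {want}")
    return notes


def detect_versions():
    """Collect versions from the running interpreter; PyTorch is optional here."""
    found = {"python": platform.python_version()}
    try:
        import torch
        found["torch"] = torch.__version__
        found["cuda"] = torch.version.cuda  # None for CPU-only builds
    except ImportError:
        pass  # torch is simply reported as "not found"
    return found


if __name__ == "__main__":
    for note in report_mismatches(detect_versions()):
        print(note)
```

An empty output means the interpreter, PyTorch build, and CUDA toolkit version match the tested setup.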

cd HS-Pose

virtualenv HS-Pose-env -p /usr/bin/python3.8

Then copy and paste the following lines to the end of the ./HS-Pose-env/bin/activate file:

CUDAVER=cuda-11.2

export PATH=/usr/local/$CUDAVER/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/$CUDAVER/lib:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH=/usr/local/$CUDAVER/lib64:$LD_LIBRARY_PATH

export CUDA_PATH=/usr/local/$CUDAVER

export CUDA_ROOT=/usr/local/$CUDAVER

export CUDA_HOME=/usr/local/$CUDAVER

Then, use source to activate the virtualenv:

source HS-Pose-env/bin/activate
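If you prefer not to edit the activate file by hand, the export lines can also be appended with a small script. This is a minimal sketch: `append_cuda_exports` and `EXPORT_TEMPLATE` are hypothetical helpers, not part of the repository, and the sketch assumes CUDA is installed under /usr/local as in the lines above.

```python
from pathlib import Path

# Same export lines as listed above; {cuda_ver} is filled in by the helper.
EXPORT_TEMPLATE = """
CUDAVER={cuda_ver}
export PATH=/usr/local/$CUDAVER/bin:$PATH
export LD_LIBRARY_PATH=/usr/local/$CUDAVER/lib:$LD_LIBRARY_PATH
export LD_LIBRARY_PATH=/usr/local/$CUDAVER/lib64:$LD_LIBRARY_PATH
export CUDA_PATH=/usr/local/$CUDAVER
export CUDA_ROOT=/usr/local/$CUDAVER
export CUDA_HOME=/usr/local/$CUDAVER
"""


def append_cuda_exports(activate_path, cuda_ver="cuda-11.2"):
    """Append the CUDA exports to an activate script; return False if already present."""
    path = Path(activate_path)
    text = path.read_text()
    if f"CUDAVER={cuda_ver}" in text:
        return False  # already patched, avoid duplicating the block
    path.write_text(text + EXPORT_TEMPLATE.format(cuda_ver=cuda_ver))
    return True
```

For example, `append_cuda_exports("HS-Pose-env/bin/activate")` patches the virtualenv created above, and calling it a second time leaves the file unchanged.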

- Install CUDA-11.2

- Install basic packages:

chmod +x env_setup.sh

./env_setup.sh

To generate your own dataset, use the data preprocessing code provided in this git. Download the detection results in this git. Change dataset_dir and detection_dir to your own paths.

Since the handle visibility labels are not provided in the original NOCS REAL275 train set, please put the handle visibility file ./mug_handle.pkl under the YOUR_NOCS_DIR/Real/train/ folder. The mug_handle.pkl file was manually labeled and originally provided by GPV-Pose.
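The copy step can be scripted as in the sketch below. `install_mug_handle` is a hypothetical helper, not part of the repository; it assumes the REAL275 train folder already exists and refuses to create it, so a wrong YOUR_NOCS_DIR is caught early.

```python
import shutil
from pathlib import Path


def install_mug_handle(nocs_dir, src="./mug_handle.pkl"):
    """Copy the handle visibility file into <nocs_dir>/Real/train/ and return the new path."""
    train_dir = Path(nocs_dir) / "Real" / "train"
    if not train_dir.is_dir():
        # Do not create the folder: a missing train set usually means a wrong dataset path.
        raise FileNotFoundError(f"expected REAL275 train folder at {train_dir}")
    return Path(shutil.copy(src, train_dir / "mug_handle.pkl"))
```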

Download the trained model from this google link or baidu link (code: w8pw). After downloading it, extract it and put the HS-Pose_weights folder into the output/models/ folder.
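Before running the evaluation, it can help to confirm that the extracted weights are where the commands expect them. The sketch below is illustrative only: `missing_checkpoints` is a hypothetical helper, and the `model.pth` file name is taken from the `--resume_model` paths used in this README.

```python
from pathlib import Path


def missing_checkpoints(folders=("HS-Pose_weights",), root="output/models"):
    """Return the expected model.pth paths that do not exist yet."""
    expected = [Path(root) / name / "model.pth" for name in folders]
    return [str(p) for p in expected if not p.is_file()]
```

An empty list means every listed weights folder contains a `model.pth`; pass additional folder names in `folders` to check other downloaded checkpoints.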

Run the following command to check the results for REAL275 dataset:

python -m evaluation.evaluate --model_save output/models/HS-Pose_weights/eval_result --resume 1 --resume_model ./output/models/HS-Pose_weights/model.pth --eval_seed 1677483078

Download the trained model from this google link or baidu link (code: 9et7). After downloading it, extract it and put the HS-Pose_CAMERA25_weights folder into the output/models/ folder.

Run the following command to check the results for CAMERA25 dataset:

python -m evaluation.evaluate --model_save output/models/HS-Pose_CAMERA25_weights/eval_result --resume 1 --resume_model ./output/models/HS-Pose_CAMERA25_weights/model.pth --eval_seed 1678917637 --dataset CAMERA

Please note that some details have been changed from the original paper for more efficient training.

Specify the dataset directory and run the following command.

python -m engine.train --dataset_dir YOUR_DATA_DIR --model_save SAVE_DIR

Detailed configurations are in config/config.py.

python -m evaluation.evaluate --dataset_dir YOUR_DATA_DIR --detection_dir DETECTION_DIR --resume 1 --resume_model MODEL_PATH --model_save SAVE_DIR

You can run the following training and testing commands to get results similar to those in the table below.

python -m engine.train --model_save output/models/HS-Pose/ --num_workers 20 --batch_size 16 --train_steps 1500 --seed 1677330429 --dataset_dir YOUR_DATA_DIR --detection_dir DETECTION_DIR

python -m evaluation.evaluate --model_save output/models/HS-Pose/model_149 --resume 1 --resume_model ./output/models/HS-Pose/model_149.pth --eval_seed 1677483078 --dataset_dir YOUR_DATA_DIR --detection_dir DETECTION_DIR

| Metrics | IoU25 | IoU50 | IoU75 | 5d2cm | 5d5cm | 10d2cm | 10d5cm | 10d10cm | 5d | 2cm |
|---|---|---|---|---|---|---|---|---|---|---|
| Scores | 84.3 | 82.8 | 75.3 | 46.2 | 56.1 | 68.9 | 84.1 | 85.2 | 59.1 | 77.8 |
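Column names such as 5d2cm are conventionally read as "rotation error below 5 degrees and translation error below 2 cm". The sketch below illustrates that test for a single prediction. It is not the repository's evaluation code: the function names are illustrative, translations are assumed to be in metres, the comparison is assumed to be strict, and the special handling of symmetric categories is ignored.

```python
import math


def rotation_error_deg(R_pred, R_gt):
    """Geodesic angle in degrees between two 3x3 rotation matrices (nested lists)."""
    # trace(R_pred @ R_gt^T) equals the sum of element-wise products.
    trace = sum(R_pred[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp against round-off
    return math.degrees(math.acos(cos_theta))


def translation_error_cm(t_pred, t_gt):
    """Euclidean distance in centimetres, assuming inputs are in metres."""
    return 100.0 * math.dist(t_pred, t_gt)


def within(R_pred, t_pred, R_gt, t_gt, max_deg, max_cm):
    """True if the prediction satisfies both thresholds, e.g. max_deg=5, max_cm=2."""
    return (rotation_error_deg(R_pred, R_gt) < max_deg
            and translation_error_cm(t_pred, t_gt) < max_cm)
```

The scores in the table are aggregated over the whole test set, so a per-prediction check like this only shows what each threshold pair means.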

Please cite us if you find this work useful.

@InProceedings{Zheng_2023_CVPR,

author = {Zheng, Linfang and Wang, Chen and Sun, Yinghan and Dasgupta, Esha and Chen, Hua and Leonardis, Ale\v{s} and Zhang, Wei and Chang, Hyung Jin},

title = {HS-Pose: Hybrid Scope Feature Extraction for Category-Level Object Pose Estimation},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2023},

pages = {17163-17173}

}

Our implementation leverages the code from 3dgcn, FS-Net, DualPoseNet, SPD, GPV-Pose.