3D UX-Net: A Large Kernel Volumetric ConvNet Modernizing Hierarchical Transformer for Medical Image Segmentation

Official PyTorch implementation of 3D UX-Net, from the following paper:

3D UX-Net: A Large Kernel Volumetric ConvNet Modernizing Hierarchical Transformer for Medical Image Segmentation. ICLR 2023 (Accepted, Poster)

Ho Hin Lee, Shunxing Bao, Yuankai Huo, Bennett A. Landman

Vanderbilt University

[arXiv]

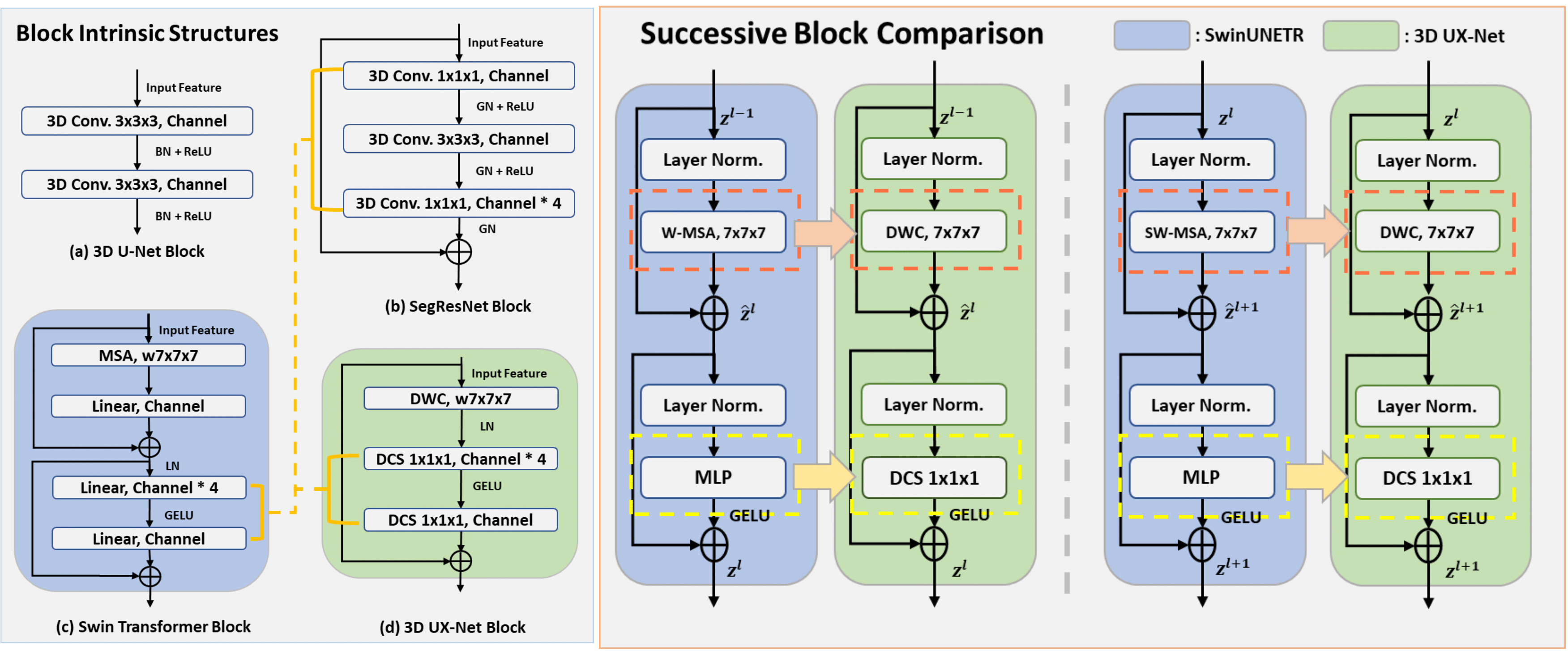

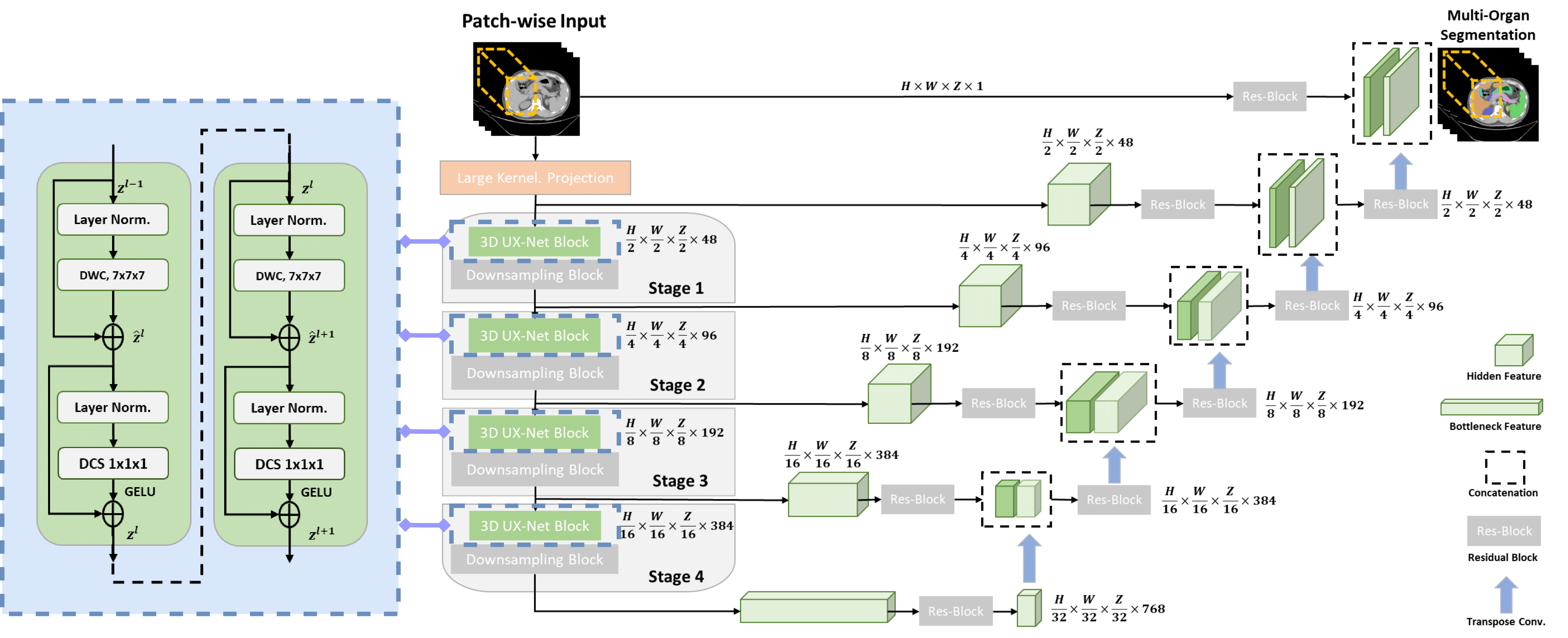

We propose 3D UX-Net, a pure volumetric convolutional network that adapts the behaviour of hierarchical transformers (e.g., Swin Transformer) for medical image segmentation while using fewer model parameters.
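Much of the parameter saving in large-kernel ConvNet designs comes from depthwise convolution, where each channel gets its own k×k×k filter instead of one filter per input/output channel pair. The arithmetic below is a sketch under stated assumptions: it assumes the large-kernel layers are depthwise with a bias per output channel and uses k = 7 (the "kernel=7" note in the AMOS table). The channel widths and helper names are hypothetical and are not taken from this repository's model code.

```python
def conv3d_params(in_ch: int, out_ch: int, k: int, groups: int = 1, bias: bool = True) -> int:
    """Parameter count of a 3D convolution with a cubic kernel of side k.

    Follows the weight shape used by torch.nn.Conv3d,
    (out_ch, in_ch // groups, k, k, k), plus one bias value per output channel.
    """
    if in_ch % groups or out_ch % groups:
        raise ValueError("in_ch and out_ch must be divisible by groups")
    weights = out_ch * (in_ch // groups) * k ** 3
    return weights + (out_ch if bias else 0)


def compare(channels: int, k: int = 7) -> dict:
    """Compare a dense k^3 conv with a depthwise one (groups == channels)."""
    dense = conv3d_params(channels, channels, k)
    depthwise = conv3d_params(channels, channels, k, groups=channels)
    return {"dense": dense, "depthwise": depthwise, "ratio": dense / depthwise}


if __name__ == "__main__":
    # Hypothetical stage widths, chosen only to illustrate the scaling.
    for c in (48, 96, 192):
        r = compare(c)
        print(f"C={c}: dense={r['dense']:,} depthwise={r['depthwise']:,} "
              f"ratio={r['ratio']:.1f}x")
```

The ratio grows roughly linearly with the channel count, which is why a large kernel can stay affordable when it is applied per channel.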

Please see INSTALL.md for instructions on creating the conda environment and installing packages.

- FeTA 2021 and FLARE 2021 training code: TRAINING.md

- AMOS 2022 fine-tuning code: TRAINING.md

(Feel free to open an issue recommending recently proposed transformer networks for comparison. The network folder currently holds the state-of-the-art transformer networks used for comparison, and recommended networks can be added there for training.)

| Methods | Resolution | #Params | FLOPs | Mean Dice (FeTA 2021) | Model |
|---|---|---|---|---|---|
| TransBTS | 96x96x96 | 31.6M | 110.4G | 0.868 | |
| UNETR | 96x96x96 | 92.8M | 82.6G | 0.860 | |
| nnFormer | 96x96x96 | 149.3M | 240.2G | 0.863 | |
| SwinUNETR | 96x96x96 | 62.2M | 328.4G | 0.867 | |
| 3D UX-Net | 96x96x96 | 53.0M | 639.4G | 0.874 | Weights |

| Methods | Resolution | #Params | FLOPs | Mean Dice (FLARE 2021) | Model |
|---|---|---|---|---|---|
| TransBTS | 96x96x96 | 31.6M | 110.4G | 0.902 | |
| UNETR | 96x96x96 | 92.8M | 82.6G | 0.886 | |
| nnFormer | 96x96x96 | 149.3M | 240.2G | 0.906 | |
| SwinUNETR | 96x96x96 | 62.2M | 328.4G | 0.929 | |
| 3D UX-Net | 96x96x96 | 53.0M | 639.4G | 0.936 (latest) | Weights |

| Methods | Resolution | #Params | FLOPs | Mean Dice (AMOS 2022) | Model |
|---|---|---|---|---|---|
| TransBTS | 96x96x96 | 31.6M | 110.4G | 0.792 | |
| UNETR | 96x96x96 | 92.8M | 82.6G | 0.762 | |
| nnFormer | 96x96x96 | 149.3M | 240.2G | 0.790 | |
| SwinUNETR | 96x96x96 | 62.2M | 328.4G | 0.880 | |
| 3D UX-Net | 96x96x96 | 53.0M | 639.4G | 0.900 (kernel=7) | Weights |
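The Mean Dice columns above are, presumably, per-class Dice scores averaged over the labelled structures. The sketch below illustrates that formula on flattened label maps; the evaluation protocol actually used for these numbers (background handling, per-case versus pooled averaging, empty-label convention) is set by the repository's evaluation code, so the choices here, including the function names, are assumptions for illustration only.

```python
from typing import Iterable, List, Sequence


def dice_per_class(pred: Sequence[int], target: Sequence[int],
                   labels: Iterable[int]) -> List[float]:
    """Dice = 2|P & T| / (|P| + |T|) for each label on flattened label maps."""
    if len(pred) != len(target):
        raise ValueError("pred and target must contain the same number of voxels")
    scores = []
    for lab in labels:
        p = [v == lab for v in pred]
        t = [v == lab for v in target]
        inter = sum(a and b for a, b in zip(p, t))
        denom = sum(p) + sum(t)
        # Assumed convention: a label absent from both maps counts as a perfect match.
        scores.append(1.0 if denom == 0 else 2.0 * inter / denom)
    return scores


def mean_dice(pred: Sequence[int], target: Sequence[int], labels: Iterable[int]) -> float:
    """Unweighted average of the per-class Dice scores."""
    scores = dice_per_class(pred, target, labels)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy 6-voxel example; label 0 is treated as background and excluded (an assumption).
    pred = [0, 1, 1, 2, 2, 0]
    target = [0, 1, 2, 2, 2, 0]
    print(dice_per_class(pred, target, [1, 2]), mean_dice(pred, target, [1, 2]))
```

In the toy example, label 1 scores 2/3 and label 2 scores 0.8, so their mean is about 0.733.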

Training and fine-tuning instructions are in TRAINING.md. Pretrained model weights will be made publicly available later.

Efficient evaluation on the three public datasets above can be performed as follows:

    python test_seg.py --root path_to_image_folder --output path_to_output \
      --dataset flare --network 3DUXNET --trained_weights path_to_trained_weights \
      --mode test --sw_batch_size 4 --overlap 0.7 --gpu 0 --cache_rate 0.2
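The `--sw_batch_size` and `--overlap` flags suggest sliding-window inference over patches matching the 96x96x96 training resolution. The sketch below shows how overlap controls the number of windows and how `sw_batch_size` groups them into forward passes. It assumes a MONAI-style tiling rule (step = int(roi * (1 - overlap)), with the last window shifted back to end at the border); the function names are hypothetical and the rule is not read from `test_seg.py`.

```python
import math
from typing import List, Sequence, Tuple


def window_starts(size: int, roi: int, overlap: float) -> List[int]:
    """Start offsets of sliding windows along one axis (assumed tiling rule)."""
    if size <= roi:
        return [0]  # one (possibly padded) window covers the whole axis
    step = max(int(roi * (1 - overlap)), 1)
    n = math.ceil((size - roi) / step) + 1
    # Clamp the final window so it ends exactly at the volume border.
    return [min(i * step, size - roi) for i in range(n)]


def count_windows(shape: Sequence[int], roi: Sequence[int] = (96, 96, 96),
                  overlap: float = 0.7, sw_batch_size: int = 4) -> Tuple[int, int]:
    """Return (total windows, batched forward passes) for a 3D volume."""
    total = 1
    for s, r in zip(shape, roi):
        total *= len(window_starts(s, r, overlap))
    return total, math.ceil(total / sw_batch_size)


if __name__ == "__main__":
    print(window_starts(160, 96, 0.7))  # higher overlap -> smaller step
    print(window_starts(160, 96, 0.5))
    print(count_windows((160, 160, 64)))
```

Raising the overlap from 0.5 to 0.7 shrinks the step from 48 to 28 voxels, so more windows are evaluated per axis; a larger `sw_batch_size` processes more of them per forward pass at the cost of GPU memory.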

This repository is built using the timm library.

This project is released under the MIT license. Please see the LICENSE file for more information.

If you find this repository helpful, please consider citing:

    @article{lee20223d,
      title={3D UX-Net: A Large Kernel Volumetric ConvNet Modernizing Hierarchical Transformer for Medical Image Segmentation},
      author={Lee, Ho Hin and Bao, Shunxing and Huo, Yuankai and Landman, Bennett A},
      journal={arXiv preprint arXiv:2209.15076},
      year={2022}
    }