This is a fork of the Coach framework by Intel, used to control a simulated magneto-optical trap (MOT) through reinforcement learning. The original README.md can be found here.

Note: Some parts of the original installation that are not needed for MOT control (e.g. PyGame and Gym) have been excluded here.

The corresponding docker images are based on Ubuntu 22.04 with Python 3.7.14.

We highly recommend starting with the docker image.

Instructions for the installation of the Docker Engine can be found here for Ubuntu and here for Windows.

Instructions for building a Docker container can be found here.

Alternatively, Coach can be installed step by step.

A few prerequisites are required. The following commands set up all the basics needed to get going with Coach:

```bash
# General
sudo apt-get update
sudo apt-get install python3-pip cmake zlib1g-dev python3-tk -y

# Boost libraries
sudo apt-get install libboost-all-dev -y

# SciPy requirements
sudo apt-get install libblas-dev liblapack-dev libatlas-base-dev gfortran -y

# Other
sudo apt-get install dpkg-dev build-essential libjpeg-dev libtiff-dev libnotify-dev -y
sudo apt-get install ffmpeg swig curl software-properties-common build-essential nasm tar libbz2-dev libgtk2.0-dev git unzip wget -y

# Python 3.7.14
sudo add-apt-repository -y ppa:deadsnakes/ppa
sudo apt-get update
sudo apt-get install -y python3.7 python3.7-dev python3.7-venv

# patchelf
sudo curl -o /usr/local/bin/patchelf https://s3-us-west-2.amazonaws.com/openai-sci-artifacts/manual-builds/patchelf_0.9_amd64.elf
sudo chmod +x /usr/local/bin/patchelf
```

We recommend installing Coach in a virtual environment:

```bash
python3.7 -m venv --copies venv
. venv/bin/activate
```

Clone the repository:

```bash
git clone https://github.com/MPI-IS/RL-coach-for-MOT.git
```

Install from the cloned repository:

```bash
cd RL-coach-for-MOT
pip install .
```
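To sanity-check the installation, the following sketch verifies that the interpreter is Python 3.7 (matching the `python3.7 -m venv` step above) and that the `coach` command is on the `PATH`. It is a hypothetical helper, not part of the repository; the name `setup_problems` is illustrative only. Run it with the virtual environment activated.

```python
import shutil
import sys
from typing import List, Optional, Sequence

# Assumption: the venv was created with python3.7, as in the steps above.
EXPECTED_PYTHON = (3, 7)


def setup_problems(version: Sequence[int], coach_path: Optional[str]) -> List[str]:
    """Return a list of setup problems; an empty list means none were found."""
    problems = []
    major, minor = version[0], version[1]
    if (major, minor) != EXPECTED_PYTHON:
        problems.append(
            f"expected Python {EXPECTED_PYTHON[0]}.{EXPECTED_PYTHON[1]}, "
            f"found {major}.{minor}"
        )
    if coach_path is None:
        problems.append("`coach` not found on PATH; is the venv activated?")
    return problems


if __name__ == "__main__":
    # shutil.which returns the full path of the executable, or None.
    issues = setup_problems(sys.version_info, shutil.which("coach"))
    for issue in issues:
        print("problem:", issue)
    if not issues:
        print("setup looks OK")
```

The check function takes its inputs as arguments rather than reading `sys` directly, so it can be exercised without a real installation.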

To allow results to be reproduced in Coach, we use a mechanism called presets.

Several presets are defined in the `presets` directory.

To list all the available presets, use the `-l` flag (`coach -l`).

To run a preset, use:

```bash
coach -p <preset_name>
```

For example:

- MOT simulation with continuous control parameters using the deep deterministic policy gradient (DDPG) algorithm:

  ```bash
  coach -p ContMOT_DDPG
  ```

Several options are recommended:

- The `-e` flag allows you to specify the name of the experiment and the folder where the results, logs, and copies of the preset and environment files will be written. When using the Docker container, use the `/checkpoint/<experiment name>` folder to make the results available outside of the container (mounted to `/tmp/checkpoint`).
- The `-dg` flag enables the output of npz files containing the output of evaluation episodes to the `npz` folder inside the experiment folder.
- The `-s` flag specifies the interval, in seconds, at which checkpoints are saved.

For example:

```bash
coach -p ContMOT_DDPG -dg -e /checkpoint/Test -s 1800
```

New presets can be created for different sets of parameters or environments by following the same pattern as in `ContMOT_DDPG`.
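With `-dg`, evaluation episodes are written as npz files to the `npz` folder of the experiment. A minimal sketch for loading them afterwards with NumPy, assuming they are standard archives readable by `numpy.load`. Which arrays each file contains is not documented here, so the sketch collects every array under its stored name; the demo file name `episode_0.npz` and the arrays `rewards` and `actions` are made up.

```python
import tempfile
from pathlib import Path
from typing import Dict

import numpy as np


def load_npz_folder(npz_dir: Path) -> Dict[str, Dict[str, np.ndarray]]:
    """Load every .npz file in npz_dir into {file name: {array name: array}}."""
    episodes = {}
    for path in sorted(npz_dir.glob("*.npz")):
        # np.load returns a lazy NpzFile for .npz archives; indexing it reads
        # each array into memory, so the arrays stay valid after the file closes.
        with np.load(path) as data:
            episodes[path.name] = {key: data[key] for key in data.files}
    return episodes


if __name__ == "__main__":
    # Demo with a made-up file; real file and array names may differ.
    with tempfile.TemporaryDirectory() as tmp:
        npz_dir = Path(tmp)
        np.savez(npz_dir / "episode_0.npz", rewards=np.zeros(5), actions=np.ones((5, 2)))
        for name, arrays in load_npz_folder(npz_dir).items():
            for key, value in arrays.items():
                print(name, key, value.shape)
```

Point `load_npz_folder` at the `npz` folder inside the experiment folder, for example a run folder under `/checkpoint/Test` when using the Docker container.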

Another possibility for changing the value of certain parameters is the custom parameter flag `-cp`.
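The value passed to `-cp` is a Python-style assignment to an attribute of the preset's parameter objects, such as `agent_params.exploration.sigma = 0.2`. To illustrate what such an override does, here is a self-contained sketch that applies `dotted.path = literal` assignments to plain Python objects. It is not Coach's implementation: the `apply_overrides` helper and the `SimpleNamespace` stand-ins for the preset objects are hypothetical, several assignments are assumed to be separated by `;`, and only Python literals are accepted as values.

```python
import ast
from types import SimpleNamespace
from typing import Any, Dict


def apply_overrides(roots: Dict[str, Any], overrides: str) -> None:
    """Apply 'name.attr.sub = <Python literal>' assignments, separated by ';'.

    `roots` maps top-level names such as 'agent_params' to parameter objects.
    Only existing attributes may be overridden, so typos fail loudly.
    """
    for assignment in overrides.split(";"):
        if not assignment.strip():
            continue  # tolerate a trailing ';'
        target, sep, value_src = assignment.partition("=")
        names = target.strip().split(".")
        if not sep or len(names) < 2:
            raise ValueError(f"expected 'object.attribute = value', got {assignment!r}")
        obj = roots[names[0]]
        for name in names[1:-1]:
            obj = getattr(obj, name)  # walk down to the owner of the attribute
        if not hasattr(obj, names[-1]):
            raise AttributeError(f"unknown parameter {target.strip()!r}")
        # literal_eval accepts Python literals such as numbers, strings, lists, booleans.
        setattr(obj, names[-1], ast.literal_eval(value_src.strip()))


if __name__ == "__main__":
    # Hypothetical stand-in for the objects a preset such as ContMOT_DDPG defines.
    agent_params = SimpleNamespace(exploration=SimpleNamespace(sigma=0.1))
    apply_overrides({"agent_params": agent_params}, "agent_params.exploration.sigma = 0.2")
    print(agent_params.exploration.sigma)  # 0.2
```

Rejecting unknown attribute names is a deliberate choice in this sketch: a misspelled parameter then raises an error instead of silently creating a new, unused attribute.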

For example:

```bash
coach -p ContMOT_DDPG -dg -e /checkpoint/Test -s 1800 -cp "agent_params.exploration.sigma = 0.2"
```

Training can be started from an existing checkpoint by specifying its location with the `-crd` flag; this loads the last checkpoint in the folder.
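If an experiment name has been used for several runs, the experiment folder holds one time-stamped folder per run, such as `18_04_2023-15_20` in the example below. The sketch picks the newest run and returns its `checkpoint` subfolder for use with `-crd`. The `%d_%m_%Y-%H_%M` pattern is an assumption inferred from that example path; folders that do not match it are ignored, and `latest_checkpoint_dir` is a hypothetical helper.

```python
import tempfile
from datetime import datetime
from pathlib import Path
from typing import Optional

# Assumed naming of run folders, inferred from the example 18_04_2023-15_20
# (day_month_year-hour_minute).
RUN_FOLDER_FORMAT = "%d_%m_%Y-%H_%M"


def latest_checkpoint_dir(experiment_dir: Path) -> Optional[Path]:
    """Return <newest run folder>/checkpoint, or None if no run folder is found."""
    runs = []
    for child in experiment_dir.iterdir():
        if not child.is_dir():
            continue
        try:
            started = datetime.strptime(child.name, RUN_FOLDER_FORMAT)
        except ValueError:
            continue  # not a time-stamped run folder
        runs.append((started, child))
    if not runs:
        return None
    # Compare parsed times: day-first names do not sort chronologically as strings.
    _, newest = max(runs, key=lambda run: run[0])
    return newest / "checkpoint"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        experiment = Path(tmp)
        for name in ("18_04_2023-15_20", "02_05_2023-09_00"):
            (experiment / name).mkdir()
        # Picks 02_05_2023-09_00 (May) even though "18_..." sorts later as a string.
        print(latest_checkpoint_dir(experiment).relative_to(experiment))
```

Parsing the folder names, rather than sorting them as strings, is what makes the day-first format safe to compare.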

For example:

coach -p ContMOT_DDPG -dg -e /checkpoint/Test -s 1800 -crd /checkpoint/Test/18_04_2023-15_20/checkpointFinally, in order to evaluate the performance of a trained agent without further training use the --evaluate flag followed by the number of evaluation steps/episodes?

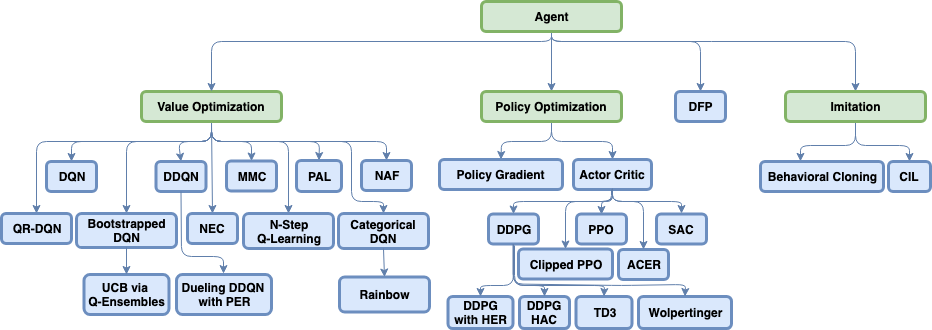

coach -p ContMOT_DDPG -dg -e /checkpoint/Test -s 1800 -crd /checkpoint/Test/18_04_2023-15_20/checkpoint --evaluate 10000- Deep Q Network (DQN) (code)
- Double Deep Q Network (DDQN)
- Dueling Q Network
- Mixed Monte Carlo (MMC)
- Persistent Advantage Learning (PAL)
- Categorical Deep Q Network (C51)
- Quantile Regression Deep Q Network (QR-DQN)
- N-Step Q Learning | Multi Worker Single Node
- Neural Episodic Control (NEC)
- Normalized Advantage Functions (NAF) | Multi Worker Single Node
- Rainbow
- Policy Gradients (PG) | Multi Worker Single Node
- Asynchronous Advantage Actor-Critic (A3C) | Multi Worker Single Node
- Deep Deterministic Policy Gradients (DDPG) | Multi Worker Single Node
- Proximal Policy Optimization (PPO)
- Clipped Proximal Policy Optimization (CPPO) | Multi Worker Single Node
- Generalized Advantage Estimation (GAE)
- Sample Efficient Actor-Critic with Experience Replay (ACER) | Multi Worker Single Node
- Soft Actor-Critic (SAC)
- Twin Delayed Deep Deterministic Policy Gradient (TD3)
- Direct Future Prediction (DFP) | Multi Worker Single Node
- Behavioral Cloning (BC)
- Conditional Imitation Learning
- E-Greedy
- Boltzmann
- Ornstein–Uhlenbeck process
- Normal Noise
- Truncated Normal Noise
- Bootstrapped Deep Q Network
- UCB Exploration via Q-Ensembles (UCB)
- Noisy Networks for Exploration

If you used Coach for your work, please use the following citation:

```bibtex
@misc{caspi_itai_2017_1134899,
  author = {Caspi, Itai and
            Leibovich, Gal and
            Novik, Gal and
            Endrawis, Shadi},
  title  = {Reinforcement Learning Coach},
  month  = dec,
  year   = 2017,
  doi    = {10.5281/zenodo.1134899},
  url    = {https://doi.org/10.5281/zenodo.1134899}
}
```

We'd be happy to receive any questions or suggestions; we can be contacted by email.

RL-coach-for-MOT is released as reference code for research purposes.