Model-free and Bayesian Ensembling Model-based Deep Reinforcement Learning for Particle Accelerator Control Demonstrated on the FERMI FEL

Contact: simon.hirlaender(at)sbg.ac.at

Preprint: https://arxiv.org/abs/2012.09737

Please cite code as:

- To run NAF2 as used in the paper on the pendulum, run `run_naf2.py`.

- To run AE-DYNA as used in the paper on the pendulum, run `AEDYNA.py`.

- To run AE-DYNA with TensorFlow 2 on the pendulum, run `AE_Dyna_Tensorflow_2.py`.

The rest should be straightforward; otherwise, contact us.

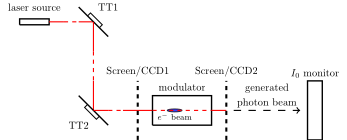

The problem has four degrees of freedom in state and action space. A schematic overview:

| Algorithm | Type | Representational power | Noise resistant | Sample efficiency |
|---|---|---|---|---|
| NAF | Model-free | Low | No | High |
| NAF2 | Model-free | Low | Yes | High |
| ME-TRPO | Model-based | High | No | High |
| AE-DYNA | Model-based | High | Yes | High |

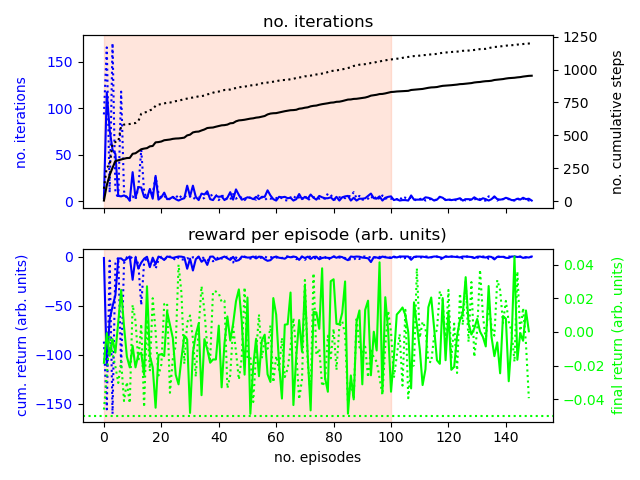

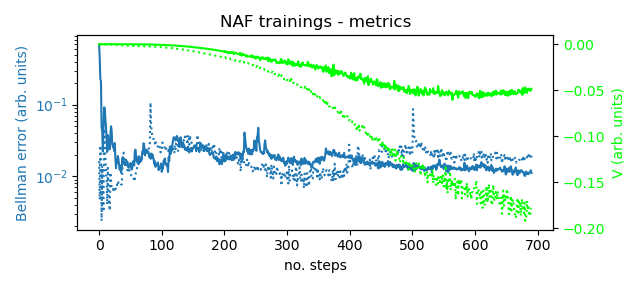

A new implementation of the NAF with double Q-learning (single network dashed, double network solid):

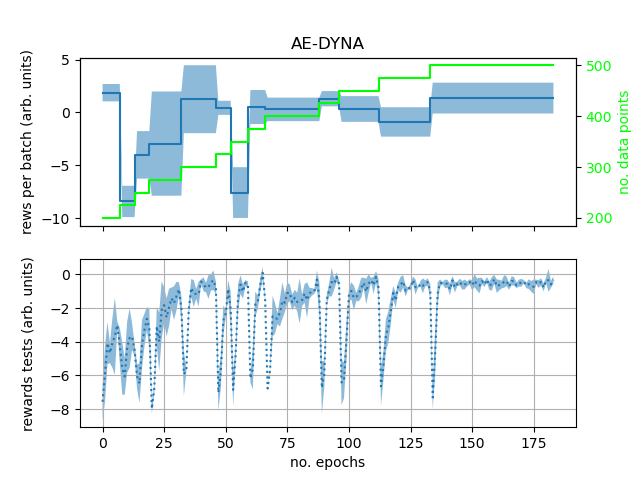

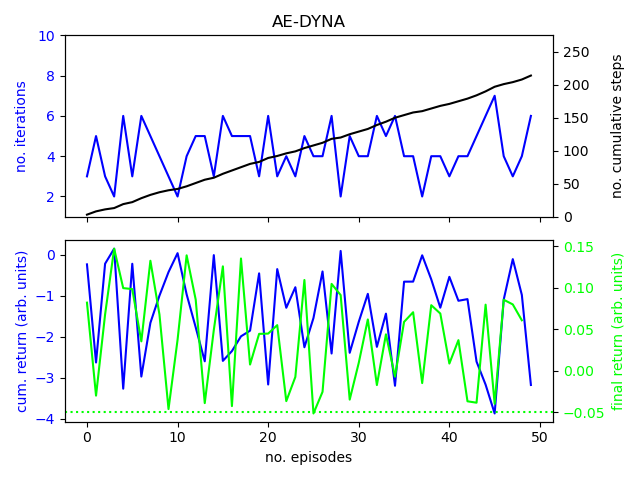
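NAF represents Q(s, a) as a state value V(s) plus a quadratic advantage, so the greedy action μ(s) is available in closed form and max_a Q(s', a) = V(s'). The minimal NumPy sketch below shows that decomposition and, as an assumption, a clipped double-Q target (the minimum over two networks' value estimates, as popularized by TD3). The function names are hypothetical, and the NAF2 in `run_naf2.py` may compute its targets differently.

```python
import numpy as np


def naf_q_value(v, mu, l_entries, action):
    """Q(s, a) = V(s) - 0.5 * (a - mu)^T P (a - mu) with P = L L^T.

    v:         scalar state value V(s)
    mu:        greedy action mu(s), shape (n,)
    l_entries: entries of the lower-triangular L(s), shape (n*(n+1)//2,);
               the diagonal is exponentiated so that P is positive definite
    action:    action a to evaluate, shape (n,)
    """
    n = mu.shape[0]
    L = np.zeros((n, n))
    L[np.tril_indices(n)] = l_entries
    L[np.diag_indices(n)] = np.exp(np.diag(L))  # strictly positive diagonal
    P = L @ L.T
    d = action - mu
    return float(v - 0.5 * d @ P @ d)


def clipped_double_q_target(reward, next_v1, next_v2, done, gamma=0.99):
    """TD target using the smaller of two target-network value estimates.

    Assumed variant: for NAF the bootstrap value is V(s') of each network,
    and taking the minimum counters the overestimation of a single network.
    """
    return reward + gamma * (1.0 - done) * np.minimum(next_v1, next_v2)
```

For example, `naf_q_value(1.0, np.zeros(2), np.zeros(3), np.zeros(2))` returns `1.0`: at a = μ(s) the advantage term vanishes and Q equals V.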

A new implementation of an AE-DYNA:

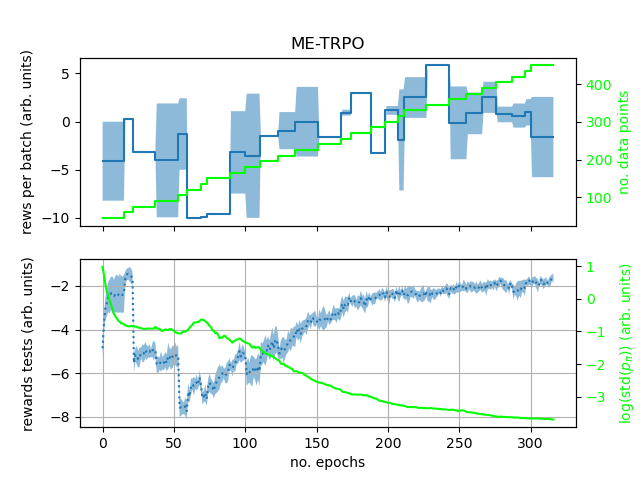

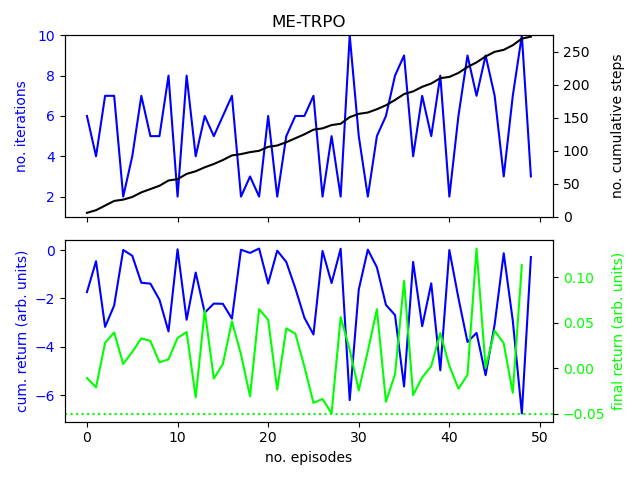
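The "Bayesian ensembling" in AE-DYNA refers to an ensemble of dynamics models. Assuming the anchored-ensembling scheme, each member is regularized towards its own anchor drawn once from the prior, so members agree where data exist and spread apart elsewhere. To stay self-contained, the sketch below swaps the neural networks for linear models, where every anchored member has a closed-form solution. The names and hyperparameters are illustrative assumptions, not the repository's code.

```python
import numpy as np


def fit_anchored_ensemble(X, y, n_members=5, prior_std=1.0, noise_std=0.1, seed=0):
    """Fit an anchored ensemble of linear models (hypothetical stand-in for NNs).

    Member j minimises
        ||X w - y||^2 / noise_std^2 + ||w - w0_j||^2 / prior_std^2,
    where the anchor w0_j ~ N(0, prior_std^2 I) is drawn once per member.
    """
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # The normal-equation matrix is shared; only the anchor term differs.
    A = X.T @ X / noise_std**2 + np.eye(d) / prior_std**2
    members = []
    for _ in range(n_members):
        w0 = rng.normal(0.0, prior_std, size=d)
        b = X.T @ y / noise_std**2 + w0 / prior_std**2
        members.append(np.linalg.solve(A, b))
    return np.stack(members)  # shape (n_members, d)


def predict(members, X):
    """Ensemble mean and spread; the spread serves as the epistemic uncertainty."""
    preds = X @ members.T  # shape (n_samples, n_members)
    return preds.mean(axis=1), preds.std(axis=1)
```

Fitted on inputs in [-1, 1], the spread of `predict` is small inside that range and grows for inputs far outside it, which is the signal a model-based agent can use to distrust its model.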

A variant of the ME-TRPO:

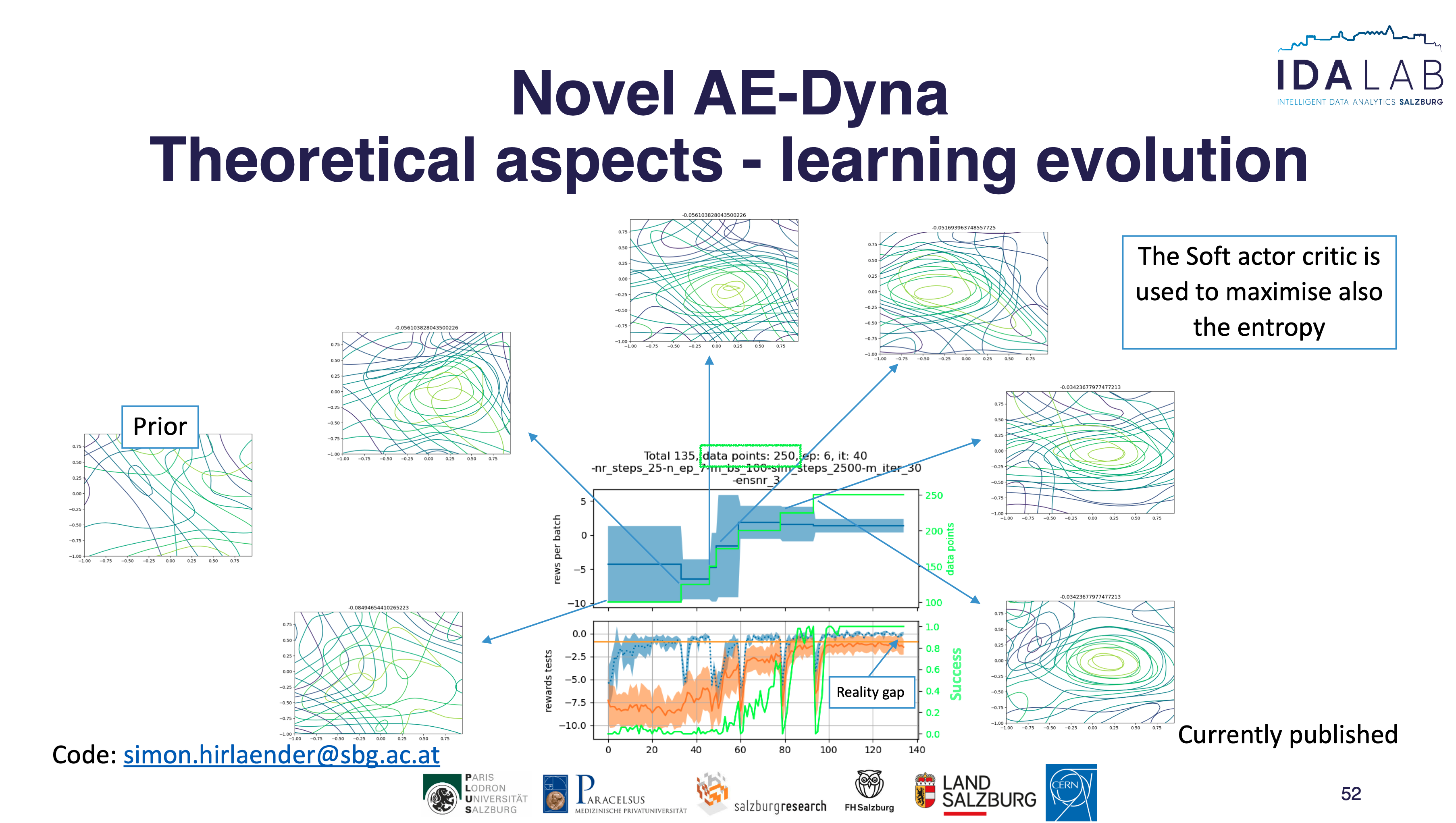
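In ME-TRPO, the policy is trained on imagined trajectories generated by a learned model ensemble, with one member picked at random at every step so that the policy cannot exploit the errors of any single model. The pure-Python sketch below shows only that rollout step; the TRPO policy update is omitted, all names are hypothetical, and the variant in this repository may generate its data differently.

```python
import random


def imagined_rollout(models, policy, reward_fn, s0, horizon, rng):
    """Roll out `policy` through a learned model ensemble.

    models:    list of callables model(s, a) -> predicted next state
    policy:    callable policy(s) -> action
    reward_fn: callable reward_fn(s, a, s_next) -> float (assumed known)
    rng:       random.Random used to pick one ensemble member per step
    """
    s = s0
    trajectory = []
    for _ in range(horizon):
        a = policy(s)
        model = rng.choice(models)  # a fresh random member at every step
        s_next = model(s, a)
        trajectory.append((s, a, reward_fn(s, a, s_next), s_next))
        s = s_next
    return trajectory
```

Usage: with two toy models `lambda s, a: s + a` and `lambda s, a: s + 2.0 * a`, every imagined step advances the state by 1 or 2 depending on which member was drawn.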

The evolution, as presented at GSI (*Towards Artificial Intelligence in Accelerator Operation*):

Experiments on the inverted pendulum OpenAI Gym environment:

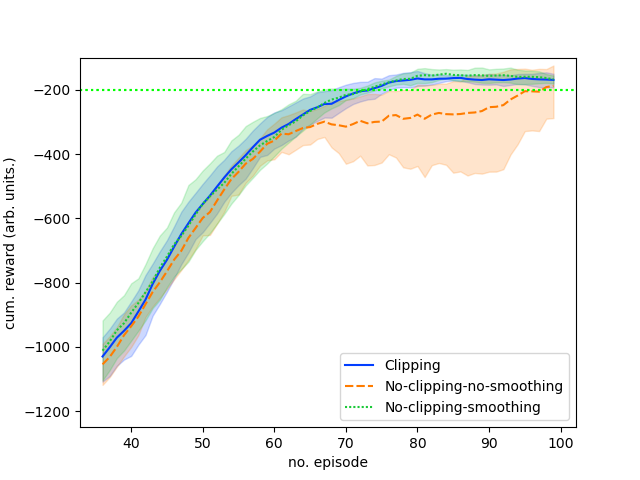

Cumulative reward of different NAF implementations on the inverted pendulum with artificial noise.

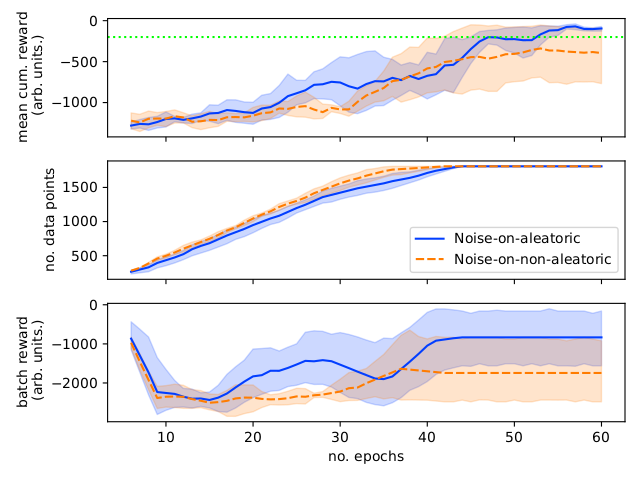

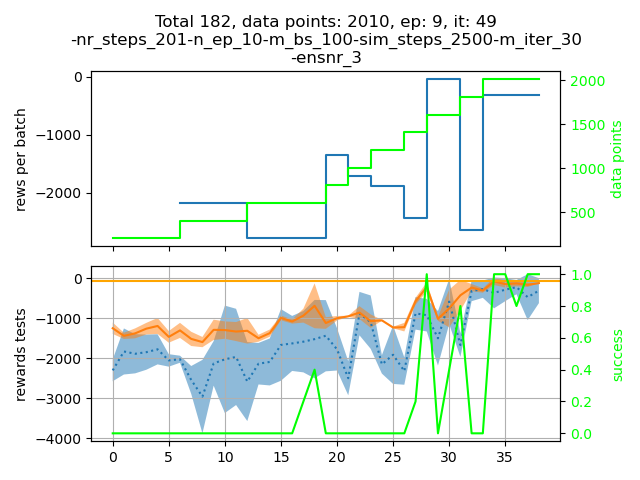

Effect of including aleatoric noise in the AE-DYNA on the noisy inverted pendulum:

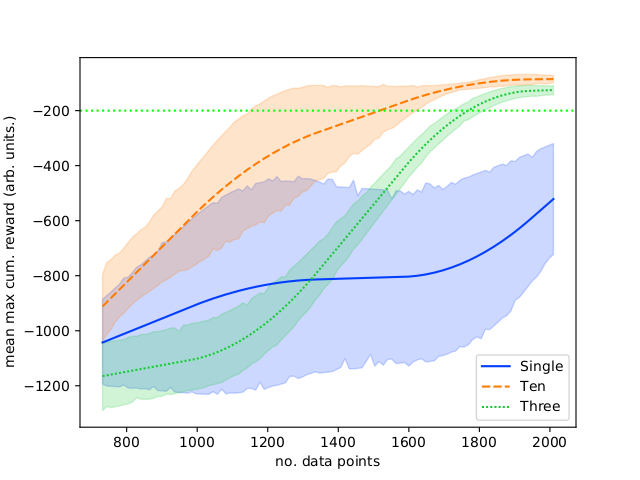
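Modelling aleatoric noise usually means that each dynamics model predicts a mean and a (log-)variance and is trained with a Gaussian negative log-likelihood instead of a squared error; imagined transitions can then be sampled from the predicted distribution. The NumPy sketch below assumes this common heteroscedastic formulation; the function names are hypothetical and the AE-DYNA code may differ.

```python
import numpy as np


def gaussian_nll(y, mean, log_var):
    """Mean negative log-likelihood of targets y under N(mean, exp(log_var)).

    Predicting the log-variance keeps the variance positive without
    constraints; the log_var term penalizes overly wide predictions, while
    the variance-scaled squared error penalizes overconfident ones.
    """
    y, mean, log_var = (np.asarray(x, dtype=float) for x in (y, mean, log_var))
    per_sample = 0.5 * (np.log(2.0 * np.pi) + log_var + (y - mean) ** 2 / np.exp(log_var))
    return float(np.mean(per_sample))


def sample_next_state(mean, log_var, rng):
    """Draw a next state from the predicted Gaussian instead of returning the mean."""
    mean = np.asarray(mean, dtype=float)
    std = np.exp(0.5 * np.asarray(log_var, dtype=float))
    return mean + std * rng.standard_normal(mean.shape)
```

For a fixed residual r, the loss is minimized at log_var = log(r²), so the model learns to report the noise level it actually observes.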

Sample efficiency of NAF and AE-DYNA:

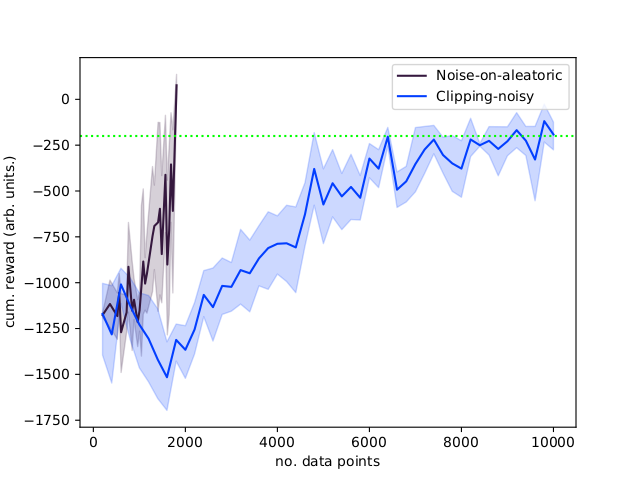

Free run on the inverted pendulum:

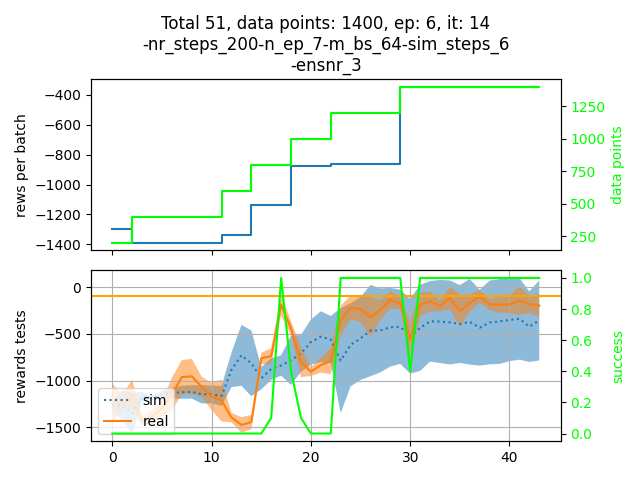

Finally, the AE-DYNA has been updated to TensorFlow 2: run the script `AE_Dyna_Tensorflow_2.py`. It is based on TensorLayer (`tensorlayer`), which has to be installed. The script runs on the inverted pendulum and produces results like those shown in the figure below.