Training convolutional neural network models requires a substantial amount of labeled training data to achieve good performance. However, the collection and annotation of training data is a costly, time-consuming, and error-prone process. One promising approach to overcome this limitation is to use Computer Generated Imagery (CGI) technology to create a virtual environment for generating synthetic data and conducting automatic labeling.

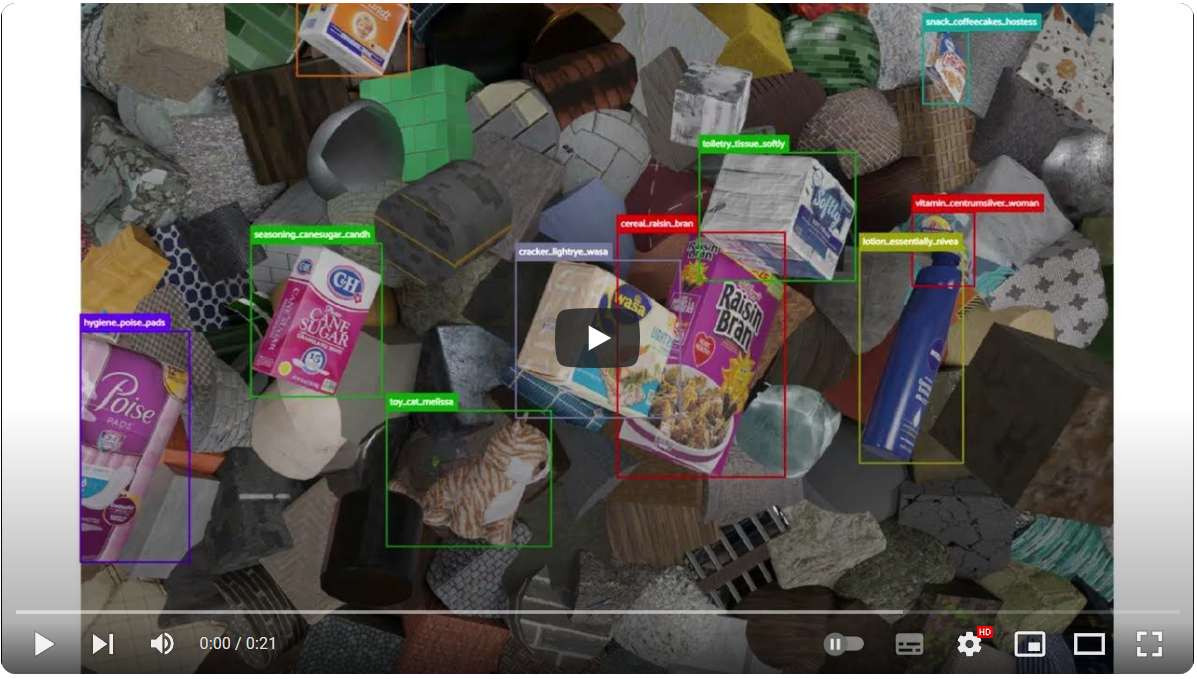

"Synthetic Data Generator for Retail Products Detection" is an open-source project that builds a synthetic image data generation pipeline using Blender and Python. The generated synthetic data is used to train YOLOv5 models for recognizing retail products. The project uses Blender to produce randomized synthetic images containing 63 types of retail products (e.g., cereal boxes and soda cans), and exports the corresponding labels and annotations (2D detection boxes in YOLO format).

Visit the Blender 3.3 LTS web page and click the Windows – Installer link to start the download. Once the download finishes, install Blender on your PC.

Clone this repository with git:

git clone https://github.com/MichaelLiLee/Synthetic-Data-Generator-for-Retail-Products-Detection.git

Alternatively, download it as a ZIP file.

Before using the synthetic data generator, you need to prepare the digital assets used to build the virtual scenes. These assets include retail product models, background and occluder 3D models, PBR materials, and lighting.

In this project, the required digital assets to be prepared are as follows:

- 3D models of 63 retail products (.blend files). You can download them from this Google Drive link.

- Untextured 3D models that serve as background and occluder objects (.blend files). You can download them from this Google Drive link.

- 10 PBR materials from ambientCG. You can download them from this Google Drive link. You can also download more materials manually from the ambientCG website, or use this Python script from BlenderProc to download them.

- 10 HDRIs from Poly Haven. You can download them from this Google Drive link. You can also download more HDRIs manually from the Poly Haven website, or use this Python script from BlenderProc to download them.

Once you have downloaded these digital assets, place them in the corresponding folders:

- Retail products (.blend) >> Assets/foreground_object

- Background and occluder objects (.blend) >> Assets/background_occluder_object

- PBR textures (folders containing a series of .jpg images) >> Assets/pbr_texture

- HDRIs (.exr) >> Assets/hdri_lighting
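Before moving on, it can help to confirm that each folder actually contains assets. The following is a minimal sketch, not part of the project: the function name `check_assets` is hypothetical, and it assumes the folder layout listed above, with one sub-folder per PBR material.

```python
from pathlib import Path

# Folder names come from the placement list above.
# None means "count sub-folders" (assumed layout: one folder per PBR material).
ASSET_FOLDERS = {
    "Assets/foreground_object": {".blend"},
    "Assets/background_occluder_object": {".blend"},
    "Assets/pbr_texture": None,
    "Assets/hdri_lighting": {".exr"},
}


def check_assets(repo_root):
    """Return a dict mapping each asset folder to the number of assets found."""
    root = Path(repo_root)
    counts = {}
    for rel_path, suffixes in ASSET_FOLDERS.items():
        folder = root / rel_path
        if not folder.is_dir():
            counts[rel_path] = 0  # folder is missing entirely
        elif suffixes is None:
            counts[rel_path] = sum(1 for p in folder.iterdir() if p.is_dir())
        else:
            counts[rel_path] = sum(
                1 for p in folder.iterdir() if p.suffix.lower() in suffixes
            )
    return counts
```

Calling `check_assets(".")` from the repository root returns a count per folder; a count of 0 points to a folder that still needs assets.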

After completing the steps above, set the path-related parameters in the `SDG_200_SDGParameter.py` file:

- `blender_exe_path`: The path to the Blender executable (default: C:/program Files/Blender Foundation/Blender 3.3/blender).

- `asset_background_object_folder_path`: The path to the background object assets (default: Assets/background_occluder_object).

- `asset_foreground_object_folder_path`: The path to the foreground object assets (default: Assets/foreground_object).

- `asset_ambientCGMaterial_folder_path`: The path to the downloaded ambientCG PBR materials (default: Assets/pbr_texture).

- `asset_hdri_lighting_folder_path`: The path to the downloaded Poly Haven HDRIs (default: Assets/hdri_lighting).

- `asset_occluder_folder_path`: The path to the occluder object assets (default: Assets/background_occluder_object).

- `output_img_path`: The path where rendered images will be saved (default: gen_data/images).

- `output_label_path`: The path where YOLO format bounding box annotations will be saved (default: gen_data/labels).
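As an illustration of how these settings fit together, here is a rough sketch that groups them in a dataclass. The class name `PathSettings` and the method `missing_asset_folders` are hypothetical and do not come from `SDG_200_SDGParameter.py`. The field names follow the parameters above, and the HDRI default points at Assets/hdri_lighting, the folder the HDRIs were placed in.

```python
from dataclasses import dataclass, fields
from pathlib import Path


@dataclass
class PathSettings:
    """Hypothetical container for the path parameters (illustration only)."""

    blender_exe_path: str = "C:/program Files/Blender Foundation/Blender 3.3/blender"
    asset_background_object_folder_path: str = "Assets/background_occluder_object"
    asset_foreground_object_folder_path: str = "Assets/foreground_object"
    asset_ambientCGMaterial_folder_path: str = "Assets/pbr_texture"
    # Assumption: HDRIs live in the folder they were placed in earlier.
    asset_hdri_lighting_folder_path: str = "Assets/hdri_lighting"
    asset_occluder_folder_path: str = "Assets/background_occluder_object"
    output_img_path: str = "gen_data/images"
    output_label_path: str = "gen_data/labels"

    def missing_asset_folders(self, repo_root="."):
        """Return the names of asset_* settings whose folder does not exist."""
        root = Path(repo_root)
        missing = []
        for field in fields(self):
            if not field.name.startswith("asset_"):
                continue  # executable and output paths are not checked here
            # An absolute setting overrides repo_root when joined with "/".
            if not (root / getattr(self, field.name)).is_dir():
                missing.append(field.name)
        return missing
```

`PathSettings().missing_asset_folders(".")` returns an empty list once every asset folder is in place.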

After setting the paths, run the SDG_400_Looper.py file in VS Code.

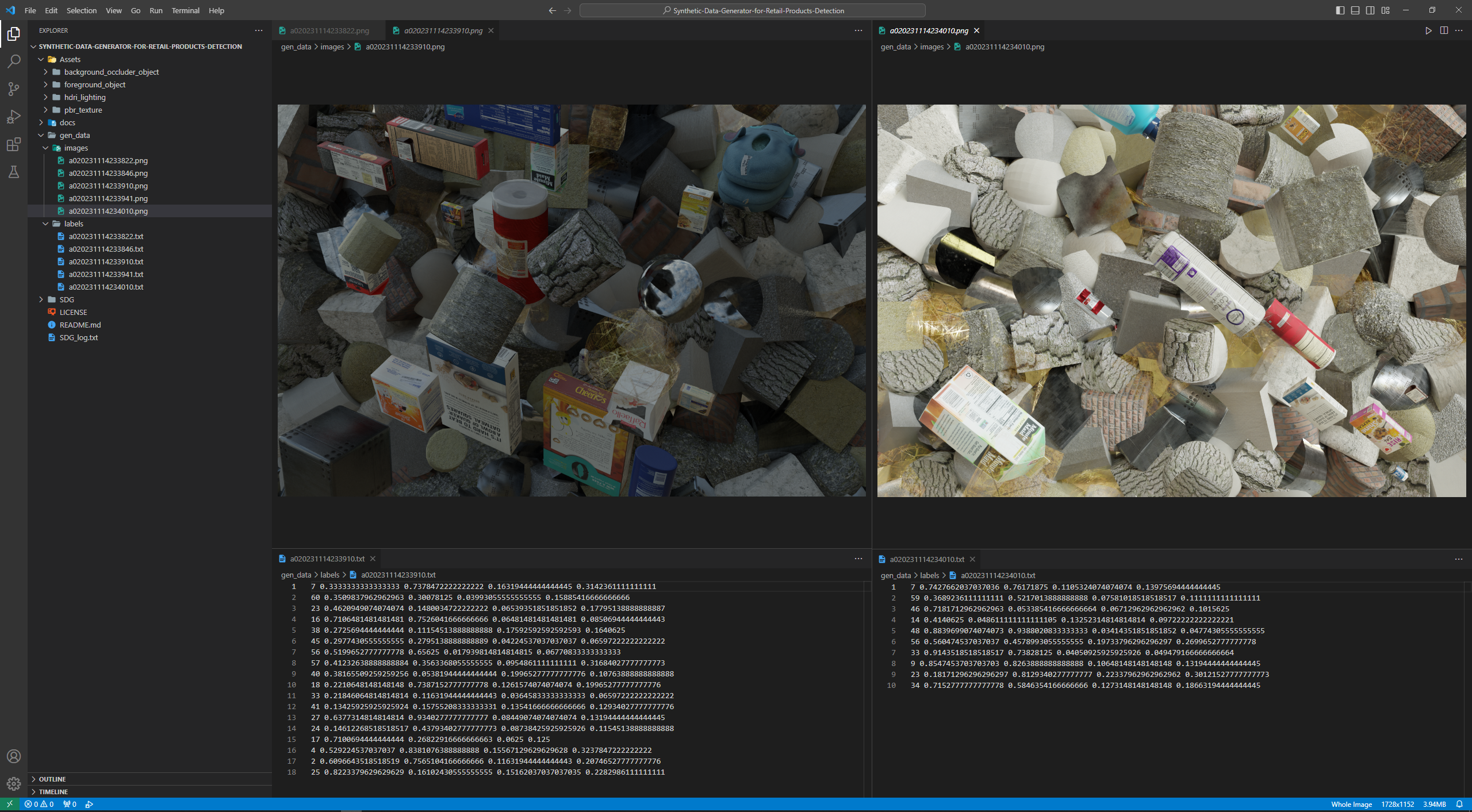

While images are rendered and labels are generated, the image and label files are written to the `gen_data` folder.
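For readers unfamiliar with the YOLO label format: each line of a label file describes one box as `class x_center y_center width height`, with the four coordinates normalized by the image size. The sketch below converts a pixel-space box to such a line. The function name `to_yolo_line` is hypothetical, and this is not the project's own export code.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box to one YOLO label line (normalized to 0..1)."""
    # Clamp the box to the image so partly visible objects stay in range.
    x_min, x_max = max(0, x_min), min(img_w, x_max)
    y_min, y_max = max(0, y_min), min(img_h, y_max)

    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A box covering the central half of a 1728 x 1152 image:
print(to_yolo_line(7, 432, 288, 1296, 864, 1728, 1152))
# prints "7 0.500000 0.500000 0.500000 0.500000"
```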

The SDG_200_SDGParameter.py file contains a configuration class for this Blender-based synthetic data generation pipeline. The following parameters can be adapted to your specific application.

| Category | Parameter | Description | Distribution |
|---|---|---|---|
| Dataset size | gen_num | The number of synthetic images to generate. | Constant(10) |
| 3D Object | asset_background_object_folder_path | A set of 3D models containing various simple geometric shapes such as cubes and cylinders. | A set of 3D model assets(10) |
| | background_poisson_disk_sampling_radius | Background objects separation distance. | Constant(0.2) |
| | bg_obj_scale_ratio_range | The distribution of the scale ratio of background objects within the Blender scene. | Constant(2.5) |
| | asset_occluder_folder_path | A set of 3D models containing various simple geometric shapes such as cubes and cylinders. | A set of 3D model assets(10) |
| | occluder_area | Spatial distribution area of occlusion objects. | Cartesian[Uniform(-0.6, 0.6), Uniform(-0.4, 0.4), Uniform(1.5, 1.9)] |
| | occluder_poisson_disk_sampling_radius | Occlusion objects separation distance. | Constant(0.25) |
| | num_occluder_in_scene_range | The distribution of the number of occlusion objects within the Blender scene. | Uniform(5, 10) |
| | occluder_scale_ratio_range | The distribution of the scale ratio of occluder objects within the Blender scene. | Uniform(0.5, 2) |
| | asset_foreground_object_folder_path | A set of 3D assets for the 63 retail items. | A set of 3D model assets(63) |
| | foreground_area | Spatial distribution area of foreground objects. | Cartesian[Uniform(-1.25, 1.25), Uniform(-0.75, 0.75), Uniform(0.5, 1)] |
| | foreground_poisson_disk_sampling_radius | Foreground objects separation distance. | Constant(0.3) |
| | num_foreground_object_in_scene_range | The distribution of the number of retail items within the Blender scene. | Uniform(8, 20) |
| | fg_obj_scale_ratio_range | The distribution of the scale ratio of foreground objects within the Blender scene. | Uniform(0.5, 2.2) |
| | - | Random rotation angle applied to background and occluder objects. | Euler[Uniform(0, 360), Uniform(0, 360), Uniform(0, 360)] |
| | - | Random unified rotation angle applied to all foreground (retail product) objects. | Euler[Uniform(0, 360), Uniform(0, 360), Uniform(0, 360)] |
| Texture | asset_ambientCGMaterial_folder_path | A set of PBR materials that are randomly applied to the surfaces of the background and occluder objects. | A set of PBR texture assets(10) |
| Environment Lighting | asset_hdri_lighting_folder_path | A set of high dynamic range images (HDRI) for scene lighting. | A set of HDRI assets(10) |
| | hdri_lighting_strength_range | The distribution of the strength factor for the intensity of the HDRI scene light. | Uniform(0.1, 2.2) |
| | - | Random rotation of the HDRI map. | Euler[Uniform(-30, 120), Uniform(-30, 30), Uniform(0, 360)] |
| Camera & Post-processing | - | Perspective camera focal length in millimeters. | Constant(35) |
| | img_resolution_x | Number of horizontal pixels in the rendered image. | Constant(1728) |
| | img_resolution_y | Number of vertical pixels in the rendered image. | Constant(1152) |
| | max_samples | Number of samples to render for each pixel. | Constant(128) |
| | chromatic_aberration_value_range | The distribution of the value of Lens Distortion nodes input-Dispersion, which simulates chromatic aberration. | Uniform(0.1, 1) |
| | blur_value_range | The distribution of the value of Blur nodes input-Size, which controls the blur radius values. | Uniform(2, 4) |
| | motion_blur_value_range | The distribution of the value of Vector Blur nodes input-Speed, which controls the direction of motion. | Uniform(2, 7) |
| | exposure_value_range | The distribution of the value of Exposure nodes input-Exposure, which controls the scalar factor to adjust the exposure. | Uniform(-0.5, 2) |
| | noise_value_range | The distribution of the brightness value of the noise texture. | Uniform(1.6, 1.8) |
| | white_balance_value_range | The distribution of the value of WhiteBalanceNode input-ColorTemperature, which adjusts the color temperature. | Uniform(3500, 9500) |
| | brightness_value_range | The distribution of the value of Bright/Contrast nodes input-Bright, which adjusts the brightness. | Uniform(-1, 1) |
| | contrast_value_range | The distribution of the value of Bright/Contrast nodes input-Contrast, which adjusts the contrast. | Uniform(-1, 5) |
| | hue_value_range | The distribution of the value of Hue Saturation Value nodes input-Hue, which adjusts the hue. | Uniform(0.45, 0.55) |
| | saturation_value_range | The distribution of the value of Hue Saturation Value nodes input-Saturation, which adjusts the saturation. | Uniform(0.75, 1.25) |
| | chromatic_aberration_probability | Probability of chromatic aberration effect being enabled. | P(enabled) = 0.1, P(disabled) = 0.9 |
| | blur_probability | Probability of blur effect being enabled. | P(enabled) = 0.1, P(disabled) = 0.9 |
| | motion_blur_probability | Probability of motion blur effect being enabled. | P(enabled) = 0.1, P(disabled) = 0.9 |
| | exposure_probability | Probability of exposure adjustment being enabled. | P(enabled) = 0.15, P(disabled) = 0.85 |
| | noise_probability | Probability of noise effect being enabled. | P(enabled) = 0.1, P(disabled) = 0.9 |
| | white_balance_probability | Probability of white balance adjustment being enabled. | P(enabled) = 0.15, P(disabled) = 0.85 |
| | brightness_probability | Probability of brightness adjustment being enabled. | P(enabled) = 0.15, P(disabled) = 0.85 |
| | contrast_probability | Probability of contrast adjustment being enabled. | P(enabled) = 0.15, P(disabled) = 0.85 |
| | hue_probability | Probability of hue adjustment being enabled. | P(enabled) = 0.15, P(disabled) = 0.85 |
| | saturation_probability | Probability of saturation adjustment being enabled. | P(enabled) = 0.15, P(disabled) = 0.85 |
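To make the Distribution column concrete, the sketch below draws one random scene configuration from some of the ranges in the table. It only illustrates the sampling idea and is not the project's implementation. The names `EFFECTS`, `sample_effects`, and `sample_scene` are hypothetical, and treating the object counts as integers with inclusive endpoints is an assumption.

```python
import random

# Ranges and probabilities copied from the parameter table.
FOREGROUND_AREA = [(-1.25, 1.25), (-0.75, 0.75), (0.5, 1.0)]
EFFECTS = {  # effect name: (probability of being enabled, (low, high))
    "chromatic_aberration": (0.10, (0.1, 1)),
    "blur": (0.10, (2, 4)),
    "motion_blur": (0.10, (2, 7)),
    "exposure": (0.15, (-0.5, 2)),
    "noise": (0.10, (1.6, 1.8)),
    "white_balance": (0.15, (3500, 9500)),
    "brightness": (0.15, (-1, 1)),
    "contrast": (0.15, (-1, 5)),
    "hue": (0.15, (0.45, 0.55)),
    "saturation": (0.15, (0.75, 1.25)),
}


def sample_effects(rng):
    """Enable each effect with its probability; draw its value uniformly."""
    enabled = {}
    for name, (probability, (low, high)) in EFFECTS.items():
        if rng.random() < probability:
            enabled[name] = rng.uniform(low, high)
    return enabled


def sample_scene(rng):
    """Draw one scene configuration from the tabulated distributions."""
    return {
        # Assumption: counts are integers and both endpoints are allowed.
        "num_foreground": rng.randint(8, 20),
        "num_occluder": rng.randint(5, 10),
        "fg_scale": rng.uniform(0.5, 2.2),
        "hdri_strength": rng.uniform(0.1, 2.2),
        "fg_position": tuple(rng.uniform(lo, hi) for lo, hi in FOREGROUND_AREA),
        "fg_rotation_deg": tuple(rng.uniform(0, 360) for _ in range(3)),
        "effects": sample_effects(rng),
    }


scene = sample_scene(random.Random(0))  # fixed seed for a reproducible draw
```

Because each post-processing effect is enabled independently with a probability of 0.1 or 0.15, most sampled scenes have only a few effects active, or none.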

Once the parameter settings are configured, execute the SDG_400_Looper.py file to initiate the synthetic data generation loop.
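How SDG_400_Looper.py is implemented is not described here. As a rough mental model only, a generation loop could start Blender in background mode once per image, using Blender's `--background` and `--python` command-line options. In this sketch the function names and the `script_path` argument are hypothetical.

```python
import subprocess


def build_blender_command(blender_exe_path, script_path):
    """Build a command that runs a Python script in Blender without the UI."""
    return [blender_exe_path, "--background", "--python", script_path]


def run_generation_loop(gen_num, blender_exe_path, script_path,
                        runner=subprocess.run):
    """Launch Blender gen_num times; return how many runs exited cleanly."""
    command = build_blender_command(blender_exe_path, script_path)
    completed = 0
    for index in range(gen_num):
        result = runner(command, check=False)
        if result.returncode != 0:
            # Keep going so one failed render does not stop the whole batch.
            print(f"run {index} failed with exit code {result.returncode}")
            continue
        completed += 1
    return completed
```

Starting a fresh Blender process per image costs some startup time, but each render then begins from a clean scene. This is one possible design, not necessarily the one the project uses.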

Real retail product image dataset for validation: this dataset consists of 1267 images of real retail products and originates from the UnityGroceries-Real Dataset. This project has corrected annotation errors and converted the labels into YOLO format. The dataset can be downloaded from this Google Drive link.
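After downloading or converting labels, a quick sanity check can catch malformed lines. The sketch below validates YOLO label text under two assumptions: class ids are zero-based, and they cover the same 63 product classes. The helper names `parse_yolo_line` and `find_label_errors` are hypothetical.

```python
NUM_CLASSES = 63  # assumption: ids 0..62, one per retail product


def parse_yolo_line(line, num_classes=NUM_CLASSES):
    """Parse 'class x_center y_center width height'; raise ValueError if invalid."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    class_id = int(parts[0])
    coords = tuple(float(p) for p in parts[1:])
    if not 0 <= class_id < num_classes:
        raise ValueError(f"class id {class_id} out of range")
    if any(not 0.0 <= c <= 1.0 for c in coords):
        raise ValueError("coordinates must be normalized to [0, 1]")
    return class_id, coords


def find_label_errors(text, num_classes=NUM_CLASSES):
    """Return (line_number, message) for every invalid non-empty line."""
    errors = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # blank lines are ignored
        try:
            parse_yolo_line(line, num_classes)
        except ValueError as exc:
            errors.append((number, str(exc)))
    return errors
```

Passing the contents of each `.txt` label file to `find_label_errors` should return an empty list for a clean dataset.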

This project is inspired by the Unity SynthDet project, with improvements in methodology (including the addition of PBR materials, HDRI lighting, and ray tracing rendering), and it has been recreated using Blender.

Borkman, Steve, et al. (2021). Unity perception: Generate synthetic data for computer vision.