This repository contains theory and code based on Udacity's Intro to Deep Learning with PyTorch course. It is intended as a starting point for any deep learning project. Topics such as neural networks, convolutional networks, recurrent networks, autoencoders, and GANs are explained and implemented in Python.

The following table of contents briefly describes the topics in each folder of this repository. Each folder contains a set of Python notebooks and the corresponding theory in TeX format.

The content of this repository is meant to be reviewed in the following order.

- Introduction to Neural Networks: Basic theory of neural networks, including the simple perceptron, backpropagation, error functions, regularization, and activation functions.

- Introduction to PyTorch: Implementations of a simple perceptron, multi-layer perceptrons, and classification examples in PyTorch. Most of the examples use the MNIST and Fashion-MNIST datasets. This folder also includes an example of transfer learning.

- Convolutional Neural Networks: Theory of convolutional neural networks, their operations, and their parameters. Classification examples are given for the MNIST and CIFAR-10 datasets.

- Autoencoders: This folder presents how autoencoders learn to reconstruct their input data and applies them to noise removal using both MLPs and convolutional neural networks. It also introduces upsampling and transposed convolution.

- Style Transfer: Shows how to extract style and content features from images using a pre-trained network. This is an implementation of style transfer following the paper "Image Style Transfer Using Convolutional Neural Networks" by Gatys et al., including the definition of appropriate losses for iteratively creating a target image. Both the theory and the implementation are given.

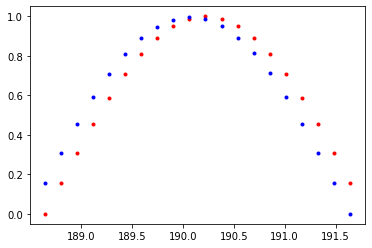

- Intro to Recurrent Networks (Time series & Character-level RNN): Introduces recurrent neural networks and how they use information about the order of a sequence of data, such as the sequence of characters in a text. The mini-projects include sentiment analysis, text generation, and time-series prediction.

- Generative Adversarial Network: Presents the theory behind GANs, how to train them, and their potential. The PyTorch implementations include a GAN trained on MNIST to generate handwritten digits and a deep convolutional GAN (DCGAN) that generates new images based on the Street View House Numbers (SVHN) dataset.

- Miscellaneous: Two notebooks, one on weight initialization and its effects during training, and one on batch normalization to improve training speed and stability.
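The CNN and autoencoder material both depend on how each layer changes the spatial size of a feature map. The snippet below is a minimal, standard-library sketch of those shape rules, assuming the output-size formulas documented for PyTorch's `nn.Conv2d`/`nn.MaxPool2d` and `nn.ConvTranspose2d` with dilation 1. The helper names and the example layer stack are hypothetical and are not taken from the notebooks.

```python
def conv2d_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output height/width of a 2D convolution or pooling layer (dilation 1, floor mode)."""
    # floor((size + 2*padding - kernel) / stride) + 1
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose2d_out(size: int, kernel: int, stride: int = 1,
                         padding: int = 0, output_padding: int = 0) -> int:
    """Output height/width of a 2D transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# Hypothetical encoder/decoder applied to a 28x28 MNIST image.
h = 28
h = conv2d_out(h, kernel=3, padding=1)           # 3x3 conv, padding 1 keeps 28
h = conv2d_out(h, kernel=2, stride=2)            # 2x2 max-pool, stride 2 halves to 14
encoded = h
h = conv_transpose2d_out(h, kernel=2, stride=2)  # 2x2 transposed conv, stride 2 doubles to 28
print(encoded, h)  # 14 28
```

For even input sizes, a 2x2 transposed convolution with stride 2 exactly undoes the halving of a 2x2, stride-2 pooling layer, which is why that pairing is a convenient choice for the decoder of a convolutional autoencoder.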

Note: All the code was taken from the Udacity MOOC mentioned above. Some of it was slightly modified, and the rest is the solution proposed by the mentor. The goal is to have a collection of code and theory ready to use in any AI-related project.