Additional models and pipelines for 🤗 Diffusers created by Lambda Labs

git clone https://github.com/LambdaLabsML/lambda-diffusers.git

cd lambda-diffusers

python -m venv .venv

source .venv/bin/activate

pip install -r requirements.txtA fine-tuned version of Stable Diffusion conditioned on CLIP image embeddings to enabel Image Variations.

from diffusers import StableDiffusionImageVariationPipeline

from PIL import Image

device = "cuda:0"

sd_pipe = StableDiffusionImageVariationPipeline.from_pretrained(

"lambdalabs/sd-image-variations-diffusers",

revision="v2.0",

)

sd_pipe = sd_pipe.to(device)

im = Image.open("path/to/image.jpg")

tform = transforms.Compose([

transforms.ToTensor(),

transforms.Resize(

(224, 224),

interpolation=transforms.InterpolationMode.BICUBIC,

antialias=False,

),

transforms.Normalize(

[0.48145466, 0.4578275, 0.40821073],

[0.26862954, 0.26130258, 0.27577711]),

])

inp = tform(im).to(device)

out = sd_pipe(inp, guidance_scale=3)

out["images"][0].save("result.jpg")Stable Diffusion fine tuned on Pokémon by Lambda Labs.

Put in a text prompt and generate your own Pokémon character, no "prompt engineering" required!

If you want to find out how to train your own Stable Diffusion variants, see this example from Lambda Labs.

Girl with a pearl earring, Cute Obama creature, Donald Trump, Boris Johnson, Totoro, Hello Kitty

Trained on BLIP captioned Pokémon images using 2xA6000 GPUs on Lambda GPU Cloud for around 15,000 step (about 6 hours, at a cost of about $10).

import torch

from diffusers import StableDiffusionPipeline

from torch import autocast

pipe = StableDiffusionPipeline.from_pretrained("lambdalabs/sd-pokemon-diffusers", torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "Yoda"

scale = 10

n_samples = 4

# Sometimes the nsfw checker is confused by the Pokémon images, you can disable

# it at your own risk here

disable_safety = False

if disable_safety:

def null_safety(images, **kwargs):

return images, False

pipe.safety_checker = null_safety

with autocast("cuda"):

images = pipe(n_samples*[prompt], guidance_scale=scale).images

for idx, im in enumerate(images):

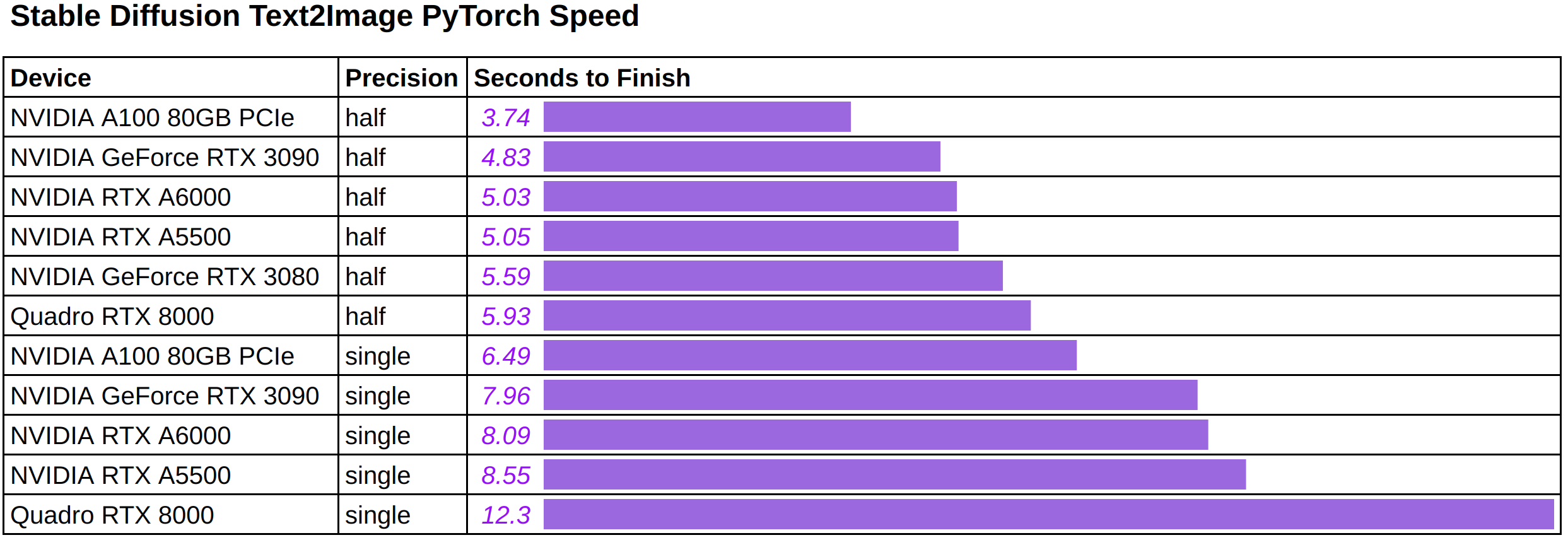

im.save(f"{idx:06}.png")Detailed benchmark documentation can be found here.

Before running the benchmark, make sure you have completed the repository installation steps.

You will then need to set the huggingface access token:

- Create a user account on HuggingFace and generate an access token.

- Set your huggingface access token as the

ACCESS_TOKENenvironment variable:

export ACCESS_TOKEN=<hf_...>

Launch the benchmark script to append benchmark results to the existing benchmark.csv results file:

python ./scripts/benchmark.py

Trained by Justin Pinkney (@Buntworthy) at Lambda Labs.