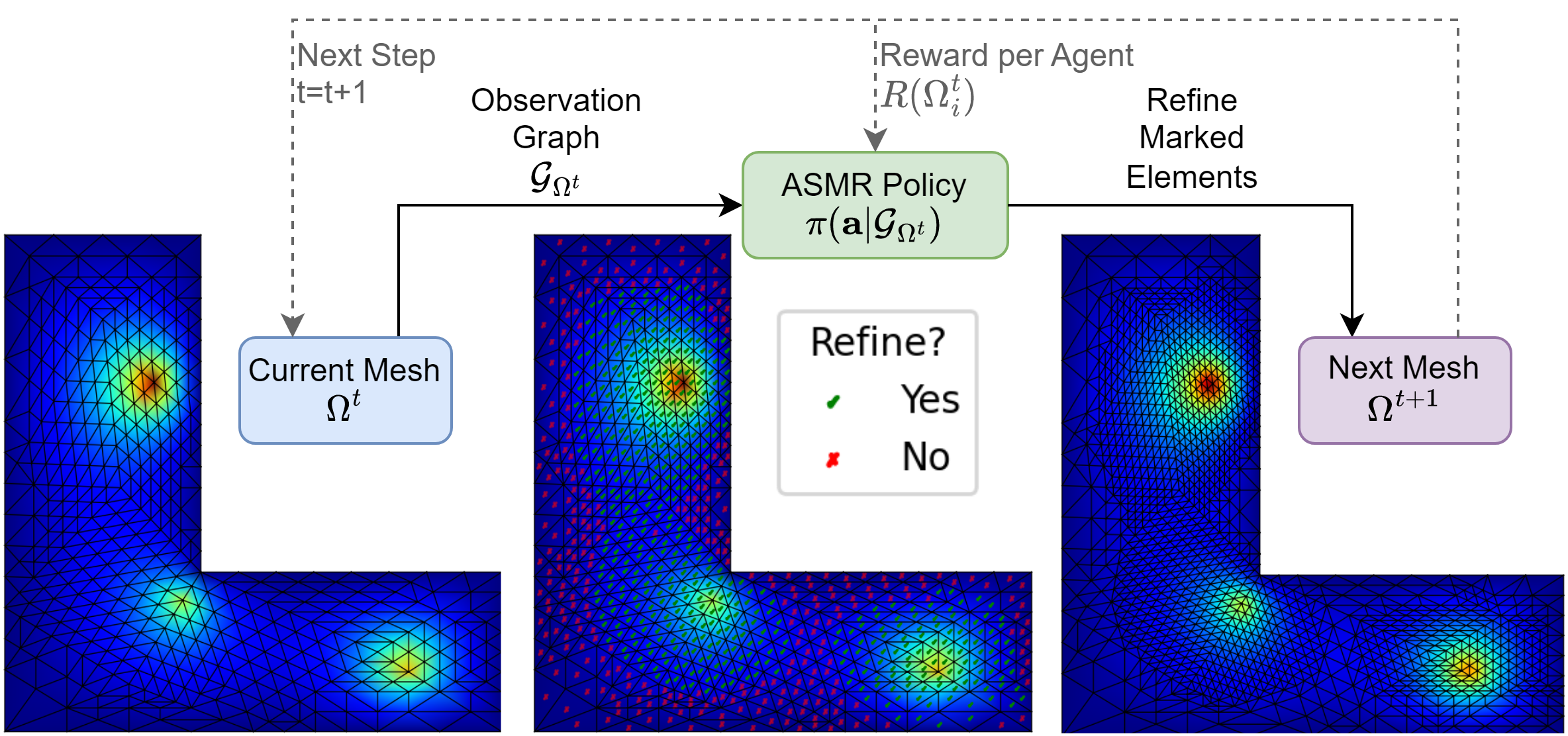

The Finite Element Method, an important technique in engineering, is aided by Adaptive Mesh Refinement (AMR), which dynamically refines mesh regions to allow for a favorable trade-off between computational speed and simulation accuracy. Classical methods for AMR depend on task-specific heuristics or expensive error estimators, hindering their use for complex simulations. Recent learned AMR methods tackle these problems, but so far scale only to simple toy examples. We formulate AMR as a novel Adaptive Swarm Markov Decision Process in which a mesh is modeled as a system of simple collaborating agents that may split into multiple new agents. This framework allows for a spatial reward formulation that simplifies the credit assignment problem, which we combine with Message Passing Networks to propagate information between neighboring mesh elements. We experimentally validate the effectiveness of our approach, Adaptive Swarm Mesh Refinement (ASMR), showing that it learns reliable, scalable, and efficient refinement strategies on a set of challenging problems. Our approach improves computation speed by more than tenfold compared to uniform refinements in complex simulations. Additionally, we outperform learned baselines and achieve a refinement quality that is on par with a traditional error-based AMR strategy without expensive oracle information about the error signal.

This project uses [conda](https://docs.conda.io/en/latest/) (or better yet, [mamba](https://github.com/conda-forge/miniforge#mambaforge)) and pip to manage packages and dependencies.

You should be able to install all requirements using the commands below:

    # for cpu use
    mamba env create -f ./env/environment-cpu.yaml
    mamba activate ASMR_cpu

    # for gpu use
    mamba env create -f ./env/environment-cuda.yaml
    mamba activate ASMR_cuda

    # don't forget to log in to wandb
    wandb login

Test if everything works by running the test experiments, which will test all methods and tasks for a single simplified training episode:

    python main.py configs/asmr/tests.yaml -o

We give examples for environment generation and the general training interface in `example.py`. Here, ASMR is trained on the Poisson equation using the PPO algorithm.

We provide all experiments used in the paper in the `configs/asmr` folder. The experiments are organized by task/PDE. For example, `configs/asmr/poisson.yaml` contains all Poisson experiments, and ASMR can be trained on this task via the provided `poisson_asmr` config by executing `python main.py configs/asmr/poisson.yaml -e poisson_asmr -o`.

Experiments are configured and distributed via [cw2](https://www.github.com/ALRhub/cw2.git). For this, the folder `configs` contains a number of `.yaml` files that describe the configuration of the task to run. The configs are composed of individual experiments, each of which is separated by three dashes and uniquely identified with an `$EXPERIMENT_NAME$`.
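As a rough illustration of this layout, the sketch below splits a multi-experiment config into its `---`-separated documents and lists the experiment names. The `name:` key, the second experiment name, and the example values are assumptions made for illustration. cw2 uses its own loader, and a real implementation would rely on a proper YAML parser rather than this line-based split.

```python
import re

# Hypothetical config text: two experiments separated by three dashes.
# The `name` key and all values below are assumptions for illustration.
EXAMPLE_CONFIG = """\
name: "poisson_asmr"
repetitions: 1
---
name: "poisson_baseline"
repetitions: 1
"""


def split_experiments(config_text: str) -> list[str]:
    """Split a config into documents at lines consisting only of '---'."""
    documents = re.split(r"^---[ \t]*$", config_text, flags=re.MULTILINE)
    return [doc.strip() for doc in documents if doc.strip()]


def experiment_names(config_text: str) -> list[str]:
    """Return the value of the top-level `name:` entry of each document."""
    names = []
    for document in split_experiments(config_text):
        match = re.search(r'^name:\s*"?([^"\n]+)"?\s*$', document, flags=re.MULTILINE)
        if match:
            names.append(match.group(1).strip())
    return names


if __name__ == "__main__":
    print(experiment_names(EXAMPLE_CONFIG))
```

Listing the names this way can help to check which value to pass after `-e` before launching a run.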

To run an experiment from any of these files on a local machine, type

    python main.py configs/$FILE_NAME$.yaml -e $EXPERIMENT_NAME$ -o

To start an experiment on a cluster that uses [Slurm](https://slurm.schedmd.com/documentation.html), run

    python main.py configs/$FILE_NAME$.yaml -e $EXPERIMENT_NAME$ -o -s --nocodecopy
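The local and Slurm invocations differ only in their trailing flags. A small helper can assemble either command, sketched here under the assumption that `main.py` sits in the current working directory. `build_command` is a hypothetical name and not part of the repository.

```python
import shlex


def build_command(file_name: str, experiment_name: str, slurm: bool = False) -> list[str]:
    """Assemble the argument list for a local or Slurm run.

    Only the flags quoted in this README are used: -e, -o, -s, --nocodecopy.
    """
    command = ["python", "main.py", f"configs/{file_name}.yaml", "-e", experiment_name, "-o"]
    if slurm:
        # Flags used for cluster submission, as given in the Slurm command above.
        command += ["-s", "--nocodecopy"]
    return command


if __name__ == "__main__":
    # Print both variants for the Poisson example as shell-ready strings.
    print(shlex.join(build_command("asmr/poisson", "poisson_asmr")))
    print(shlex.join(build_command("asmr/poisson", "poisson_asmr", slurm=True)))
```

The returned list can be passed directly to `subprocess.run`, which avoids shell quoting issues when experiment names contain unusual characters.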

Running an experiment provides a (potentially nested) config dictionary to `main.py`. For more information on how to use this, refer to the cw2 docs.
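Because the config dictionary may be nested, a dotted-key lookup can be convenient when reading individual settings. The sketch below is an illustration only: the keys in `EXAMPLE_PARAMS` are made up, and `get_nested` is a hypothetical helper that is not part of cw2 or this repository.

```python
from typing import Any

# Made-up nested config, standing in for whatever dictionary an experiment provides.
EXAMPLE_PARAMS = {
    "algorithm": {"name": "ppo", "ppo": {"clip_range": 0.2}},
    "task": {"pde": "poisson"},
}


def get_nested(config: dict, dotted_key: str, default: Any = None) -> Any:
    """Look up 'a.b.c' in a nested dictionary.

    Returns `default` if any level along the path is missing or is not a dictionary.
    """
    current: Any = config
    for key in dotted_key.split("."):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


if __name__ == "__main__":
    print(get_nested(EXAMPLE_PARAMS, "algorithm.ppo.clip_range"))
    print(get_nested(EXAMPLE_PARAMS, "algorithm.dqn.epsilon", default="not set"))
```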

After running the first experiment, a folder `reports` will be created. This folder contains everything that the loggers pick up, organized by the name of the experiment and the repetition.

The src folder contains the source code for the algorithms. It is organized into the following subfolders:

This folder includes all the iterative training algorithms that are used by cw2.

The rl directory implements common Reinforcement Learning algorithms, such as PPO and DQN.

We provide a logger for all console outputs, different scalar metrics, and task-dependent visualizations per iteration. The scalar metrics may optionally be logged to the wandb dashboard. The metrics and plots that are recorded depend on both the task and the algorithm being run. The loggers can (and should) be extended to suit your individual needs, e.g., by plotting additional metrics or animations whenever necessary.

All locally recorded entities are saved in the `reports` directory, where they are organized by their experiment and repetition.

Common utilities used by the entire source code can be found here. This includes additional torch code, common definitions and functions, and save and load functionality.

The folder `asmr_evaluations` contains the code for the evaluation of the ASMR algorithm and all baselines. It uses checkpoint files from the training of the algorithms and evaluates them on the separate evaluation PDE.

The environments include a Mesh Refinement and a Sweep Mesh Refinement environment. Both deal with geometric graphs of varying size that represent meshes over a fixed boundary and are used for the finite element method.
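To make the graph view of a mesh concrete, the sketch below builds an element-adjacency graph for a tiny triangular mesh: each element becomes a node, and two elements are connected if they share a mesh edge. This is a generic illustration in plain Python. The example mesh, the function name `element_adjacency`, and the choice of elements as graph nodes are assumptions and need not match the graphs that the environments actually construct.

```python
from collections import defaultdict
from itertools import combinations


def element_adjacency(triangles: list[tuple[int, int, int]]) -> set[tuple[int, int]]:
    """Return pairs (i, j), i < j, of triangles that share a mesh edge."""
    # Map each undirected mesh edge (sorted pair of vertex ids) to the triangles containing it.
    edge_to_elements = defaultdict(list)
    for element_id, vertices in enumerate(triangles):
        for a, b in combinations(sorted(vertices), 2):
            edge_to_elements[(a, b)].append(element_id)

    # Two elements are neighbors if some mesh edge belongs to both of them.
    neighbors = set()
    for elements in edge_to_elements.values():
        for i, j in combinations(elements, 2):
            neighbors.add((i, j))
    return neighbors


if __name__ == "__main__":
    # Unit square with corner vertices 0..3 and a center vertex 4, split into four triangles.
    triangles = [(0, 1, 4), (1, 2, 4), (2, 3, 4), (3, 0, 4)]
    print(sorted(element_adjacency(triangles)))
```

When an element is refined, the triangle list grows and the adjacency has to be rebuilt, which is why the resulting graphs vary in size.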

Building blocks for the Message Passing Network architecture.

Please cite this code and the corresponding paper as

    @article{freymuth2024swarm,
      title={Swarm reinforcement learning for adaptive mesh refinement},
      author={Freymuth, Niklas and Dahlinger, Philipp and W{\"u}rth, Tobias and Reisch, Simon and K{\"a}rger, Luise and Neumann, Gerhard},
      journal={Advances in Neural Information Processing Systems},
      volume={36},
      year={2024}
    }

Fixed an issue where edge features were not considered for the VDGN-GAT architecture.