FER2013 is a challenging dataset, with human-level accuracy of only 65 ± 5%. It is also imbalanced: it contains images of 7 facial expressions, distributed as Angry (4953), Disgust (547), Fear (5121), Happy (8989), Sad (6077), Surprise (4002), and Neutral (6198). In addition, the depicted faces vary significantly in terms of age, face pose, and other factors, reflecting realistic conditions.
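To make the imbalance concrete, the short pure-Python sketch below computes each class's share of the 35,887 images and, as one common way of handling imbalance, inverse-frequency class weights. These weights were not used in this work; the helper names are hypothetical. In PyTorch such weights could be passed to `nn.CrossEntropyLoss` through its `weight` argument.

```python
# Class counts for FER2013 as reported above.
CLASS_COUNTS = {
    "Angry": 4953,
    "Disgust": 547,
    "Fear": 5121,
    "Happy": 8989,
    "Sad": 6077,
    "Surprise": 4002,
    "Neutral": 6198,
}


def class_proportions(counts):
    """Return each class's share of the whole dataset."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def inverse_frequency_weights(counts):
    """Weight each class by total / (num_classes * count).

    A perfectly balanced dataset would give every class a weight of 1.0;
    rarer classes get weights above 1.
    """
    total = sum(counts.values())
    k = len(counts)
    return {name: total / (k * n) for name, n in counts.items()}


if __name__ == "__main__":
    print("total images:", sum(CLASS_COUNTS.values()))
    ratio = CLASS_COUNTS["Happy"] / CLASS_COUNTS["Disgust"]
    print(f"Happy/Disgust ratio: {ratio:.1f}")
    for name, w in inverse_frequency_weights(CLASS_COUNTS).items():
        print(f"{name:>8}: weight {w:.2f}")
```

The most frequent class (Happy) has roughly 16 times as many images as the rarest (Disgust), which is why Disgust receives by far the largest weight.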

The data consists of 48x48 pixel grayscale images of faces. The faces have been automatically registered so that each face is more or less centered and occupies about the same amount of space in each image. The task is to classify each face, based on the emotion shown in the facial expression, into one of seven categories (0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral).

The dataset contains three columns: 'emotion', 'pixels', and 'Usage'. The 'emotion' column contains a numeric code from 0 to 6, inclusive, for the emotion present in the image. The 'pixels' column contains a quoted string for each image; the string holds space-separated pixel values in row-major order. The 'Usage' column indicates the purpose of each row (e.g., training set or test set).
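The following is a minimal sketch, using only the standard `csv` module and NumPy, of how rows in this format can be turned into 48x48 arrays. It assumes a header row naming the three columns exactly as 'emotion', 'pixels' and 'Usage'; the function names are hypothetical, and the demo uses a synthetic one-row CSV rather than real data.

```python
import csv
import io

import numpy as np

IMAGE_SIZE = 48  # FER2013 images are 48x48 grayscale


def parse_pixels(pixel_string):
    """Convert a space-separated 'pixels' string into a 48x48 uint8 array.

    The values are in row-major order, which matches NumPy's default
    (C-order) reshape.
    """
    values = np.array([int(v) for v in pixel_string.split()], dtype=np.uint8)
    if values.size != IMAGE_SIZE * IMAGE_SIZE:
        raise ValueError(f"expected {IMAGE_SIZE * IMAGE_SIZE} values, got {values.size}")
    return values.reshape(IMAGE_SIZE, IMAGE_SIZE)


def read_fer2013(file_obj):
    """Yield (image, label, usage) triples from a FER2013-style CSV file object.

    Assumes a header row naming the columns 'emotion', 'pixels' and 'Usage'.
    """
    for row in csv.DictReader(file_obj):
        yield parse_pixels(row["pixels"]), int(row["emotion"]), row["Usage"]


if __name__ == "__main__":
    # A one-row synthetic CSV (not real data): pixel i has value i % 256.
    pixels = " ".join(str(i % 256) for i in range(IMAGE_SIZE * IMAGE_SIZE))
    sample = f'emotion,pixels,Usage\n3,"{pixels}",Training\n'
    for image, label, usage in read_fer2013(io.StringIO(sample)):
        # image[1, 0] is the 49th value in the string, i.e. the first pixel of row 2.
        print(image.shape, label, usage, image[1, 0])
```

With a real file, the same generator would be fed a handle opened with `open(path, newline="")`, where `path` is wherever the CSV is stored.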

The training set consists of 28,709 examples. The public test set used for the leaderboard consists of 3589 examples. The final test set consists of another 3589 examples. (Goodfellow et al. 2013)
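The three split sizes add up to 35,887, the same total as the per-class counts given at the start of this section. The sketch below groups samples by their 'Usage' value and checks the split sizes. The 'Usage' strings "Training", "PublicTest" and "PrivateTest" are assumed to be those of the publicly distributed CSV; the helper names are hypothetical.

```python
from collections import Counter

# Split sizes reported above; the 'Usage' strings are assumed, not taken from the text.
EXPECTED_SPLITS = {"Training": 28709, "PublicTest": 3589, "PrivateTest": 3589}


def split_by_usage(samples):
    """Group (image, label, usage) triples into {usage: [(image, label), ...]}."""
    splits = {}
    for image, label, usage in samples:
        splits.setdefault(usage, []).append((image, label))
    return splits


def split_sizes_match(splits, expected=EXPECTED_SPLITS):
    """True if every expected split is present with exactly the expected size."""
    # Counter returns 0 for a missing split instead of raising KeyError.
    sizes = Counter({usage: len(items) for usage, items in splits.items()})
    return all(sizes[name] == n for name, n in expected.items())


if __name__ == "__main__":
    print("total:", sum(EXPECTED_SPLITS.values()))
```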

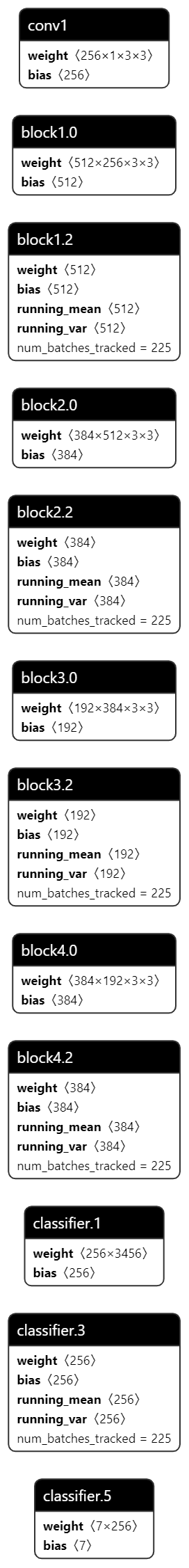

The model was inspired by the work of (Vulpe-Grigoraşi and Grigore 2021). In that paper, the authors used Random Search, an automated method that generates models with various architectures and hyperparameter configurations drawn from a discrete space of possible solutions to the problem at hand. They selected a single model out of 500, which reached an accuracy of 69.96% on the validation data after 750 epochs. Since we were unable to train for that long (because of both hardware and time constraints), the network was slightly modified. For example, the final Softmax layer was removed, because it is redundant with the loss function used (Stevens, Antiga, and Viehmann 2020) and because it degraded performance.
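PyTorch's `nn.CrossEntropyLoss` combines log-softmax and negative log-likelihood, so it expects raw logits. The sketch below, on random tensors rather than FER2013 data, checks that equivalence and shows what happens when an extra Softmax is placed in front of the loss: softmax is effectively applied twice, and with 7 classes the loss can never drop below ln(1 + 6/e) ≈ 1.17. This is one plausible explanation for the degraded performance, not something measured in this work.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

logits = torch.randn(4, 7) * 5         # raw scores: batch of 4, 7 classes (random, not FER2013)
targets = torch.tensor([0, 3, 6, 2])   # integer class labels

loss_fn = nn.CrossEntropyLoss()

# CrossEntropyLoss = log-softmax + negative log-likelihood, so it expects raw logits.
loss_on_logits = loss_fn(logits, targets)
manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)

# An explicit Softmax layer before the loss means softmax is applied twice.
loss_on_probs = loss_fn(F.softmax(logits, dim=1), targets)

# Probabilities lie in [0, 1], so with 7 classes the double-softmax loss
# is bounded below by ln(1 + 6/e), reached only for a one-hot prediction.
LOSS_FLOOR = math.log(1 + 6 / math.e)

print(f"{loss_on_logits.item():.4f} {manual.item():.4f} "
      f"{loss_on_probs.item():.4f} floor={LOSS_FLOOR:.4f}")
```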

Several transformations were tried (mirroring, shifting, cropping), partly to address the class imbalance in the data; in the end, however, the following were chosen:

RandomHorizontalFlip;

Normalize.

These transformations were applied to the training set. In addition, all the data was normalized, and since we used ToTensor on uint8 data, pixel values were automatically scaled from [0, 255] to [0, 1] (Stevens, Antiga, and Viehmann 2020).

CrossEntropyLoss was used, given that this is a classification problem. Because of how the loss is defined in PyTorch, there was no need to one-hot encode the labels: it accepts integer class indices directly (Stevens, Antiga, and Viehmann 2020).
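The sketch below, on two made-up logit rows, shows that `nn.CrossEntropyLoss` with integer labels gives the same value as computing the mean negative log-probability of the true class by hand, and as the equivalent one-hot formulation. The labels must be an integer (`torch.long`) tensor.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Two made-up rows of logits over the 7 emotion classes.
logits = torch.tensor([[2.0, 0.5, -1.0, 0.1, 0.0, 0.3, -0.2],
                       [0.0, 0.0, 0.0, 3.0, 0.0, 0.0, 0.0]])
labels = torch.tensor([0, 3])  # class indices (0=Angry, 3=Happy), not one-hot

loss = nn.CrossEntropyLoss()(logits, labels)

# Same value by hand: mean over the batch of -log softmax at the true class.
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(len(labels)), labels].mean()

# The equivalent one-hot computation, which the index form makes unnecessary.
one_hot = F.one_hot(labels, num_classes=7).float()
manual_one_hot = -(one_hot * log_probs).sum(dim=1).mean()

print(loss.item(), manual.item(), manual_one_hot.item())
```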

SGD with momentum and SGD with Nesterov momentum were the main optimizers tried, as suggested by (Khaireddin and Chen 2021), but experimentally none performed as well as Adam, which was the one used. The learning rate was 0.001, as almost all the papers consulted agreed on this value.
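The optimizers compared above can be built with `torch.optim` as in the sketch below. The helper `make_optimizer` and the tiny linear model are hypothetical stand-ins; `momentum=0.9` and reusing the 0.001 learning rate for the SGD variants are assumptions, since the text gives neither.

```python
import torch
import torch.nn as nn

LEARNING_RATE = 1e-3  # learning rate used in this work


def make_optimizer(name, params, lr=LEARNING_RATE):
    """Build one of the optimizers compared in the text (momentum value assumed)."""
    if name == "adam":
        return torch.optim.Adam(params, lr=lr)
    if name == "sgd_momentum":
        return torch.optim.SGD(params, lr=lr, momentum=0.9)
    if name == "sgd_nesterov":
        return torch.optim.SGD(params, lr=lr, momentum=0.9, nesterov=True)
    raise ValueError(f"unknown optimizer: {name}")


if __name__ == "__main__":
    # Hypothetical stand-in for the real network, just so the optimizer has parameters.
    model = nn.Sequential(nn.Flatten(), nn.Linear(48 * 48, 7))
    print(make_optimizer("adam", model.parameters()))
```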

The batch size was 128, following (Vulpe-Grigoraşi and Grigore 2021). The maximum number of epochs we were able to train for was 20.
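With 28,709 training images, a batch size of 128 gives ceil(28709 / 128) = 225 optimizer steps per epoch, so 20 epochs amount to 4,500 steps. The sketch below is a minimal training loop combining the settings described above (Adam, lr = 0.001, CrossEntropyLoss, batch size 128); it is an illustration, not the exact code used. The demo uses random stand-in data and a hypothetical tiny model.

```python
import math

import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

BATCH_SIZE = 128
EPOCHS = 20
STEPS_PER_EPOCH = math.ceil(28709 / BATCH_SIZE)  # 225 steps for the training set


def train(model, dataset, epochs=EPOCHS, batch_size=BATCH_SIZE, lr=1e-3):
    """Minimal loop with Adam + CrossEntropyLoss; returns the mean loss per epoch."""
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=True)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    history = []
    for _ in range(epochs):
        model.train()
        total, n = 0.0, 0
        for images, labels in loader:
            optimizer.zero_grad()
            loss = loss_fn(model(images), labels)
            loss.backward()
            optimizer.step()
            # Weight by batch size so the last, smaller batch counts correctly.
            total += loss.item() * labels.size(0)
            n += labels.size(0)
        history.append(total / n)
    return history


if __name__ == "__main__":
    # Random stand-in data (not FER2013) and a tiny model, only to exercise the loop.
    x = torch.randn(256, 1, 48, 48)
    y = torch.randint(0, 7, (256,))
    model = nn.Sequential(nn.Flatten(), nn.Linear(48 * 48, 7))
    print(train(model, TensorDataset(x, y), epochs=2))
```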

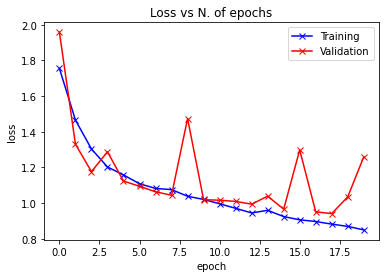

In the end, the following results were obtained:

A loss of 0.8817 on the training set.

A loss of 0.9406 on the validation set.

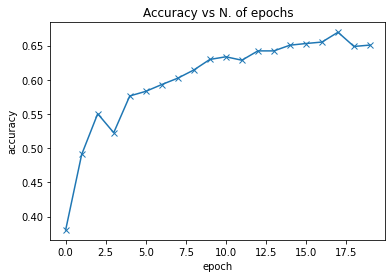

An accuracy of 66.95% on the validation set.

An accuracy of 62.62% on the test set.
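Loss and accuracy figures like those above are typically computed with an evaluation pass along the lines of the sketch below (a hypothetical helper, not the code used in this work): the model is put in evaluation mode, gradients are disabled, and the predicted class is the arg-max of the logits.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()
def evaluate(model, dataset, batch_size=128):
    """Return (mean cross-entropy loss, accuracy in %) over a dataset."""
    model.eval()  # disable dropout, use running batch-norm statistics
    loss_fn = nn.CrossEntropyLoss(reduction="sum")
    loader = DataLoader(dataset, batch_size=batch_size)
    total_loss, correct, n = 0.0, 0, 0
    for images, labels in loader:
        logits = model(images)
        total_loss += loss_fn(logits, labels).item()
        correct += (logits.argmax(dim=1) == labels).sum().item()
        n += labels.size(0)
    return total_loss / n, 100.0 * correct / n


if __name__ == "__main__":
    # Toy check: a linear "model" that scores the input's own one-hot class highest.
    model = nn.Linear(7, 7, bias=False)
    with torch.no_grad():
        model.weight.copy_(torch.eye(7) * 10)
    print(evaluate(model, TensorDataset(torch.eye(7), torch.arange(7))))
```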

To get close to the actual state of the art, it is likely necessary to apply transfer learning techniques to well-known networks (e.g. VGG16), as in (Khaireddin and Chen 2021) and (Khanzada, Bai, and Celepcikay 2020).

The results obtained are, however, worse than those of (Vulpe-Grigoraşi and Grigore 2021), mostly because of the limited number of epochs¹ and because of the different transformations applied at the beginning: for reasons we could not determine, the ones suggested in the paper seemed to decrease the performance of the network. The last reason is that the model is somewhat different (we already mentioned the removal of the final Softmax layer, but strides were also added, among other changes); these decisions were made purely by trial and error, with little or no theory or references behind them.

In addition, PyTorch's default weight initialization may be part of the problem: other libraries use different defaults, which might yield better performance on this dataset.
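One way to test this hypothesis is to override PyTorch's defaults explicitly. The sketch below re-initializes every convolutional and linear layer with Xavier/Glorot uniform weights and zero biases via `Module.apply`. For these layers PyTorch's own default is a Kaiming-uniform variant, while Glorot uniform is, for example, the default kernel initializer in Keras. The choice of Glorot uniform is an assumption for illustration; the text does not say which scheme would work better, and the small model in the demo is hypothetical.

```python
import torch
import torch.nn as nn


def init_weights(module):
    """Re-initialize conv and linear layers with Xavier/Glorot uniform weights and zero biases."""
    if isinstance(module, (nn.Conv2d, nn.Linear)):
        nn.init.xavier_uniform_(module.weight)
        if module.bias is not None:
            nn.init.zeros_(module.bias)


if __name__ == "__main__":
    net = nn.Sequential(nn.Conv2d(1, 4, 3), nn.ReLU(), nn.Flatten(), nn.Linear(4 * 46 * 46, 7))
    net.apply(init_weights)  # applies init_weights recursively to every submodule
    print(net(torch.randn(1, 1, 48, 48)).shape)
```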

The results are not satisfactory. Unfortunately, lack of time and hardware played a major role in this work; continuing in this direction would probably have produced more valid results.

Figure captions: A graphical representation of the model; Loss; Accuracy.

¹ Looking at Figure 1, there seems to be a sign of overfitting in the last few epochs, but judging from the earlier epochs it is reasonable to suppose that this could be a periodic fluctuation.