Zhangyang Qi1,5*, Yunhan Yang1*, Mengchen Zhang2,5, Long Xing3,5, Xiaoyang Wu1, Tong Wu4,5, Dahua Lin4,5, Xihui Liu1, Jiaqi Wang5✉, Hengshuang Zhao1✉

* Equal Contribution, ✉ Corresponding Authors

1 The University of Hong Kong,

2 Zhejiang University,

3 University of Science and Technology of China,

4 The Chinese University of Hong Kong,

5 Shanghai AI Laboratory,

- [2024.07.03] We released the arXiv paper, code v1.0, project page, model weights, and dataset card of Tailor3D. Note that in v1.0, the front and back triplanes are fused with a Conv2d layer.

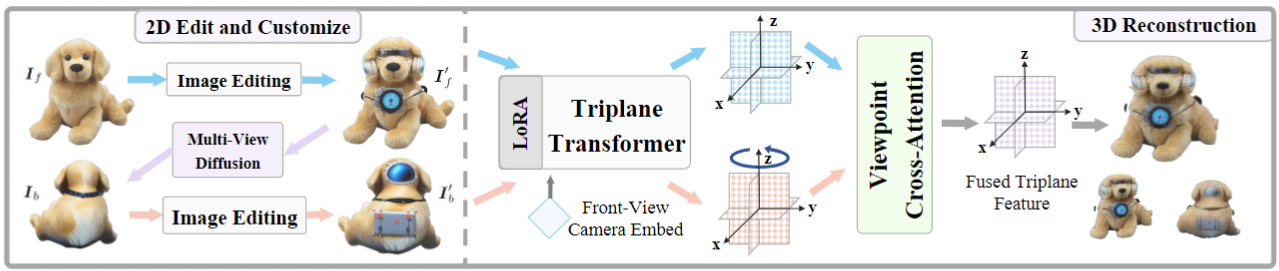

We propose Tailor3D, a novel pipeline that creates customized 3D assets from editable dual-side images using feed-forward reconstruction. The approach mimics how a tailor makes local changes to an object and transfers its style:

- Use image editing methods to edit the front-view image. The front-view image can be provided or generated from text.

- Use multi-view diffusion techniques (e.g., Zero-1-to-3) to generate the back view of the object.

- Use image editing methods to edit the back-view image.

- Use our proposed Dual-Sided LRM (Large Reconstruction Model) to seamlessly combine the front and back images into the customized 3D asset.

Each step takes only a few seconds, allowing users to interactively obtain the 3D objects they desire. Experimental results show Tailor3D's effectiveness in 3D generative fill and style transfer, providing an efficient solution for 3D asset editing.

To set up the environment:

```bash
conda create -n tailor3d python=3.11
conda activate tailor3d
conda install pytorch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2 pytorch-cuda=11.8 -c pytorch -c nvidia
pip install -r requirements.txt
```

Model weights are released on Hugging Face and are downloaded automatically the first time you run the inference script.

| Model | Pretrained Model | Layers | Feature Dim. | Triplane Dim. | Input Res. | Image Encoder |
|---|---|---|---|---|---|---|
| tailor3d-small-1.0 | openlrm-mix-small-1.1 | 12 | 512 | 32 | 224 | dinov2_vits14_reg |
| tailor3d-base-1.0 | openlrm-mix-base-1.1 | 12 | 768 | 48 | 336 | dinov2_vitb14_reg |
| tailor3d-large-1.0 | openlrm-mix-large-1.1 | 16 | 1024 | 80 | 448 | dinov2_vitb14_reg |

- We put some sample inputs under `assets/sample_input/demo`. Note that the folders `front` and `back` should contain the edited images of the object's front view and rear view, respectively.
- Prepare RGBA images or RGB images with a white background (using background removal tools, e.g., Rembg, Clipdrop).
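Before running inference, it can help to confirm that the two folders line up. The function below is a minimal sketch and is not part of the Tailor3D code: `check_front_back_pairs` is a hypothetical helper, and it assumes that a front/back pair shares the same file name, which the README does not state explicitly.

```bash
# Hypothetical helper (not part of Tailor3D): check that <input_dir>/front and
# <input_dir>/back contain matching images.
# ASSUMPTION: a front/back pair shares the same file name.
check_front_back_pairs() {
  local input_dir="$1" missing=0 f name
  if [ ! -d "$input_dir/front" ] || [ ! -d "$input_dir/back" ]; then
    echo "error: expected $input_dir/front and $input_dir/back" >&2
    return 2
  fi
  # Front views without a matching back view.
  for f in "$input_dir"/front/*; do
    [ -f "$f" ] || continue  # skip the unexpanded pattern when the folder is empty
    name=$(basename "$f")
    if [ ! -f "$input_dir/back/$name" ]; then
      echo "missing back view for: $name" >&2
      missing=$((missing + 1))
    fi
  done
  # Back views without a matching front view.
  for f in "$input_dir"/back/*; do
    [ -f "$f" ] || continue
    name=$(basename "$f")
    if [ ! -f "$input_dir/front/$name" ]; then
      echo "missing front view for: $name" >&2
      missing=$((missing + 1))
    fi
  done
  # Exit status 0 only when every image has a partner.
  [ "$missing" -eq 0 ]
}
```

For example, `check_front_back_pairs assets/sample_input/demo && echo ok` lists every unmatched file on stderr and returns a non-zero status if any are found (2 if one of the folders is missing).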

An example of running inference with tailor3d-large is as follows:

```bash
# Example usage
EXPORT_VIDEO=true
EXPORT_MESH=true
DOUBLE_SIDED=true
HUGGING_FACE=true
INFER_CONFIG="./configs/all-large-2sides.yaml"
MODEL_NAME="alexzyqi/tailor3d-large-1.0"
PRETRAIN_MODEL_HF="zxhezexin/openlrm-mix-large-1.1"
IMAGE_INPUT="./assets/sample_input/demo"

python -m openlrm.launch infer.lrm --infer $INFER_CONFIG model_name=$MODEL_NAME pretrain_model_hf=$PRETRAIN_MODEL_HF image_input=$IMAGE_INPUT export_video=$EXPORT_VIDEO export_mesh=$EXPORT_MESH double_sided=$DOUBLE_SIDED inferrer.hugging_face=$HUGGING_FACE
```

- You may specify which forms of output to generate by setting the flags `EXPORT_VIDEO=true` and `EXPORT_MESH=true`.
- When `DOUBLE_SIDED=true`, Tailor3D uses both the front and back view images as input; when set to `false`, it falls back to the single-view LRM.
- When `HUGGING_FACE=true`, the Hugging Face model is used for inference; when set to `false`, inference is run to evaluate a model from the regular training process.
- `INFER_CONFIG` is the config for both training and inference; you can change it to the config of a smaller model version.
- `MODEL_NAME` corresponds to the model version specified in `INFER_CONFIG`.
- `PRETRAIN_MODEL_HF` corresponds to the pretrained model used in OpenLRM.
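Because `INFER_CONFIG`, `MODEL_NAME`, and `PRETRAIN_MODEL_HF` must refer to the same model size, a small helper can keep them in sync. This is a sketch under stated assumptions, not an official script: `tailor3d_variant` is hypothetical, and it assumes the small and base configs follow the `configs/all-<size>-2sides.yaml` pattern of the large example and that the small and base weights live under the same Hugging Face namespaces. Check the `configs/` folder and the model cards before relying on it.

```bash
# Hypothetical helper: print matching INFER_CONFIG / MODEL_NAME / PRETRAIN_MODEL_HF
# assignments for one model size from the model table.
# ASSUMPTIONS: configs are named all-<size>-2sides.yaml and the repos for all sizes
# use the alexzyqi/ and zxhezexin/ namespaces seen in the large example.
tailor3d_variant() {
  case "$1" in
    small|base|large) ;;
    *) echo "unknown size: $1 (expected small, base or large)" >&2
       return 1 ;;
  esac
  echo "INFER_CONFIG=./configs/all-$1-2sides.yaml"
  echo "MODEL_NAME=alexzyqi/tailor3d-$1-1.0"
  echo "PRETRAIN_MODEL_HF=zxhezexin/openlrm-mix-$1-1.1"
}
```

Running `eval "$(tailor3d_variant base)"` before the `python -m openlrm.launch infer.lrm ...` command sets the three variables consistently; the other flags stay as in the example.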

We use the Gobjaverse-LVIS dataset, which you can access through its Hugging Face dataset card.

- `configs/accelerate-train.yaml`: a sample Accelerate config file for 8 GPUs with `bf16` mixed precision.
- `configs/accelerate-train-4gpus.yaml`: a sample Accelerate config file for 4 GPUs with `bf16` mixed precision.
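If you switch between machines, a tiny selector can pick the matching sample file for `ACC_CONFIG`. This is only a sketch: `pick_acc_config` is a hypothetical helper, and it assumes the two sample files above are the only ones available, so any other GPU count needs a config of your own (for example, one generated interactively with `accelerate config`).

```bash
# Hypothetical helper: map a GPU count to one of the two sample Accelerate configs.
# ASSUMPTION: only the 8-GPU and 4-GPU sample configs exist.
pick_acc_config() {
  case "$1" in
    8) echo "./configs/accelerate-train.yaml" ;;
    4) echo "./configs/accelerate-train-4gpus.yaml" ;;
    *) echo "no sample config for $1 GPUs; create one with 'accelerate config'" >&2
       return 1 ;;
  esac
}
```

For example, `ACC_CONFIG="$(pick_acc_config 4)"` selects the 4-GPU file before launching training.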

An example of training tailor3d-large is as follows:

```bash
# Example usage
ACC_CONFIG="./configs/accelerate-train.yaml"
TRAIN_CONFIG="./configs/all-large-2sides.yaml"

accelerate launch --config_file $ACC_CONFIG -m openlrm.launch train.lrm --config $TRAIN_CONFIG
```

- A sample training config file is provided at `TRAIN_CONFIG`; the training and inference configs are in the same YAML file.

- The inference pipeline is compatible with Hugging Face utilities for convenience.
- To use a training checkpoint for inference, convert it to an inference model by running the following script:

```bash
python scripts/convert_hf.py --config configs/all-large-2sides.yaml
```

- The converted model is saved under `exps/releases` by default and can be used for inference by following the inference instructions above.
- Note: the converted `model.safetensors` contains the full set of parameters.

| Model | Model Size | Model Size (with pretrained model) |
|---|---|---|
| tailor3d-small-1.0 | 17.4 MB | 436 MB |
| tailor3d-base-1.0 | 26.8 MB | 1.0 GB |
| tailor3d-large-1.0 | 45 MB | 1.8 GB |

To upload the converted model to the Hugging Face Hub:

```bash
python scripts/upload_hub.py --model_type lrm --local_ckpt exps/releases/gobjaverse-2sides-large/0428_conv_e10/step_013340 --repo_id alexzyqi/Tailor3D-Large-1.0
```

Change `--local_ckpt` and `--repo_id` to your own checkpoint path and repository.
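To avoid copying the step number by hand, you could look up the newest checkpoint folder. This is a sketch, not part of the repository: `latest_step_dir` is a hypothetical helper, and it assumes checkpoints are stored as zero-padded `step_NNNNNN` directories, as in the example path `step_013340`, so that a plain lexical sort orders them by step.

```bash
# Hypothetical helper: print the newest step_* directory directly under $1.
# ASSUMPTION: step numbers are zero-padded, so lexical order equals step order.
latest_step_dir() {
  local latest
  latest=$(find "$1" -mindepth 1 -maxdepth 1 -type d -name 'step_*' 2>/dev/null \
    | LC_ALL=C sort | tail -n 1)
  if [ -z "$latest" ]; then
    echo "no step_* directories under $1" >&2
    return 1
  fi
  echo "$latest"
}
```

For example, `--local_ckpt "$(latest_step_dir exps/releases/gobjaverse-2sides-large/0428_conv_e10)"` would resolve to the highest step in that experiment folder; `--repo_id` still has to be set to your own repository.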

- Gobjaverse: rendered by making two complete rotations around each object in Objaverse (28K 3D objects).

- LVIS: the Large Vocabulary Instance Segmentation (LVIS) dataset, which focuses on objects. Gobjaverse-LVIS has 21,445 objects in total.

- Stable Diffusion, Midjourney: text-to-image tools used to generate the front-view image.

- Adobe Express: Image generative geometry and pattern fill.

- InstantStyle: Image customized styling.

- ControlNet: Image style transfer.

- Stable Zero123: 3D-aware Multi-view Diffusion method to generate the back-view image based on the front image.

- Large Reconstruction Model: the LRM paper and its open-source implementation, OpenLRM.

If you find this work useful for your research, please consider citing:

```bibtex
@misc{qi2024tailor3dcustomized3dassets,
      title={Tailor3D: Customized 3D Assets Editing and Generation with Dual-Side Images},
      author={Zhangyang Qi and Yunhan Yang and Mengchen Zhang and Long Xing and Xiaoyang Wu and Tong Wu and Dahua Lin and Xihui Liu and Jiaqi Wang and Hengshuang Zhao},
      year={2024},
      eprint={2407.06191},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2407.06191},
}
```