Embodiment mapping for robotic hands from human hand motions

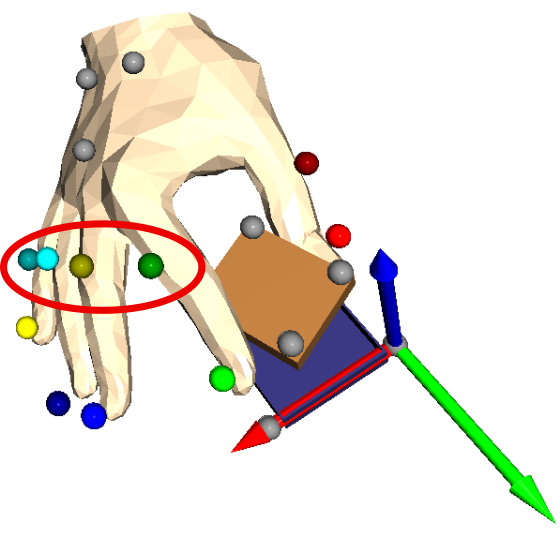

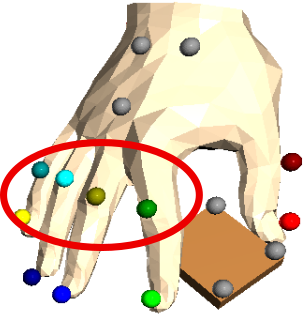

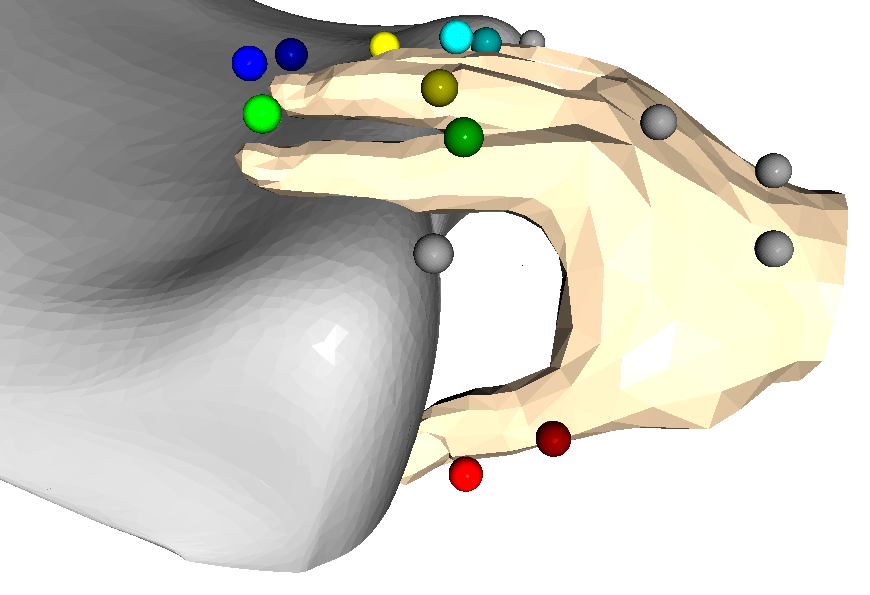

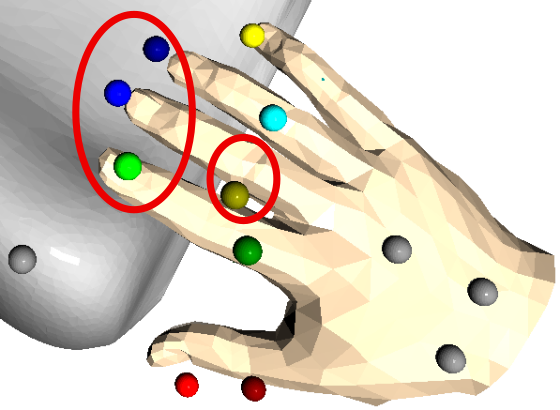

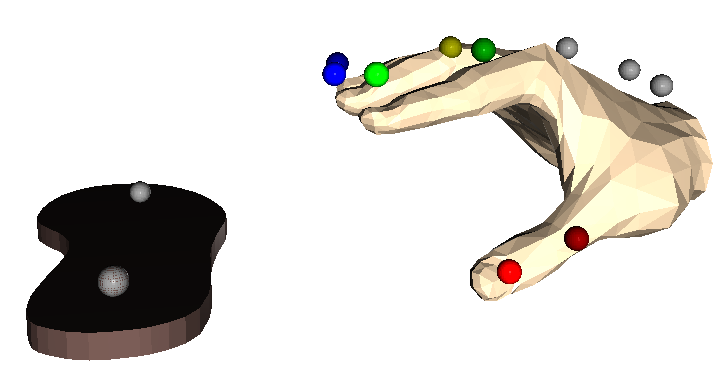

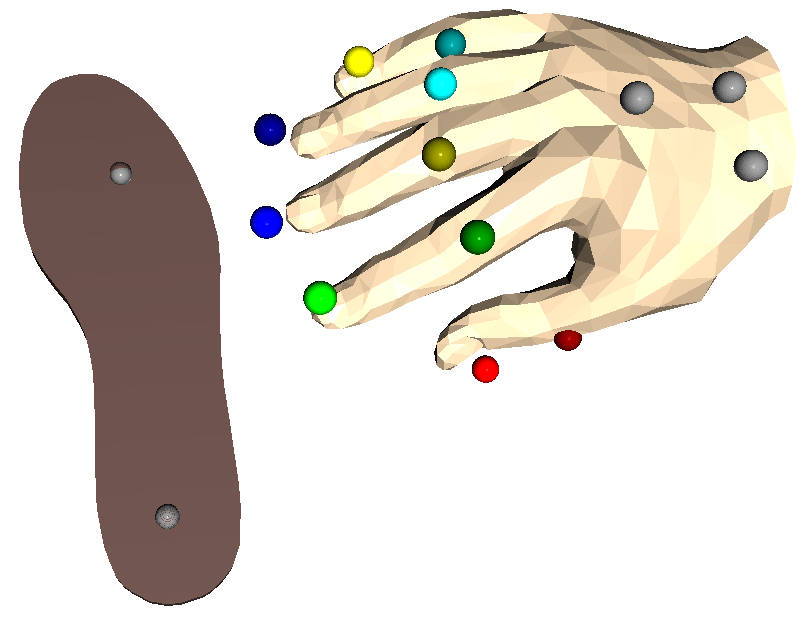

The general idea of this software package is to use the MANO hand model to represent human hand configurations and then transfer the state of the MANO model to robotic hands. This allows us to quickly change the motion capture approach because we have an independent representation of the hand's state. Furthermore, we can easily change the target system because we just need to configure the mapping from MANO to the target hand.
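The decoupling described above can be illustrated with a minimal, self-contained sketch. It is not the package's API: `HandState`, `from_normalized_markers`, `to_two_finger_gripper`, `to_five_finger_hand`, and `embody` are hypothetical names, and the per-finger flexion value is a deliberately simplified stand-in for the actual MANO pose and shape parameters. It only shows why an independent hand representation lets the capture front end and the robot back end be swapped separately.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class HandState:
    """Hypothetical, simplified stand-in for a MANO hand configuration."""
    flexion: Dict[str, float]  # finger name -> flexion, 0.0 (open) to 1.0 (closed)


def from_normalized_markers(sample: Dict[str, float]) -> HandState:
    """Front end (assumed): clamp per-finger measurements to [0, 1]."""
    return HandState({f: min(1.0, max(0.0, v)) for f, v in sample.items()})


def to_two_finger_gripper(state: HandState) -> Dict[str, float]:
    """Back end (assumed): average all fingers into one closure command."""
    closure = sum(state.flexion.values()) / len(state.flexion)
    return {"gripper_closure": closure}


def to_five_finger_hand(state: HandState) -> Dict[str, float]:
    """Back end (assumed): scale each flexion to a joint angle in radians."""
    return {f"{finger}_joint": 1.5 * v for finger, v in state.flexion.items()}


# Adding a new target only requires registering another mapping function.
TARGETS: Dict[str, Callable[[HandState], Dict[str, float]]] = {
    "gripper": to_two_finger_gripper,
    "five_finger": to_five_finger_hand,
}


def embody(sample, front_end, target_name):
    """Capture data -> independent hand state -> target-specific commands."""
    return TARGETS[target_name](front_end(sample))


sample = {"thumb": 0.5, "index": 1.5}  # made-up measurement
gripper_cmd = embody(sample, from_normalized_markers, "gripper")
hand_cmd = embody(sample, from_normalized_markers, "five_finger")
```

In this sketch, replacing the motion capture approach means replacing only the front-end function, and supporting a new hand means adding one entry to `TARGETS`.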

The currently implemented motion capture approaches are:

- marker-based motion capture with the Qualisys system

The currently implemented target systems are:

- Mia hand from Prensilia

- Dexterous Hand from Shadow

- Robotiq 2F-140 gripper

- BarrettHand

This is the implementation of the paper

A. Fabisch, M. Uliano, D. Marschner, M. Laux, J. Brust and M. Controzzi,

A Modular Approach to the Embodiment of Hand Motions from Human Demonstrations,

2022 IEEE-RAS 21st International Conference on Humanoid Robots (Humanoids),

Ginowan, Japan, 2022, pp. 801-808, doi: 10.1109/Humanoids53995.2022.10000165.

It is available from arxiv.org as a preprint or from IEEE.

Please cite it as

@INPROCEEDINGS{Fabisch2022,
  author={Fabisch, Alexander and Uliano, Manuela and Marschner, Dennis and Laux, Melvin and Brust, Johannes and Controzzi, Marco},
  booktitle={2022 IEEE-RAS 21st International Conference on Humanoid Robots (Humanoids)},
  title={A Modular Approach to the Embodiment of Hand Motions from Human Demonstrations},
  year={2022},
  pages={801--808},
  doi={10.1109/Humanoids53995.2022.10000165}
}

The dataset is available at Zenodo (record 7130208).

We recommend using zenodo_get to download the data:

pip install zenodo-get

mkdir -p some/folder/in/which/the/data/is/located

cd some/folder/in/which/the/data/is/located

zenodo_get 7130208

Detailed documentation is available here.
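After the download step, it can be useful to check that the files arrived intact. The following is a minimal sketch that assumes zenodo_get has written a checksum file named `md5sums.txt` in the usual `md5sum` format (`<hash>  <filename>` per line) into the download folder; the file name and format are assumptions here, and `find_corrupt_files` is a hypothetical helper, not part of this package.

```python
import hashlib
from pathlib import Path


def md5_of_file(path, chunk_size=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_corrupt_files(folder, checksum_file="md5sums.txt"):
    """Return names of listed files that are missing or have a wrong MD5.

    Assumes one '<hash>  <filename>' entry per line (md5sum format).
    """
    folder = Path(folder)
    bad = []
    for line in (folder / checksum_file).read_text().splitlines():
        if not line.strip():
            continue  # skip empty lines
        expected, name = line.split(maxsplit=1)
        name = name.lstrip("*").strip()  # '*' marks binary mode in md5sum
        path = folder / name
        if not path.is_file() or md5_of_file(path) != expected.lower():
            bad.append(name)
    return bad
```

Calling `find_corrupt_files("some/folder/in/which/the/data/is/located")` would return an empty list if every listed file matches its checksum.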

Scripts are located at bin/.

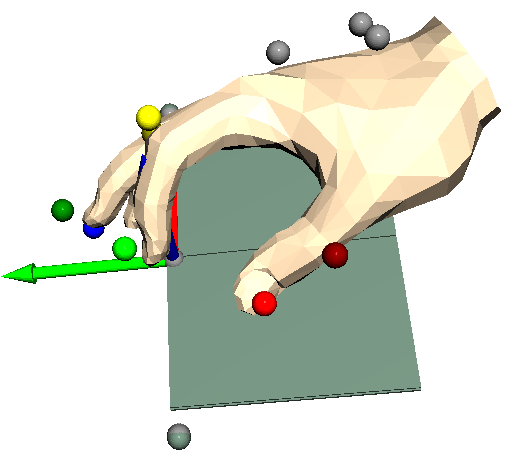

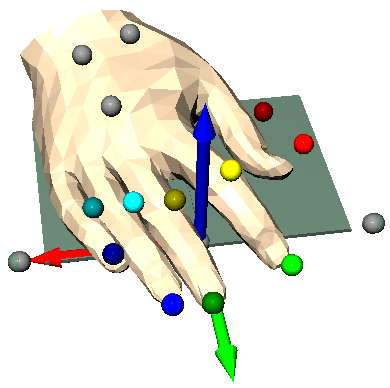

See bin/vis_markers_to_mano_trajectory.py

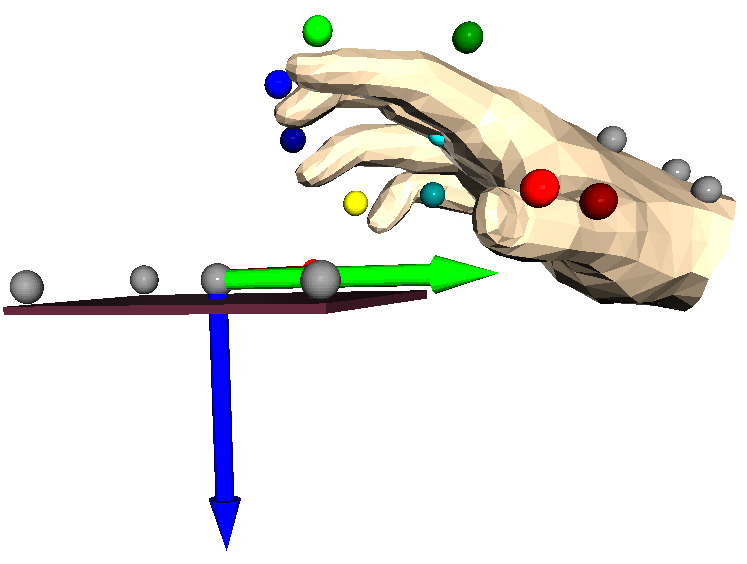

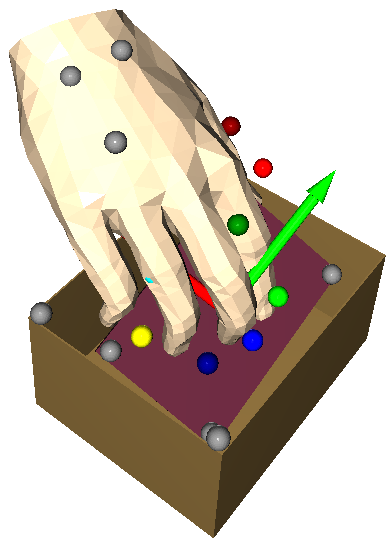

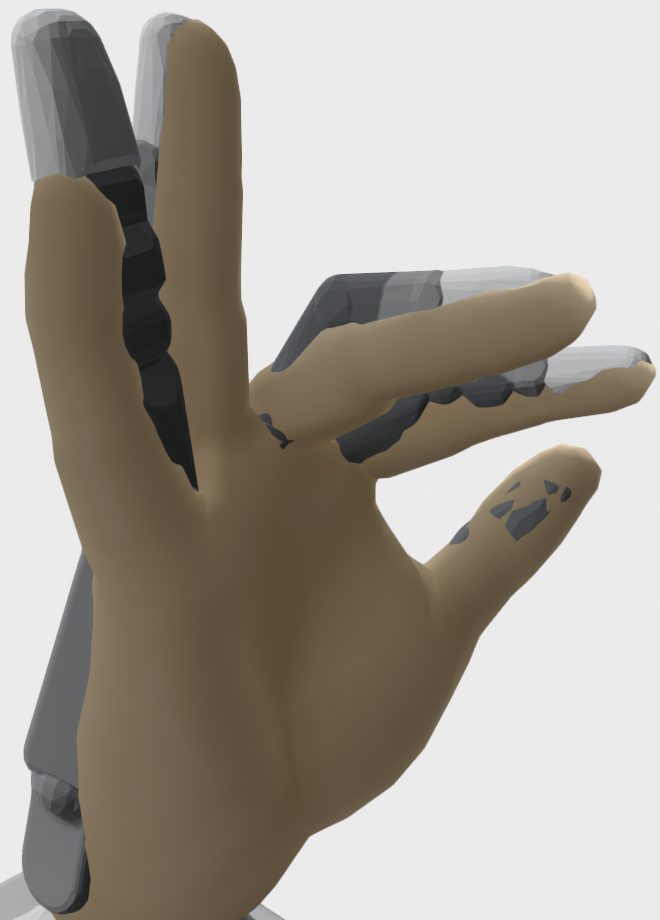

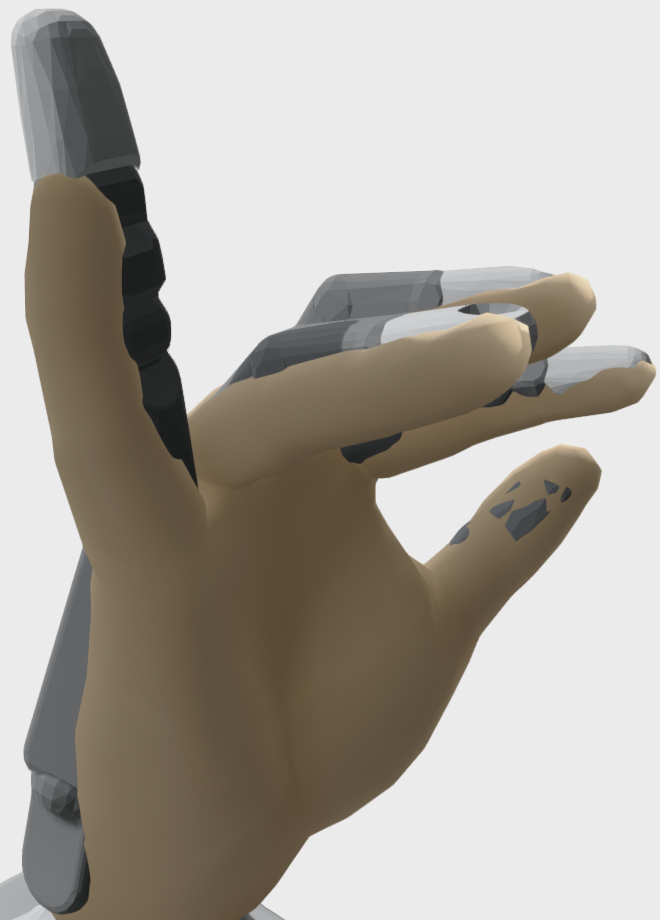

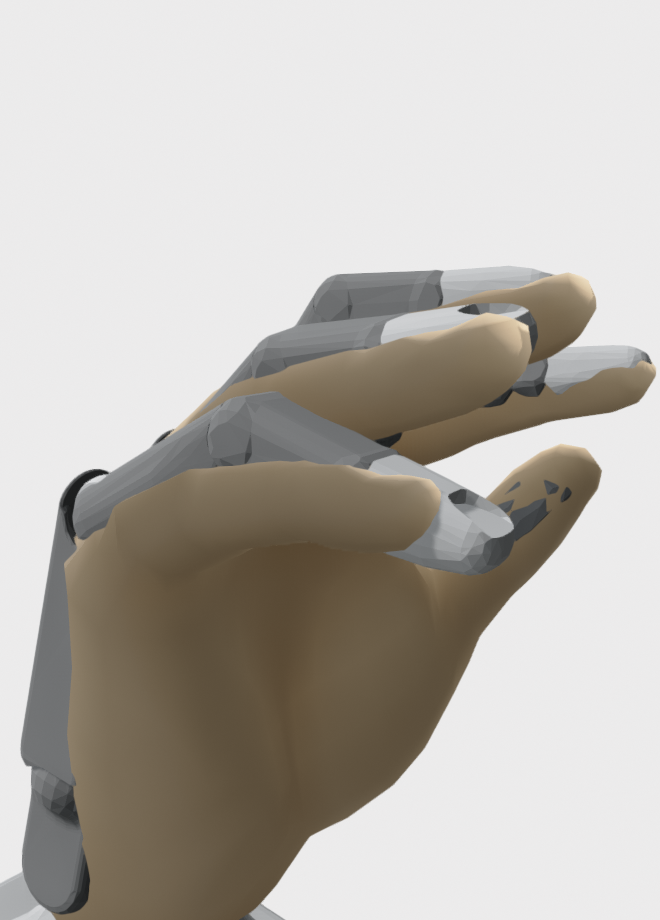

See bin/gui_robot_embodiment.py

You can run an example with test data from the main directory with

python bin/vis_markers_to_robot.py shadow --demo-file test/data/recording.tsv --mocap-config examples/config/markers/20210826_april.yaml --mano-config examples/config/mano/20210610_april.yaml --record-mapping-config examples/config/record_mapping/20211105_april.yaml --visual-objects pillow-small --show-mano

or

python bin/vis_markers_to_robot.py mia --demo-file test/data/recording.tsv --mocap-config examples/config/markers/20210826_april.yaml --mano-config examples/config/mano/20210610_april.yaml --record-mapping-config examples/config/record_mapping/20211105_april.yaml --visual-objects pillow-small --mia-thumb-adducted --show-mano

While this source code is released under BSD-3-clause license, it also contains models of robotic hands that have been released under different licenses:

- Mia hand: BSD-3-clause license

- Shadow dexterous hand: GPL v2.0

- Robotiq 2F-140: BSD-2-clause license

This library was initially developed at the Robotics Innovation Center of the German Research Center for Artificial Intelligence (DFKI GmbH) in Bremen. During this phase, the work was supported through a grant from the European Commission (870142).