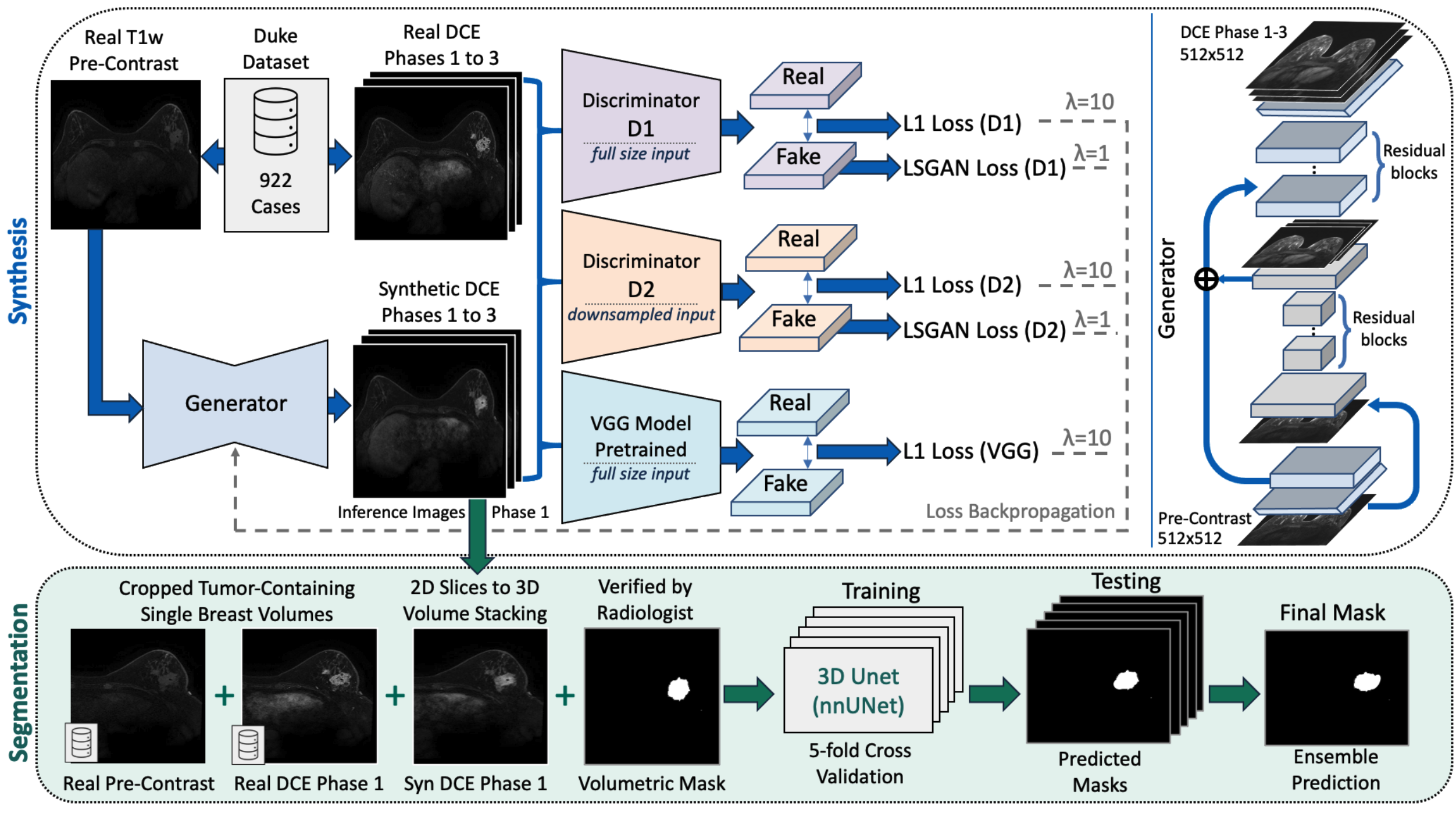

Official repository of "Simulating Dynamic Tumor Contrast Enhancement in Breast MRI using Conditional Generative Adversarial Networks"

- Code to extract 2D PNGs from 3D NIfTI files for the multi-sequence train-val-test split.

- Code to concatenate multiple DCE-MRI sequences into the channels of a single image, which is later used as input to the synthesis model.

- Script to train the version of the synthesis model that jointly generates images for the corresponding DCE-MRI sequences 1, 2 and 3.

- Script to test (run inference with) the trained synthesis model, which jointly generates images for the corresponding DCE-MRI sequences 1, 2 and 3 of the test set.

- Script to compute, per DCE-MRI sequence (e.g. 1, 2 and 3), the ImageNet- and RadImageNet-based Fréchet Inception Distances (FIDs) for the GAN-generated data.

- Notebook to compute and visualize contrast enhancement kinetic patterns for corresponding multi-sequence DCE-MRI data.

- Code to extract 2D PNGs from 3D NIfTI files for the single-sequence train-val-test split.

- Script to train the image synthesis model.

- Script to test the image synthesis model.
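The channel concatenation step listed above can be illustrated with a minimal NumPy sketch. This is not the repository's code: the function name `stack_sequences` and the toy arrays are hypothetical, and it assumes each DCE-MRI sequence has already been extracted as a 2D grayscale slice of identical size.

```python
import numpy as np


def stack_sequences(slices):
    """Stack same-sized 2D grayscale slices into one H x W x C array.

    Hypothetical helper for illustration; each slice stands in for the
    same axial position in a different DCE-MRI sequence.
    """
    arrays = [np.asarray(s) for s in slices]
    if not arrays:
        raise ValueError("at least one slice is required")
    if any(a.ndim != 2 or a.shape != arrays[0].shape for a in arrays):
        raise ValueError("all slices must be 2D and share the same shape")
    # The last axis becomes the channel axis: one channel per sequence.
    return np.stack(arrays, axis=-1)


# Toy stand-ins for corresponding slices of sequences 1, 2 and 3.
seq1 = np.full((4, 4), 10, dtype=np.uint8)
seq2 = np.full((4, 4), 20, dtype=np.uint8)
seq3 = np.full((4, 4), 30, dtype=np.uint8)

multi = stack_sequences([seq1, seq2, seq3])
print(multi.shape)
```

With three sequences the result has three channels, so it can be stored like an RGB image, where each channel holds one sequence rather than a colour.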

Examples of synthetic NIfTI files are available in synthesis/examples.

- Code to transform Duke DICOM files to NIfTI files.

- Code to create 3D NIfTI files from axial 2D PNGs.

- Code to separate synthesis training and test cases.

- Code to compute the image quality metrics such as SSIM, MSE, LPIPS, and more.

- Code to compute the Fréchet Inception Distance (FID) based on ImageNet and RadImageNet.

- The Duke Dataset used in this study is available on The Cancer Imaging Archive (TCIA).

- Code to prepare 3D single-breast cases for nnUNet segmentation.

- Train-test splits of the segmentation dataset.

- Script to run the full nnUNet pipeline on the Duke dataset.
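For readers unfamiliar with the FID mentioned in the list above, the following is a minimal sketch of the Fréchet distance between two sets of feature vectors, each summarized by a Gaussian. It is not the repository's FID script: the function name `frechet_distance` is hypothetical, and the random vectors only stand in for features that would normally come from an Inception network pretrained on ImageNet or RadImageNet.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two feature sets.

    feats_a, feats_b: arrays of shape (n_samples, n_features).
    Computes ||mu_a - mu_b||^2 + Tr(C_a + C_b - 2 (C_a C_b)^(1/2)).
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(feats_b, rowvar=False))
    # For positive semi-definite covariances, the trace of the matrix
    # square root of C_a C_b equals the sum of the square roots of the
    # eigenvalues of C_a C_b, which are real and non-negative.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)


# Toy features (assumption): "fake" is "real" shifted by a constant vector.
rng = np.random.default_rng(0)
real = rng.normal(size=(200, 4))
fake = real + np.array([1.0, 2.0, 0.0, 0.0])

print(frechet_distance(real, real))
print(frechet_distance(real, fake))
```

In this toy case the two covariances match, so the distance reduces to the squared norm of the mean shift: close to 0 for identical sets and close to 5 for the shifted set, up to floating-point error.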

Model weights are stored on Zenodo and made available via the medigan library.

To create your own post-contrast data, simply run:

```command
pip install medigan
```

```python
# import medigan and initialize Generators
from medigan import Generators

generators = Generators()

# generate 10 samples with model 23 (00023_PIX2PIXHD_BREAST_DCEMRI).
# Also, auto-install required model dependencies.
generators.generate(model_id='00023_PIX2PIXHD_BREAST_DCEMRI', num_samples=10, install_dependencies=True)
```

Please consider citing our work if you found it useful:

```bibtex
@article{osuala2024simulating,
  title={Simulating Dynamic Tumor Contrast Enhancement in Breast MRI using Conditional Generative Adversarial Networks},
  author={Osuala, Richard and Joshi, Smriti and Tsirikoglou, Apostolia and Garrucho, Lidia and Pinaya, Walter HL and Lang, Daniel M and Schnabel, Julia A and Diaz, Oliver and Lekadir, Karim},
  journal={arXiv preprint arXiv:2409.18872},
  year={2024}
}
```

This repository borrows code from the pre-post-synthesis, pix2pixHD and nnUNet repositories. The 254 tumour segmentation masks used in this study were provided by Caballo et al.