This is the GitHub repository for Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking [arXiv].

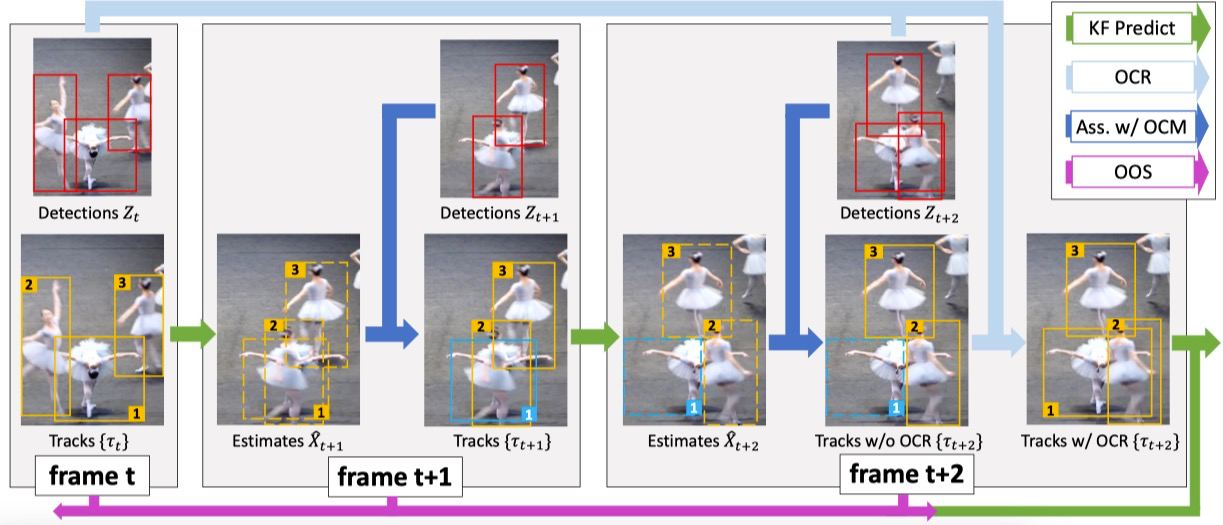

Observation-Centric SORT (OC-SORT) is a pure motion-model-based multi-object tracker. It aims to improve tracking robustness in crowded scenes and when objects move non-linearly. It is designed by identifying and fixing limitations in the Kalman filter and SORT. It integrates flexibly with different detectors and matching modules, such as appearance similarity. It remains Simple, Online and Real-time.
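To make the motion-model background concrete, here is a minimal sketch of a 1-D constant-velocity Kalman filter, the kind of linear motion model that SORT-style trackers build on. It is not taken from the OC-SORT code: the class name `ConstantVelocityKF1D`, the noise values `q` and `r`, and the toy trajectory are all assumptions made for illustration. The demo shows that, when observations stop (for example during an occlusion), the filter keeps extrapolating along a straight line while its position uncertainty grows.

```python
from dataclasses import dataclass, field


@dataclass
class ConstantVelocityKF1D:
    """Toy 1-D constant-velocity Kalman filter (illustrative only)."""
    pos: float = 0.0
    vel: float = 0.0
    # 2x2 state covariance over [pos, vel], stored as nested lists
    P: list = field(default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])
    q: float = 0.01  # process noise added to each diagonal entry (assumed)
    r: float = 1.0   # measurement noise variance (assumed)

    def predict(self, dt=1.0):
        """Propagate the state one step with x' = F x and P' = F P F^T + Q."""
        (p00, p01), (p10, p11) = self.P
        self.pos += dt * self.vel
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]
        return self.pos

    def update(self, z):
        """Correct the state with a position observation z (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r              # innovation variance
        k0, k1 = p00 / s, p10 / s     # Kalman gain
        y = z - self.pos              # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


kf = ConstantVelocityKF1D()
for t in range(1, 6):                 # five observed frames, object moves +1 per frame
    kf.predict()
    kf.update(float(t))
var_before = kf.P[0][0]

# Five frames without observations: only predict() is called.
occluded_predictions = [kf.predict() for _ in range(5)]
var_after = kf.P[0][0]

print("predictions during occlusion:", [round(p, 2) for p in occluded_predictions])
print("position variance before/after:", round(var_before, 3), round(var_after, 3))
```

If the object changes direction while unobserved, the straight-line predictions above drift away from it, which is the kind of situation the description of OC-SORT refers to as non-linear motion.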

- [04/02/2022]: A preview version is released after a preliminary cleanup and refactor.

- [03/27/2022]: The arXiv preprint of OC-SORT is released.

| Dataset | HOTA | AssA | IDF1 | MOTA | FP | FN | IDs | Frag |
|---|---|---|---|---|---|---|---|---|
| MOT17 (private) | 63.2 | 63.2 | 77.5 | 78.0 | 15,129 | 107,055 | 1,950 | 2,040 |
| MOT17 (public) | 52.4 | 57.6 | 65.1 | 58.2 | 4,379 | 230,449 | 784 | 2,006 |
| MOT20 (private) | 62.4 | 62.5 | 76.4 | 75.9 | 20,218 | 103,791 | 938 | 1,004 |
| MOT20 (public) | 54.3 | 59.5 | 67.0 | 59.9 | 4,434 | 202,502 | 554 | 2,345 |
| KITTI-cars | 76.5 | 76.4 | - | 90.3 | 2,685 | 407 | 250 | 280 |
| KITTI-pedestrian | 54.7 | 59.1 | - | 65.1 | 6,422 | 1,443 | 204 | 609 |
| DanceTrack | 55.1 | 38.0 | 54.2 | 89.4 | 114,107 | 139,083 | 1,992 | 3,838 |
| CroHD HeadTrack | 44.1 | - | 62.9 | 67.9 | 102,050 | 164,090 | 4,243 | 10,122 |
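As a reading aid for the MOTA, FP, FN and IDs columns, the sketch below applies the standard CLEAR-MOT definition, MOTA = 1 - (FP + FN + IDs) / GT, where GT is the number of ground-truth boxes. The table does not list GT, so the helper `implied_num_gt` (a hypothetical name) backs out a rough estimate from the MOT17 (private) row. Because the reported MOTA is rounded to one decimal, the estimate is only approximate and should not be read as the official box count.

```python
def mota(fp, fn, id_switches, num_gt):
    """CLEAR-MOT accuracy in percent: 100 * (1 - (FP + FN + IDs) / GT)."""
    return 100.0 * (1.0 - (fp + fn + id_switches) / num_gt)


def implied_num_gt(fp, fn, id_switches, mota_percent):
    """Invert the MOTA formula to estimate the ground-truth box count."""
    return (fp + fn + id_switches) / (1.0 - mota_percent / 100.0)


# MOT17 (private) row: FP, FN, IDs and the reported MOTA.
fp, fn, ids, reported_mota = 15129, 107055, 1950, 78.0

gt_estimate = implied_num_gt(fp, fn, ids, reported_mota)
recomputed = mota(fp, fn, ids, gt_estimate)

print(f"estimated ground-truth boxes: {gt_estimate:,.0f}")
print(f"MOTA recomputed from the estimate: {recomputed:.1f}")
```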

- Results are obtained by reusing the detections of previous methods with shared hyper-parameters. Tuning the implementation for each dataset may yield higher performance.

- The inference speed is ~28 FPS on an RTX 2080Ti GPU. If detections are provided, the OC-SORT association alone runs at 700 FPS on an i9 3.0 GHz CPU.

- A sample from the DanceTrack test set is shown below; more visualizations are available on Google Drive.

- See INSTALL.md for instructions on installing the required components.

- See GET_STARTED.md for how to get started with OC-SORT.

- See DEPLOY.md for deployment support over ONNX, TensorRT and ncnn.

The pretrained model trained on the DanceTrack train set is the original version provided by DanceTrack; we renamed it and re-hosted it on Google Drive for convenience. The weights should produce the results below:

| Dataset | HOTA | IDF1 | AssA | MOTA | DetA |
|---|---|---|---|---|---|
| DanceTrack-val | 52.1 | 51.6 | 35.3 | 87.3 | 77.2 |
| DanceTrack-test | 55.1 | 54.2 | 38.0 | 89.4 | 80.3 |

- For model weights for other datasets, please refer to the model zoo of ByteTrack for options.
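A quick way to sanity-check your own numbers against the DanceTrack rows above: HOTA is defined per localization threshold as the geometric mean of DetA and AssA and is then averaged over thresholds, so the geometric mean of the final DetA and AssA scores should land close to, but not exactly on, the reported HOTA. The sketch below is only that approximation; the helper name `approx_hota` is made up for this example, and the input values are copied from the table.

```python
import math

# (DetA, AssA, reported HOTA) copied from the DanceTrack results table.
rows = {
    "DanceTrack-val": (77.2, 35.3, 52.1),
    "DanceTrack-test": (80.3, 38.0, 55.1),
}


def approx_hota(det_a, ass_a):
    """Geometric mean of the averaged DetA and AssA (an approximation of HOTA)."""
    return math.sqrt(det_a * ass_a)


estimates = {}
for name, (det_a, ass_a, reported) in rows.items():
    estimates[name] = approx_hota(det_a, ass_a)
    print(f"{name}: sqrt(DetA * AssA) = {estimates[name]:.1f}, reported HOTA = {reported}")
```

A gap much larger than a few tenths of a point between the two values would suggest a mismatch in the evaluation setup rather than in the tracker itself.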

To run the tracker on a provided demo video from YouTube:

    python3 tools/demo_track.py --demo_type video -f exps/example/mot/yolox_dancetrack_test.py -c pretrained/bytetrack_dance_model.pth.tar --path videos/dance_demo.mp4 --fp16 --fuse --save_result --out_path demo_out.mp4

The codebase is built heavily upon YOLOX, filterpy, and ByteTrack. We thank the authors for their wonderful work. OC-SORT, filterpy and ByteTrack are available under the MIT License, and YOLOX uses the Apache License 2.0.

If you find this work useful, please consider citing our paper:

@article{cao2022observation,
  title={Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking},
  author={Cao, Jinkun and Weng, Xinshuo and Khirodkar, Rawal and Pang, Jiangmiao and Kitani, Kris},
  journal={arXiv preprint arXiv:2203.14360},
  year={2022}
}