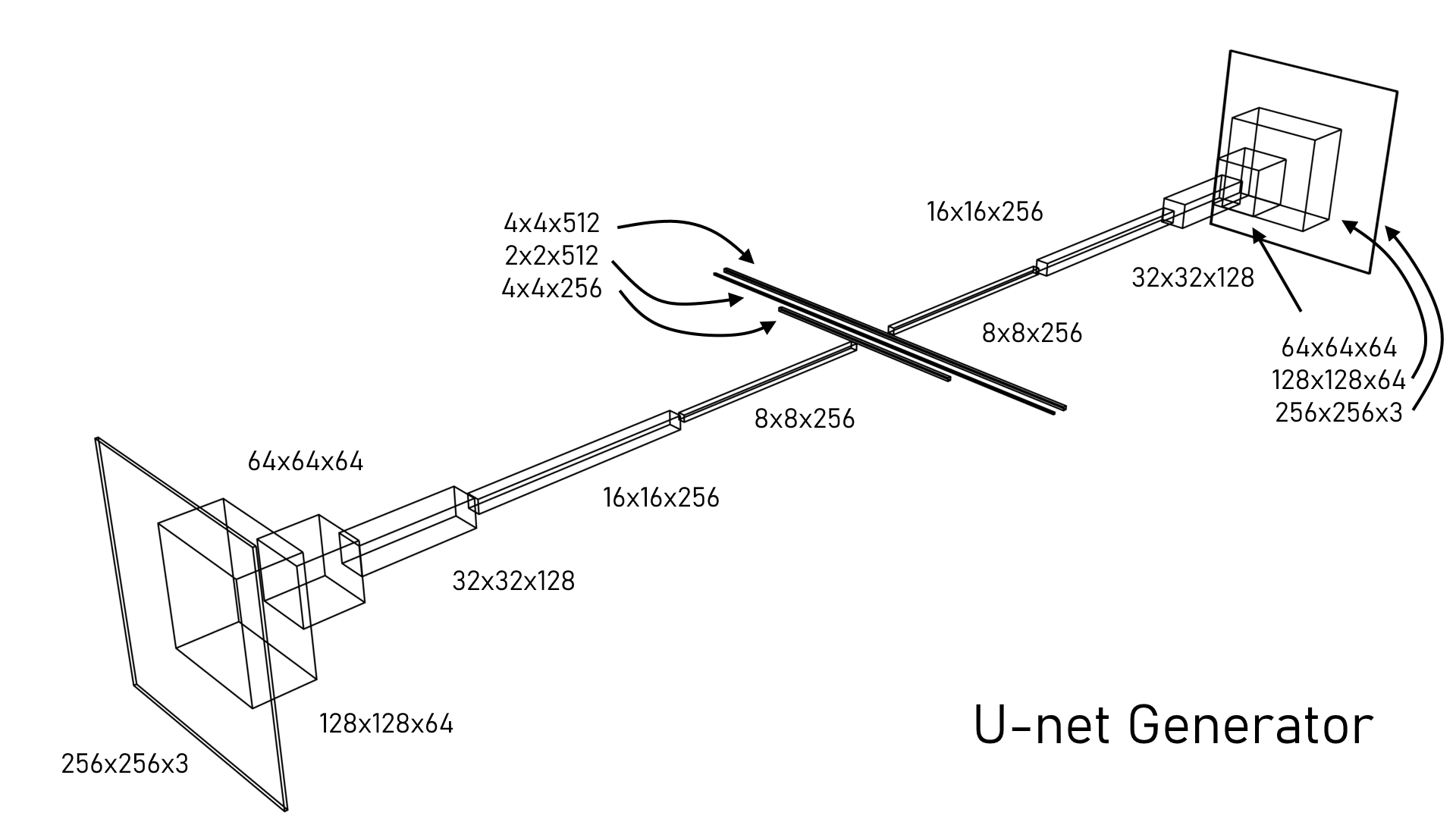

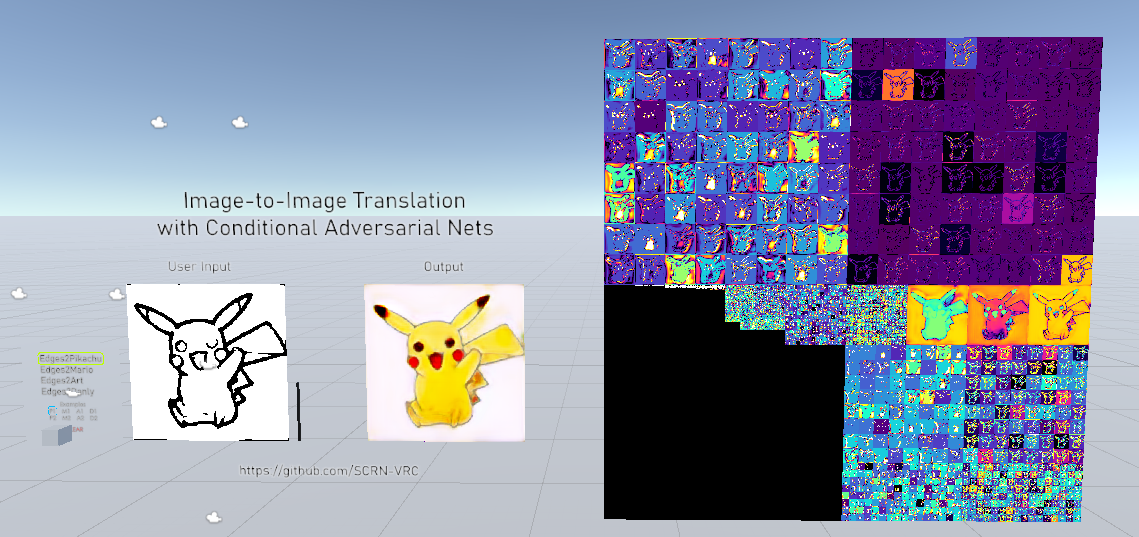

A simplified version of the pix2pix generator implemented in a fragment shader for Unity. This implementation is only 1/4 of the original pix2pix model, which helps with real-time performance in VR.

- Three versions of pix2pix: Python, C++, and HLSL

- The Python + Keras version gives a high-level overview of the network structure and also handles the offline training.

- The C++ + OpenCV version is a low-level implementation that helps me convert the network into HLSL.

- The HLSL version is the one used in-game in VRChat.

- Four pre-trained networks are included: Edges2Pikachu, Edges2Mario, Edges2Tree, and Edges2Danly. All networks were trained for 100 to 200 epochs, or 1 to 3 million iterations, depending on the size of the training set.

- VRCSDK2 set up in a Unity project

- VRChat layers must already be set up

- Clone the repository

- Open the Unity project

- Import VRCSDK2

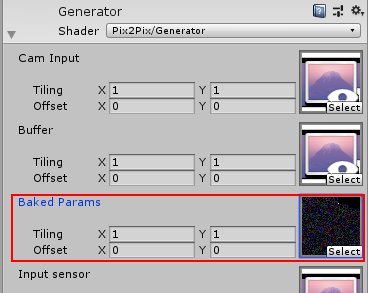

- Download the baked weights from https://mega.nz/file/N2BkAJoI#f65jOmX_wFiOjcnnFDX1qj_Gr6pZrG_LOGE4xl9vT44

- Put WeightTex.asset inside \Assets\Pix2Pix\Weights

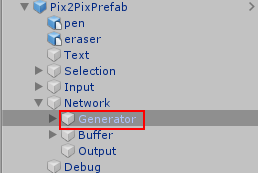

- Link WeightTex.asset to the "Baked Params" inside the Generator shader.

OR

- Open a new Unity project

- Import VRCSDK2

- Import the Pix2Pix.unitypackage in Releases

If you wish to run the Python code, here's what you need.

- Anaconda 1.19.12

- Python 3.7

- TensorFlow 1.14.0

- Keras 2.3.1

I suggest following a guide on Keras + Anaconda installation, like this one: https://inmachineswetrust.com/posts/deep-learning-setup/
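Once the environment is installed, a quick check against the versions listed above can save some debugging. The following is only a minimal sketch and not part of this repository: the helper names `version_matches` and `check_environment` are hypothetical, it assumes both packages expose a `__version__` attribute, and it does not check the Anaconda version.

```python
import importlib
import sys

# Versions taken from the list above; treating them as exact pins is an assumption.
REQUIRED = {"tensorflow": "1.14.0", "keras": "2.3.1"}
REQUIRED_PYTHON = (3, 7)


def version_matches(installed, required):
    """Return True when the installed version starts with the required components."""
    wanted = required.split(".")
    return installed.split(".")[: len(wanted)] == wanted


def check_environment(required=REQUIRED):
    """Return a list of problems; an empty list means everything matches."""
    problems = []
    if sys.version_info[:2] != REQUIRED_PYTHON:
        problems.append("Python %d.%d found, 3.7 expected" % sys.version_info[:2])
    for name, wanted in required.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            problems.append("%s is not installed (expected %s)" % (name, wanted))
            continue
        found = getattr(module, "__version__", "unknown")
        if not version_matches(found, wanted):
            problems.append("%s %s found, %s expected" % (name, found, wanted))
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["Environment matches the listed versions."]:
        print(line)
```

Run it from inside the activated Anaconda environment. Any mismatch is printed as one line per problem, so it is easy to see which package needs to be reinstalled.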

If you wish to run the C++ code, you can follow a guide on OpenCV + Visual Studio here: https://www.deciphertechnic.com/install-opencv-with-visual-studio/

If you have any questions or suggestions, you can find me on Discord: SCRN#8008