GAPR

Introduction

[RA-L 23] Heterogeneous Deep Metric Learning for Ground and Aerial Point Cloud-Based Place Recognition

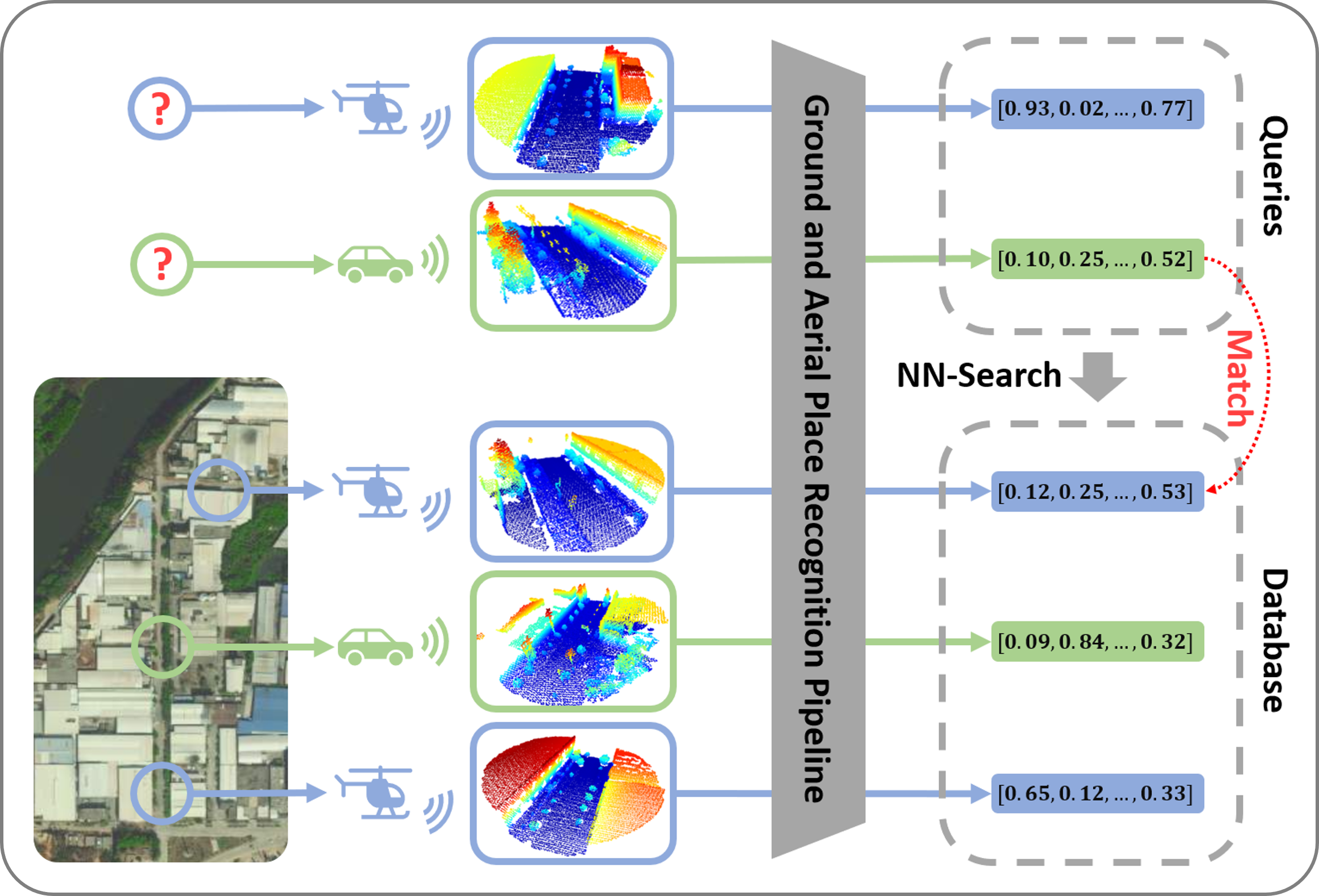

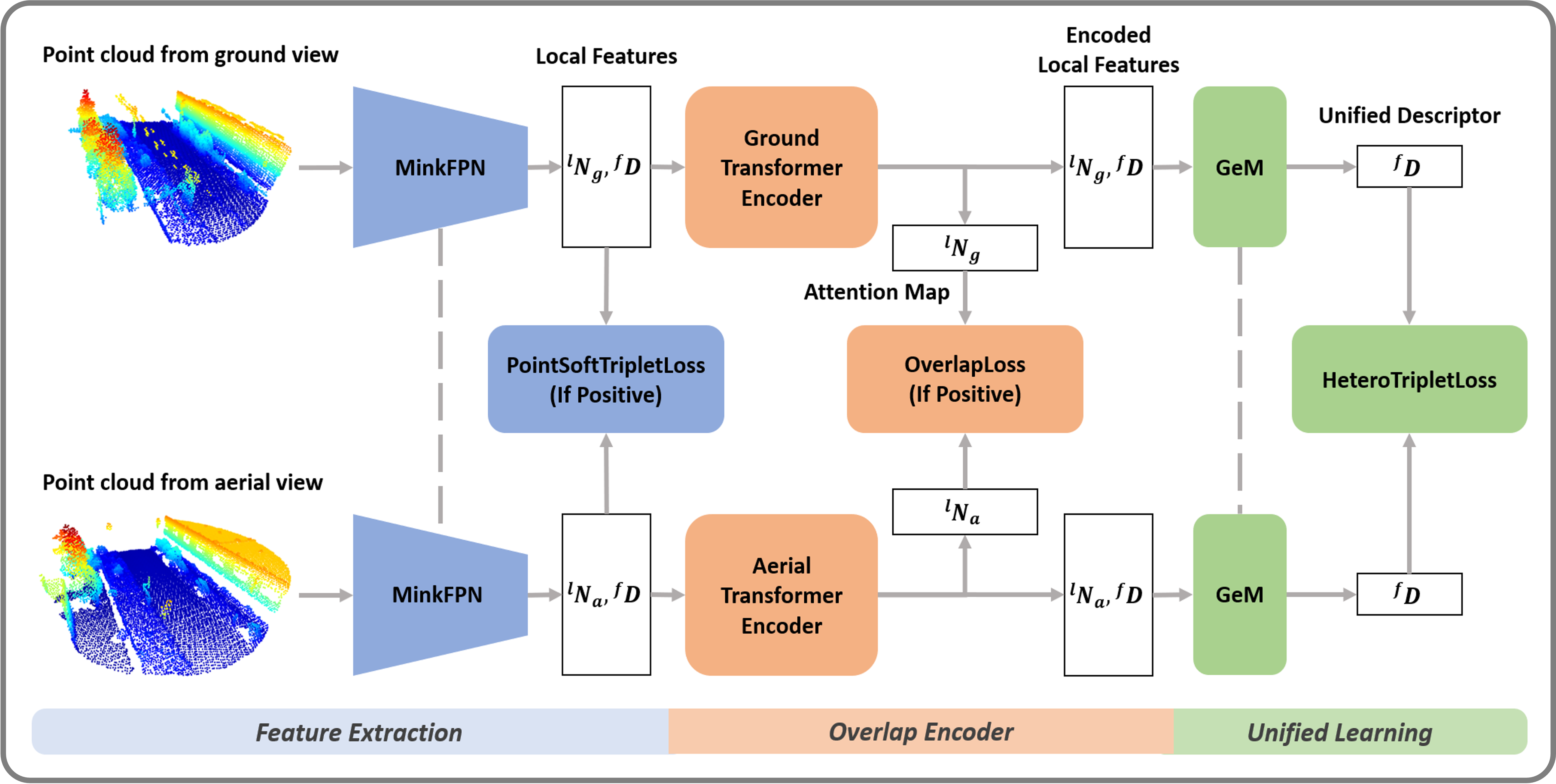

In this paper, we propose a heterogeneous deep metric learning pipeline for ground and aerial point cloud-based place recognition in large-scale environments. The pipeline extracts local features from raw ground and aerial point clouds with a sparse convolution module. The local features are then processed by transformer encoders to capture the overlap between ground and aerial point clouds, and transformed into unified descriptors for retrieval; the network is trained by backpropagating heterogeneous loss functions. To facilitate training and provide a reliable benchmark, we also propose a large-scale dataset collected from well-equipped ground and aerial robotic platforms. We demonstrate the superiority of the proposed method through comparisons with existing high-performing methods. The experimental results also show that our method can detect loop closures in a collaborative ground and aerial robotic system.
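Once ground and aerial clouds are mapped to descriptors in a shared space, cross-domain place recognition reduces to nearest-neighbor search: a ground query is matched against a database of aerial descriptors. The sketch below is illustrative only and is not the repository's evaluation code. It assumes descriptors are plain float vectors compared by Euclidean (L2) distance, and a hypothetical ground-truth map `positives` from each query index to the indices of matching aerial entries. It computes Recall@N, a metric commonly reported for point cloud place recognition; the function name `recall_at_n` is hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set


def recall_at_n(
    queries: List[Sequence[float]],   # ground descriptors (one per query cloud)
    database: List[Sequence[float]],  # aerial descriptors (one per database cloud)
    positives: Dict[int, Set[int]],   # hypothetical GT: query index -> true aerial indices
    n: int = 1,
) -> float:
    """Fraction of queries whose n nearest database entries contain a true match."""
    hits = 0
    evaluated = 0
    for qi, q in enumerate(queries):
        if not positives.get(qi):
            continue  # queries without any ground-truth match are not scored
        evaluated += 1
        # Rank every aerial descriptor by L2 distance to the ground query (brute force).
        ranked = sorted(range(len(database)), key=lambda di: math.dist(q, database[di]))
        if positives[qi] & set(ranked[:n]):
            hits += 1
    return hits / evaluated if evaluated else 0.0
```

For example, with `ground = [[0.0, 1.0], [1.0, 0.0], [0.7, 0.7]]`, `aerial = [[0.1, 0.9], [0.9, 0.1], [-1.0, 0.0]]` and `gt = {0: {0}, 1: {1}, 2: {2}}`, the third query's nearest neighbor is not its true match, so `recall_at_n(ground, aerial, gt, n=1)` is 2/3 while `n=3` gives 1.0. Brute-force sorting keeps the sketch dependency-free; for large databases a KD-tree or approximate nearest-neighbor index would be the usual replacement.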

Contributors

Yingrui Jie 揭英睿, Yilin Zhu 朱奕霖, and Hui Cheng 成慧 from SYSU RAPID Lab.

Citation

```bibtex
@ARTICLE{10173571,
  author={Jie, Yingrui and Zhu, Yilin and Cheng, Hui},
  journal={IEEE Robotics and Automation Letters},
  title={Heterogeneous Deep Metric Learning for Ground and Aerial Point Cloud-Based Place Recognition},
  year={2023},
  volume={},
  number={},
  pages={1-8},
  doi={10.1109/LRA.2023.3292623}}
```

Usage

Environment

This project has been tested on Ubuntu 18.04. The main dependencies are CUDA >= 10.2, PyTorch >= 1.9.1, MinkowskiEngine >= 0.5.4, and Open3D >= 0.15.2. Set up the requirements as follows.

- Install CUDA 10.2.

- Create the Anaconda environment, then install PyTorch and MinkowskiEngine.

```shell
conda create -n gapr python=3.8
conda activate gapr
# PyTorch 1.9.1 wheels built against CUDA 10.2
pip install torch==1.9.1+cu102 torchvision==0.10.1+cu102 torchaudio==0.9.1 -f https://download.pytorch.org/whl/torch_stable.html
conda install openblas-devel -c anaconda
# Build MinkowskiEngine v0.5.4 from source with OpenBLAS, using g++-7 as the C++ compiler
git clone https://github.com/NVIDIA/MinkowskiEngine.git
cd MinkowskiEngine
export CXX=g++-7
git checkout v0.5.4
python setup.py install --blas_include_dirs=${CONDA_PREFIX}/include --blas=openblas
cd ..
```

- Install requirements.

```shell
# Install setuptools first to avoid known compatibility issues
pip install setuptools==58.0.4
pip install tqdm open3d tensorboard pandas matplotlib pillow ptflops timm==0.9.2
```

- Download this repository.

```shell
git clone https://github.com/SYSU-RoboticsLab/GAPR.git
cd GAPR
```

Add the repository to the Python path before running the code:

```shell
export PYTHONPATH=$PYTHONPATH:/PATH_TO_CODE/GAPR
```

Dataset

Please download our benchmark dataset and unpack the tar file.
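Unpacking can be done with `tar -xf ARCHIVE -C DEST` or from Python. The sketch below uses the standard `tarfile` module and refuses archive entries that would be written outside the destination folder. The function name `unpack_dataset` and any archive file name you pass to it are hypothetical placeholders, not names taken from the release.

```python
import os
import tarfile
from typing import List


def unpack_dataset(archive_path: str, dest_dir: str) -> List[str]:
    """Extract a (possibly compressed) tar archive into dest_dir; return dest_dir's sorted contents."""
    dest_root = os.path.realpath(dest_dir)
    os.makedirs(dest_root, exist_ok=True)
    # "r:*" lets tarfile detect the compression (none, gzip, bz2 or xz) automatically.
    with tarfile.open(archive_path, "r:*") as tar:
        for member in tar.getmembers():
            target = os.path.realpath(os.path.join(dest_root, member.name))
            # Refuse member names that would land outside dest_dir, e.g. "../x" or "/etc/x".
            if os.path.commonpath([dest_root, target]) != dest_root:
                raise ValueError(f"unsafe path in archive: {member.name}")
        if hasattr(tarfile, "data_filter"):
            # Newer Python releases provide extraction filters; "data" also rejects unsafe links and modes.
            tar.extractall(dest_root, filter="data")
        else:
            tar.extractall(dest_root)
    return sorted(os.listdir(dest_root))
```

After unpacking, the `benchmark/train` and `benchmark/evaluate` folders referenced by the commands and configs in this README should sit under the chosen destination.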

Run the following commands to check the dataset (both the train and evaluate splits).

```shell
python datasets/lprdataset.py --dataset /PATH_TO_DATASET/benchmark/train
python datasets/lprdataset.py --dataset /PATH_TO_DATASET/benchmark/evaluate
```

Evaluate

- Change the dataset path in `config/evaluate/once.yaml`.

```yaml
# ...
dataloaders:
  evaluate:
    dataset: /PATH_TO_DATASET/benchmark/evaluate
# ...
```

- We provide pretrained weights for evaluation.

```shell
python evaluate/once.py --weights pretrain/GAPR.pth --yaml config/evaluate/once.yaml
```

The `--weights` argument sets the path to the model weights. Results are saved to `results/evaluate/YYMMDD_HHMMSS`.
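Because run folders are named by a zero-padded `YYMMDD_HHMMSS` timestamp, their names sort in chronological order (within one century, given the two-digit year), so the newest run can be picked by name alone. A minimal stdlib sketch, assuming every run folder under `results/evaluate` or `results/weights` follows exactly this pattern; the helper name `latest_run` is hypothetical.

```python
import os
import re
from typing import Optional

# Matches folder names such as "230715_142301" (YYMMDD_HHMMSS).
RUN_DIR = re.compile(r"^\d{6}_\d{6}$")


def latest_run(results_root: str) -> Optional[str]:
    """Return the path of the newest timestamped run folder, or None if there is none."""
    if not os.path.isdir(results_root):
        return None
    runs = [
        name
        for name in os.listdir(results_root)
        if RUN_DIR.match(name) and os.path.isdir(os.path.join(results_root, name))
    ]
    # Zero-padded timestamp strings compare in the same order as the times they encode.
    return os.path.join(results_root, max(runs)) if runs else None
```

For instance, `latest_run("results/evaluate")` returns the folder of the most recent evaluation, and `latest_run("results/weights")` does the same for training checkpoints.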

Train

- Change the dataset path in `config/gapr/train.yaml`.

```yaml
# ...
dataloaders:
  train:
    dataset: /PATH_TO_DATASET/benchmark/train
# ...
```

- Select the GPUs and start training. In this example, GPUs 1 and 3 are used, so `nproc_per_node` is set to 2 (the number of selected GPUs).

```shell
CUDA_VISIBLE_DEVICES=1,3 python -m torch.distributed.launch --nproc_per_node=2 train/train.py --yaml config/gapr/train.yaml
```

The training weights are saved to `results/weights/YYMMDD_HHMMSS`.
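Since one training process is launched per visible GPU, `--nproc_per_node` must match the number of IDs in `CUDA_VISIBLE_DEVICES`. The hypothetical helper below (not part of the repository) derives both values from a single GPU list so they cannot drift apart; the default config path mirrors the command above.

```python
import shlex
from typing import Sequence


def build_launch_command(gpu_ids: Sequence[int], yaml_path: str = "config/gapr/train.yaml") -> str:
    """Build the distributed training command for the given GPU ids."""
    if not gpu_ids:
        raise ValueError("select at least one GPU")
    if len(set(gpu_ids)) != len(gpu_ids):
        raise ValueError("GPU ids must be unique")
    visible = ",".join(str(g) for g in gpu_ids)
    # One process per visible GPU, so nproc_per_node is simply the length of the list.
    return (
        f"CUDA_VISIBLE_DEVICES={visible} python -m torch.distributed.launch "
        f"--nproc_per_node={len(gpu_ids)} train/train.py --yaml {shlex.quote(yaml_path)}"
    )
```

`build_launch_command([1, 3])` reproduces the example command exactly; the resulting string can be pasted into a shell or passed to `subprocess.run(cmd, shell=True)`.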

Acknowledgement

We thank the authors of MinkLoc3D for their excellent codebase, which served as a starting point for this project.