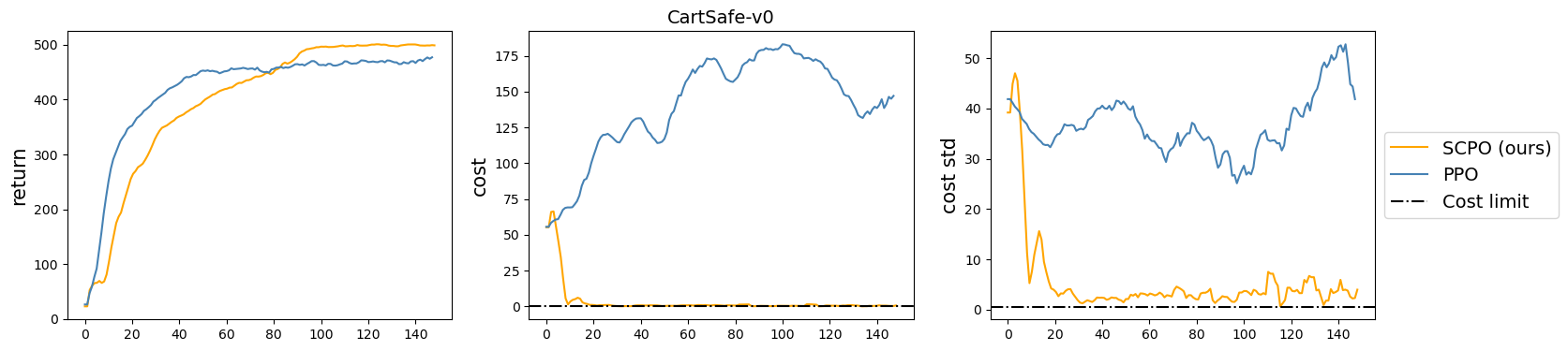

SCPO is a safe reinforcement learning algorithm. This repo is a fork of Stable Baselines3.

Note: Stable Baselines3 supports PyTorch >= 1.11.

SCPO requires Python 3.7+.
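The two version requirements above can be checked before installing anything else. The following is a minimal sketch, not part of the repository: the helper names `version_tuple` and `check_environment` are hypothetical, and the minimum versions are taken directly from the notes above.

```python
import re
import sys

# Minimum versions as stated in this README.
MIN_PYTHON = (3, 7)
MIN_TORCH = (1, 11)


def version_tuple(version):
    """Turn a version string such as '1.13.1+cu117' into (1, 13, 1)."""
    release = re.match(r"\d+(?:\.\d+)*", str(version))
    if release is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(part) for part in release.group().split("."))


def check_environment():
    """Return a list of human-readable problems; empty if all checks pass."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        problems.append("Python 3.7 or newer is required")
    try:
        import torch  # only needed for the version check
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if version_tuple(torch.__version__) < MIN_TORCH:
            problems.append("PyTorch 1.11 or newer is required")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Python and PyTorch versions look fine.")
```

Running the script prints one warning per unmet requirement, so it can be used as a quick sanity check before `pip install -r requirements.txt`.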

Install the Stable Baselines3 package:

`pip install -r requirements.txt`

We use environments from Bullet-Safety-Gym. Please follow the installation steps from https://github.com/SvenGronauer/Bullet-Safety-Gym.

To run PyTorch in GPU mode, install CUDA and PyTorch separately by following the instructions at https://pytorch.org/.
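To confirm that a GPU installation is actually picked up, a short check like the one below can help. It is a sketch under assumptions: `pick_device` is a hypothetical helper, not something this repository provides, and it falls back to the CPU when PyTorch is missing or no CUDA device is visible.

```python
def pick_device():
    """Return 'cuda' if PyTorch can see a CUDA device, otherwise 'cpu'."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed yet, so only the CPU can be reported.
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("Selected device:", pick_device())
```

Stable Baselines3 algorithms take a `device` argument that accepts values such as `"cpu"`, `"cuda"`, or `"auto"`. Whether the SCPO class in this fork exposes the same argument is an assumption to verify against `train.py`.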

Example training code is in `train.py`. To train models with the best hyperparameters, see `train_best_hyper.py`.

To run a trained model, see `play.py`.


If you find this repository useful, please cite the paper:

@article{mhamed2023scpo,
  title={SCPO: Safe Reinforcement Learning with Safety Critic Policy Optimization},
  author={Mhamed, Jaafar and Gu, Shangding},
  journal={arXiv preprint arXiv:2311.00880},
  year={2023}
}