The aim of this project is to provide a tool to train an agent on Minigrid. A human player records game demonstrations, and the agent is then trained from these demonstrations using Inverse Reinforcement Learning (IRL) techniques.

The IRL algorithms are based on the following paper: Extrapolating Beyond Suboptimal Demonstrations via Inverse Reinforcement Learning from Observations [1].

- `git clone` this repo
- `conda create -n venv_irl python=3.9`
- `conda activate venv_irl`
- Install PyTorch
- `conda install -c anaconda pyqt`
- cd to repo root
- `pip install -e .`

- Run `python agents_window.py`
- Press "Add environment", then select an environment from the drop-down
- Press "Create new agent"
- Add some demonstrations:
  - Press "New game"
  - Control the agent: WASD/arrow keys to move, 'p' to pickup, 'o' to drop, 'i' or space to interact (e.g. with a door), backspace/delete to reset
    - Commands are defined in `play_minigrid.py`, `qt_key_handler`


  - When you finish the episode, the "Save" button will activate: press it to save the demonstration
  - Collect at least 2 demonstrations

- Press

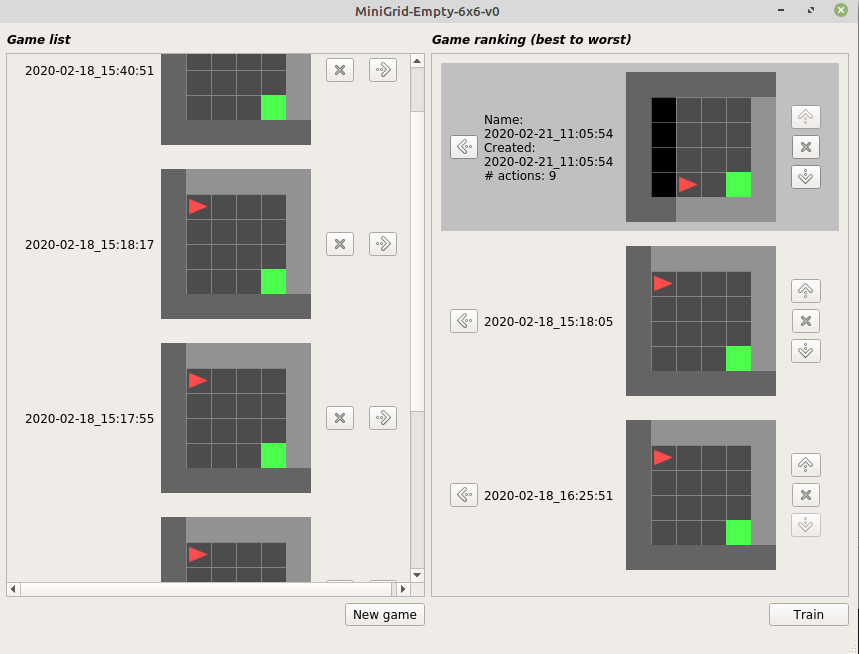

- Press the -> button next to each demonstration to add it to the list that will be used for training (rank as described)

- Press "Train" to start training
- To see training progress and example runs, press "Info" next to the agent in the Agents list

- See training plots:
  - In a terminal, cd to repo root
  - Run `tensorboard --logdir data --port 6006`
  - In a browser, navigate to `localhost:6006`

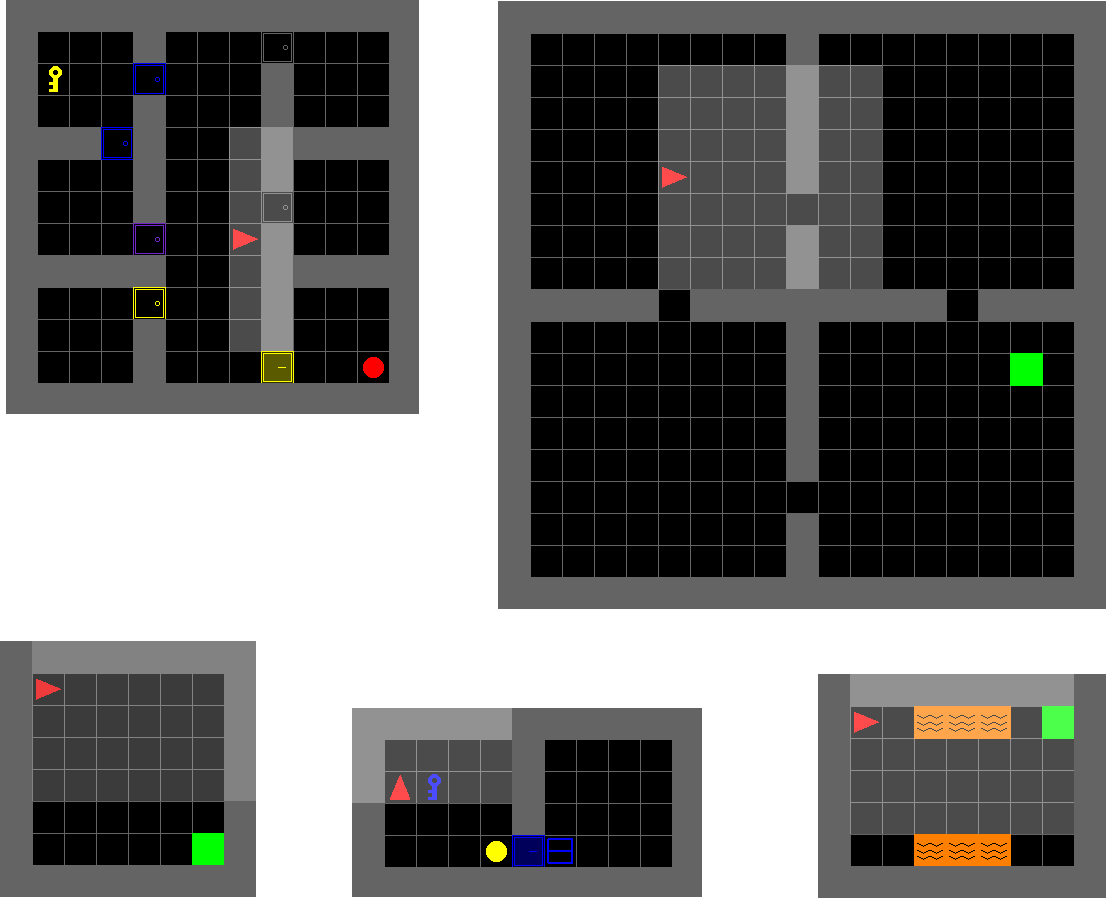
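The keyboard controls listed in the steps correspond to MiniGrid's discrete action space. The sketch below shows one way such a binding could look. The key names, the `KEY_TO_ACTION` table and the `handle_key`/`is_reset` helpers are hypothetical and are not the project's `qt_key_handler`; only the action indices follow MiniGrid's `Actions` enum (turn left, turn right, forward, pickup, drop, toggle, done). Because MiniGrid has no backward action, the sketch leaves S and the down arrow unbound; how the project uses those keys is not covered here.

```python
from enum import IntEnum
from typing import Optional


class Action(IntEnum):
    """Discrete action indices, in the order of MiniGrid's Actions enum."""
    LEFT = 0      # turn left
    RIGHT = 1     # turn right
    FORWARD = 2   # move one cell forward
    PICKUP = 3    # pick up the object in front of the agent
    DROP = 4      # drop the carried object
    TOGGLE = 5    # interact, e.g. open a door
    DONE = 6


# Hypothetical key bindings; the project's real ones live in qt_key_handler.
KEY_TO_ACTION = {
    "w": Action.FORWARD, "up": Action.FORWARD,
    "a": Action.LEFT, "left": Action.LEFT,
    "d": Action.RIGHT, "right": Action.RIGHT,
    "p": Action.PICKUP,
    "o": Action.DROP,
    "i": Action.TOGGLE, "space": Action.TOGGLE,
}

RESET_KEYS = {"backspace", "delete"}


def handle_key(key: str) -> Optional[Action]:
    """Return the MiniGrid action bound to a key, or None if it is unbound."""
    return KEY_TO_ACTION.get(key.lower())


def is_reset(key: str) -> bool:
    """True if the key should reset the current episode."""
    return key.lower() in RESET_KEYS
```

A GUI handler would call `handle_key` on each key press, step the environment with the returned action, and reset the episode when `is_reset` is true.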

Gym-minigrid [2] is a minimalistic gridworld package for OpenAI Gym.

There are many different environments; some examples are shown below.

The red triangle represents the agent that can move within the environment, while the green square (usually) represents the goal. There may also be other objects that the agent can interact with (doors, keys, etc.) each with a different color.

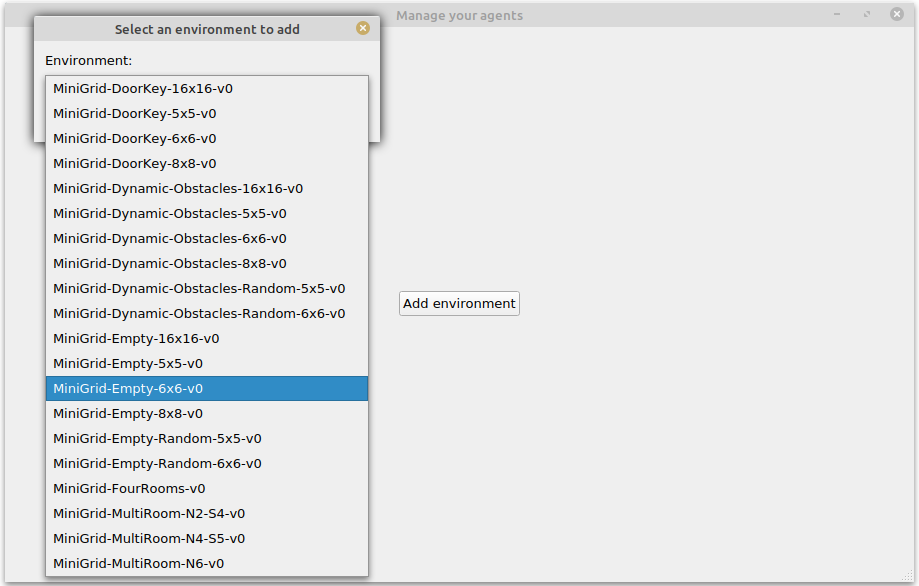

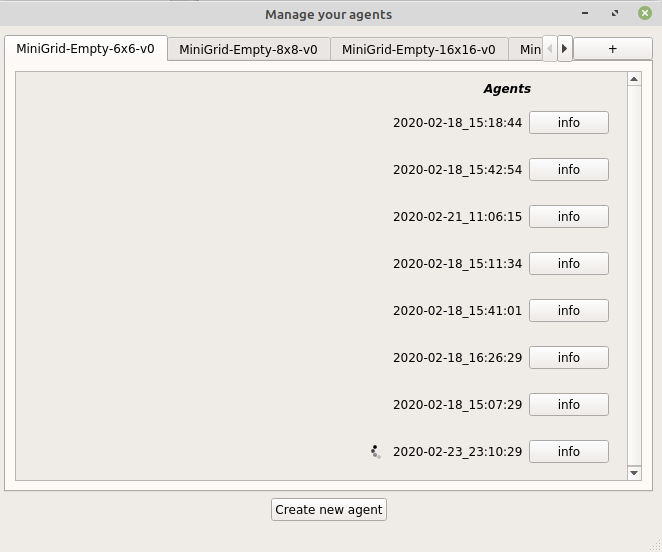

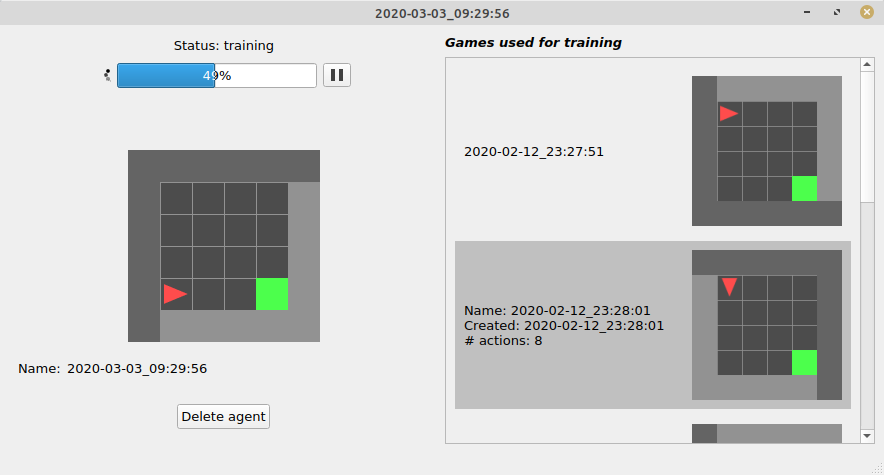

The graphical interface allows the user to create, order and manage a set of games in order to create an agent that shows a desired behavior. The application windows are shown below.

- Choose an environment to use
- Browse the list of created agents
- Add demonstrations and create a new agent
- Check the trained agent

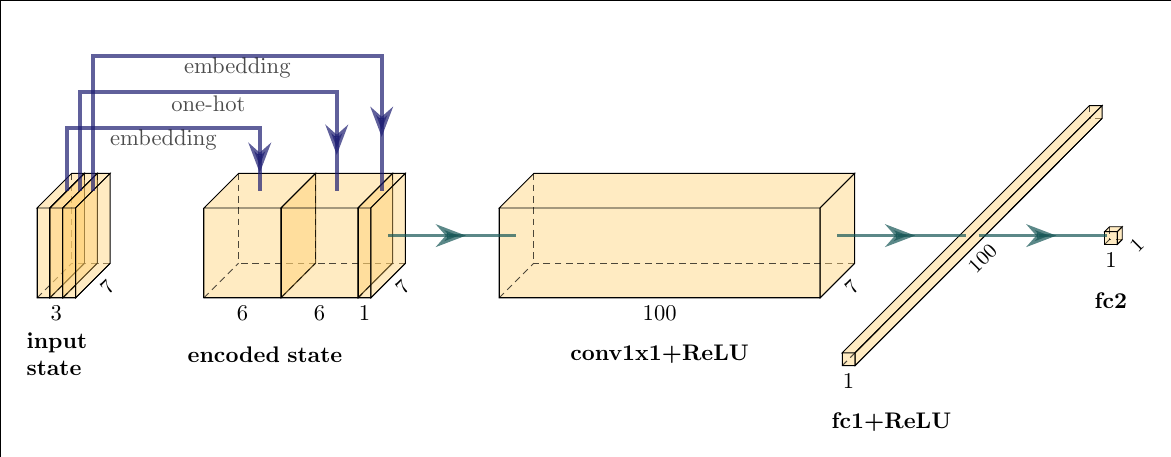

Architecture of the Reward Neural Network:

- input: MiniGrid observation

- output: reward

Trained with the T-REX loss [1].

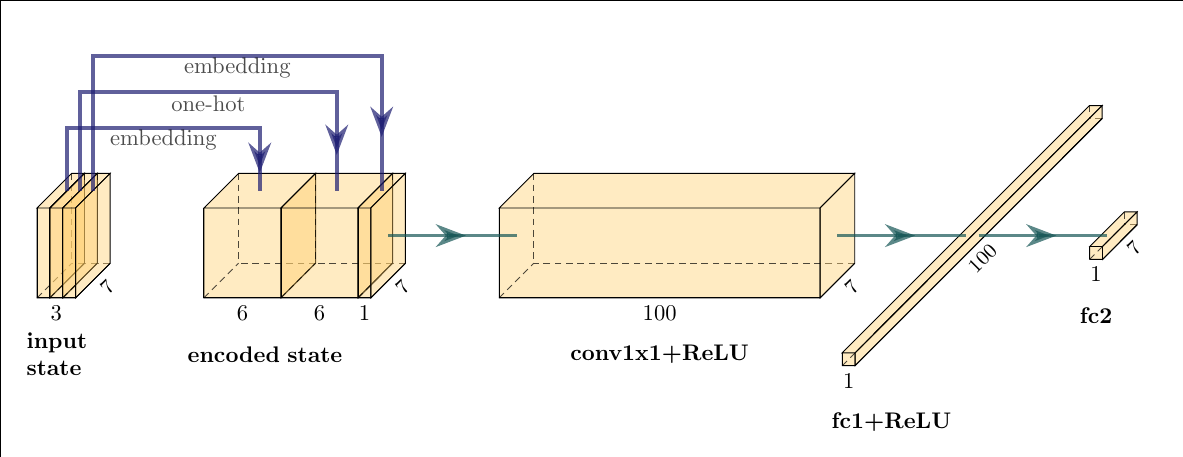
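T-REX [1] learns the reward from ranked demonstrations: the predicted per-step rewards are summed over each trajectory, and a cross-entropy loss pushes the better-ranked trajectory toward the higher predicted return. The pure-Python sketch below is a minimal illustration under stated assumptions: the demonstrations are ordered from worst to best, and `reward_fn` is a hypothetical stand-in for the reward network (presumably a PyTorch model on MiniGrid observations in the project).

```python
import math
from itertools import combinations
from typing import Any, Callable, List, Sequence, Tuple

Trajectory = Sequence[Any]          # a demonstration: a sequence of observations
RewardFn = Callable[[Any], float]   # hypothetical stand-in for the reward network


def trajectory_return(reward_fn: RewardFn, traj: Trajectory) -> float:
    """Sum of predicted per-observation rewards over one demonstration."""
    return sum(reward_fn(obs) for obs in traj)


def trex_pair_loss(ret_worse: float, ret_better: float) -> float:
    """-log P(better preferred), P = exp(R_better) / (exp(R_worse) + exp(R_better))."""
    m = max(ret_worse, ret_better)  # subtract the max for numerical stability
    log_z = m + math.log(math.exp(ret_worse - m) + math.exp(ret_better - m))
    return log_z - ret_better


def ranked_pairs(demos: Sequence[Trajectory]) -> List[Tuple[Trajectory, Trajectory]]:
    """Assumes demos are ranked worst to best; returns every (worse, better) pair."""
    return list(combinations(demos, 2))


def trex_loss(reward_fn: RewardFn, demos: Sequence[Trajectory]) -> float:
    """Average pairwise T-REX loss over all ranked pairs."""
    pairs = ranked_pairs(demos)
    if not pairs:
        raise ValueError("T-REX needs at least 2 ranked demonstrations")
    losses = [
        trex_pair_loss(trajectory_return(reward_fn, worse),
                       trajectory_return(reward_fn, better))
        for worse, better in pairs
    ]
    return sum(losses) / len(losses)
```

This is also why at least two demonstrations are needed: with fewer there is no pair to compare. The paper additionally trains on sampled sub-trajectory snippets; the sketch compares whole demonstrations for brevity.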

Architecture of the Policy Neural Network:

- input: MiniGrid observation

- output: probability distribution of the actions

Trained with loss: -log(action_probability) * discounted_reward

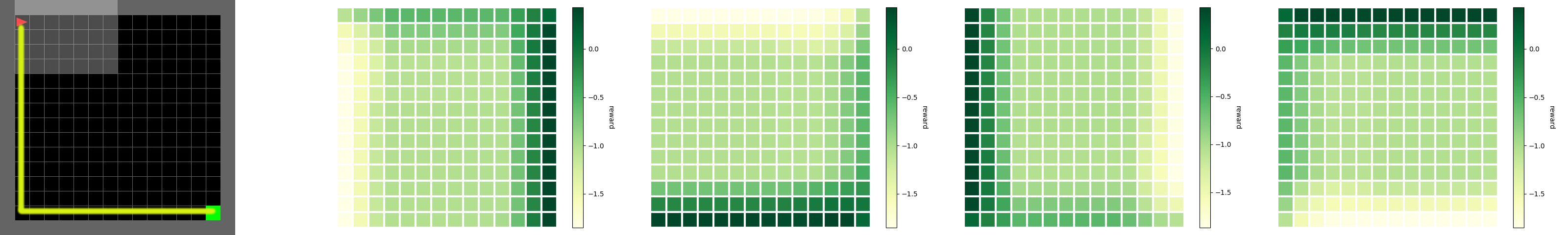
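The policy loss above has the form of the REINFORCE policy-gradient objective. The sketch below shows how it can be computed for one episode, assuming the per-step rewards come from the trained reward network and that the per-step terms are summed (the README does not say whether they are summed or averaged). The discount factor `gamma` and its default value are assumptions.

```python
import math
from typing import List, Sequence


def discounted_returns(rewards: Sequence[float], gamma: float = 0.99) -> List[float]:
    """G_t = r_t + gamma * G_{t+1}, computed backwards over one episode."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns


def policy_loss(action_probs: Sequence[float], rewards: Sequence[float],
                gamma: float = 0.99) -> float:
    """Sum over the episode of -log(pi(a_t | s_t)) * G_t.

    action_probs[t] is the probability the policy gave to the action actually
    taken at step t; rewards[t] is the (learned) reward received at step t.
    """
    returns = discounted_returns(rewards, gamma)
    return sum(-math.log(p) * g for p, g in zip(action_probs, returns))
```

In a PyTorch implementation the same expression would be built from the log-probabilities output by the policy network so that gradients flow through them.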

We made a set of demonstrations to try to get the desired behavior shown on the left in the image below.

Next, the heatmaps of the rewards given by the trained reward network are shown. The different heatmaps represent different directions of the agent, in order: up, right, down, left.
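Heatmaps like these can be produced by placing the agent on every cell with each of the four orientations and querying the reward network. In the sketch below, `reward_fn(x, y, direction)` is a hypothetical wrapper that would build that observation and return the predicted reward. The panels follow the order used in the figure (up, right, down, left), mapped to MiniGrid's direction encoding (0 = right, 1 = down, 2 = left, 3 = up).

```python
from typing import Callable, Dict, List

# MiniGrid encodes the agent direction as 0=right, 1=down, 2=left, 3=up.
MINIGRID_DIR = {"up": 3, "right": 0, "down": 1, "left": 2}
PANEL_ORDER = ["up", "right", "down", "left"]  # order of the heatmap panels


def reward_heatmaps(reward_fn: Callable[[int, int, int], float],
                    width: int, height: int) -> Dict[str, List[List[float]]]:
    """Return one height x width grid of rewards per agent direction.

    reward_fn(x, y, direction) is hypothetical: it should place the agent at
    (x, y) facing `direction` and return the reward network's prediction.
    """
    maps: Dict[str, List[List[float]]] = {}
    for name in PANEL_ORDER:
        d = MINIGRID_DIR[name]
        maps[name] = [[reward_fn(x, y, d) for x in range(width)]
                      for y in range(height)]
    return maps
```

Each grid can then be drawn with any plotting library's image function; cells the agent cannot occupy (walls) would need to be skipped or masked inside `reward_fn`.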

- Go to the directory in which you have downloaded the project
- Go inside the Minigrid_HCI-project folder with the command `cd Minigrid_HCI-project`
- Run the application with the command `python agents_window.py`

[1] Daniel S. Brown, Wonjoon Goo, Prabhat Nagarajan, Scott Niekum. Extrapolating Beyond Suboptimal Demonstrations via Inverse Reinforcement Learning from Observations (T-REX). July 2019.

[2] Maxime Chevalier-Boisvert, Lucas Willems, Suman Pal. Minimalistic Gridworld Environment for OpenAI Gym (Gym-minigrid). GitHub repository, 2018.