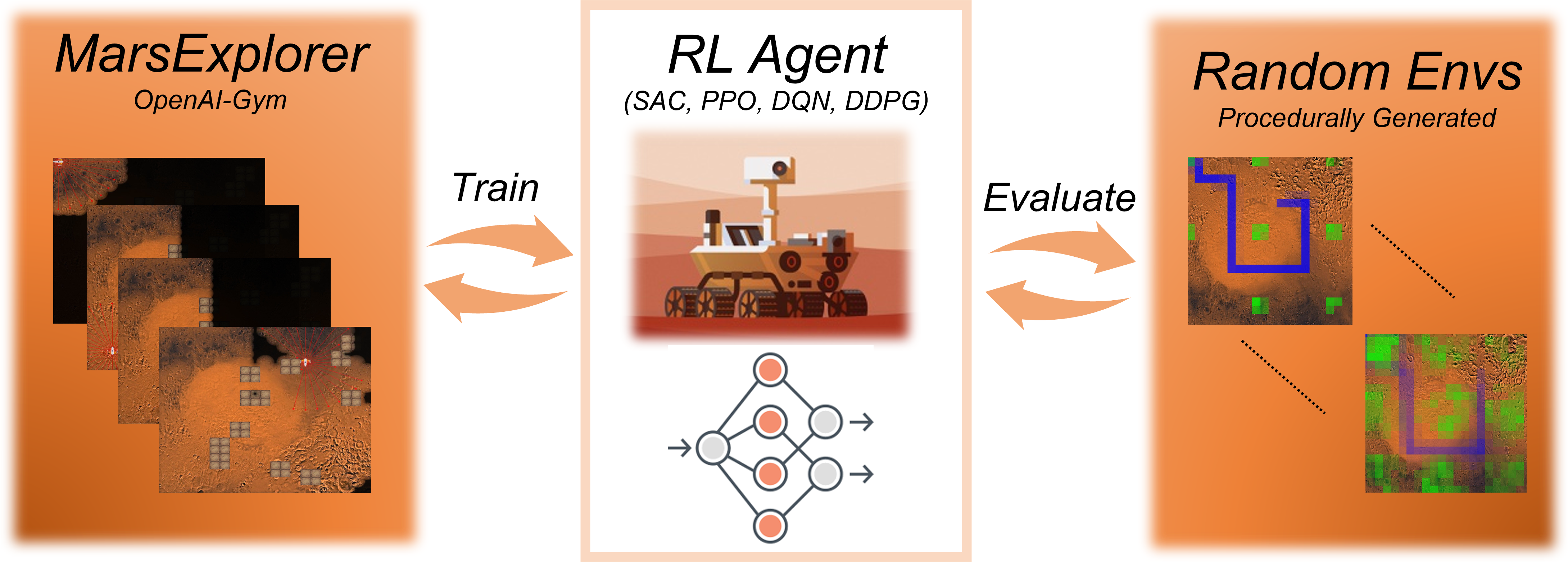

MarsExplorer is an OpenAI Gym-compatible environment designed and developed as an initial endeavor to bridge the gap between powerful Deep Reinforcement Learning methodologies and the problem of exploration/coverage of unknown terrain. For a full description and performance analysis, please check out our companion paper, MarsExplorer: Exploration of Unknown Terrains via Deep Reinforcement Learning and Procedurally Generated Environments.
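As a rough illustration of what Gym compatibility means in practice, the sketch below rolls out one episode with random actions. It assumes the classic Gym interface, where `reset()` returns an observation and `step()` returns `(observation, reward, done, info)`. `ToyEnv` and `ToyActionSpace` are hypothetical stand-ins so that the snippet runs without the package installed; they are not part of MarsExplorer, and the real environment would be passed in their place.

```python
import random


class ToyActionSpace:
    """Hypothetical stand-in for a discrete Gym action space."""

    def __init__(self, n):
        self.n = n

    def sample(self):
        return random.randrange(self.n)


class ToyEnv:
    """Hypothetical stub mimicking the classic Gym API; not MarsExplorer itself."""

    def __init__(self, horizon=5):
        self.action_space = ToyActionSpace(4)
        self.horizon = horizon
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t  # observation

    def step(self, action):
        self.t += 1
        done = self.t >= self.horizon
        return self.t, 1.0, done, {}  # observation, reward, done, info


def run_random_episode(env, max_steps=1000):
    """Roll out one episode with random actions; return (total_reward, steps)."""
    env.reset()
    total_reward, steps = 0.0, 0
    for _ in range(max_steps):
        _, reward, done, _ = env.step(env.action_space.sample())
        total_reward += reward
        steps += 1
        if done:
            break
    return total_reward, steps


if __name__ == "__main__":
    print(run_random_episode(ToyEnv(horizon=5)))
```

Any object exposing the same `reset`, `step`, and `action_space.sample` members should work with `run_random_episode` unchanged.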

Terrain diversification is one of MarsExplorer's key attributes. For each episode, the general dynamics are determined by an automated process with different levels of variation. These levels correspond to the randomness in the number, size, and positioning of obstacles, the terrain scalability (size), the percentage of the terrain that the robot must explore for the problem to be considered solved, and the bonus reward it receives in that case. This procedural generation of terrains allows training on multiple, diverse layouts, ultimately forcing the RL algorithm to develop generalization capabilities, which are of paramount importance in real-life applications where unforeseen cases may appear.
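To make the idea of procedural generation concrete, here is a minimal sketch of how a random terrain could be produced for each episode. It is not the generator used by MarsExplorer: the function name `generate_terrain`, its parameters, and the default ranges for obstacle count and size are assumptions chosen for illustration. Only the 21-by-21 grid size is taken from the training example in this README.

```python
import random


def generate_terrain(size=21, n_obstacles=(3, 8), obstacle_size=(1, 4), seed=None):
    """Return a size x size grid as a list of lists: 0 = free cell, 1 = obstacle.

    The number of obstacles, their height/width, and their position are all
    drawn at random, so every call (or seed) yields a different layout.
    """
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    for _ in range(rng.randint(*n_obstacles)):
        height = rng.randint(*obstacle_size)
        width = rng.randint(*obstacle_size)
        # Pick the top-left corner so the rectangle stays inside the grid.
        top = rng.randint(0, size - height)
        left = rng.randint(0, size - width)
        for r in range(top, top + height):
            for c in range(left, left + width):
                grid[r][c] = 1
    return grid


if __name__ == "__main__":
    for row in generate_terrain(size=10, seed=0):
        print("".join("#" if cell else "." for cell in row))
```

Widening or narrowing the `n_obstacles` and `obstacle_size` ranges is one simple way to control the level of variation between episodes. Obstacles may overlap in this sketch, and nothing prevents one from covering the agent's starting cell.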

You can install the MarsExplorer environment by using the following commands:

$ git clone https://github.com/dimikout3/GeneralExplorationPolicy.git

$ pip install -e mars-explorer

If you prefer, you can proceed with a full installation, which includes a pre-configured Conda environment with Ray/RLlib and all the dependencies. This enables a safe and robust pipeline for training your own agent on exploration/coverage missions.

$ sh setup.sh

You can take a closer look at the dependencies in:

setup/environment.yml

Please run the following command to make sure that everything works as expected:

$ python mars-explorer/tests/test.py

We have included manual control of the agent via the arrow keys. Run the manual control environment via:

$ python mars-explorer/tests/manual.py

You can train your own agent using the Ray/RLlib formulation. For more detailed guidance, have a look at our implementation of a PPO agent in a 21-by-21 terrain:

$ python trainners/runner.py

You can also test multiple implementations with different agents (e.g., SAC, DQN-Rainbow, A3C) by batch training:

$ python trainners/batch.py

By default, all of the results, along with the saved agents, will be located at:

~/ray_results

To provide a comparison with existing and well-established frontier-based approaches, we have included two different implementations:

Cost-based

The next action is chosen based on the distance from the nearest frontier cell.

Utility-based

The decision-making is governed by a frequently updated information potential field.
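The cost-based idea can be sketched in a few lines. The snippet below is an illustrative approximation, not the code in this repository: it assumes a grid map encoded with `-1` for unknown cells, `0` for explored free cells, and `1` for obstacles, treats a frontier as an explored free cell with at least one unknown 4-neighbor, and uses Manhattan distance as the cost. The names `frontier_cells` and `nearest_frontier` are hypothetical.

```python
UNKNOWN, FREE, OBSTACLE = -1, 0, 1


def frontier_cells(grid):
    """Return explored free cells that touch at least one unknown 4-neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def nearest_frontier(grid, agent):
    """Pick the frontier cell with the lowest Manhattan distance to the agent."""
    cells = frontier_cells(grid)
    if not cells:
        return None  # nothing left to explore
    return min(cells, key=lambda cell: abs(cell[0] - agent[0]) + abs(cell[1] - agent[1]))


if __name__ == "__main__":
    grid = [
        [FREE, FREE, UNKNOWN],
        [FREE, OBSTACLE, UNKNOWN],
        [FREE, FREE, FREE],
    ]
    print(nearest_frontier(grid, agent=(2, 0)))
```

Manhattan distance ignores obstacles, so a path-length cost (for example, from a breadth-first search) would be a more faithful choice on cluttered maps. A utility-based variant would replace the distance key with a score that also accounts for the expected information gain at each frontier.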

Both approaches can be tested by running the corresponding scripts:

$ python non-learning/frontierCostExploration.py

$ python non-learning/frontierUtilityExploration.py

If you find this useful for your research, please cite it as follows:

@article{Koutras2021MarsExplorer,
  title={MarsExplorer: Exploration of Unknown Terrains via Deep Reinforcement Learning and Procedurally Generated Environments},
  author={Dimitrios I. Koutras and A. C. Kapoutsis and A. Amanatiadis and E. Kosmatopoulos},
  journal={arXiv preprint arXiv:2107.09996},
  year={2021}
}