Supplementary code repository to the Master's thesis by Marius Memmel

Short paper presented at the Embodied AI Workshop at CVPR 2022

Visualization of an agent using the proposed Visual Odometry Transformer (VOT) as GPS+compass substitute.

Backbone is a ViT-B with MultiMAE pre-training and depth input.

- Setup

- Training

- Evaluation

- Visualizations

- Visualizations Examples

- Privileged Information

- Privileged Information Examples

- References

This repository provides a Dockerfile that can be used to set up an environment for running the code. It installs the corresponding versions of habitat-lab, habitat-sim, timm, and their dependencies. Note that running the code requires at least one GPU supporting CUDA 11.0.

This repository requires two datasets to train and evaluate the VOT models:

- Gibson scene dataset

- PointGoal Navigation splits, specifically pointnav_gibson_v2.zip.

Please follow Habitat's instructions to download them. The following data structure is assumed under ./dataset:

.

+-- dataset

| +-- Gibson

| | +-- gibson

| | | +-- Adrian.glb

| | | +-- Adrian.navmesh

| | | ...

| +-- habitat_datasets

| | +-- pointnav

| | | +-- gibson

| | | | +-- v2

| | | | | +-- train

| | | | | +-- val

| | | | | +-- valmini
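Before generating data or training, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the repository: the helper name `missing_dataset_dirs` is hypothetical, and the checked paths are copied from the directory tree above.

```python
from pathlib import Path

# Relative paths taken from the directory tree above.
EXPECTED_DIRS = [
    "Gibson/gibson",
    "habitat_datasets/pointnav/gibson/v2/train",
    "habitat_datasets/pointnav/gibson/v2/val",
    "habitat_datasets/pointnav/gibson/v2/valmini",
]


def missing_dataset_dirs(root="./dataset"):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_dataset_dirs()
    if missing:
        print("Missing directories:")
        for rel in missing:
            print("  " + rel)
    else:
        print("Dataset layout matches the expected structure.")
```

The check only looks at directories; it does not verify that individual scene files such as Adrian.glb are present.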

This repository provides a script to generate the proposed training and validation datasets.

Run

./generate_data.sh

and specify the following arguments to generate the dataset. A dataset of 250k samples takes approx. 120GB.

| Argument | Usage |
|---|---|
| --act_type | Type of actions to be saved, -1 for saving all actions |
| --N_list | Sizes for train and validation dataset. Thesis uses 250000 and 25000 |
| --name_list | Names for train and validation dataset, default is train and val |
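As a rough aid for planning disk space, the figure of approx. 120GB for 250k samples can be scaled to other dataset sizes. The sketch below assumes that storage grows linearly with the number of samples, which is an assumption and not something the repository guarantees; the function name `estimate_size_gb` is hypothetical.

```python
# Figures quoted in this README: 250k samples take approx. 120 GB.
REFERENCE_SAMPLES = 250_000
REFERENCE_SIZE_GB = 120.0


def estimate_size_gb(num_samples):
    """Estimate disk usage, assuming size grows linearly with sample count."""
    return REFERENCE_SIZE_GB * num_samples / REFERENCE_SAMPLES


if __name__ == "__main__":
    # Dataset sizes used in the thesis for --N_list.
    for name, n in [("train", 250_000), ("val", 25_000)]:
        print(f"{name}: {n} samples, approx. {estimate_size_gb(n):.0f} GB")
```

Under this assumption, the 25000-sample validation set used in the thesis would need roughly a tenth of the training set's storage.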

When generating the data, habitat-sim sometimes fails with the error "isNvidiaGpuReadable(eglDevId) [EGL] EGL device 0, CUDA device 0 is not readable". To fix it, follow this issue. Overwrite habitat-sim/src/esp/gfx/WindowlessContext.cpp with the provided WindowlessContext.cpp.

Download the pre-trained MultiMAE checkpoint from this link, rename the model checkpoint to MultiMAE-B-1600.pth and place it in ./pretrained.

Download the pre-trained checkpoint of the RL navigation policy from PointNav-VO via this link and place rl_tune_vo.pth under pretrained_ckpts/rl/no_tune.pth.

To train a VOT model, specify the experiment configuration in a YAML file similar to the one here. Then run

./start_vo.sh --config-yaml PATH/TO/CONFIG/FILE.yaml

To evaluate a trained VOT model, specify the evaluation configuration in a YAML file similar to the one here. Then run

./start_rl.sh --run-type eval --config-yaml PATH/TO/CONFIG/FILE.yaml

Note that passing --run-type train fine-tunes the navigation policy to the VOT model. The thesis does not make use of this functionality.
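During evaluation the VOT acts as a GPS+compass substitute: the agent's estimate of where the goal lies has to be updated from the predicted motion between consecutive frames. The sketch below illustrates that idea in 2D only. It is not the repository's implementation; the function name `update_goal`, the planar frame (x forward, y left, counter-clockwise rotation positive), and the example step values are all assumptions made for illustration.

```python
import math


def update_goal(goal_xy, dx, dy, dtheta):
    """Re-express a 2D goal in the agent frame after one estimated motion.

    goal_xy: goal position in the agent frame before the step.
    dx, dy:  estimated translation, expressed in the frame before the step.
    dtheta:  estimated rotation in radians, counter-clockwise positive.
    """
    # Shift the goal by the translation, then rotate into the new heading.
    tx, ty = goal_xy[0] - dx, goal_xy[1] - dy
    c, s = math.cos(-dtheta), math.sin(-dtheta)
    return (c * tx - s * ty, s * tx + c * ty)


if __name__ == "__main__":
    goal = (1.0, 0.0)                                  # goal one unit ahead
    goal = update_goal(goal, 0.25, 0.0, 0.0)           # illustrative forward step
    goal = update_goal(goal, 0.0, 0.0, math.pi / 2)    # illustrative left turn
    print(goal)
```

Because each update builds on the previous one, errors in the estimated motion accumulate over an episode, which is why the accuracy of the visual odometry model matters for reaching the goal.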

To visualize agent behavior, set VIDEO_OPTION in the evaluation configuration (here) to render videos directly to a logging platform or to disk.

To visualize attention maps conditioned on the action, refer to the visualize_attention_maps notebook that provides functionality to plot all attention heads of a trained VOT.

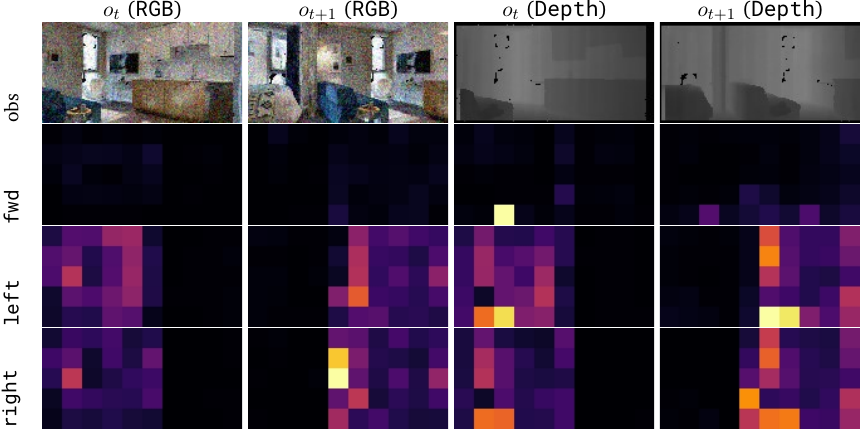

Attention maps of the last attention layer of the VOT trained on RGBD and pre-trained with a MultiMAE. The model focuses on regions present in both time steps t,t+1. The action taken by the agent is left.

Impact of the action token on a VOT trained on RGBD and pre-trained with a MultiMAE. Ground truth action: fwd. Injected actions: fwd, left, right. Embedded fwd causes the attention to focus on the image center while both left and right move attention towards regions of the image that would be consistent across time steps t,t+1 in case of rotation.

To run modality ablations and privileged information experiments, define the modality to deactivate in the evaluation configuration as VO.REGRESS.visual_strip=["rgb"] or VO.REGRESS.visual_strip=["depth"]. VO.REGRESS.visual_strip_proba sets the probability of deactivating that input modality, e.g. VO.REGRESS.visual_strip_proba=1.0 to always deactivate it.
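The interaction of the two options can be pictured with a small sketch. This is an illustration under stated assumptions, not the repository's code: the function name `strip_modalities` is hypothetical, observations are simplified to flat lists, and a deactivated modality is assumed to be replaced by zeros, which may differ from how the VOT actually handles a dropped input.

```python
import random


def strip_modalities(obs, visual_strip, visual_strip_proba, rng=random):
    """Return a copy of obs where the listed modalities may be blanked.

    obs: dict mapping a modality name (e.g. "rgb", "depth") to a flat list.
    visual_strip: modality names that may be deactivated.
    visual_strip_proba: probability in [0, 1] of deactivating them.
    """
    out = {name: list(values) for name, values in obs.items()}
    # rng.random() lies in [0, 1), so 1.0 always strips and 0.0 never does.
    if rng.random() < visual_strip_proba:
        for name in visual_strip:
            if name in out:
                # Assumption: a deactivated modality is replaced by zeros.
                out[name] = [0.0] * len(out[name])
    return out


if __name__ == "__main__":
    obs = {"rgb": [0.2, 0.5, 0.9], "depth": [1.5, 2.0, 0.7]}
    print(strip_modalities(obs, ["rgb"], 1.0))
```

With a probability of 0.5, roughly half of the calls would return the observation unchanged, mirroring the "dropped 50% of the time" settings in the examples.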

Visualization of an agent using the Visual Odometry Transformer (VOT) as GPS+compass substitute. Backbone is a ViT-B with MultiMAE pre-training and RGB-D input. The scene is from the evaluation split of the Gibson4+ dataset. A red image boundary indicates collisions of the agent with its environment. All agents navigate close to the goal even though important modalities are not available.

Training: RGBD, Test: Depth, RGB dropped 50% of the time

Training: RGBD, Test: RGB, Depth dropped 50% of the time

Training: RGBD, Test: RGB, Depth dropped 100% of the time

Training: RGBD, Test: Depth, RGB dropped 100% of the time

This repository is a fork of PointNav-VO by Xiaoming Zhao. Please refer to the code and the thesis for changes made to the original repository.